MongoDB Sharded Cluster

Environment: three servers (192.168.0.102, 192.168.0.103, 192.168.0.104)

All three machines run the same version of MongoDB. This setup uses two shards (add more if you need them).

Step 1: Extract MongoDB on all three machines. In my case the bin directory after extraction is /root/mongodb-linux-x86_64-rhel70-3.6.3/bin.

Step 2: Create the MongoDB configuration

1. Set up the replica sets first. On all three machines (102, 103, 104), create two configuration files. The first configuration file, /home/mongo/config/mongo.conf:

fork=true

dbpath=/home/mongo/data/db

port=27017

bind_ip=0.0.0.0

logpath=/home/mongo/logs/mongodb.log

logappend=true

replSet=tuji

smallfiles=true

shardsvr=true

The second configuration file, /home/mongo/config/mongo2.conf:

fork=true

dbpath=/home/mongo/data/db2

port=27018

bind_ip=0.0.0.0

logpath=/home/mongo/logs/mongodb2.log

logappend=true

replSet=tuji2

smallfiles=true

shardsvr=true

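You need to create the dbpath directories and the directory that holds the logpath files yourself; the paths above are the ones I used. replSet is the replica set name and can be anything you like. The two files define two replica sets with different names and different ports, and both files must be present on all three machines.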
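Because the two files differ only in replica set name, port, and paths, they can also be generated rather than typed by hand. The following is a minimal sketch in plain JavaScript (it runs under Node); `renderMongodConf` and `requiredDirs` are hypothetical helper names introduced here for illustration, not part of MongoDB.

```javascript
// Hypothetical helper: renders one INI-style mongod config with the same
// keys as the two files shown above.
function renderMongodConf(opts) {
  const lines = [
    "fork=true",
    "dbpath=" + opts.dbpath,
    "port=" + opts.port,
    "bind_ip=0.0.0.0",
    "logpath=" + opts.logpath,
    "logappend=true",
    "replSet=" + opts.replSet,
    "smallfiles=true",
    "shardsvr=true",
  ];
  return lines.join("\n") + "\n";
}

// Hypothetical helper: lists the directories to create before starting
// mongod, i.e. the data directory and the parent directory of the log file.
function requiredDirs(opts) {
  const logDir = opts.logpath.slice(0, opts.logpath.lastIndexOf("/"));
  return [opts.dbpath, logDir];
}

// The two replica sets used in this article.
const shardConf1 = {
  replSet: "tuji",
  port: 27017,
  dbpath: "/home/mongo/data/db",
  logpath: "/home/mongo/logs/mongodb.log",
};
const shardConf2 = {
  replSet: "tuji2",
  port: 27018,
  dbpath: "/home/mongo/data/db2",
  logpath: "/home/mongo/logs/mongodb2.log",
};

console.log(renderMongodConf(shardConf1));
console.log(renderMongodConf(shardConf2));
console.log(requiredDirs(shardConf1).concat(requiredDirs(shardConf2)));
```

The rendered text can be saved as mongo.conf and mongo2.conf, and the listed directories created with mkdir -p before the first start.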

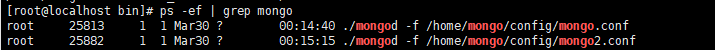

2. Start the replica sets

# From the bin directory of the extracted MongoDB, run both commands below on all three servers to start mongod

./mongod -f /home/mongo/config/mongo.conf

./mongod -f /home/mongo/config/mongo2.conf

After startup, you can check that both mongod processes are running, for example with ps -ef | grep mongod.

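3. Connect with the mongo shell and initialize the replica sets (on any one of the three servers).

# From the mongo bin directory, run each of these in turn

./mongo --port 27017

./mongo --port 27018

Start with the instance on port 27017 and configure its replica set.

var rsconf = {

_id:"tuji", // the replica set name defined above for port 27017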

members:

[

{

_id:1,

host:'192.168.0.103:27017'

},

{

_id:2,

host:'192.168.0.102:27017'

},

{

_id:3,

host:'192.168.0.104:27017'

}

]

}

rs.initiate(rsconf); // initialize the replica set

rs.status(); // check the status; the output should look like this:

{

"set" : "tuji",

"date" : ISODate("2020-03-31T01:29:02.204Z"),

"myState" : 1,

"term" : NumberLong(1),

"heartbeatIntervalMillis" : NumberLong(2000),

"optimes" : {

"lastCommittedOpTime" : {

"ts" : Timestamp(1585618139, 1),

"t" : NumberLong(1)

},

"readConcernMajorityOpTime" : {

"ts" : Timestamp(1585618139, 1),

"t" : NumberLong(1)

},

"appliedOpTime" : {

"ts" : Timestamp(1585618139, 1),

"t" : NumberLong(1)

},

"durableOpTime" : {

"ts" : Timestamp(1585618139, 1),

"t" : NumberLong(1)

}

},

"members" : [

{

"_id" : 1,

"name" : "192.168.0.103:27017",

"health" : 1,

"state" : 2,

"stateStr" : "SECONDARY",

"uptime" : 77688,

"optime" : {

"ts" : Timestamp(1585618139, 1),

"t" : NumberLong(1)

},

"optimeDurable" : {

"ts" : Timestamp(1585618139, 1),

"t" : NumberLong(1)

},

"optimeDate" : ISODate("2020-03-31T01:28:59Z"),

"optimeDurableDate" : ISODate("2020-03-31T01:28:59Z"),

"lastHeartbeat" : ISODate("2020-03-31T01:29:01.520Z"),

"lastHeartbeatRecv" : ISODate("2020-03-31T01:29:01.520Z"),

"pingMs" : NumberLong(0),

"syncingTo" : "192.168.0.104:27017",

"configVersion" : 1

},

{

"_id" : 2,

"name" : "192.168.0.102:27017",

"health" : 1,

"state" : 1,

"stateStr" : "PRIMARY",

"uptime" : 78845,

"optime" : {

"ts" : Timestamp(1585618139, 1),

"t" : NumberLong(1)

},

"optimeDate" : ISODate("2020-03-31T01:28:59Z"),

"electionTime" : Timestamp(1585540465, 1),

"electionDate" : ISODate("2020-03-30T03:54:25Z"),

"configVersion" : 1,

"self" : true

},

{

"_id" : 3,

"name" : "192.168.0.104:27017",

"health" : 1,

"state" : 2,

"stateStr" : "SECONDARY",

"uptime" : 77688,

"optime" : {

"ts" : Timestamp(1585618139, 1),

"t" : NumberLong(1)

},

"optimeDurable" : {

"ts" : Timestamp(1585618139, 1),

"t" : NumberLong(1)

},

"optimeDate" : ISODate("2020-03-31T01:28:59Z"),

"optimeDurableDate" : ISODate("2020-03-31T01:28:59Z"),

"lastHeartbeat" : ISODate("2020-03-31T01:29:01.220Z"),

"lastHeartbeatRecv" : ISODate("2020-03-31T01:29:00.809Z"),

"pingMs" : NumberLong(0),

"syncingTo" : "192.168.0.102:27017",

"configVersion" : 1

}

],

"ok" : 1,

"operationTime" : Timestamp(1585618139, 1),

"$gleStats" : {

"lastOpTime" : Timestamp(0, 0),

"electionId" : ObjectId("7fffffff0000000000000001")

},

"$clusterTime" : {

"clusterTime" : Timestamp(1585618139, 1),

"signature" : {

"hash" : BinData(0,"AAAAAAAAAAAAAAAAAAAAAAAAAAA="),

"keyId" : NumberLong(0)

}

},

"$configServerState" : {

"opTime" : {

"ts" : Timestamp(1585618138, 4),

"t" : NumberLong(1)

}

}

}
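The status document is long, and usually only a few fields matter: exactly one member should be PRIMARY, the others SECONDARY, and every member should report health 1. As a rough sketch, a small function can condense the output; `summarizeReplSet` is a hypothetical helper written for this article, and it assumes a shell or runtime with ES6 syntax support.

```javascript
// Hypothetical helper: condenses an rs.status() document into the fields
// that are usually checked by eye.
function summarizeReplSet(status) {
  const members = status.members || [];
  const namesWhere = function (predicate) {
    return members.filter(predicate).map(function (m) { return m.name; });
  };
  const primaries = namesWhere(function (m) { return m.stateStr === "PRIMARY"; });
  const unhealthy = namesWhere(function (m) { return m.health !== 1; });
  return {
    set: status.set,
    primary: primaries.length === 1 ? primaries[0] : null,
    secondaries: namesWhere(function (m) { return m.stateStr === "SECONDARY"; }),
    unhealthy: unhealthy,
    // Healthy here means: exactly one primary and no member with health != 1.
    ok: primaries.length === 1 && unhealthy.length === 0,
  };
}

// Trimmed-down sample carrying the same fields as the output above.
const sampleStatus = {
  set: "tuji",
  members: [
    { name: "192.168.0.103:27017", health: 1, stateStr: "SECONDARY" },
    { name: "192.168.0.102:27017", health: 1, stateStr: "PRIMARY" },
    { name: "192.168.0.104:27017", health: 1, stateStr: "SECONDARY" },
  ],
};

console.log(summarizeReplSet(sampleStatus));
```

Inside the mongo shell, after pasting the function, the call would be summarizeReplSet(rs.status()).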

4. In the shell opened with ./mongo --port 27018, initialize the second replica set in the same way:

var rsconf = {

_id:"tuji2",

members:

[

{

_id:1,

host:'192.168.0.103:27018'

},

{

_id:2,

host:'192.168.0.102:27018'

},

{

_id:3,

host:'192.168.0.104:27018'

}

]

}

rs.initiate(rsconf);

rs.status();

Step 3: Create the config server configuration file (on all three machines). I use /home/mongo/mongo-cfg.conf:

systemLog:
  destination: file
  path: /home/mongo/mongo-cfg/logs/mongodb.log # create the logs directory beforehand
  logAppend: true
storage:
  journal:
    enabled: true
  dbPath: /home/mongo/mongo-cfg/data # create this directory yourself
  directoryPerDB: true
  wiredTiger:
    engineConfig:
      cacheSizeGB: 4
      directoryForIndexes: true
    collectionConfig:
      blockCompressor: zlib
    indexConfig:
      prefixCompression: true
net:
  bindIp: 192.168.0.102 # use each machine's own IP here
  port: 28018 # yet another port; keep it distinct from the others
replication:
  oplogSizeMB: 2048
  replSetName: configReplSet # any name you like
sharding:
  clusterRole: configsvr
processManagement:
  fork: true

Start the config server replica set:

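# Start the config server (on all three machines)

./mongod -f /home/mongo/mongo-cfg.conf

# Connect to a config node to initialize the config replica set (I did this on 102; several mongod instances are running by now, so specify the host and port explicitly)

./mongo --host 192.168.0.102 --port 28018

// Initialize the config replica set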

var rsconf = {

_id:'configReplSet',

configsvr: true,

members:[

{_id:0, host:'192.168.0.102:28018'},

{_id:1, host:'192.168.0.103:28018'},

{_id:2, host:'192.168.0.104:28018'},

]

}

rs.initiate(rsconf);

rs.status();

Step 4: Configure the routing service (mongos). Pick one machine; I used 102.

# mongos configuration file: /home/mongo/mongos/mongos.conf

systemLog:
  destination: file
  path: /home/mongo/mongos/log/mongos.log # create the log directory yourself
  logAppend: true
net:
  bindIp: 192.168.0.102
  port: 28017 # another new port
sharding:
  configDB: configReplSet/192.168.0.102:28018,192.168.0.103:28018,192.168.0.104:28018
processManagement:
  fork: true

Start mongos and log in:

# Start mongos from the bin directory

./mongos --config /home/mongo/mongos/mongos.conf

# Connect to mongos

./mongo 192.168.0.102:28017

// Switch to the admin database

use admin

Add the shard1 replica set:

db.runCommand({

addshard: 'tuji/192.168.0.102:27017,192.168.0.103:27017,192.168.0.104:27017',

name: 'shard1'

})

Add the shard2 replica set:

db.runCommand({

addshard: 'tuji2/192.168.0.102:27018,192.168.0.103:27018,192.168.0.104:27018',

name: 'shard2'

})

// List the shards

db.runCommand({listshards:1})

// Check the sharding status

sh.status()
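The configDB setting and both addshard commands use the same connection string shape, replSetName/host:port,host:port,..., and hand-typed host lists are easy to get wrong (a truncated IP address, for instance). As a sketch, the strings can be derived from one host list; `replSetConnectionString` and `addShardCommand` are hypothetical helper names used only in this example.

```javascript
// Hypothetical helper: builds "replSet/host1:port,host2:port,..." as used by
// sharding.configDB and by the addshard command.
function replSetConnectionString(replSet, hosts, port) {
  const members = hosts.map(function (h) { return h + ":" + port; });
  return replSet + "/" + members.join(",");
}

// Hypothetical helper: builds the document passed to db.runCommand()
// on mongos to register a replica set as a shard.
function addShardCommand(replSet, hosts, port, shardName) {
  return {
    addshard: replSetConnectionString(replSet, hosts, port),
    name: shardName,
  };
}

// The three servers from the environment described at the top.
const clusterHosts = ["192.168.0.102", "192.168.0.103", "192.168.0.104"];

console.log(replSetConnectionString("configReplSet", clusterHosts, 28018));
console.log(addShardCommand("tuji", clusterHosts, 27017, "shard1"));
console.log(addShardCommand("tuji2", clusterHosts, 27018, "shard2"));
```

In the mongo shell connected to mongos, the generated document can be passed straight to db.runCommand(addShardCommand("tuji", clusterHosts, 27017, "shard1")).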

Step 5: Test the sharded cluster

// Enable sharding for the database

db.runCommand({enablesharding:'testdb'})

// Shard the collection on the id key

db.runCommand({shardcollection:"testdb.users",key:{id:1}})

use testdb

// Insert test data

var arr=[];

for(var i=0; i<1500000;i++){

var uid = i;

var name = "name" + i;

arr.push({"id":uid,'name':name});

}

db.users.insertMany(arr);
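The loop above builds all 1,500,000 documents in a single array before inserting them. If memory in the shell is a concern, the same data can be generated and inserted in batches. The sketch below shows one way to do this; `makeUsers` and `insertInBatches` are hypothetical helpers, the batch size of 10,000 is an arbitrary choice, and the demo uses a stub in place of db.users.insertMany so it runs outside the shell.

```javascript
// Hypothetical helper: builds documents {id, name} for ids in [start, end).
function makeUsers(start, end) {
  const docs = [];
  for (let i = start; i < end; i++) {
    docs.push({ id: i, name: "name" + i });
  }
  return docs;
}

// Hypothetical helper: generates `total` documents in chunks of at most
// `batchSize` and hands each chunk to insertFn. Returns the document count.
function insertInBatches(total, batchSize, insertFn) {
  let inserted = 0;
  for (let start = 0; start < total; start += batchSize) {
    const end = Math.min(start + batchSize, total);
    const docs = makeUsers(start, end);
    insertFn(docs);
    inserted += docs.length;
  }
  return inserted;
}

// Demo with a stub that only counts calls instead of writing to MongoDB.
let batchCalls = 0;
const insertedCount = insertInBatches(25000, 10000, function (docs) {
  batchCalls += 1;
});
console.log(insertedCount, batchCalls); // 25000 3
```

In the mongo shell the call would look like insertInBatches(1500000, 10000, function (docs) { db.users.insertMany(docs); }).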

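sh.status(); // check how the data is distributed across the shards

Additional notes:

To add more shards, first set up another replica set on the three servers, then add it through mongos like this: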

db.runCommand({

addshard: 'tuji3/192.168.0.102:27019,192.168.0.103:27019,192.168.0.104:27019',

name: 'shard3'

})

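To remove a shard (run against the admin database on mongos; the shard's data is drained first, so repeat the command until it reports completion): db.runCommand({removeShard:'shard3'})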
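Returning to adding a shard: a new shard needs two pieces, a replica set configuration for rs.initiate() on one of its members and an addshard document for mongos. The sketch below derives both from the same inputs so the names and ports stay consistent. `buildReplSetConfig` and `planNewShard` are hypothetical helpers; tuji3, port 27019, and shard3 are the example values used above.

```javascript
// Hypothetical helper: builds a replica set config in the same shape as the
// rsconf objects used earlier (member _id values start at 1).
function buildReplSetConfig(replSet, hosts, port) {
  return {
    _id: replSet,
    members: hosts.map(function (h, i) {
      return { _id: i + 1, host: h + ":" + port };
    }),
  };
}

// Hypothetical helper: returns both documents needed for a new shard.
function planNewShard(replSet, shardName, hosts, port) {
  const hostList = hosts.map(function (h) { return h + ":" + port; }).join(",");
  return {
    // Pass to rs.initiate() in a shell connected to one member of the new set.
    rsconf: buildReplSetConfig(replSet, hosts, port),
    // Pass to db.runCommand() in a shell connected to mongos (admin database).
    addShard: { addshard: replSet + "/" + hostList, name: shardName },
  };
}

const newShardHosts = ["192.168.0.102", "192.168.0.103", "192.168.0.104"];
const shard3Plan = planNewShard("tuji3", "shard3", newShardHosts, 27019);

console.log(JSON.stringify(shard3Plan, null, 2));
```

This only prepares the documents. The mongod instances for the new replica set still have to be configured and started on all three machines first, as in Step 2.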