Getting Started with Storm (Part 2)

1. Storm internals and topology submission

- The client submits the topology to the Nimbus master node.

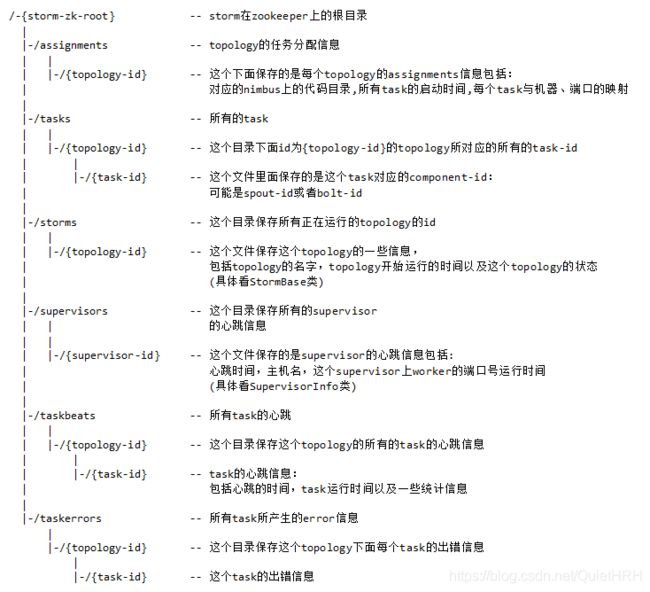

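- Nimbus receives the job information from the client and saves it to a local directory; it then writes the task assignment information to the ZooKeeper (zk) cluster.

- ZooKeeper stores the task assignment information, including which supervisor nodes will start worker processes to run the corresponding tasks.

- Each supervisor periodically contacts ZooKeeper to fetch the tasks assigned to it.

- The supervisor locates the live Nimbus leader, copies the jar and code that its tasks need to its own machine, and then runs them.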

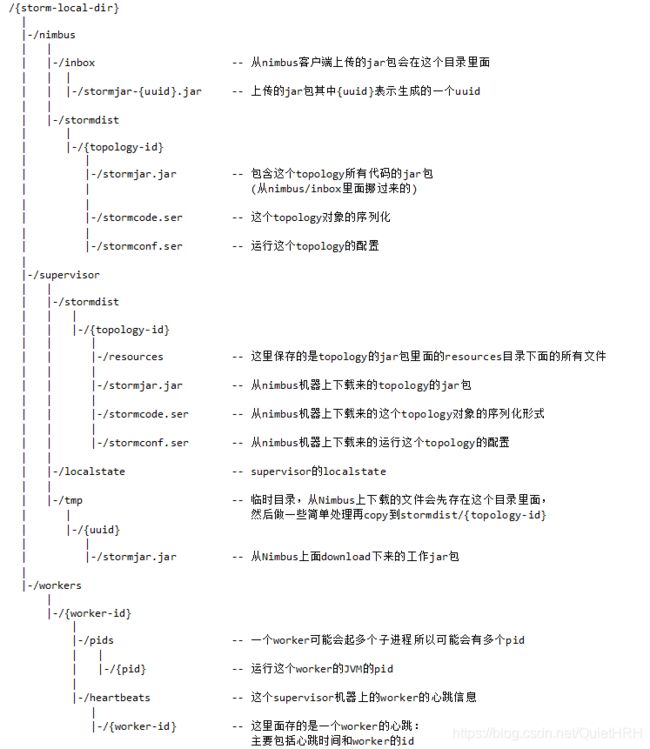

- Storm's local directory tree
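The submission steps above can be pictured with a small, self-contained toy model. This is only a sketch under stated assumptions: the class `SubmissionFlowSketch`, its method names, and the round-robin assignment are hypothetical and are not Storm's real scheduler or ZooKeeper layout. An in-memory map stands in for the assignment data that Nimbus writes to ZooKeeper and that supervisors poll.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.Collections;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

// Toy model of the submission flow; none of these names are Storm classes.
class SubmissionFlowSketch {

    // Stands in for the assignment data kept in ZooKeeper: supervisor -> task ids.
    static final Map<String, List<Integer>> assignments = new TreeMap<>();

    // "Nimbus": spread taskCount tasks over the supervisors (round-robin is an
    // assumption made for illustration) and publish the result to the shared store.
    static void assign(List<String> supervisors, int taskCount) {
        assignments.clear();
        for (int task = 0; task < taskCount; task++) {
            String supervisor = supervisors.get(task % supervisors.size());
            assignments.computeIfAbsent(supervisor, k -> new ArrayList<>()).add(task);
        }
    }

    // "Supervisor": poll the shared store and pick out only its own tasks.
    static List<Integer> poll(String supervisor) {
        List<Integer> mine = assignments.get(supervisor);
        return mine == null ? Collections.<Integer>emptyList() : mine;
    }

    public static void main(String[] args) {
        assign(Arrays.asList("supervisor-1", "supervisor-2"), 5);
        System.out.println("supervisor-1 runs tasks " + poll("supervisor-1"));
        System.out.println("supervisor-2 runs tasks " + poll("supervisor-2"));
    }
}
```

The point of the model is the indirection: Nimbus never talks to supervisors directly about assignments; both sides only read and write the shared store.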

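2. Integrating Storm with HDFS

Data processing flow:

- RandomOrderSpout generates order data in real time and emits it to the downstream bolt.

- CountMoneyBolt keeps a running total of the amount across all orders, then forwards each order to the next bolt.

- HdfsBolt writes the incoming order stream to HDFS.

- A Hive external partitioned table is built over the order data so that it can be analyzed offline later.

Code development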

1. Add the dependency

    <dependency>
        <groupId>org.apache.storm</groupId>
        <artifactId>storm-hdfs</artifactId>
        <version>1.1.1</version>
    </dependency>

2. RandomOrderSpout

    package com.hrh.hdfs;

    import com.hrh.realBoard.domain.PaymentInfo;
    import org.apache.storm.spout.SpoutOutputCollector;
    import org.apache.storm.task.TopologyContext;
    import org.apache.storm.topology.OutputFieldsDeclarer;
    import org.apache.storm.topology.base.BaseRichSpout;
    import org.apache.storm.tuple.Fields;
    import org.apache.storm.tuple.Values;

    import java.util.Map;

    // Randomly generates a large volume of order data and emits it to the downstream bolt.
    public class RandomOrderSpout extends BaseRichSpout {
        private SpoutOutputCollector collector;
        private PaymentInfo paymentInfo;

        /**
         * Initialization method; called only once.
         */
        @Override
        public void open(Map conf, TopologyContext context, SpoutOutputCollector collector) {
            this.collector = collector;
            this.paymentInfo = new PaymentInfo();
        }

        @Override
        public void nextTuple() {
            // Generate one random order (a JSON string) and emit it.
            String order = paymentInfo.random();
            collector.emit(new Values(order));
        }

        @Override
        public void declareOutputFields(OutputFieldsDeclarer declarer) {
            declarer.declare(new Fields("order"));
        }
    }

3. CountMoneyBolt

    package com.hrh.hdfs;

    import com.hrh.realBoard.domain.PaymentInfo;
    import com.alibaba.fastjson.JSONObject;
    import org.apache.storm.topology.BasicOutputCollector;
    import org.apache.storm.topology.OutputFieldsDeclarer;
    import org.apache.storm.topology.base.BaseBasicBolt;
    import org.apache.storm.tuple.Fields;
    import org.apache.storm.tuple.Tuple;
    import org.apache.storm.tuple.Values;

    import java.util.HashMap;

    // Receives orders from the spout, keeps a running total of the order amount,
    // and forwards each order to the downstream bolt.
    public class CountMoneyBolt extends BaseBasicBolt {
        private HashMap<String, Long> map = new HashMap<String, Long>();

        @Override
        public void execute(Tuple input, BasicOutputCollector collector) {
            String orderJson = input.getStringByField("order");
            // parseObject is static, so call it on the class rather than on an instance.
            PaymentInfo paymentInfo = JSONObject.parseObject(orderJson, PaymentInfo.class);
            long price = paymentInfo.getPayPrice();

            if (!map.containsKey("totalPrice")) {
                map.put("totalPrice", price);
            } else {
                map.put("totalPrice", price + map.get("totalPrice"));
            }
            System.out.println(map);

            collector.emit(new Values(orderJson));
        }

        @Override
        public void declareOutputFields(OutputFieldsDeclarer declarer) {
            declarer.declare(new Fields("orderJson"));
        }
    }

4. StormHdfsTopology

    package com.hrh.hdfs;

    import org.apache.storm.Config;
    import org.apache.storm.LocalCluster;
    import org.apache.storm.StormSubmitter;
    import org.apache.storm.generated.AlreadyAliveException;
    import org.apache.storm.generated.AuthorizationException;
    import org.apache.storm.generated.InvalidTopologyException;
    import org.apache.storm.hdfs.bolt.HdfsBolt;
    import org.apache.storm.hdfs.bolt.format.DefaultFileNameFormat;
    import org.apache.storm.hdfs.bolt.format.DelimitedRecordFormat;
    import org.apache.storm.hdfs.bolt.format.FileNameFormat;
    import org.apache.storm.hdfs.bolt.format.RecordFormat;
    import org.apache.storm.hdfs.bolt.rotation.FileRotationPolicy;
    import org.apache.storm.hdfs.bolt.rotation.FileSizeRotationPolicy;
    import org.apache.storm.hdfs.bolt.sync.CountSyncPolicy;
    import org.apache.storm.hdfs.bolt.sync.SyncPolicy;
    import org.apache.storm.topology.TopologyBuilder;

    public class StormHdfsTopology {
        public static void main(String[] args)
                throws InvalidTopologyException, AuthorizationException, AlreadyAliveException {
            TopologyBuilder topologyBuilder = new TopologyBuilder();
            topologyBuilder.setSpout("randomOrderSpout", new RandomOrderSpout());
            topologyBuilder.setBolt("countMoneyBolt", new CountMoneyBolt())
                    .shuffleGrouping("randomOrderSpout");

            // Use "|" instead of "," as the field delimiter.
            RecordFormat format = new DelimitedRecordFormat()
                    .withFieldDelimiter("|");

            // Sync (flush) buffered data to the filesystem after every 1,000 tuples.
            SyncPolicy syncPolicy = new CountSyncPolicy(1000);

            // Rotate to a new file once the current file reaches 5 MB.
            FileRotationPolicy rotationPolicy =
                    new FileSizeRotationPolicy(5.0f, FileSizeRotationPolicy.Units.MB);

            // Directory on HDFS where the data is stored.
            FileNameFormat fileNameFormat = new DefaultFileNameFormat()
                    .withPath("/storm-data/");

            // Build the HdfsBolt with the NameNode address and the policies above.
            HdfsBolt hdfsBolt = new HdfsBolt()
                    .withFsUrl("hdfs://node1:9000")
                    .withFileNameFormat(fileNameFormat)
                    .withRecordFormat(format)
                    .withRotationPolicy(rotationPolicy)
                    .withSyncPolicy(syncPolicy);

            topologyBuilder.setBolt("hdfsBolt", hdfsBolt).shuffleGrouping("countMoneyBolt");

            Config config = new Config();
            if (args != null && args.length > 0) {
                // Submit to the cluster.
                StormSubmitter.submitTopology(args[0], config, topologyBuilder.createTopology());
            } else {
                // Run locally.
                LocalCluster localCluster = new LocalCluster();
                localCluster.submitTopology("storm-hdfs", config, topologyBuilder.createTopology());
            }
        }
    }
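The sync policy and the rotation policy in the topology above are easy to confuse, so here is a minimal plain-Java sketch of the difference. It is a toy model only: `HdfsPolicySketch` and its fields are hypothetical names, it never touches HDFS or the storm-hdfs classes, and the real bolt's bookkeeping may differ. Syncing counts tuples and flushes buffered data; rotating counts bytes and starts a new file.

```java
import java.nio.charset.StandardCharsets;

// Toy model of the two HdfsBolt policies; it does not use HDFS or storm-hdfs.
class HdfsPolicySketch {
    private final int syncEveryTuples;  // plays the role of CountSyncPolicy(n)
    private final long rotateAtBytes;   // plays the role of FileSizeRotationPolicy(size, unit)

    private int tuplesSinceSync = 0;
    private long bytesInFile = 0;
    int syncs = 0;      // how many times buffered data was flushed
    int rotations = 0;  // how many times a new file was started

    HdfsPolicySketch(int syncEveryTuples, long rotateAtBytes) {
        this.syncEveryTuples = syncEveryTuples;
        this.rotateAtBytes = rotateAtBytes;
    }

    // Record one written line and apply both policies independently.
    void write(String record) {
        bytesInFile += record.getBytes(StandardCharsets.UTF_8).length;
        tuplesSinceSync++;
        if (tuplesSinceSync >= syncEveryTuples) {
            syncs++;              // flush: data becomes visible to readers
            tuplesSinceSync = 0;
        }
        if (bytesInFile >= rotateAtBytes) {
            rotations++;          // close the current file and open a new one
            bytesInFile = 0;
        }
    }

    public static void main(String[] args) {
        HdfsPolicySketch sketch = new HdfsPolicySketch(1000, 5L * 1024 * 1024);
        for (int i = 0; i < 2500; i++) {
            sketch.write("order-" + i);
        }
        System.out.println("syncs=" + sketch.syncs + ", rotations=" + sketch.rotations);
    }
}
```

A small sync count reduces the amount of data at risk if a worker dies, at the cost of more frequent flushes; the rotation size mainly controls how many files end up in the HDFS directory.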

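3. Storm's ack mechanism

3.1 What the ack mechanism is

Storm is a real-time processing framework, and processing must not lose data: every message should be fully processed, and there must be a defined strategy for what happens when processing fails. The ack mechanism provides this guarantee. It tells the spout whether each message was processed successfully or failed, so that, for example, a failed message can be re-emitted and processed again.

3.2 How the ack mechanism works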

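Every tuple gets a random 64-bit ID. For each message emitted by the spout, an acker task keeps a single value in memory and XORs a tuple's ID into it once when the tuple is emitted and once more when the tuple is acked.

The algorithm relies on XOR: equal bits give 0, different bits give 1.

0 ^ 0 = 0

0 ^ 1 = 1

1 ^ 0 = 1

1 ^ 1 = 0

Because x ^ x = 0, every ID that has been both emitted and acked cancels out. If the final XOR result is 0, the data was processed successfully at every stage. A non-zero value means some tuple is still outstanding; if the value does not reach 0 before the message timeout (topology.message.timeout.secs, 30 seconds by default), or a bolt explicitly fails a tuple, the message is treated as failed.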
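The XOR bookkeeping can be illustrated with a few lines of plain Java. This is a minimal sketch, not Storm's acker implementation: the class `XorAckSketch` and its method names are hypothetical, and fixed constants are used as tuple IDs where Storm would use random 64-bit values.

```java
// Minimal illustration of XOR-based ack tracking for one spout message.
class XorAckSketch {
    // The single value tracked for the whole tuple tree.
    private long ackVal = 0L;

    // XOR the ID in when a tuple is emitted.
    void emitted(long tupleId) {
        ackVal ^= tupleId;
    }

    // XOR the same ID in again when the tuple is acked; the two cancel out.
    void acked(long tupleId) {
        ackVal ^= tupleId;
    }

    // Zero means every emitted tuple has also been acked.
    boolean fullyProcessed() {
        return ackVal == 0L;
    }

    public static void main(String[] args) {
        long spoutTuple = 0x1234L;  // stand-ins for random 64-bit IDs
        long boltTuple = 0x5678L;

        XorAckSketch tracker = new XorAckSketch();
        tracker.emitted(spoutTuple);  // spout emits the original tuple
        tracker.emitted(boltTuple);   // first bolt emits a child tuple
        tracker.acked(spoutTuple);    // first bolt acks its input
        System.out.println(tracker.fullyProcessed());  // false: child still outstanding

        tracker.acked(boltTuple);     // last bolt acks the child
        System.out.println(tracker.fullyProcessed());  // true
    }
}
```

The appeal of this design is constant memory per spout message: no matter how many tuples the tree contains, only one 64-bit value is stored.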

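3.3 How to enable the ack mechanism

1. Logic in the spout

When emitting a tuple, specify a messageId. Storm passes this messageId back later, so you can tell which message was processed successfully and which one failed.

    // To make the failed data easy to identify later, simply use the data itself as the messageId.
    collector.emit(new Values(line), line);

After a message has been processed successfully, Storm calls the ack method:

    @Override
    public void ack(Object msgId) {
        // Called after the message has been processed successfully.
        System.out.println("Processed successfully: " + msgId);
    }

After a message fails, Storm calls the fail method:

    @Override
    public void fail(Object msgId) {
        // Called after the message has failed.
        System.out.println("Processing failed: " + msgId);
        // Re-emit the failed data.
        collector.emit(new Values(msgId), msgId);
    }

2. Logic in the bolt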

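BaseBasicBolt

It acks each tuple automatically once execute returns normally, and fails the tuple if execute throws a FailedException, so you do not need to call ack or fail yourself.

BaseRichBolt

You must make the calls yourself: collector.ack(input) on success and collector.fail(input) on failure.

3.4 Disabling the ack mechanism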

(1) Do not specify a messageId when emitting from the spout.

(2) Alternatively, set the number of acker executors to 0:

    config.setNumAckers(0);
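Returning to the spout-side fail handler in 3.3: re-emitting unconditionally means a message that can never succeed is replayed forever. One possible refinement, sketched here in plain Java without any Storm classes, is to track pending payloads and cap the number of retries. `ReplayTrackerSketch`, its method names, and the retry limit are all assumptions made for illustration; a real spout would call these helpers from `nextTuple`, `ack`, and `fail`.

```java
import java.util.HashMap;
import java.util.Map;

// Hypothetical helper a spout could use to bound replays; not part of Storm.
class ReplayTrackerSketch {
    private final int maxRetries;
    private final Map<String, String> pending = new HashMap<>();    // messageId -> payload
    private final Map<String, Integer> failures = new HashMap<>();  // messageId -> fail count

    ReplayTrackerSketch(int maxRetries) {
        this.maxRetries = maxRetries;
    }

    // Remember the payload when the spout emits it with a messageId.
    void emitted(String messageId, String payload) {
        pending.put(messageId, payload);
    }

    // Forget the message once Storm reports success.
    void acked(String messageId) {
        pending.remove(messageId);
        failures.remove(messageId);
    }

    // Returns the payload to re-emit, or null if the message is unknown
    // or has already failed more than maxRetries times.
    String failed(String messageId) {
        if (!pending.containsKey(messageId)) {
            return null;
        }
        int count = failures.merge(messageId, 1, Integer::sum);
        if (count > maxRetries) {
            pending.remove(messageId);
            failures.remove(messageId);
            return null;  // give up; a real system might log or dead-letter it
        }
        return pending.get(messageId);
    }

    int pendingCount() {
        return pending.size();
    }

    public static void main(String[] args) {
        ReplayTrackerSketch tracker = new ReplayTrackerSketch(2);
        tracker.emitted("m1", "order-1");
        System.out.println(tracker.failed("m1"));  // payload to replay
        System.out.println(tracker.failed("m1"));  // payload to replay
        System.out.println(tracker.failed("m1"));  // null: retries exhausted
    }
}
```

Keeping the payload separate from the messageId also means the messageId can be a short key rather than the data itself.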

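4. Storm tick tuples (timer) and MySQL integration

1. Add the dependencies

    <dependency>
        <groupId>org.apache.storm</groupId>
        <artifactId>storm-jdbc</artifactId>
        <version>1.1.1</version>
    </dependency>
    <dependency>
        <groupId>mysql</groupId>
        <artifactId>mysql-connector-java</artifactId>
        <version>5.1.38</version>
    </dependency>
    <dependency>
        <groupId>com.google.collections</groupId>
        <artifactId>google-collections</artifactId>
        <version>1.0</version>
    </dependency>

2. Code development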

1. RandomSpout

    package com.hrh.tickAndMysql;

    import org.apache.storm.spout.SpoutOutputCollector;
    import org.apache.storm.task.TopologyContext;
    import org.apache.storm.topology.OutputFieldsDeclarer;
    import org.apache.storm.topology.base.BaseRichSpout;
    import org.apache.storm.tuple.Fields;
    import org.apache.storm.tuple.Values;

    import java.util.Map;
    import java.util.Random;

    // Randomly picks a record and emits it downstream.
    public class RandomSpout extends BaseRichSpout {
        private SpoutOutputCollector collector;
        private Random random;
        private String[] user;

        @Override
        public void open(Map conf, TopologyContext context, SpoutOutputCollector collector) {
            this.collector = collector;
            this.random = new Random();
            this.user = new String[]{"1 zhangsan 30", "2 lisi 40"};
        }

        @Override
        public void nextTuple() {
            try {
                // Pick a random record and emit it, once per second.
                int index = random.nextInt(user.length);
                String line = user[index];
                collector.emit(new Values(line));
                Thread.sleep(1000);
            } catch (InterruptedException e) {
                e.printStackTrace();
            }
        }

        @Override
        public void declareOutputFields(OutputFieldsDeclarer declarer) {
            declarer.declare(new Fields("line"));
        }
    }

2. TickBolt

    package com.hrh.tickAndMysql;

    import org.apache.storm.Config;
    import org.apache.storm.Constants;
    import org.apache.storm.topology.BasicOutputCollector;
    import org.apache.storm.topology.OutputFieldsDeclarer;
    import org.apache.storm.topology.base.BaseBasicBolt;
    import org.apache.storm.tuple.Fields;
    import org.apache.storm.tuple.Tuple;
    import org.apache.storm.tuple.Values;

    import java.text.SimpleDateFormat;
    import java.util.Date;
    import java.util.Map;

    // Receives data from the spout, prints the system time every 5 seconds,
    // parses each record, and passes the result to the downstream bolt.
    public class TickBolt extends BaseBasicBolt {

        @Override
        public Map<String, Object> getComponentConfiguration() {
            // Ask Storm to send this bolt a system-level tick tuple every 5 seconds.
            Config config = new Config();
            config.put(Config.TOPOLOGY_TICK_TUPLE_FREQ_SECS, 5);
            return config;
        }

        @Override
        public void execute(Tuple input, BasicOutputCollector collector) {
            // Check whether the tuple came from the system (a tick) or from the spout.
            if (input.getSourceComponent().equals(Constants.SYSTEM_COMPONENT_ID)
                    && input.getSourceStreamId().equals(Constants.SYSTEM_TICK_STREAM_ID)) {
                // Tick tuple: print the current system time.
                SimpleDateFormat format = new SimpleDateFormat("yyyy-MM-dd HH:mm:ss");
                System.out.println(format.format(new Date()));
            } else {
                String user = input.getStringByField("line");
                String[] split = user.split(" ");
                String id = split[0];
                String name = split[1];
                String age = split[2];
                // The emitted values must match the column types of the table.
                collector.emit(new Values(id, name, age));
            }
        }

        @Override
        public void declareOutputFields(OutputFieldsDeclarer declarer) {
            // The declared field names must match the column names of the MySQL table.
            declarer.declare(new Fields("id", "name", "age"));
        }
    }

3. Driver class

    package com.hrh.tickAndMysql;

    import com.google.common.collect.Maps;
    import org.apache.storm.Config;
    import org.apache.storm.LocalCluster;
    import org.apache.storm.StormSubmitter;
    import org.apache.storm.generated.AlreadyAliveException;
    import org.apache.storm.generated.AuthorizationException;
    import org.apache.storm.generated.InvalidTopologyException;
    import org.apache.storm.generated.StormTopology;
    import org.apache.storm.jdbc.bolt.JdbcInsertBolt;
    import org.apache.storm.jdbc.common.ConnectionProvider;
    import org.apache.storm.jdbc.common.HikariCPConnectionProvider;
    import org.apache.storm.jdbc.mapper.JdbcMapper;
    import org.apache.storm.jdbc.mapper.SimpleJdbcMapper;
    import org.apache.storm.topology.TopologyBuilder;

    import java.util.Map;

    public class TickMysqlTopology {
        public static void main(String[] args)
                throws InvalidTopologyException, AuthorizationException, AlreadyAliveException {
            TopologyBuilder topologyBuilder = new TopologyBuilder();
            topologyBuilder.setSpout("randomSpout", new RandomSpout());
            topologyBuilder.setBolt("tickBolt", new TickBolt()).shuffleGrouping("randomSpout");

            // Build the MySQL bolt.
            Map<String, Object> hikariConfigMap = Maps.newHashMap();
            hikariConfigMap.put("dataSourceClassName", "com.mysql.jdbc.jdbc2.optional.MysqlDataSource");
            hikariConfigMap.put("dataSource.url", "jdbc:mysql://node1/test");
            hikariConfigMap.put("dataSource.user", "root");
            hikariConfigMap.put("dataSource.password", "123456");
            ConnectionProvider connectionProvider = new HikariCPConnectionProvider(hikariConfigMap);

            String tableName = "person";
            JdbcMapper simpleJdbcMapper = new SimpleJdbcMapper(tableName, connectionProvider);

            JdbcInsertBolt mysqlBolt = new JdbcInsertBolt(connectionProvider, simpleJdbcMapper)
                    .withTableName(tableName)
                    .withQueryTimeoutSecs(90);
            // Or supply the insert statement explicitly:
            // JdbcInsertBolt userPersistanceBolt = new JdbcInsertBolt(connectionProvider, simpleJdbcMapper)
            //         .withInsertQuery("insert into user values (?,?)")
            //         .withQueryTimeoutSecs(30);

            topologyBuilder.setBolt("mysqlBolt", mysqlBolt).shuffleGrouping("tickBolt");

            Config config = new Config();
            StormTopology stormTopology = topologyBuilder.createTopology();
            if (args != null && args.length > 0) {
                // Submit to the cluster.
                StormSubmitter.submitTopology(args[0], config, stormTopology);
            } else {
                // Run locally.
                LocalCluster localCluster = new LocalCluster();
                localCluster.submitTopology("storm-mysql", config, stormTopology);
            }
        }
    }

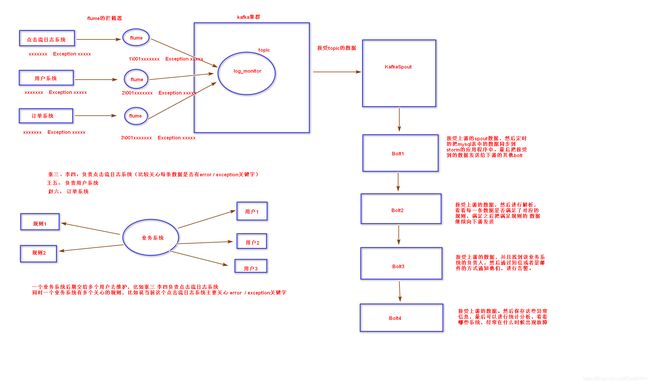
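The comments in TickBolt say that the emitted field names must match the table's column names. The following plain-Java sketch shows why a name-based mapping needs that. It is a simplified illustration under stated assumptions, not storm-jdbc's actual implementation: `InsertQuerySketch`, `buildInsert`, and `bind` are hypothetical names, and the tuple is modelled as a plain map.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.Collections;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

// Simplified model of mapping tuple fields to table columns by name.
class InsertQuerySketch {

    // Build a parameterized insert statement for the given columns.
    static String buildInsert(String table, List<String> columns) {
        String names = String.join(", ", columns);
        String marks = String.join(", ", Collections.nCopies(columns.size(), "?"));
        return "insert into " + table + " (" + names + ") values (" + marks + ")";
    }

    // Look up each column's value in the tuple by field name, in column order.
    static List<Object> bind(List<String> columns, Map<String, Object> tupleFields) {
        List<Object> values = new ArrayList<>();
        for (String column : columns) {
            if (!tupleFields.containsKey(column)) {
                throw new IllegalArgumentException("tuple has no field named " + column);
            }
            values.add(tupleFields.get(column));
        }
        return values;
    }

    public static void main(String[] args) {
        List<String> columns = Arrays.asList("id", "name", "age");
        Map<String, Object> tuple = new LinkedHashMap<>();
        tuple.put("id", "1");
        tuple.put("name", "zhangsan");
        tuple.put("age", "30");

        System.out.println(buildInsert("person", columns));
        System.out.println(bind(columns, tuple));
    }
}
```

If the bolt declared a field as, say, "username" while the column is called "name", the lookup by column name would find nothing, which is the mismatch the TickBolt comments warn about.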

5. Log monitoring and alerting system

5.1 Developing a custom Flume interceptor

    package com.hrh.flume;

    import org.apache.commons.lang.StringUtils;
    import org.apache.flume.Context;
    import org.apache.flume.Event;
    import org.apache.flume.interceptor.Interceptor;

    import java.nio.charset.StandardCharsets;
    import java.util.ArrayList;
    import java.util.List;

    // Requirement: prefix every log line with an appId that identifies the source application.
    public class FlumeInterceptorApp implements Interceptor {
        private String appId;

        public FlumeInterceptorApp(String appId) {
            this.appId = appId;
        }

        @Override
        public Event intercept(Event event) {
            String message = new String(event.getBody(), StandardCharsets.UTF_8);
            // error java.lang.TypeNotPresentException
            //   ---> 1\001error java.lang.TypeNotPresentException
            if (StringUtils.isNotBlank(message)) {
                message = this.appId + "\001" + message;
                // Encode with the same charset that was used for decoding.
                event.setBody(message.getBytes(StandardCharsets.UTF_8));
            }
            return event;
        }

        @Override
        public List<Event> intercept(List<Event> list) {
            List<Event> resultList = new ArrayList<Event>();
            for (Event event : list) {
                Event r = intercept(event);
                if (r != null) {
                    resultList.add(r);
                }
            }
            return resultList;
        }

        @Override
        public void close() {
        }

        @Override
        public void initialize() {
        }

        public static class AppInterceptorBuilder implements Interceptor.Builder {
            private String appId;

            @Override
            public Interceptor build() {
                return new FlumeInterceptorApp(this.appId);
            }

            @Override
            public void configure(Context context) {
                // Read appId from the agent configuration; fall back to "default".
                this.appId = context.getString("appId", "default");
                System.out.println("appId:" + this.appId);
            }
        }
    }

5.2 Flume configuration and running

    a1.sources = r1
    a1.channels = c1
    a1.sinks = k1

    a1.sources.r1.type = exec
    a1.sources.r1.command = tail -F /export/data/flume/click_log/error.log
    a1.sources.r1.channels = c1
    a1.sources.r1.interceptors = i1
    a1.sources.r1.interceptors.i1.type = com.hrh.flume.FlumeInterceptorApp$AppInterceptorBuilder
    a1.sources.r1.interceptors.i1.appId = 1

    a1.channels.c1.type=memory
    a1.channels.c1.capacity=10000
    a1.channels.c1.transactionCapacity=100

    a1.sinks.k1.type = org.apache.flume.sink.kafka.KafkaSink
    a1.sinks.k1.topic = log_monitor
    a1.sinks.k1.brokerList = node1:9092,node2:9092,node3:9092
    a1.sinks.k1.requiredAcks = 1
    a1.sinks.k1.batchSize = 20
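Whatever consumes the log_monitor topic downstream has to undo the prefix that the interceptor in 5.1 adds. A minimal sketch of both directions in plain Java follows; `AppIdPrefixSketch`, `tag`, and `parse` are hypothetical names introduced here, and only the `\001` separator comes from the interceptor code.

```java
// Tagging and parsing of the "appId\001message" format used by the interceptor.
class AppIdPrefixSketch {
    // Same separator the interceptor uses: the control character U+0001.
    static final String SEPARATOR = "\001";

    // Mirror of what the interceptor does to a non-blank message.
    static String tag(String appId, String message) {
        return appId + SEPARATOR + message;
    }

    // Split on the first separator only, so a message that happens to
    // contain U+0001 itself is not cut short. Returns {appId, message}.
    static String[] parse(String tagged) {
        int idx = tagged.indexOf(SEPARATOR);
        if (idx < 0) {
            throw new IllegalArgumentException("no appId prefix found");
        }
        return new String[] {
            tagged.substring(0, idx),
            tagged.substring(idx + SEPARATOR.length())
        };
    }

    public static void main(String[] args) {
        String tagged = tag("1", "error java.lang.TypeNotPresentException");
        String[] parts = parse(tagged);
        System.out.println("appId=" + parts[0] + ", message=" + parts[1]);
    }
}
```

A control character is a reasonable separator for log text because it is unlikely to appear in ordinary messages, unlike a comma or a space.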