Introduction to the RPN

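The RPN (Region Proposal Network) is the most important improvement introduced by Faster R-CNN. Its main job is to generate region proposals. Informally, region proposals can be thought of as candidate bounding boxes derived from a large set of predefined boxes called anchors (each anchor is a rectangle described by 4 coordinates).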

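Why are region proposals needed? Before detection, the network does not know how many objects an image contains. The RPN therefore lays out a fixed set of anchors over the original image and finally outputs the anchors most likely to contain an object; these are the region proposals. During training, the network learns to adjust the proposal positions so that their deviation from the ground-truth boxes is as small as possible.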

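The structure of the RPN is shown in the figure below. The feature map output by the backbone first passes through a 3 × 3 convolution and then splits into two branches, each a 1 × 1 convolution. The first branch is the localization (regression) layer, which outputs 4 coordinate offsets per anchor. The second is the classification layer, which outputs background/foreground scores per anchor (turned into probabilities with a softmax).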

![]()
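To make the two branch outputs concrete, here is a small NumPy sketch. It is an illustration under assumptions, not the author's code: toy sizes, random weights, no biases, and the batch dimension dropped. A 1 × 1 convolution is just a matrix multiply applied at every pixel; moving channels last and flattening then gives one row per (y, x, anchor), the same layout the RPN code obtains with permute(0, 2, 3, 1).view(...).

```python
import numpy as np

# Toy sizes (assumed): C input channels, an H x W feature map, A = 9 anchors per cell.
C, H, W, A = 512, 4, 6, 9
rng = np.random.default_rng(0)
mid_feat = rng.standard_normal((C, H, W))  # stands in for the 3x3 conv output

# A 1x1 convolution is a linear map applied independently at every pixel (biases omitted).
w_reg = rng.standard_normal((4 * A, C)) * 0.01  # localization branch: 4 offsets per anchor
w_cls = rng.standard_normal((2 * A, C)) * 0.01  # classification branch: 2 scores per anchor
reg_map = np.einsum("oc,chw->ohw", w_reg, mid_feat)  # shape (4A, H, W)
cls_map = np.einsum("oc,chw->ohw", w_cls, mid_feat)  # shape (2A, H, W)

# Channels last, then flatten: row (y * W + x) * A + a holds anchor a at cell (y, x).
reg_off = reg_map.transpose(1, 2, 0).reshape(-1, 4)    # (H*W*A, 4)
cls_score = cls_map.transpose(1, 2, 0).reshape(-1, 2)  # (H*W*A, 2)
print(reg_off.shape, cls_score.shape)  # (216, 4) (216, 2)
```

The flattening assumes that the 4 (or 2) channels belonging to one anchor are adjacent, so the anchors generated later must be enumerated in the same (y, x, anchor) order.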

RPN Structure and Workflow

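With the overall structure in mind, let us walk through the RPN in detail. The parts already shown in the previous figure are not repeated; the focus here is on how the RPN generates and processes anchors. The figure below shows the detailed structure of the RPN.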

![]()

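Step 1: generate the base anchors (base_anchor). The number of base anchors equals the number of aspect ratios times the number of scales, i.e. num_base = len(ratios) * len(scales). Here 3 aspect ratios (1:1, 1:2, 2:1) and 3 scales (8, 16, 32) are used, so num_base = 9. The figure below shows the 9 anchors at one position.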

![]()
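The arithmetic behind the 9 base anchors, as a small pure-Python sketch using the same defaults as the code below (base_size = 16, ratios 0.5/1/2, scales 8/16/32). Each ratio keeps the area fixed at (16 × scale)², so the three scales give anchors of 128², 256² and 512² pixels while the ratio only changes the shape. With these formulas the ratio is width / height.

```python
import math

base_size = 16        # default base_size of _generate_base
ratios = [0.5, 1, 2]  # defaults of RegionProposalNetwork
scales = [8, 16, 32]

sizes = []
for r in ratios:
    for s in scales:
        # Same formulas as _generate_base: area (base_size * s) ** 2, width / height = r.
        w = base_size * s * math.sqrt(r)
        h = base_size * s / math.sqrt(r)
        sizes.append((r, s, w, h))

for r, s, w, h in sizes:
    print(f"ratio={r:<4} scale={s:<3} w={w:7.1f} h={h:7.1f}")
```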

Code

```python
def _generate_base(self, base_size=16):
    """
    Generate the base anchors centred on one feature-map cell.

    Returns a tensor of shape [num_base, 4] in [xmin, ymin, xmax, ymax] format.
    (t = torch and np = numpy, imported in the RPN module below.)
    """
    # centre of the first cell in input-image coordinates
    px = base_size / 2.
    py = base_size / 2.

    base_anchors = t.zeros(self.num_base, 4)
    for i in range(len(self.ratios)):
        for j in range(len(self.scales)):
            # keep the area equal to (base_size * scale)^2 for every ratio;
            # the resulting width / height ratio is self.ratios[i]
            w = base_size * self.scales[j] * np.sqrt(self.ratios[i])
            h = base_size * self.scales[j] / np.sqrt(self.ratios[i])

            # row index: ratio-major, then scale
            idx = i * len(self.scales) + j
            base_anchors[idx, 0] = px - w / 2
            base_anchors[idx, 1] = py - h / 2
            base_anchors[idx, 2] = px + w / 2
            base_anchors[idx, 3] = py + h / 2

    return base_anchors
```

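Step 2: using the base anchors, generate 9 anchors of different sizes centred on the image location of every feature-map pixel. For a 60 × 40 feature map this gives $60 \times 40 \times 9 = 21600$ anchors in total. The figure below shows where each feature-map pixel falls on the original image. Note that all generated anchors are expressed in the coordinates of the original (input) image.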

![]()
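A self-contained NumPy sketch of step 2, assuming the same defaults (stride 16, ratios 0.5/1/2, scales 8/16/32). The helper names make_base_anchors and shift_anchors are hypothetical; the sketch only illustrates the shift-and-broadcast idea and the resulting row order.

```python
import numpy as np


def make_base_anchors(base_size=16, ratios=(0.5, 1, 2), scales=(8, 16, 32)):
    """Hypothetical helper: [xmin, ymin, xmax, ymax] base anchors centred at (base_size/2, base_size/2)."""
    c = base_size / 2.0
    out = []
    for r in ratios:
        for s in scales:
            w = base_size * s * np.sqrt(r)
            h = base_size * s / np.sqrt(r)
            out.append([c - w / 2, c - h / 2, c + w / 2, c + h / 2])
    return np.array(out)


def shift_anchors(base_anchors, feat_stride, h, w):
    """Hypothetical helper: place every base anchor at every feature-map cell.

    Returns shape (h * w * A, 4); row (y * w + x) * A + a is base anchor a
    shifted to cell (y, x), i.e. the same (y, x, anchor) order as the predictions.
    """
    ys, xs = np.meshgrid(np.arange(h), np.arange(w), indexing="ij")  # each (h, w)
    # one [x, y, x, y] shift per cell, in input-image pixels
    shifts = np.stack([xs, ys, xs, ys], axis=-1).reshape(-1, 1, 4) * feat_stride
    # broadcast (h*w, 1, 4) + (1, A, 4) -> (h*w, A, 4)
    return (shifts + base_anchors[None, :, :]).reshape(-1, 4)


base = make_base_anchors()
anchors = shift_anchors(base, feat_stride=16, h=40, w=60)
print(anchors.shape)  # (21600, 4)
```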

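Code

```python
def _generate_all(self, h, w):
    """
    Generate all anchors on the input image from the base anchors.

    Params:
        h(integer): feature map height
        w(integer): feature map width

    Returns:
        tensor of shape [h * w * num_base, 4]; row (y * w + x) * num_base + a is
        base anchor a shifted to feature cell (y, x), the same order as the RPN
        predictions after permute(0, 2, 3, 1)
    """
    # with indexing='ij' (PyTorch 1.10 or later) the first output varies along
    # rows (y) and the second along columns (x); both have shape [h, w]
    y_shift, x_shift = t.meshgrid(t.arange(h), t.arange(w), indexing='ij')
    # meshgrid returns expanded (non-contiguous) tensors, so call contiguous() before view()
    x_shift = x_shift.contiguous().view(-1, 1) * self.feat_stride
    y_shift = y_shift.contiguous().view(-1, 1) * self.feat_stride

    # broadcast [h*w, 1] + [num_base] -> [h*w, num_base] for each coordinate
    anchors = t.stack([
        x_shift + self.base_anchors[:, 0],
        y_shift + self.base_anchors[:, 1],
        x_shift + self.base_anchors[:, 2],
        y_shift + self.base_anchors[:, 3]], dim=-1)

    return anchors.view(-1, 4)
```

Step 3, the final step, is anchor filtering. First, the coordinate offsets output by the localization layer are applied to all generated anchors (the "anchor to iou" stage in Figure 2); the resulting boxes are clipped to the image, and boxes smaller than min_size are discarded. The remaining boxes are sorted by foreground probability (score) in descending order. As Figure 2 shows, only the top pre_nms_num boxes are kept (12000 during training, 6000 at test time), and these are finally filtered by non-maximum suppression (NMS), keeping at most post_nms_num boxes (2000 during training, 300 at test time). The surviving boxes are the RoIs.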
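The selection logic of step 3 can be sketched in plain NumPy. This is an illustrative stand-in, not the project's implementation: the real code calls a CUDA NMS (non_maximum_suppression via CuPy), whereas here a simple greedy CPU NMS is swapped in, and select_proposals is a hypothetical helper that mirrors the clip, min-size filter, sort, pre-NMS cut, NMS and post-NMS cut described above. Its defaults follow the training-stage values of ProposalCreator.

```python
import numpy as np


def nms(boxes, thresh):
    """Greedy NMS on [xmin, ymin, xmax, ymax] boxes already sorted by score (descending).
    Returns the indices of the kept boxes."""
    areas = (boxes[:, 2] - boxes[:, 0]) * (boxes[:, 3] - boxes[:, 1])
    order = np.arange(len(boxes))
    keep = []
    while order.size > 0:
        i = order[0]  # highest-scoring box still in play
        keep.append(i)
        rest = order[1:]
        # intersection of box i with every remaining box
        tl = np.maximum(boxes[i, :2], boxes[rest, :2])
        br = np.minimum(boxes[i, 2:], boxes[rest, 2:])
        inter = np.prod(np.clip(br - tl, 0, None), axis=1)
        iou = inter / (areas[i] + areas[rest] - inter)
        order = rest[iou <= thresh]  # drop boxes that overlap box i too much
    return np.array(keep, dtype=np.int64)


def select_proposals(boxes, scores, img_h, img_w, min_size=16,
                     pre_nms=12000, post_nms=2000, thresh=0.7):
    """Hypothetical helper mirroring ProposalCreator on already-decoded boxes."""
    boxes = boxes.copy()
    boxes[:, 0::2] = np.clip(boxes[:, 0::2], 0, img_w)  # clip x to the image
    boxes[:, 1::2] = np.clip(boxes[:, 1::2], 0, img_h)  # clip y to the image
    keep = np.all(boxes[:, 2:] - boxes[:, :2] >= min_size, axis=1)
    boxes, scores = boxes[keep], scores[keep]
    order = np.argsort(-scores)[:pre_nms]  # descending score, top pre_nms
    boxes = boxes[order]
    return boxes[nms(boxes, thresh)[:post_nms]]


boxes = np.array([
    [10, 10, 110, 110],    # A
    [12, 12, 112, 112],    # B: almost the same as A
    [200, 200, 300, 300],  # C: a separate object
    [50, 50, 55, 55],      # D: smaller than min_size
    [-20, -20, 80, 80],    # E: partly outside the image
], dtype=float)
scores = np.array([0.9, 0.8, 0.7, 0.95, 0.6])
props = select_proposals(boxes, scores, img_h=400, img_w=400, post_nms=10)
print(props)
```

In this toy input, B overlaps A with an IoU of about 0.92 and is suppressed, the 5-pixel box D is removed by the min-size filter even though it has the highest score, and E survives after being clipped to [0, 0, 80, 80] because its IoU with A is only about 0.43.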

RPN Implementation

First, the full code of the RegionProposalNetwork class.

```python
# ------------------------ rpn ----------------------#
import numpy as np
import torch as t
import torch.nn as nn
import torch.nn.functional as F

from model.utils.proposal_creator import ProposalCreator

t.random.manual_seed(0)


class RegionProposalNetwork(nn.Module):
    """
    Description:
        Produce region proposals (RoIs) from the backbone feature map.

    Params:
        in_channels: channels of the feature map fed into the RPN
        mid_channels: output channels of the intermediate 3x3 convolution
        ratios(list): anchor width / height ratios
        scales(list): anchor scales, multiplied by the 16-pixel base size
        feat_stride: stride of the feature map relative to the input image
        **kwargs: forwarded to ProposalCreator
    """

    def __init__(self, in_channels=512, mid_channels=512, ratios=[0.5, 1, 2],
                 scales=[8, 16, 32], feat_stride=16, **kwargs):
        super(RegionProposalNetwork, self).__init__()
        self.ratios = ratios
        self.feat_stride = feat_stride
        self.scales = scales
        self.conv1 = nn.Sequential(
            nn.Conv2d(in_channels, mid_channels, 3, padding=1),
            nn.ReLU(inplace=True)
        )
        self.num_base = len(ratios) * len(scales)
        # _generate_base and _generate_all are the methods shown above
        self.base_anchors = self._generate_base()
        # regression branch: 4 offsets per anchor
        self.reg_conv = nn.Conv2d(mid_channels, 4 * self.num_base, 1)
        # classification branch: background/foreground scores per anchor
        self.cls_conv = nn.Conv2d(mid_channels, 2 * self.num_base, 1)
        self.norm_init(self.conv1[0], 0, 0.01)
        self.norm_init(self.cls_conv, 0, 0.01)
        self.norm_init(self.reg_conv, 0, 0.01)
        self.proposalCreator = ProposalCreator(self, **kwargs)

    @staticmethod
    def norm_init(layer, mean, std):
        # not shown in the original listing; assumed to be a plain
        # normal-weight / zero-bias initialisation
        layer.weight.data.normal_(mean, std)
        layer.bias.data.zero_()

    def forward(self, img, img_size, scale=1.):
        """
        Params:
            img(tensor): extracted feature map, shape [batch_size, channels, height, width]; channels is 512 here
            img_size(tuple): input image size, [height, width]
            scale(float): ratio between the input image and the original image

        Returns:
            reg_off(tensor): predicted anchor offsets, shape [B, N, 4]
            cls_score(tensor): predicted background/foreground scores (before softmax), shape [B, N, 2]
            roi_idxes(tensor): batch index of each RoI, shape [R,]
            rois(tensor): generated region proposals, shape [R, 4]
            anchors(tensor): all anchors, shape [N, 4] with N = h * w * num_base
        """
        b, _, h, w = img.shape
        # generate all anchors (in input-image coordinates)
        anchors = self._generate_all(h, w)

        mid_feat = self.conv1(img)
        # background/foreground scores, shape [b, num_base * 2, h, w]
        cls_score = self.cls_conv(mid_feat)
        # coordinate offsets, shape [b, num_base * 4, h, w]
        reg_off = self.reg_conv(mid_feat)

        # permute() makes the tensor non-contiguous, so call contiguous() before view()
        # reference: https://discuss.pytorch.org/t/when-and-why-do-we-use-contiguous/47588
        reg_off = reg_off.permute(0, 2, 3, 1).contiguous().view(b, -1, 4)
        cls_score = cls_score.permute(0, 2, 3, 1).contiguous().view(b, h, w, -1, 2)
        soft_score = F.softmax(cls_score, dim=-1)
        # index 1 is the foreground probability
        fg_score = soft_score[..., 1].contiguous().view(b, -1)
        cls_score = cls_score.view(b, -1, 2)

        rois = []
        roi_idxes = []
        for batch in range(b):
            # apply the predicted offsets to the anchors and filter them
            roi = self.proposalCreator(anchors, reg_off[batch].cpu(),
                                       fg_score[batch].cpu(), img_size, scale)
            rois.append(roi)
            # every RoI of this image is tagged with the image's batch index
            roi_idxes.append(t.full((len(roi),), batch, dtype=t.int32))

        rois = t.cat(rois, dim=0)
        roi_idxes = t.cat(roi_idxes)
        return reg_off, cls_score, roi_idxes, rois, anchors
```
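Two details of forward are easy to get wrong: which softmax output is the foreground score, and how each RoI is tagged with the image it came from. The NumPy sketch below mirrors those two steps with made-up sizes and proposal counts (it does not run the network; softmax is a small local helper). Each entry of roi_idxes holds its image's batch number, so later stages can tell which feature map an RoI belongs to.

```python
import numpy as np


def softmax(x, axis=-1):
    # numerically stable softmax along one axis
    e = np.exp(x - x.max(axis=axis, keepdims=True))
    return e / e.sum(axis=axis, keepdims=True)


b, h, w, A = 2, 3, 4, 9
rng = np.random.default_rng(0)
cls_map = rng.standard_normal((b, 2 * A, h, w))  # stands in for the raw cls_conv output

# NumPy equivalent of permute(0, 2, 3, 1).view(b, h, w, A, 2)
cls_score = cls_map.transpose(0, 2, 3, 1).reshape(b, h, w, A, 2)
fg_score = softmax(cls_score)[..., 1].reshape(b, -1)  # (b, h*w*A), index 1 = foreground

# Made-up proposal counts: image 0 kept 3 RoIs, image 1 kept 2.
rois_per_image = [np.zeros((3, 4)), np.ones((2, 4))]
rois = np.concatenate(rois_per_image, axis=0)
roi_idxes = np.concatenate(
    [np.full(len(r), i, dtype=np.int32) for i, r in enumerate(rois_per_image)])
print(roi_idxes)  # [0 0 0 1 1]
```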

Next is the ProposalCreator class. It performs the RPN's anchor filtering and outputs the region proposals (RoIs).

```python
# -------------------- ProposalCreator ---------------#
import cupy as cp
import torch as t

from utils.bbox_tool import loc2bbox
from model.utils.nms.non_maximum_suppression import non_maximum_suppression


class ProposalCreator:
    """
    Description:
        Select region proposals for the RoI head from all predefined anchors.

    Params:
        parent_model: the RPN; its .training flag selects the train/test settings
        train_pre_nms(int): number of boxes passed to NMS during training
        train_post_nms(int): number of boxes kept after NMS during training
        test_pre_nms(int): number of boxes passed to NMS during testing
        test_post_nms(int): number of boxes kept after NMS during testing
        min_size(int): minimum box width/height, in original-image pixels
        nms_thresh(float): IoU threshold for NMS
    """

    def __init__(self, parent_model, train_pre_nms=12000, train_post_nms=2000,
                 test_pre_nms=6000, test_post_nms=300, min_size=16, nms_thresh=0.7):
        self.parent_model = parent_model
        self.train_pre_nms = train_pre_nms
        self.train_post_nms = train_post_nms
        self.test_pre_nms = test_pre_nms
        self.test_post_nms = test_post_nms
        self.min_size = min_size
        self.nms_thresh = nms_thresh

    def __call__(self, anchors, offs, scores, img_size, scale=1.):
        """
        Filter proposals from all anchors.

        Params:
            anchors(tensor): all anchors, shape [h*w*9, 4]
            offs(tensor): predicted coordinate offsets for all anchors, shape [h*w*9, 4]
            scores(tensor): foreground probability of all anchors, shape [h*w*9,]
            img_size(tuple): [height, width]
            scale(float): ratio between the input image and the original image
        """
        # the RPN's training flag selects the train or test settings
        if self.parent_model.training:
            pre_num, post_num = self.train_pre_nms, self.train_post_nms
        else:
            pre_num, post_num = self.test_pre_nms, self.test_post_nms

        # apply the predicted offsets to the anchors
        bboxes = loc2bbox(anchors, offs)

        # clip all four coordinates: x in [0, img_size[1]], y in [0, img_size[0]]
        bboxes[:, 0::2] = t.clamp(bboxes[:, 0::2], min=0, max=img_size[1])
        bboxes[:, 1::2] = t.clamp(bboxes[:, 1::2], min=0, max=img_size[0])

        # remove boxes whose width or height is smaller than min_size
        min_size = self.min_size * scale
        keep = t.all(bboxes[:, 2:] - bboxes[:, :2] >= min_size, dim=1)
        bboxes = bboxes[keep]
        scores = scores[keep]

        # sort by score in descending order and keep the top pre_num boxes
        idx = scores.argsort(descending=True)[:pre_num]
        bboxes = bboxes[idx]

        # CUDA NMS; no need to pass the scores because bboxes are already sorted by score
        keep = non_maximum_suppression(
            cp.ascontiguousarray(cp.array(bboxes.detach().numpy())), self.nms_thresh)
        keep = t.from_numpy(keep).type(t.long)

        # keep at most post_num boxes
        return bboxes[keep][:post_num]
```

Discussion

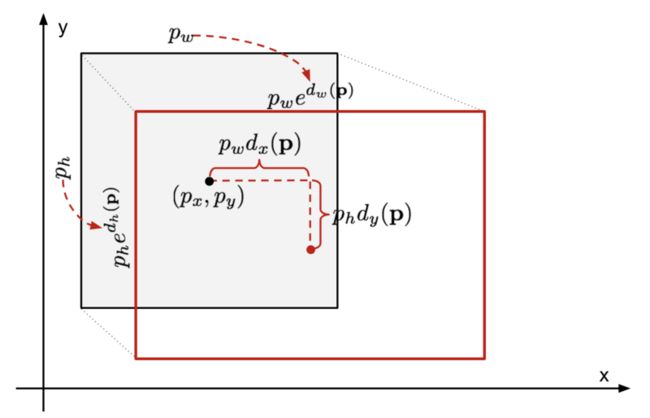

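- Why does the RPN predict coordinate offsets for each anchor (the $dx, dy, dw, dh$ in the figure) instead of directly predicting the center coordinates and width/height, or the 4 corner coordinates?

a. If the network predicted the center coordinates and width/height, or the 4 corners, directly, many predictions would be invalid, because the raw network output is unlikely to satisfy the geometric constraints of a box (for example xmin < xmax, and a positive width and height).

b. Objects in an image vary widely in size and shape, so absolute centers, sizes or corners span a very large range of values, which makes the network hard to train.

c. Offsets, by contrast, are small in magnitude and are defined by simple closed-form formulas that are easy to differentiate.

- Coordinate transformation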

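From the figure above, the box obtained by applying the predicted offsets to an anchor is:

$$\begin{cases}gx=px+dx\cdot pw\\ gy=py+dy\cdot ph\\ gw=pw\cdot\exp(dw)\\ gh=ph\cdot\exp(dh)\end{cases}$$

Here $[px,py,pw,ph]$ are the anchor's center coordinates, width and height, $[dx,dy,dw,dh]$ are the offsets predicted by the RPN, and $[gx,gy,gw,gh]$ is the anchor after the offsets are applied.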
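Inverting the four equations gives the offsets that move an anchor onto a given box: dx = (gx - px) / pw, dy = (gy - py) / ph, dw = ln(gw / pw), dh = ln(gh / ph). The NumPy sketch below (hypothetical helpers decode and encode, boxes in center/size format) checks the round trip and illustrates point c: for a 128 × 128 anchor and a nearby box the offsets are small numbers, e.g. dx = 10 / 128 ≈ 0.078 and dw = ln 1.25 ≈ 0.223.

```python
import numpy as np


def decode(anchor, d):
    """Apply offsets d = [dx, dy, dw, dh] to anchor = [px, py, pw, ph]; returns [gx, gy, gw, gh]."""
    px, py, pw, ph = anchor
    dx, dy, dw, dh = d
    return np.array([px + dx * pw, py + dy * ph, pw * np.exp(dw), ph * np.exp(dh)])


def encode(anchor, box):
    """Inverse of decode: offsets that move `anchor` onto `box` (both center + size)."""
    px, py, pw, ph = anchor
    gx, gy, gw, gh = box
    return np.array([(gx - px) / pw, (gy - py) / ph, np.log(gw / pw), np.log(gh / ph)])


anchor = np.array([100.0, 100.0, 128.0, 128.0])
box = np.array([110.0, 90.0, 160.0, 100.0])
d = encode(anchor, box)
print(np.round(d, 3))
```

Because the width and height pass through exp, any real-valued dw and dh still yields a positive size, which is one reason the offset form avoids the invalid boxes mentioned in point a.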

Code

def to_xyxy(bbox):

"""

convert bbox with format [centerx, centery, w, h] to bbox with format [xmin, ymin, xmax, ymax]

"""

bbox1 = bbox.clone()

bbox1[:, :2] = bbox[:, :2] - bbox[:, 2:] / 2.

bbox1[:, 2:] = bbox[:, :2] + bbox[:, 2:] / 2.

return bbox1

def to_xywh(bbox):

"""

convert bbox with format [xmin, ymin, xmax, ymax] to bbox with format [centerx, centery, w, h]

"""

bbox1 = bbox.clone()

# width = bbox[:, 2:3] - bbox[:, 0:1]

# height = bbox[:, 3:4] - bbox[:, 1:2]

# center_x = width + width / 2.

# center_y = height + height / 2.

bbox1[:, 2:] = bbox[:, 2:] - bbox[:, :2]

bbox1[:, :2] = bbox[:, :2] + bbox1[:, 2:] / 2

# return t.cat([center_x, center_y, width, height], dim=1)

return bbox1

def loc2bbox(anchors, offsets, xyxy=True):

"""

apply offset to anchors

Params:

anchors(tensor): anchors with format [xmin, ymin, xmax, ymax]

offsets(tensor): [dx, dy, dw, dh]

[gx, gy, gw, gy] is translated anchors size

[px, py, pw, py] is anchors size

gx = px + pw * dx

gy = py + ph * dy

gw = pw * exp(dw)

gh = ph * exp(dw)

"""

if xyxy:

anchors = to_xywh(anchors)

gx = anchors[:, 0:1] + anchors[:, 2:3] * offsets[:, 0:1]

gy = anchors[:, 1:2] + anchors[:, 3:4] * offsets[:, 1:2]

gw = anchors[:, 2:3] * t.exp(offsets[:, 2:3])

gh = anchors[:, 3:4] * t.exp(offsets[:, 3:4])

# anchors[:, :2] += offsets[:, :2] * anchors[:, 2:]

# anchors[:, ] += offsets[:, :2] * anchors[:, 2:]

# anchors[:, 2:] = t.exp(offsets[:, 2:]) * anchors[:, 2:]

anchors = t.cat([gx, gy, gw, gh], dim=1)

anchors = to_xyxy(anchors)

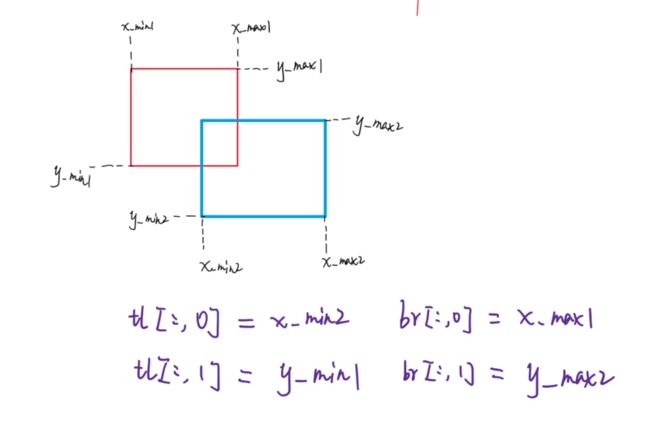

return anchors- iou的计算
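The quantity that bbox_iou computes, written out with a small worked example (the two boxes are chosen only for illustration):

$$\mathrm{IoU}(A,B)=\frac{|A\cap B|}{|A|+|B|-|A\cap B|}$$

For $A=[0,0,2,2]$ and $B=[1,1,3,3]$ in $[xmin, ymin, xmax, ymax]$ format, the intersection runs from $(1,1)$ to $(2,2)$, so $|A\cap B|=1$ and $|A|=|B|=4$:

$$\mathrm{IoU}(A,B)=\frac{1}{4+4-1}=\frac{1}{7}\approx 0.143$$

For disjoint boxes, the (tl < br).all(axis=2) factor in bbox_iou sets the intersection to 0, so the IoU is 0.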

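```python
import numpy as np


def bbox_iou(bbox_a, bbox_b):
    """
    Pairwise IoU between two sets of [xmin, ymin, xmax, ymax] boxes.

    Returns:
        array of shape (bbox_a.shape[0], bbox_b.shape[0])
    """
    if bbox_a.shape[1] != 4 or bbox_b.shape[1] != 4:
        raise IndexError("boxes must have 4 coordinates")

    # top-left corner of each intersection
    tl = np.maximum(bbox_a[:, None, :2], bbox_b[:, :2])
    # bottom-right corner of each intersection
    br = np.minimum(bbox_a[:, None, 2:], bbox_b[:, 2:])

    # intersection area, zeroed where the boxes do not overlap
    area_i = np.prod(br - tl, axis=2) * (tl < br).all(axis=2)
    area_a = np.prod(bbox_a[:, 2:] - bbox_a[:, :2], axis=1)
    area_b = np.prod(bbox_b[:, 2:] - bbox_b[:, :2], axis=1)
    return area_i / (area_a[:, None] + area_b - area_i)
```

The RPN loss will be explained in detail later, in the section on the Faster R-CNN loss.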
