Preface

Machine translation here mainly uses a seq2seq model combined with an Attentional Model.

Project: see Seq2seq-Translation

Introduction of Sequence Model

Language Model

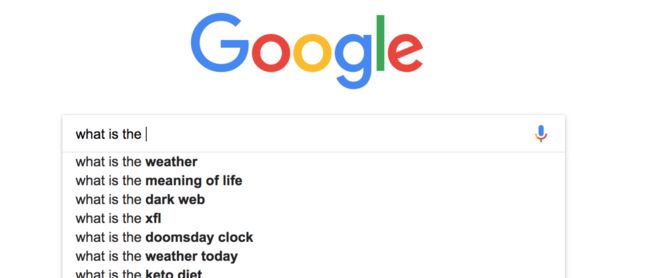

Language modeling is the task of predicting which word comes next.

Conventional LM

- Probability Presentation Problem

- Sparsity Problem

- Huge model size
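
To make the sparsity problem concrete, here is a minimal sketch of a count-based bigram model. The toy corpus and the helper names (`train_bigram_counts`, `bigram_prob`) are assumptions made for illustration, not part of the project. Any word pair that never appears in training gets probability zero, and the count table can grow with the square of the vocabulary size.

```python
from collections import Counter

def train_bigram_counts(tokens):
    """Count unigrams and adjacent word pairs in a token list."""
    unigrams = Counter(tokens)
    bigrams = Counter(zip(tokens, tokens[1:]))
    return unigrams, bigrams

def bigram_prob(prev, word, unigrams, bigrams):
    """Maximum-likelihood estimate P(word | prev) = count(prev, word) / count(prev)."""
    if unigrams[prev] == 0:
        return 0.0
    return bigrams[(prev, word)] / unigrams[prev]

# Toy corpus (assumed for illustration only).
corpus = "the cat sat on the mat".split()
unigrams, bigrams = train_bigram_counts(corpus)

p_seen = bigram_prob("the", "cat", unigrams, bigrams)    # pair observed in the corpus
p_unseen = bigram_prob("the", "dog", unigrams, bigrams)  # pair never observed: sparsity
```

Smoothing or back-off can soften the zero estimates, but the storage cost of the counts remains.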

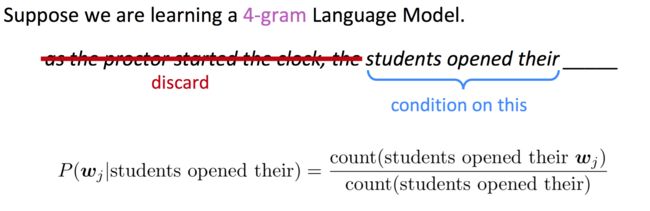

Neural Language Model

- The fixed window is too small

- Cannot handle inputs of arbitrary length

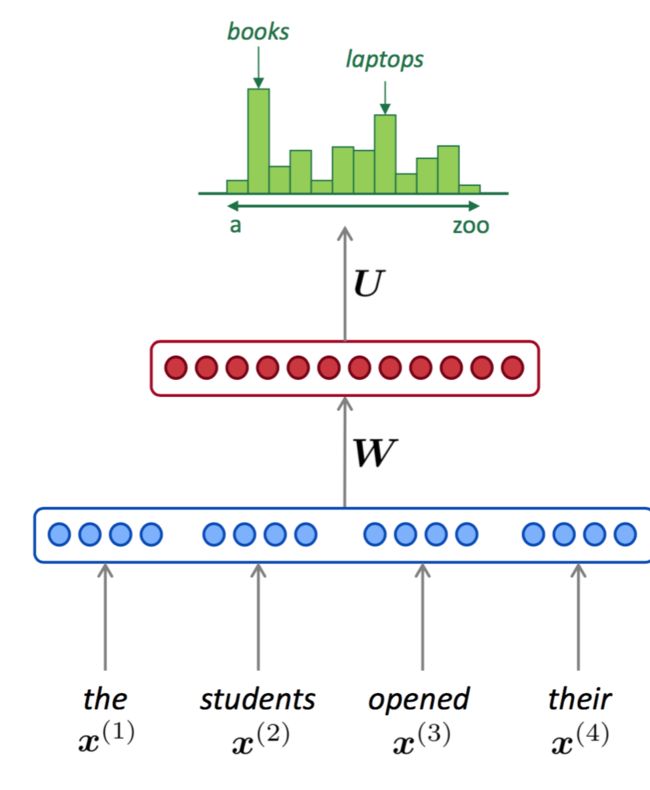

Recurrent Neural Networks Language Model

Advantages:

- Can process inputs of any length

- Weights are shared across time steps

Disadvantages:

- Gradients can vanish or explode

- Training is slow
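
The two advantages can be seen in a minimal sketch of a vanilla (Elman) RNN written with plain Python lists. The weight values and the function names `rnn_step` and `run_rnn` are assumptions chosen for illustration. The same `W_h`, `W_x` and `b` are reused at every time step, so one set of parameters handles sequences of any length.

```python
import math

def rnn_step(h_prev, x, W_h, W_x, b):
    """One step: h_t = tanh(W_h h_{t-1} + W_x x_t + b)."""
    h_new = []
    for i in range(len(b)):
        total = b[i]
        total += sum(W_h[i][j] * h_prev[j] for j in range(len(h_prev)))
        total += sum(W_x[i][j] * x[j] for j in range(len(x)))
        h_new.append(math.tanh(total))
    return h_new

def run_rnn(inputs, W_h, W_x, b):
    """Apply the same weights to every element of a sequence of any length."""
    h = [0.0] * len(b)
    states = []
    for x in inputs:
        h = rnn_step(h, x, W_h, W_x, b)
        states.append(h)
    return states

# Illustrative weights (assumed): hidden size 2, input size 1.
W_h = [[0.5, 0.0], [0.0, 0.5]]
W_x = [[1.0], [-1.0]]
b = [0.0, 0.0]

short = run_rnn([[1.0]], W_h, W_x, b)
long = run_rnn([[1.0], [0.5], [-0.25]], W_h, W_x, b)
```

The sequential loop is also why training is slow: step t cannot start before step t-1 has finished.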

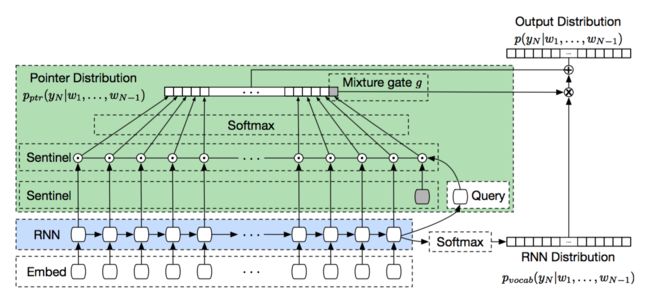

Pointer Sentinel Mixture Models

- Integrates an RNN with a pointer-sentinel network

- The RNN predicts a word from the given vocabulary, while the pointer-sentinel network predicts a word from the previous window

- Pointer sentinel networks also help with tasks such as QA and passage summarization

- Papers: Pointer Networks | Pointer Sentinel Mixture Models
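
The way the two predictors are combined can be sketched as a gated mixture, p(w) = g · p_vocab(w) + (1 − g) · p_ptr(w). In the sketch below the gate value, the toy distributions and the function name `mixture_prob` are assumptions for illustration. In the paper the gate comes from the attention mass assigned to the sentinel, which is not modeled here.

```python
def mixture_prob(word, p_vocab, window, ptr_weights, gate):
    """p(word) = gate * p_vocab(word) + (1 - gate) * p_ptr(word).

    p_ptr(word) sums the pointer weights of every window position holding `word`.
    """
    p_ptr = sum(w for tok, w in zip(window, ptr_weights) if tok == word)
    return gate * p_vocab.get(word, 0.0) + (1.0 - gate) * p_ptr

# Toy values (assumed): a 3-word vocabulary and a 3-token context window.
p_vocab = {"the": 0.5, "bank": 0.3, "<unk>": 0.2}
window = ["fed", "chair", "fed"]
ptr_weights = [0.25, 0.5, 0.25]   # pointer attention over the window, sums to 1
gate = 0.5                        # share of probability mass given to the vocabulary softmax

p_fed = mixture_prob("fed", p_vocab, window, ptr_weights, gate)  # out-of-vocabulary word
p_the = mixture_prob("the", p_vocab, window, ptr_weights, gate)  # in-vocabulary word
```

A rare word such as "fed" receives probability only through the pointer, which is the point of mixing the two components.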

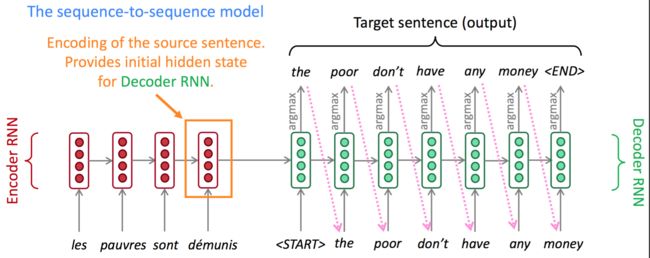

Machine Translation

Neural Machine Translation

Advantages:

- More fluent

- Better use of context

- Better use of phrase similarities

- A single neural network to be optimized end-to-end

- Requires much less human engineering effort

Bottleneck:

Encoding of the source sentence: the encoding needs to capture all information about the source sentence.

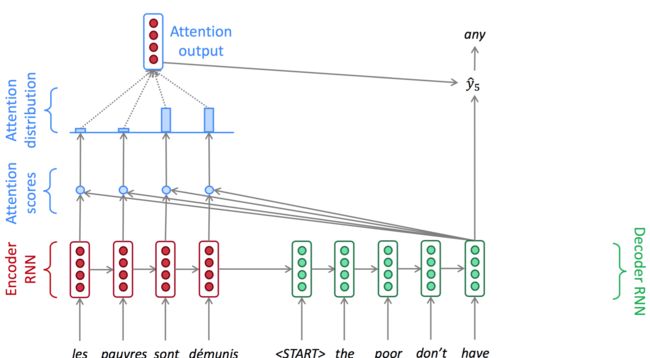

Attentional Model

- In contrast to the Neural MT model above, we don't feed the decoder with all encoder outputs

- Integrate the decoder hidden state with the encoder outputs to get `attention scores`

- Apply `attention scores` to the encoder outputs, generating `context`

- Feed `context` to the decoder input
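
The steps above (scores, context, decoder input) can be sketched in a few lines of plain Python. This is a minimal illustration that assumes dot-product scoring and toy two-dimensional vectors. The function name `attend` and the embedding values are hypothetical and not taken from the project.

```python
import math

def softmax(xs):
    """Numerically stable softmax over a list of floats."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def attend(decoder_hidden, encoder_outputs):
    # 1. Score every encoder output against the decoder hidden state (dot product assumed).
    scores = [sum(h * e for h, e in zip(decoder_hidden, enc)) for enc in encoder_outputs]
    # 2. Normalize the scores into attention weights.
    weights = softmax(scores)
    # 3. Context = attention-weighted sum of the encoder outputs.
    dim = len(encoder_outputs[0])
    context = [sum(w * enc[k] for w, enc in zip(weights, encoder_outputs)) for k in range(dim)]
    return weights, context

encoder_outputs = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]  # one vector per source position
decoder_hidden = [2.0, 0.0]
weights, context = attend(decoder_hidden, encoder_outputs)

# 4. Feed the context to the decoder by concatenating it with the word embedding.
embedded = [0.1, 0.2]                 # hypothetical target-word embedding
decoder_input = embedded + context
```

Positions 0 and 2 match the decoder state equally well, so they receive equal weight and dominate the context vector.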

Intuition on Attention

Fundamental Attentional Model

Bahdanau Attentional Model

- Uses global attention

- The encoder uses a Bi-LSTM or Bi-GRU

- Uses the `concat` method to compute attention

- Decoding order: $h_{t-1} \to a_t \to c_t \to h_t$

- Reference paper: Neural Machine Translation by Jointly Learning to Align and Translate

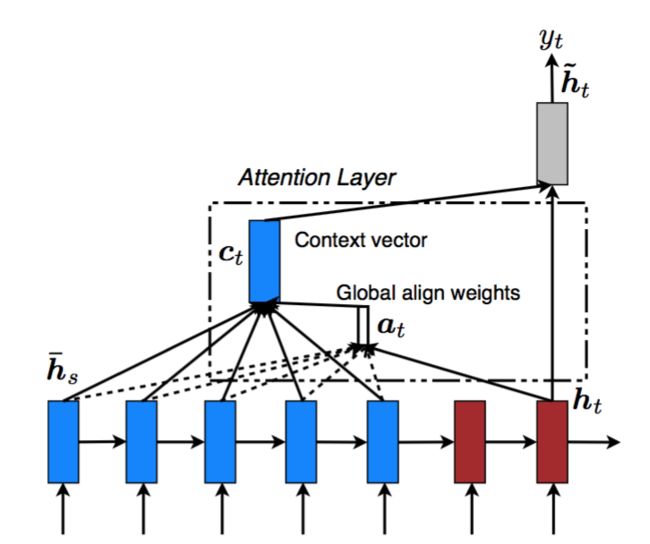

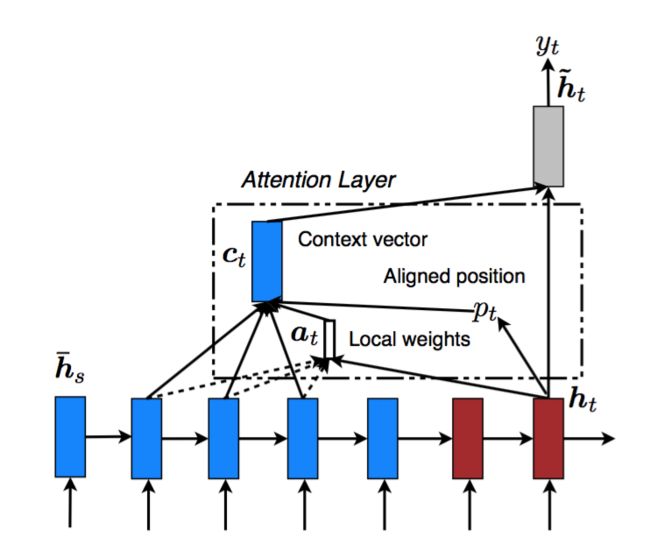

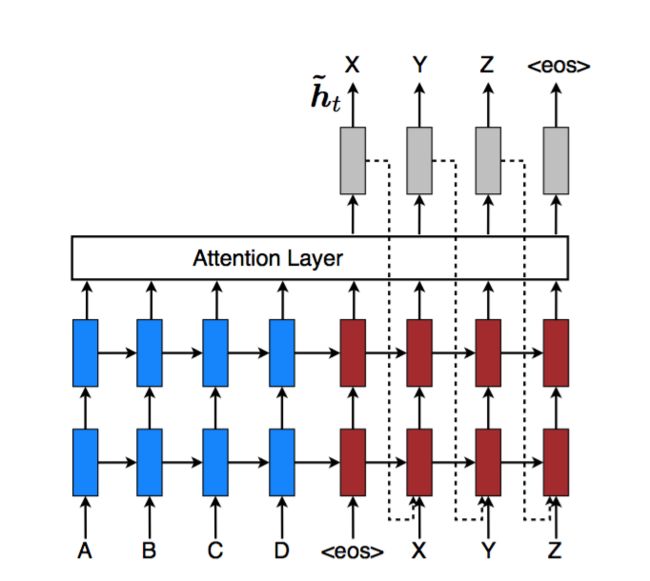

Luong Attentional Model

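- This paper mainly improves on the attentional model of Bahdanau

- Provides both global attention and local attention, with two ways of determining the local position (monotonic alignment or predictive alignment)

- The encoder uses a stacked LSTM or stacked GRU

- Provides three ways to compute attention: `dot`, `general` and `concat`

- The decoding order differs from Bahdanau: $h_t \to a_t \to c_t \to \tilde{h}_t$

- Proposes `input-feed`: the attention generated at the previous step is used as input to the current decoder step

- Reference paper: Effective Approaches to Attention-based Neural Machine Translation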
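
The three scoring functions named above can be sketched as follows. Here `h_t` is the current decoder state and `h_s` a source (encoder) state. The example weights `W_g`, `W_c`, `v_a` and the helper names are assumptions for illustration. In a real model these parameters are learned.

```python
import math

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def matvec(W, x):
    """Multiply matrix W (list of rows) by vector x."""
    return [dot(row, x) for row in W]

def score_dot(h_t, h_s):
    """dot: h_t^T h_s"""
    return dot(h_t, h_s)

def score_general(h_t, h_s, W_a):
    """general: h_t^T W_a h_s"""
    return dot(h_t, matvec(W_a, h_s))

def score_concat(h_t, h_s, W_a, v_a):
    """concat: v_a^T tanh(W_a [h_t; h_s])"""
    hidden = [math.tanh(z) for z in matvec(W_a, h_t + h_s)]
    return dot(v_a, hidden)

h_t = [1.0, 2.0]
h_s = [3.0, 4.0]
W_g = [[1.0, 0.0], [0.0, 1.0]]                         # identity: general reduces to dot
W_c = [[0.5, 0.0, 0.0, 0.0], [0.0, 0.0, 0.0, 0.25]]    # maps the 4-dim concatenation to 2 dims
v_a = [1.0, 1.0]

s_dot = score_dot(h_t, h_s)
s_general = score_general(h_t, h_s, W_g)
s_concat = score_concat(h_t, h_s, W_c, v_a)
```

`dot` needs no extra parameters but requires both states to have the same size, while `general` and `concat` add learned weights.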

Global Attention

Local Attention

- The model first generates an aligned position $p_t$ for each target word at time $t$

- The context vector $c_t$ is then derived as a weighted average over the set of source hidden states within the window $[p_t - D, p_t + D]$

- Monotonic alignment (local-m): $p_t = t$

- Predictive alignment (local-p): $p_t = S \cdot \mathrm{sigmoid}(v_p^\top \tanh(W_p h_t))$
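
A minimal sketch of predictive alignment and the resulting window follows. The zero-valued `W_p`, the 0-based position indexing and the function names are assumptions for illustration. The Gaussian factor with σ = D/2 follows the local-p formulation in the Luong paper, where it multiplies the alignment weights so that positions near $p_t$ are favored.

```python
import math

def predictive_position(h_t, W_p, v_p, source_len):
    """p_t = S * sigmoid(v_p^T tanh(W_p h_t)), a real number in (0, S)."""
    hidden = [math.tanh(sum(w * h for w, h in zip(row, h_t))) for row in W_p]
    z = sum(v * x for v, x in zip(v_p, hidden))
    return source_len / (1.0 + math.exp(-z))

def local_window(p_t, D, source_len):
    """Source positions inside [p_t - D, p_t + D], clipped to the sentence (0-based)."""
    center = int(round(p_t))
    lo = max(0, center - D)
    hi = min(source_len - 1, center + D)
    return list(range(lo, hi + 1))

def gaussian_factor(s, p_t, D):
    """exp(-(s - p_t)^2 / (2 sigma^2)) with sigma = D / 2."""
    sigma = D / 2.0
    return math.exp(-((s - p_t) ** 2) / (2.0 * sigma ** 2))

S, D = 10, 2
W_p = [[0.0, 0.0], [0.0, 0.0]]   # illustrative weights: z = 0, so p_t = S / 2
v_p = [1.0, 1.0]
p_t = predictive_position([0.3, -0.2], W_p, v_p, S)
window = local_window(p_t, D, S)
factors = [gaussian_factor(s, p_t, D) for s in window]
```

For monotonic alignment (local-m) the same window logic applies with `p_t = t`.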

Input Feed

Advanced Attentional Model

Intra-Decoder attention for Summarization

- Reinforcement learning

- Paper: A Deep Reinforced Model for Abstractive Summarization

More advanced attention

- More advanced similarity function than simple inner product

- Temporal attention function, penalizing input tokens that have obtained high attention scores in past decoding steps

- Improves coverage and prevents repeated attention to the same inputs

- Combine softmax’ed weighted hidden states from encoder
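
The temporal penalty can be sketched as below. The exponentiated score of each input token is divided by the sum of its exponentiated scores from all earlier decoding steps, then the result is normalized. The function name `temporal_attention` and the toy scores are assumptions for illustration.

```python
import math

def temporal_attention(scores_history, scores_t):
    """Attention weights at step t, penalizing tokens that scored high in earlier steps.

    scores_history: list of raw score vectors from steps 1..t-1
    scores_t:       raw score vector at step t
    """
    if not scores_history:
        # First decoding step: nothing to penalize, this is a plain softmax.
        adjusted = [math.exp(e) for e in scores_t]
    else:
        adjusted = []
        for i, e in enumerate(scores_t):
            past = sum(math.exp(prev[i]) for prev in scores_history)
            adjusted.append(math.exp(e) / past)
    total = sum(adjusted)
    return [a / total for a in adjusted]

first = temporal_attention([], [0.0, 0.0])
# Token 0 was attended strongly in the previous step, so it is down-weighted now
# even though both tokens have the same raw score.
later = temporal_attention([[2.0, 0.0]], [1.0, 1.0])
```

This discourages the decoder from attending to the same source tokens again, which helps against repeated output.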

Self-attention on decoder

- each hidden state attends to the previous hidden states of the same RNN

- Apply softmax to get an attention distribution over the previous decoder hidden states $h^d_{t'}$ for $t' = 1, \dots, t-1$
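
A minimal sketch of this self-attention on the decoder is shown below. It assumes a bilinear score between the current state and each earlier state and a zero context at the first step. The matrix `W` and the function name `intra_decoder_context` are illustrative assumptions.

```python
import math

def intra_decoder_context(decoder_states, W):
    """Context for the latest decoder state, attending over all earlier decoder states."""
    *previous, current = decoder_states
    dim = len(current)
    if not previous:
        # Nothing has been generated yet, so the context is a zero vector.
        return [0.0] * dim
    # Bilinear score: current^T W previous_state
    projected = [sum(current[i] * W[i][j] for i in range(dim)) for j in range(dim)]
    scores = [sum(p * h for p, h in zip(projected, prev)) for prev in previous]
    # Softmax over the previous steps t' = 1, ..., t-1
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    weights = [e / total for e in exps]
    return [sum(w * prev[k] for w, prev in zip(weights, previous)) for k in range(dim)]

W = [[1.0, 0.0], [0.0, 1.0]]   # illustrative weights (identity)
ctx_first = intra_decoder_context([[1.0, 0.0]], W)
ctx_third = intra_decoder_context([[1.0, 0.0], [0.0, 1.0], [1.0, 0.0]], W)
```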

Hybrid NMT

- When applied to more languages, the unknown-word tag (`<unk>`) may occur more often

- A Char-LSTM is used to translate the `<unk>` tag char-by-char

Results

DataSet

English - Chinese

Small sample: 10k sentences, 3k English words, 2.9k Chinese words

Mary came in. 瑪麗進來了。

Mary is tall. 瑪麗很高。

May I go now? 我现在能去了吗?

Move quietly. 轻轻地移动。

My eyes hurt. 我的眼睛痛。

No one knows. 沒有人知道。

Nobody asked. 没人问过。

Basic Attentional Model

Train Loss

0m 30s (- 50m 7s) (1000 1%) 4.6231

1m 4s (- 52m 17s) (2000 2%) 4.3355

1m 39s (- 53m 35s) (3000 3%) 4.0968

2m 15s (- 54m 4s) (4000 4%) 3.8949

2m 51s (- 54m 9s) (5000 5%) 3.7295

3m 26s (- 53m 52s) (6000 6%) 3.6388

4m 1s (- 53m 34s) (7000 7%) 3.5656

4m 36s (- 53m 4s) (8000 8%) 3.4461

5m 12s (- 52m 39s) (9000 9%) 3.3745

5m 47s (- 52m 11s) (10000 10%) 3.2642

6m 23s (- 51m 45s) (11000 11%) 3.1839

6m 59s (- 51m 13s) (12000 12%) 3.0886

7m 35s (- 50m 46s) (13000 13%) 3.0016

8m 10s (- 50m 14s) (14000 14%) 2.9388

8m 46s (- 49m 44s) (15000 15%) 2.8103

9m 22s (- 49m 10s) (16000 16%) 2.7448

9m 56s (- 48m 30s) (17000 17%) 2.7605

10m 30s (- 47m 52s) (18000 18%) 2.6554

11m 7s (- 47m 24s) (19000 19%) 2.6391

11m 43s (- 46m 54s) (20000 20%) 2.5279

12m 19s (- 46m 22s) (21000 21%) 2.4900

12m 56s (- 45m 54s) (22000 22%) 2.4153

13m 33s (- 45m 22s) (23000 23%) 2.3262

14m 10s (- 44m 52s) (24000 24%) 2.3369

14m 46s (- 44m 19s) (25000 25%) 2.3140

15m 20s (- 43m 38s) (26000 26%) 2.2905

15m 55s (- 43m 4s) (27000 27%) 2.1696

16m 32s (- 42m 31s) (28000 28%) 2.1323

17m 7s (- 41m 56s) (29000 28%) 2.0508

17m 45s (- 41m 25s) (30000 30%) 2.0222

18m 21s (- 40m 51s) (31000 31%) 1.9533

18m 54s (- 40m 10s) (32000 32%) 1.9775

19m 29s (- 39m 34s) (33000 33%) 1.9728

20m 5s (- 38m 59s) (34000 34%) 1.8780

20m 41s (- 38m 25s) (35000 35%) 1.8047

21m 17s (- 37m 51s) (36000 36%) 1.8641

21m 54s (- 37m 18s) (37000 37%) 1.7819

22m 31s (- 36m 45s) (38000 38%) 1.7386

23m 8s (- 36m 11s) (39000 39%) 1.6892

23m 44s (- 35m 36s) (40000 40%) 1.6438

24m 21s (- 35m 3s) (41000 41%) 1.5385

24m 58s (- 34m 29s) (42000 42%) 1.6697

25m 36s (- 33m 56s) (43000 43%) 1.5461

26m 11s (- 33m 20s) (44000 44%) 1.5460

26m 46s (- 32m 43s) (45000 45%) 1.5115

27m 23s (- 32m 9s) (46000 46%) 1.5514

27m 59s (- 31m 34s) (47000 47%) 1.4065

28m 35s (- 30m 58s) (48000 48%) 1.4017

29m 12s (- 30m 23s) (49000 49%) 1.3494

29m 43s (- 29m 43s) (50000 50%) 1.3298

30m 4s (- 28m 54s) (51000 51%) 1.3180

30m 40s (- 28m 19s) (52000 52%) 1.3411

31m 17s (- 27m 45s) (53000 53%) 1.3513

31m 53s (- 27m 10s) (54000 54%) 1.1807

32m 30s (- 26m 35s) (55000 55%) 1.2839

33m 6s (- 26m 0s) (56000 56%) 1.2610

33m 40s (- 25m 24s) (57000 56%) 1.1819

34m 13s (- 24m 46s) (58000 57%) 1.0888

34m 45s (- 24m 9s) (59000 59%) 1.1754

35m 17s (- 23m 31s) (60000 60%) 1.1238

35m 50s (- 22m 55s) (61000 61%) 1.1342

36m 23s (- 22m 18s) (62000 62%) 1.1035

36m 55s (- 21m 41s) (63000 63%) 1.1070

37m 23s (- 21m 1s) (64000 64%) 1.0512

37m 43s (- 20m 18s) (65000 65%) 1.0274

38m 5s (- 19m 37s) (66000 66%) 1.0114

38m 25s (- 18m 55s) (67000 67%) 0.9937

38m 46s (- 18m 14s) (68000 68%) 0.9291

39m 8s (- 17m 35s) (69000 69%) 0.9563

39m 33s (- 16m 57s) (70000 70%) 0.9308

40m 0s (- 16m 20s) (71000 71%) 0.9932

40m 28s (- 15m 44s) (72000 72%) 0.9481

40m 53s (- 15m 7s) (73000 73%) 0.9157

41m 15s (- 14m 29s) (74000 74%) 0.8805

41m 32s (- 13m 50s) (75000 75%) 0.9156

41m 54s (- 13m 14s) (76000 76%) 0.8637

42m 25s (- 12m 40s) (77000 77%) 0.8662

42m 57s (- 12m 6s) (78000 78%) 0.8124

43m 29s (- 11m 33s) (79000 79%) 0.8772

44m 0s (- 11m 0s) (80000 80%) 0.8268

44m 28s (- 10m 25s) (81000 81%) 0.8146

44m 56s (- 9m 51s) (82000 82%) 0.8086

45m 13s (- 9m 15s) (83000 83%) 0.7818

45m 41s (- 8m 42s) (84000 84%) 0.7374

46m 8s (- 8m 8s) (85000 85%) 0.7332

46m 38s (- 7m 35s) (86000 86%) 0.8005

47m 1s (- 7m 1s) (87000 87%) 0.7465

47m 30s (- 6m 28s) (88000 88%) 0.8085

47m 47s (- 5m 54s) (89000 89%) 0.7231

48m 13s (- 5m 21s) (90000 90%) 0.7111

48m 34s (- 4m 48s) (91000 91%) 0.7396

49m 3s (- 4m 15s) (92000 92%) 0.6561

49m 34s (- 3m 43s) (93000 93%) 0.7108

50m 4s (- 3m 11s) (94000 94%) 0.6574

50m 31s (- 2m 39s) (95000 95%) 0.6983

50m 56s (- 2m 7s) (96000 96%) 0.7017

51m 28s (- 1m 35s) (97000 97%) 0.6549

52m 0s (- 1m 3s) (98000 98%) 0.5979

52m 32s (- 0m 31s) (99000 99%) 0.6844

53m 2s (- 0m 0s) (100000 100%) 0.6341

Sample

> 我藏在桌子底下

= i hid under the table .

< i am the the the table

> 我不想看起來傻

= i don t want to look stupid .

< i don t want to look .

> 把鹽遞給我好嗎

= pass me the salt would you ?

< pass me the salt would ?

> 新年快樂

= happy new year !

< happy new year ! !

> 她來這裡放鬆的嗎

= did she come here to relax ?

< did she come on the watch ?

> 现在道歉也迟了

= it s too late to apologize .

< it s too late apologize.

> 让我们回家吧

= let us go home .

< let us go home .

> 它真的很便宜

= it is really cheap .

< it really is cheap .

> 社區是安靜的

= the neighborhood was silent .

< the neighborhood was silent .

> 你可以随便去哪儿

= you may go anywhere .

< you may go anywhere .

Luong Attentional Model (2-Layer GRU, Global Attention, Dot, Teacher Forcing)

Train Loss

0m 34s (- 1427m 31s) (20 0%) 5.8900

1m 9s (- 1437m 13s) (40 0%) 4.4671

1m 43s (- 1438m 23s) (60 0%) 4.2377

2m 19s (- 1455m 7s) (80 0%) 4.0304

2m 55s (- 1461m 40s) (100 0%) 3.8420

3m 31s (- 1466m 16s) (120 0%) 3.7378

4m 7s (- 1469m 7s) (140 0%) 3.5625

4m 42s (- 1466m 36s) (160 0%) 3.3969

5m 18s (- 1468m 55s) (180 0%) 3.3081

5m 52s (- 1462m 29s) (200 0%) 3.1576

6m 27s (- 1462m 18s) (220 0%) 3.0534

7m 3s (- 1463m 35s) (240 0%) 2.9494

7m 39s (- 1465m 15s) (260 0%) 2.8771

8m 16s (- 1469m 28s) (280 0%) 2.7319

8m 53s (- 1473m 20s) (300 0%) 2.6896

9m 30s (- 1475m 46s) (320 0%) 2.6084

10m 6s (- 1477m 14s) (340 0%) 2.5069

10m 42s (- 1476m 17s) (360 0%) 2.4839

11m 17s (- 1474m 43s) (380 0%) 2.4190

11m 54s (- 1476m 36s) (400 0%) 2.3489

12m 30s (- 1476m 46s) (420 0%) 2.2978

13m 6s (- 1476m 9s) (440 0%) 2.1818

13m 42s (- 1476m 47s) (460 0%) 2.1761

14m 19s (- 1477m 54s) (480 0%) 2.0791

14m 55s (- 1478m 11s) (500 1%) 2.0150

15m 32s (- 1478m 16s) (520 1%) 1.9801

16m 9s (- 1479m 33s) (540 1%) 1.9012

16m 45s (- 1479m 33s) (560 1%) 1.8956

17m 22s (- 1479m 52s) (580 1%) 1.8302

17m 58s (- 1480m 34s) (600 1%) 1.7954

18m 35s (- 1480m 41s) (620 1%) 1.7853

19m 11s (- 1480m 26s) (640 1%) 1.6581

19m 48s (- 1480m 15s) (660 1%) 1.6432

20m 24s (- 1480m 16s) (680 1%) 1.6289

21m 0s (- 1479m 48s) (700 1%) 1.5508

21m 36s (- 1478m 49s) (720 1%) 1.5253

22m 12s (- 1478m 34s) (740 1%) 1.5154

22m 48s (- 1478m 9s) (760 1%) 1.4367

23m 25s (- 1478m 21s) (780 1%) 1.3914

24m 2s (- 1478m 6s) (800 1%) 1.4067

24m 38s (- 1477m 26s) (820 1%) 1.3517

25m 14s (- 1477m 25s) (840 1%) 1.2992

25m 51s (- 1477m 10s) (860 1%) 1.2663

26m 27s (- 1476m 42s) (880 1%) 1.2122

27m 3s (- 1475m 50s) (900 1%) 1.2101

27m 38s (- 1475m 0s) (920 1%) 1.1728

28m 15s (- 1474m 43s) (940 1%) 1.1274

28m 51s (- 1474m 27s) (960 1%) 1.1317

29m 28s (- 1474m 22s) (980 1%) 1.0582

30m 4s (- 1473m 33s) (1000 2%) 1.0331

30m 41s (- 1473m 26s) (1020 2%) 1.0256

31m 18s (- 1473m 31s) (1040 2%) 0.9916

31m 54s (- 1473m 27s) (1060 2%) 0.9797

32m 31s (- 1473m 12s) (1080 2%) 0.9083

33m 8s (- 1473m 0s) (1100 2%) 0.8935

33m 44s (- 1472m 20s) (1120 2%) 0.9068

34m 21s (- 1472m 24s) (1140 2%) 0.8751

34m 56s (- 1471m 26s) (1160 2%) 0.8495

35m 33s (- 1471m 22s) (1180 2%) 0.8085

36m 10s (- 1471m 27s) (1200 2%) 0.8214

36m 45s (- 1469m 47s) (1220 2%) 0.8005

37m 21s (- 1469m 13s) (1240 2%) 0.7534

37m 59s (- 1469m 21s) (1260 2%) 0.7534

38m 34s (- 1468m 33s) (1280 2%) 0.7450

39m 11s (- 1468m 27s) (1300 2%) 0.7239

39m 48s (- 1468m 8s) (1320 2%) 0.6771

40m 25s (- 1467m 56s) (1340 2%) 0.6904

41m 1s (- 1467m 25s) (1360 2%) 0.6561

41m 38s (- 1466m 54s) (1380 2%) 0.6191

42m 14s (- 1466m 27s) (1400 2%) 0.5595

42m 50s (- 1465m 38s) (1420 2%) 0.5670

43m 26s (- 1465m 10s) (1440 2%) 0.5784

44m 2s (- 1464m 24s) (1460 2%) 0.5575

44m 39s (- 1464m 2s) (1480 2%) 0.5276

45m 12s (- 1461m 53s) (1500 3%) 0.5492

45m 48s (- 1461m 4s) (1520 3%) 0.5415

46m 25s (- 1460m 50s) (1540 3%) 0.5114

47m 2s (- 1460m 28s) (1560 3%) 0.4885

47m 38s (- 1459m 45s) (1580 3%) 0.4923

48m 14s (- 1459m 14s) (1600 3%) 0.4519

48m 51s (- 1458m 54s) (1620 3%) 0.4560

49m 27s (- 1458m 22s) (1640 3%) 0.4397

50m 3s (- 1457m 43s) (1660 3%) 0.4215

50m 38s (- 1456m 30s) (1680 3%) 0.4191

51m 13s (- 1455m 31s) (1700 3%) 0.4042

51m 49s (- 1454m 31s) (1720 3%) 0.4179

52m 25s (- 1453m 54s) (1740 3%) 0.3818

53m 1s (- 1453m 30s) (1760 3%) 0.3581

53m 38s (- 1453m 9s) (1780 3%) 0.3668

54m 15s (- 1453m 2s) (1800 3%) 0.3531

54m 53s (- 1452m 54s) (1820 3%) 0.3542

55m 30s (- 1452m 41s) (1840 3%) 0.3418

56m 6s (- 1452m 20s) (1860 3%) 0.3171

56m 43s (- 1451m 48s) (1880 3%) 0.3197

57m 19s (- 1451m 23s) (1900 3%) 0.3101

57m 55s (- 1450m 37s) (1920 3%) 0.3008

58m 32s (- 1450m 6s) (1940 3%) 0.2947

59m 8s (- 1449m 38s) (1960 3%) 0.2741

59m 45s (- 1449m 13s) (1980 3%) 0.2970

60m 21s (- 1448m 40s) (2000 4%) 0.2850

60m 57s (- 1447m 58s) (2020 4%) 0.2623

Sample

> 他騎腳踏車去

= he went by bicycle .

< he went by bicycle .

> 雞肉還不夠熟

= the chicken is undercooked .

< the chicken is undercooked .

> 她看起来很年轻

= she looks young .

< she looks very young .

> 别让汤姆走了

= don t let tom leave .

< don t let tom leave .

> 終於星期五了

= finally it s friday .

< finally it s friday .

> 也许下一次吧

= maybe some other time .

< maybe it a piece s time .

> 你真壞

= you re so bad .

< you re really terrible .

> 坦率地说 他错了

= frankly speaking he is wrong .

< frankly speaking he s wrong .

> 我是个高中生

= i m a high school student .

< i m a high school student .