Jia Zhigang OpenCV 3.2 Image Segmentation in Practice: Study Notes

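OpenCV image segmentation materials: the complete set of materials for Jia Zhigang's hands-on OpenCV image segmentation video course, including the videos, the accompanying slides as PDF, the source code, and the images used in the examples.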

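Contents:

Example 1: Reading a single JPG image (environment test)

Example 2: Classifying randomly generated data with KMeans

Example 3: KMeans image segmentation

Example 4: GMM (Gaussian mixture model) training and prediction on sample data

Example 5: GMM (Gaussian mixture model) image segmentation

Example 6: Separating and counting touching objects with a distance-transform-based watershed

Example 7: Watershed-based image segmentation

Example 8: GrabCut principle and demo application

Example 9: K-Means ID photo background replacement

Example 10: Green-screen video background replacement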

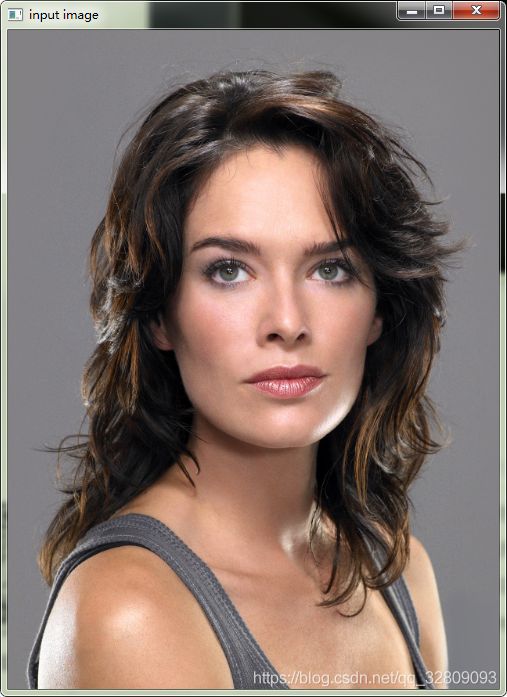

Example 1: Reading a single JPG image (environment test)

#include

#include

using namespace cv;

int main(int argc, char** argv)

{

Mat src = imread("toux.jpg");//读取图像

if (src.empty())

{

printf("could not load image..\n");

return -1;

}

namedWindow("input image", CV_WINDOW_AUTOSIZE);//自动调整显示窗口大小

imshow("input image", src);//显示图像

waitKey(0);//等待

return 0;

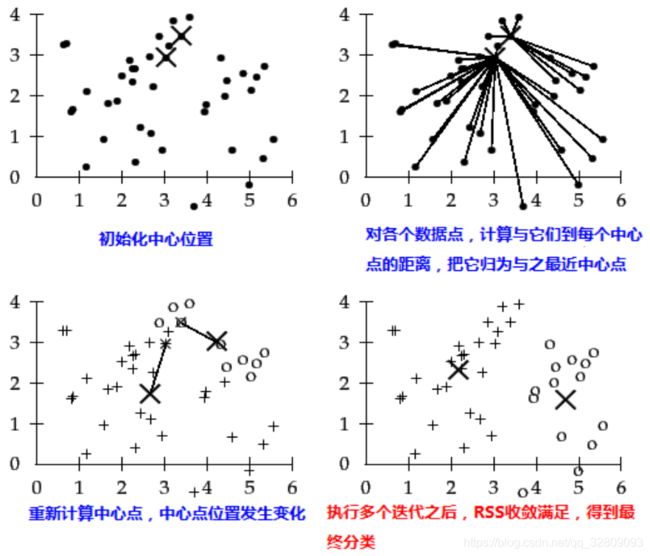

} KMeans方法概述

1. 无监督学习方法(整个过程不需要人为干预)

2. 分类问题,输入分类数目,初始化中心位置

3. 硬分类方法,以距离度量(离那个中心点近就被划分为哪一类)

4. 迭代分类为聚类(不断迭代寻找最优中心点)

基本流程:

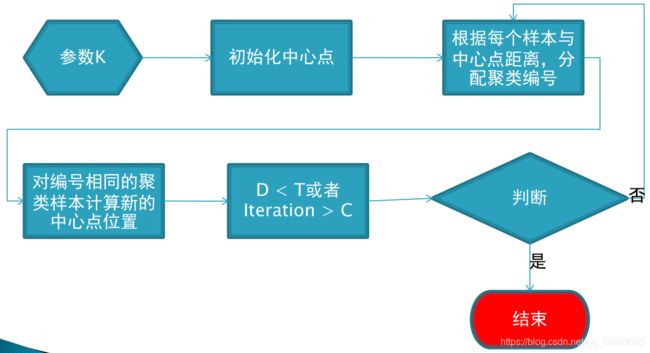

相关API:

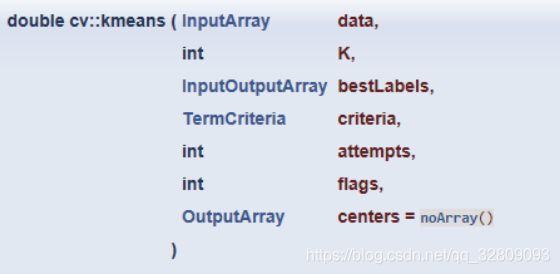

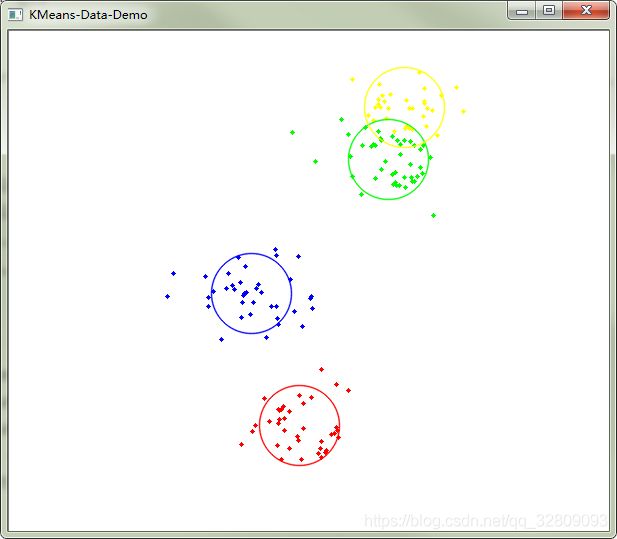
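The four properties above (hard assignment to the nearest center, then iterative re-centering) can be sketched without OpenCV. Below is a minimal plain C++ version of Lloyd's k-means on 2-D points. It assumes the caller supplies the initial centers. `Pt`, `dist2` and `kmeansLloyd` are hypothetical names for this sketch. It is not OpenCV's `kmeans` implementation, which also runs several attempts and supports k-means++ seeding (`KMEANS_PP_CENTERS`).

```cpp
#include <cassert>
#include <cstddef>
#include <limits>
#include <vector>

// A 2-D sample point (hypothetical helper type for this sketch).
struct Pt { double x, y; };

// Squared Euclidean distance between two points.
static double dist2(const Pt& a, const Pt& b) {
    const double dx = a.x - b.x, dy = a.y - b.y;
    return dx * dx + dy * dy;
}

// Lloyd-style k-means: alternates (1) hard assignment of each point to its
// nearest center and (2) moving each center to the mean of its points, until
// no label changes or maxIter is reached. Returns the labels; `centers`
// (caller-supplied initial guesses) is updated in place.
std::vector<int> kmeansLloyd(const std::vector<Pt>& pts, std::vector<Pt>& centers, int maxIter) {
    assert(!centers.empty());
    std::vector<int> labels(pts.size(), -1);
    for (int iter = 0; iter < maxIter; ++iter) {
        bool changed = false;
        // Assignment step: each point goes to the nearest center.
        for (std::size_t i = 0; i < pts.size(); ++i) {
            int best = 0;
            double bestD = std::numeric_limits<double>::max();
            for (std::size_t c = 0; c < centers.size(); ++c) {
                const double d = dist2(pts[i], centers[c]);
                if (d < bestD) { bestD = d; best = static_cast<int>(c); }
            }
            if (labels[i] != best) { labels[i] = best; changed = true; }
        }
        if (!changed) break; // converged: no point switched cluster
        // Update step: each center moves to the mean of its member points.
        std::vector<Pt> sum(centers.size(), Pt{0.0, 0.0});
        std::vector<int> count(centers.size(), 0);
        for (std::size_t i = 0; i < pts.size(); ++i) {
            sum[labels[i]].x += pts[i].x;
            sum[labels[i]].y += pts[i].y;
            ++count[labels[i]];
        }
        for (std::size_t c = 0; c < centers.size(); ++c)
            if (count[c] > 0) // an empty cluster keeps its old center
                centers[c] = Pt{sum[c].x / count[c], sum[c].y / count[c]};
    }
    return labels;
}
```

When the groups are well separated, the labels stop changing after a single update step, and the loop exits early. OpenCV's `kmeans` exposes comparable stopping controls through `TermCriteria`.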

Example 2: Classifying randomly generated data with KMeans

#include

#include

using namespace cv;

using namespace std;

int main(int argc, char** argv) {

Mat img(500, 600, CV_8UC3);//定义一张图

RNG rng(12345);//定义随机数

//不同类定义为不同颜色

Scalar colorTab[] = {

Scalar(0, 0, 255),

Scalar(0, 255, 0),

Scalar(255, 0, 0),

Scalar(0, 255, 255),

Scalar(255, 0, 255)

};

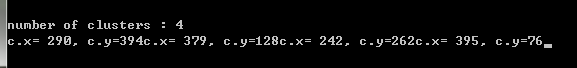

int numCluster = rng.uniform(2, 5);//定义分类种类数量块

printf("number of clusters : %d\n", numCluster);

//设置从原图像中抽取多少个数据点

int sampleCount = rng.uniform(5, 1000);

Mat points(sampleCount, 1, CV_32FC2);

Mat labels;

Mat centers;

// 生成随机数

for (int k = 0; k < numCluster; k++) {

Point center;

center.x = rng.uniform(0, img.cols);

center.y = rng.uniform(0, img.rows);

//得到不同小块

Mat pointChunk = points.rowRange(k*sampleCount / numCluster,

k == numCluster - 1 ? sampleCount : (k + 1)*sampleCount / numCluster);

//用随机数对小块点进行填充

rng.fill(pointChunk, RNG::NORMAL, Scalar(center.x, center.y), Scalar(img.cols*0.05, img.rows*0.05));

}

randShuffle(points, 1, &rng);

// 使用KMeans

kmeans(points, numCluster, labels, TermCriteria(TermCriteria::EPS + TermCriteria::COUNT, 10, 0.1), 3, KMEANS_PP_CENTERS, centers);

// 用不同颜色显示分类

img = Scalar::all(255);

for (int i = 0; i < sampleCount; i++) {

int index = labels.at(i);

Point p = points.at(i);

circle(img, p, 2, colorTab[index], -1, 8);

}

// 每个聚类的中心来绘制圆

for (int i = 0; i < centers.rows; i++) {

int x = centers.at(i, 0);

int y = centers.at(i, 1);

printf("c.x= %d, c.y=%d", x, y);

circle(img, Point(x, y), 40, colorTab[i], 1, LINE_AA);

}

imshow("KMeans-Data-Demo", img);

waitKey(0);

return 0;

} 可见,随机生成的数据被分成了四块,每块的中心坐标如下:

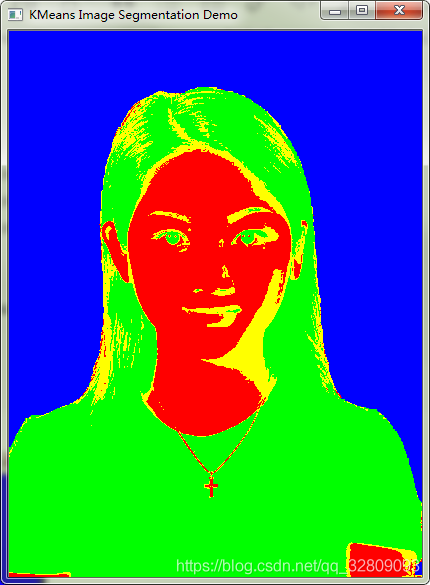

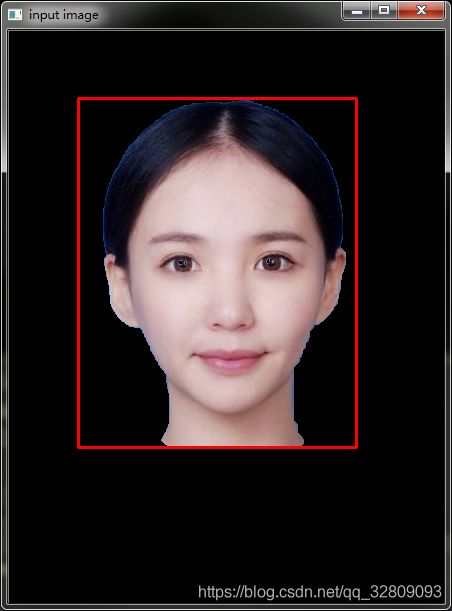

Example 3: KMeans image segmentation

```cpp
#include <opencv2/opencv.hpp>
#include <cstdio>

using namespace cv;
using namespace std;

int main(int argc, char** argv) {
    Mat src = imread("toux.jpg");
    if (src.empty()) {
        printf("could not load image...\n");
        return -1;
    }
    namedWindow("input image", WINDOW_AUTOSIZE);
    imshow("input image", src);

    Scalar colorTab[] = {
        Scalar(0, 0, 255),
        Scalar(0, 255, 0),
        Scalar(255, 0, 0),
        Scalar(0, 255, 255),
        Scalar(255, 0, 255)
    };

    int width = src.cols;      // image columns
    int height = src.rows;     // image rows
    int dims = src.channels(); // number of channels (3 for a BGR image)

    // initialization
    int sampleCount = width * height; // one sample per pixel
    int clusterCount = 4;             // number of segments
    Mat points(sampleCount, dims, CV_32F, Scalar(10)); // samples: one row per pixel, one column per channel
    Mat labels;
    Mat centers(clusterCount, 1, points.type()); // cluster centers (float); kmeans reallocates it as clusterCount x dims

    // copy the BGR pixel values into the sample matrix (row-major pixel order)
    int index = 0;
    for (int row = 0; row < height; row++) {
        for (int col = 0; col < width; col++) {
            index = row * width + col;
            Vec3b bgr = src.at<Vec3b>(row, col);
            points.at<float>(index, 0) = static_cast<float>(bgr[0]); // channel 0 (B)
            points.at<float>(index, 1) = static_cast<float>(bgr[1]); // channel 1 (G)
            points.at<float>(index, 2) = static_cast<float>(bgr[2]); // channel 2 (R)
        }
    }

    // run K-Means
    TermCriteria criteria = TermCriteria(TermCriteria::EPS + TermCriteria::COUNT, 10, 0.1);
    kmeans(points, clusterCount, labels, criteria, 3, KMEANS_PP_CENTERS, centers); // 3 attempts, best kept

    // show the segmentation: paint each pixel with its cluster's color
    Mat result = Mat::zeros(src.size(), src.type());
    for (int row = 0; row < height; row++) {
        for (int col = 0; col < width; col++) {
            index = row * width + col;
            int label = labels.at<int>(index, 0);
            result.at<Vec3b>(row, col)[0] = saturate_cast<uchar>(colorTab[label][0]);
            result.at<Vec3b>(row, col)[1] = saturate_cast<uchar>(colorTab[label][1]);
            result.at<Vec3b>(row, col)[2] = saturate_cast<uchar>(colorTab[label][2]);
        }
    }

    // each center is a mean color (B, G, R), not a pixel position
    for (int i = 0; i < centers.rows; i++) {
        printf("center %d = B: %.1f, G: %.1f, R: %.1f\n", i,
               centers.at<float>(i, 0), centers.at<float>(i, 1), centers.at<float>(i, 2));
    }

    imshow("KMeans Image Segmentation Demo", result);
    waitKey(0);
    return 0;
}
```

Note: the cluster centers are positions in the image's BGR color space (mean colors), not pixel coordinates.

Example 4: GMM (Gaussian mixture model) training and prediction on sample data

#include

#include

using namespace cv;

using namespace cv::ml;

using namespace std;

int main(int argc, char** argv) {

Mat img = Mat::zeros(500, 500, CV_8UC3);

RNG rng(12345);

Scalar colorTab[] = {

Scalar(0, 0, 255),

Scalar(0, 255, 0),

Scalar(255, 0, 0),

Scalar(0, 255, 255),

Scalar(255, 0, 255)

};

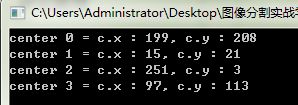

int numCluster = rng.uniform(2, 5);

printf("number of clusters : %d\n", numCluster);

int sampleCount = rng.uniform(5, 1000);

Mat points(sampleCount, 2, CV_32FC1);

Mat labels;

// 生成随机数

for (int k = 0; k < numCluster; k++) {

Point center;

center.x = rng.uniform(0, img.cols);

center.y = rng.uniform(0, img.rows);

Mat pointChunk = points.rowRange(k*sampleCount / numCluster,

k == numCluster - 1 ? sampleCount : (k + 1)*sampleCount / numCluster);

rng.fill(pointChunk, RNG::NORMAL, Scalar(center.x, center.y), Scalar(img.cols*0.05, img.rows*0.05));

}

randShuffle(points, 1, &rng);

Ptr em_model = EM::create();

em_model->setClustersNumber(numCluster);

em_model->setCovarianceMatrixType(EM::COV_MAT_SPHERICAL);//协方差矩阵

//训练次数设置为100

em_model->setTermCriteria(TermCriteria(TermCriteria::EPS + TermCriteria::COUNT, 100, 0.1));

em_model->trainEM(points, noArray(), labels, noArray());

// classify every image pixels

Mat sample(1, 2, CV_32FC1);

for (int row = 0; row < img.rows; row++) {

for (int col = 0; col < img.cols; col++) {

sample.at(0) = (float)col;

sample.at(1) = (float)row;

int response = cvRound(em_model->predict2(sample, noArray())[1]);

Scalar c = colorTab[response];

circle(img, Point(col, row), 1, c*0.75, -1);

}

}

// draw the clusters

for (int i = 0; i < sampleCount; i++) {

Point p(cvRound(points.at(i, 0)), points.at(i, 1));

circle(img, p, 1, colorTab[labels.at(i)], -1);

}

imshow("GMM-EM Demo", img);

waitKey(0);

return 0;

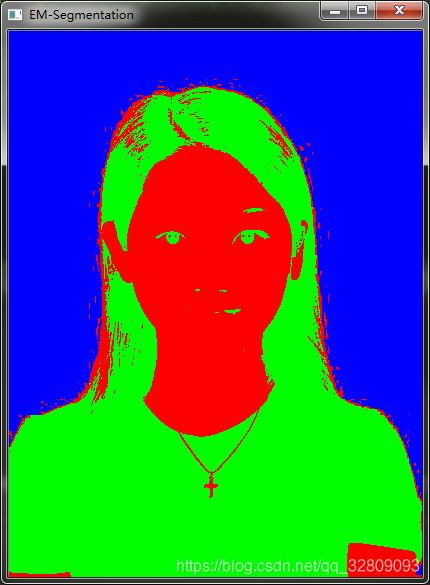

} 实例5:GMM(高斯混合模型)图像分割

```cpp
#include <opencv2/opencv.hpp>
#include <opencv2/ml.hpp>
#include <cstdio>

using namespace cv;
using namespace cv::ml;
using namespace std;

int main(int argc, char** argv) {
    Mat src = imread("toux.jpg");
    if (src.empty()) {
        printf("could not load image...\n");
        return -1;
    }
    namedWindow("input image", WINDOW_AUTOSIZE);
    imshow("input image", src);

    // initialization
    int numCluster = 3;
    const Scalar colors[] = {
        Scalar(255, 0, 0),
        Scalar(0, 255, 0),
        Scalar(0, 0, 255),
        Scalar(255, 255, 0)
    };
    int width = src.cols;
    int height = src.rows;
    int dims = src.channels();
    int nsamples = width * height;
    Mat points(nsamples, dims, CV_64FC1); // one row per pixel, one column per channel
    Mat labels;
    Mat result = Mat::zeros(src.size(), CV_8UC3);

    // convert the image's BGR pixel data into sample rows
    int index = 0;
    for (int row = 0; row < height; row++) {
        for (int col = 0; col < width; col++) {
            index = row * width + col;
            Vec3b bgr = src.at<Vec3b>(row, col);
            points.at<double>(index, 0) = static_cast<double>(bgr[0]);
            points.at<double>(index, 1) = static_cast<double>(bgr[1]);
            points.at<double>(index, 2) = static_cast<double>(bgr[2]);
        }
    }

    // EM cluster training (timing starts here so it includes training)
    double time = (double)getTickCount();
    Ptr<EM> em_model = EM::create();
    em_model->setClustersNumber(numCluster);
    em_model->setCovarianceMatrixType(EM::COV_MAT_SPHERICAL); // covariance matrix type
    // stop after at most 100 iterations or when the change drops below 0.1
    em_model->setTermCriteria(TermCriteria(TermCriteria::EPS + TermCriteria::COUNT, 100, 0.1));
    em_model->trainEM(points, noArray(), labels, noArray());

    // color every pixel by its label and display
    Mat sample(dims, 1, CV_64FC1); // only used by the predict2 alternative below
    for (int row = 0; row < height; row++) {
        for (int col = 0; col < width; col++) {
            index = row * width + col;
            int label = labels.at<int>(index, 0);
            // Alternative (much slower, one predict2 call per pixel):
            // Vec3b px = src.at<Vec3b>(row, col);
            // sample.at<double>(0) = px[0];
            // sample.at<double>(1) = px[1];
            // sample.at<double>(2) = px[2];
            // label = cvRound(em_model->predict2(sample, noArray())[1]);
            Scalar c = colors[label];
            result.at<Vec3b>(row, col)[0] = saturate_cast<uchar>(c[0]);
            result.at<Vec3b>(row, col)[1] = saturate_cast<uchar>(c[1]);
            result.at<Vec3b>(row, col)[2] = saturate_cast<uchar>(c[2]);
        }
    }
    printf("execution time(ms) : %.2f\n", (getTickCount() - time) / getTickFrequency() * 1000);

    imshow("EM-Segmentation", result);
    waitKey(0);
    return 0;
}
```

As the timing shows, GMM takes a long time to run, so it is not suitable for real-time image processing in production.

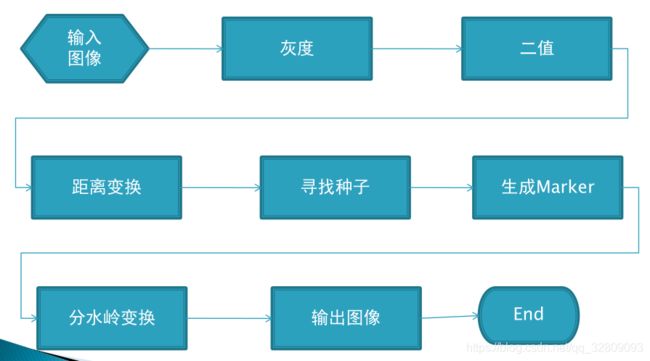
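Part of the slowness is visible in the structure of EM itself. Every iteration evaluates every component's density at every sample (the E-step, a soft assignment). It then re-estimates weights, means and variances from those soft counts (the M-step). For an image, the sample count is width * height, and Example 5 repeats this for up to 100 iterations. The sketch below is a minimal 1-D version in plain C++. The names `Gmm1D`, `trainGmm1D` and `predictGmm1D` are hypothetical. It is not OpenCV's `EM` class, and it has no guard against numerical underflow.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// Parameters of a 1-D Gaussian mixture (hypothetical helper type).
struct Gmm1D {
    std::vector<double> weight, mean, var;
};

// Gaussian density N(x; mu, var).
static double gaussPdf(double x, double mu, double var) {
    const double kPi = 3.14159265358979323846;
    const double d = x - mu;
    return std::exp(-0.5 * d * d / var) / std::sqrt(2.0 * kPi * var);
}

// Runs `iters` EM iterations on 1-D data, starting from the parameters in `g`.
void trainGmm1D(const std::vector<double>& x, Gmm1D& g, int iters) {
    const std::size_t n = x.size(), k = g.mean.size();
    assert(g.weight.size() == k && g.var.size() == k);
    std::vector<std::vector<double>> resp(n, std::vector<double>(k));
    for (int it = 0; it < iters; ++it) {
        // E-step: responsibility of component j for sample i (n * k densities).
        for (std::size_t i = 0; i < n; ++i) {
            double total = 0.0;
            for (std::size_t j = 0; j < k; ++j) {
                resp[i][j] = g.weight[j] * gaussPdf(x[i], g.mean[j], g.var[j]);
                total += resp[i][j];
            }
            for (std::size_t j = 0; j < k; ++j) resp[i][j] /= total;
        }
        // M-step: re-estimate weight, mean and variance from the soft counts.
        for (std::size_t j = 0; j < k; ++j) {
            double nj = 0.0, sum = 0.0;
            for (std::size_t i = 0; i < n; ++i) { nj += resp[i][j]; sum += resp[i][j] * x[i]; }
            const double mu = sum / nj;
            double sq = 0.0;
            for (std::size_t i = 0; i < n; ++i) sq += resp[i][j] * (x[i] - mu) * (x[i] - mu);
            g.weight[j] = nj / static_cast<double>(n);
            g.mean[j] = mu;
            g.var[j] = sq / nj + 1e-6; // small floor keeps the variance from collapsing to 0
        }
    }
}

// Hard label: the component with the largest weighted density at x.
int predictGmm1D(const Gmm1D& g, double x) {
    int best = 0;
    double bestP = -1.0;
    for (std::size_t j = 0; j < g.mean.size(); ++j) {
        const double p = g.weight[j] * gaussPdf(x, g.mean[j], g.var[j]);
        if (p > bestP) { bestP = p; best = static_cast<int>(j); }
    }
    return best;
}
```

With K components and N samples, each iteration costs on the order of N * K density evaluations. K-Means does similar work per iteration but with a cheaper distance in place of a density.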

Watershed segmentation overview

Morphological image operations:

Erosion and dilation

Opening and closing
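Erosion and dilation with a 3x3 square structuring element, and opening and closing built from them, can be sketched on a small binary grid. This is plain C++ with hypothetical names (`Binary`, `morph3x3`, `erode3x3`, `dilate3x3`, `open3x3`, `close3x3`), not OpenCV's `erode`/`dilate`/`morphologyEx`. Border handling is a stated assumption: pixels outside the image count as background here, which differs from OpenCV's default border behavior.

```cpp
#include <cassert>
#include <vector>

using Binary = std::vector<std::vector<int>>; // 1 = foreground, 0 = background

// 3x3 square element. Erosion keeps a pixel only if all 9 pixels in its
// neighborhood are foreground; dilation sets it if any of them is.
// Pixels outside the image are treated as background in this sketch.
static Binary morph3x3(const Binary& in, bool erode) {
    const int h = static_cast<int>(in.size());
    const int w = h ? static_cast<int>(in[0].size()) : 0;
    Binary out(h, std::vector<int>(w, 0));
    for (int r = 0; r < h; ++r)
        for (int c = 0; c < w; ++c) {
            bool all = true, any = false;
            for (int dr = -1; dr <= 1; ++dr)
                for (int dc = -1; dc <= 1; ++dc) {
                    const int rr = r + dr, cc = c + dc;
                    const bool inside = rr >= 0 && rr < h && cc >= 0 && cc < w;
                    const int v = inside ? in[rr][cc] : 0;
                    all = all && v == 1;
                    any = any || v == 1;
                }
            out[r][c] = (erode ? all : any) ? 1 : 0;
        }
    return out;
}

Binary erode3x3(const Binary& in)  { return morph3x3(in, true); }
Binary dilate3x3(const Binary& in) { return morph3x3(in, false); }
// Opening = erosion then dilation: removes specks smaller than the element.
Binary open3x3(const Binary& in)   { return dilate3x3(erode3x3(in)); }
// Closing = dilation then erosion: fills small holes and gaps.
Binary close3x3(const Binary& in)  { return erode3x3(dilate3x3(in)); }
```

Opening is why Example 7 runs `MORPH_OPEN` before the distance transform. Isolated noise pixels vanish, while objects larger than the element survive with their shape roughly intact.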

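How watershed segmentation works:

- Watershed based on the immersion (flooding) model

- Methods based on connected graphs

- Methods based on the distance transform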
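The immersion idea can be sketched as priority-queue flooding from seed markers. Pixels are processed from the lowest elevation upward. An unlabeled pixel inherits the label of its labeled neighbors, and a pixel that touches two different labels becomes a dam. The sketch below is a simplified marker-based variant in plain C++. `floodWatershed` and `Grid` are hypothetical names, and 4-connectivity is assumed. Like OpenCV's `watershed`, it marks boundary pixels with -1, but it is not OpenCV's implementation.

```cpp
#include <cassert>
#include <functional>
#include <queue>
#include <tuple>
#include <vector>

using Grid = std::vector<std::vector<int>>;

// Simplified marker-based watershed by priority flooding (4-connectivity).
// gray:   elevation per pixel (low values are flooded first).
// labels: > 0 for seed markers, 0 for unknown. On return, flooded pixels carry
//         the label of the basin that reached them, and -1 marks dam pixels
//         where two different basins meet.
void floodWatershed(const Grid& gray, Grid& labels) {
    const int h = static_cast<int>(gray.size());
    const int w = h ? static_cast<int>(gray[0].size()) : 0;
    assert(static_cast<int>(labels.size()) == h);
    const int dr[4] = {-1, 1, 0, 0};
    const int dc[4] = {0, 0, -1, 1};
    using Item = std::tuple<int, int, int>; // (elevation, row, col)
    std::priority_queue<Item, std::vector<Item>, std::greater<Item>> pq; // min-heap
    std::vector<std::vector<bool>> queued(h, std::vector<bool>(w, false));

    // Queue each unknown 4-neighbor of (r, c) once.
    auto pushNeighbors = [&](int r, int c) {
        for (int k = 0; k < 4; ++k) {
            const int rr = r + dr[k], cc = c + dc[k];
            if (rr < 0 || rr >= h || cc < 0 || cc >= w) continue;
            if (labels[rr][cc] != 0 || queued[rr][cc]) continue;
            queued[rr][cc] = true;
            pq.emplace(gray[rr][cc], rr, cc);
        }
    };

    for (int r = 0; r < h; ++r)
        for (int c = 0; c < w; ++c)
            if (labels[r][c] > 0) pushNeighbors(r, c);

    while (!pq.empty()) {
        const Item top = pq.top();
        pq.pop();
        const int r = std::get<1>(top), c = std::get<2>(top);
        // Collect the labels of already-flooded neighbors.
        int found = 0;
        bool conflict = false;
        for (int k = 0; k < 4; ++k) {
            const int rr = r + dr[k], cc = c + dc[k];
            if (rr < 0 || rr >= h || cc < 0 || cc >= w) continue;
            const int l = labels[rr][cc];
            if (l <= 0) continue;          // unknown or dam
            if (found == 0) found = l;
            else if (l != found) conflict = true;
        }
        if (conflict) {
            labels[r][c] = -1;             // two basins meet: build a dam
            continue;
        }
        labels[r][c] = found;              // extend the single neighboring basin
        pushNeighbors(r, c);
    }
}
```

On a 1-row "valley, ridge, valley" profile seeded at both ends, the two basins grow toward each other, and the ridge pixel between them becomes the -1 boundary.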

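Applications of the watershed algorithm:

Separating touching objects, together with morphological operations, to count objects

Image segmentation

Distance-based watershed segmentation workflow:

APIs (OpenCV 3.x):

distanceTransform

watershed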
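`distanceTransform` gives each foreground pixel its distance to the nearest background pixel. The pixels farthest from any edge, the peaks of the map, are the object centers, and that is why thresholding the normalized map (at 0.4 in Example 6) leaves one seed per object. The sketch below is a two-pass city-block (L1) distance transform in plain C++. `distanceL1` is a hypothetical name. OpenCV's `DIST_L2` with a 3x3 mask instead uses a chamfer approximation of Euclidean distance, so its values differ.

```cpp
#include <algorithm>
#include <cassert>
#include <vector>

// Two-pass city-block (L1) distance transform: for every foreground pixel
// (value != 0), the number of 4-connected steps to the nearest background
// pixel (value 0). Background pixels get 0.
std::vector<std::vector<int>> distanceL1(const std::vector<std::vector<int>>& bin) {
    const int h = static_cast<int>(bin.size());
    const int w = h ? static_cast<int>(bin[0].size()) : 0;
    const int INF = h + w + 1; // larger than any possible L1 distance in the image
    std::vector<std::vector<int>> d(h, std::vector<int>(w, 0));
    // Forward pass (top-left to bottom-right): propagate from above and from the left.
    for (int r = 0; r < h; ++r)
        for (int c = 0; c < w; ++c) {
            if (bin[r][c] == 0) { d[r][c] = 0; continue; }
            int best = INF;
            if (r > 0) best = std::min(best, d[r - 1][c] + 1);
            if (c > 0) best = std::min(best, d[r][c - 1] + 1);
            d[r][c] = best;
        }
    // Backward pass (bottom-right to top-left): propagate from below and from the right.
    for (int r = h - 1; r >= 0; --r)
        for (int c = w - 1; c >= 0; --c) {
            if (r + 1 < h) d[r][c] = std::min(d[r][c], d[r + 1][c] + 1);
            if (c + 1 < w) d[r][c] = std::min(d[r][c], d[r][c + 1] + 1);
        }
    assert(h == 0 || static_cast<int>(d[0].size()) == w);
    return d;
}
```

For a 3x3 block of foreground inside a 5x5 image, the center pixel gets 2 and the ring around it gets 1. The center is the "peak" that would survive the threshold and become a marker.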

Example 6: Separating and counting touching objects with a distance-transform-based watershed

#include

#include

using namespace cv;

using namespace std;

int main(int argc, char** argv) {

Mat src = imread("pill_002.png");//pill_002.png coins_001.jpg

if (src.empty()) {

printf("could not load image...\n");

return -1;

}

namedWindow("input image", CV_WINDOW_AUTOSIZE);

imshow("input image", src);

Mat gray, binary, shifted;

//边缘保留(空间、颜色差值<21、51) 减少差异化

pyrMeanShiftFiltering(src, shifted, 21, 51);

imshow("shifted", shifted);

cvtColor(shifted, gray, COLOR_BGR2GRAY);//转换为灰度图像

//灰度图像进行二值化

threshold(gray, binary, 0, 255, THRESH_BINARY | THRESH_OTSU);

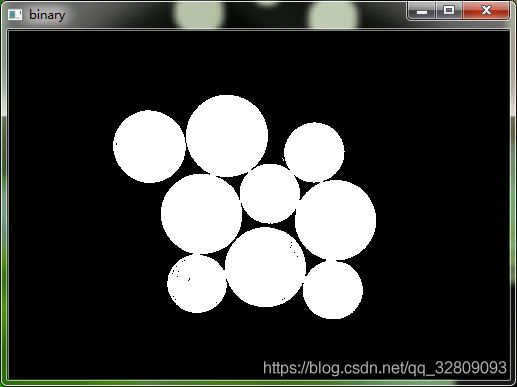

imshow("binary", binary);

// distance transform 距离变化

Mat dist;

distanceTransform(binary, dist, DistanceTypes::DIST_L2, 3, CV_32F);//使用3*3进行

normalize(dist, dist, 0, 1, NORM_MINMAX);

imshow("distance result", dist);

// binary

threshold(dist, dist, 0.4, 1, THRESH_BINARY);

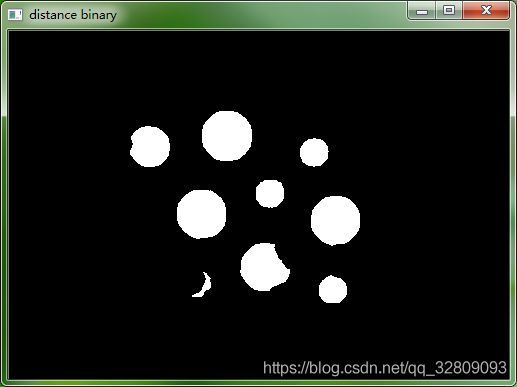

imshow("distance binary", dist);

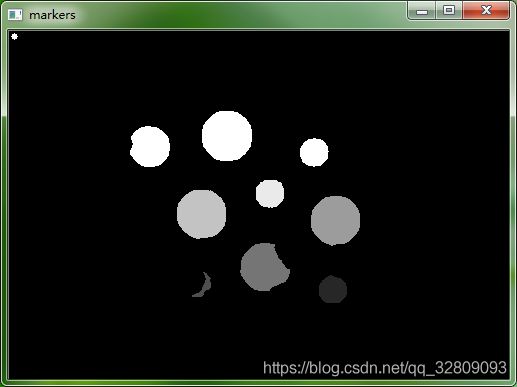

// markers 找到山峰的旗子

Mat dist_m;

dist.convertTo(dist_m, CV_8U);

vector> contours;

//寻找轮廓contours

findContours(dist_m, contours, RETR_EXTERNAL, CHAIN_APPROX_SIMPLE, Point(0, 0));

// create markers 填充轮廓

Mat markers = Mat::zeros(src.size(), CV_32SC1);

for (size_t t = 0; t < contours.size(); t++) {

drawContours(markers, contours, static_cast(t), Scalar::all(static_cast(t) + 1), -1);

}

//左上角显示一个圆(可有可无)

circle(markers, Point(5, 5), 3, Scalar(255), -1);

//markers放大10000倍进行显示

imshow("markers", markers*10000);

// 形态学操作 - 彩色图像也可以,目的是去掉干扰,让结果更好

Mat k = getStructuringElement(MORPH_RECT, Size(3, 3), Point(-1, -1));

morphologyEx(src, src, MORPH_ERODE, k);

// 完成分水岭变换

watershed(src, markers);

Mat mark = Mat::zeros(markers.size(), CV_8UC1);

markers.convertTo(mark, CV_8UC1);

bitwise_not(mark, mark, Mat());

//imshow("watershed result", mark);//中间是白色线进行分割

// generate random color 生成随机颜色

vector colors;

for (size_t i = 0; i < contours.size(); i++) {

int r = theRNG().uniform(0, 255);

int g = theRNG().uniform(0, 255);

int b = theRNG().uniform(0, 255);

colors.push_back(Vec3b((uchar)b, (uchar)g, (uchar)r));

}

// 颜色填充与最终显示

Mat dst = Mat::zeros(markers.size(), CV_8UC3);

int index = 0;

for (int row = 0; row < markers.rows; row++) {

for (int col = 0; col < markers.cols; col++) {

index = markers.at(row, col);

if (index > 0 && index <= contours.size()) {

dst.at(row, col) = colors[index - 1];

} else {

dst.at(row, col) = Vec3b(0, 0, 0);

}

}

}

imshow("Final Result", dst);

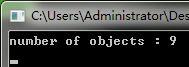

printf("number of objects : %d\n", contours.size());

waitKey(0);

return 0;

} 1 输入图像: 2 进行边缘保留的shifted转换:

3 灰度和二值化: 4 距离变换:

5 距离变换后进行二值化: 6 寻找种子,生产Marker:

7 分水岭变换并填充: 8 计数结果:

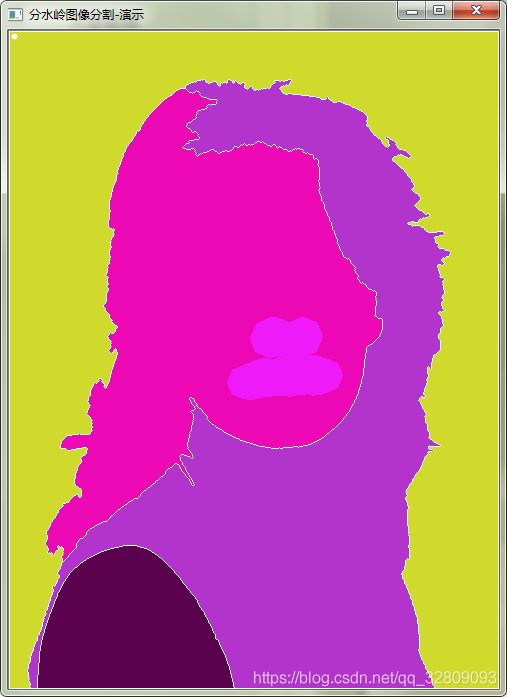
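The counting step works because, after the distance map is thresholded, each object leaves one separate seed blob. Counting such blobs can be sketched with a breadth-first flood fill in plain C++. `countBlobs` is a hypothetical name, and 8-connectivity is assumed. Example 6 itself counts the outer contours returned by `findContours(..., RETR_EXTERNAL, ...)`, which normally agrees with the blob count for simple seeds without nested islands.

```cpp
#include <cassert>
#include <queue>
#include <utility>
#include <vector>

// Count 8-connected foreground blobs (value != 0) with a breadth-first flood fill.
int countBlobs(const std::vector<std::vector<int>>& bin) {
    const int h = static_cast<int>(bin.size());
    const int w = h ? static_cast<int>(bin[0].size()) : 0;
    std::vector<std::vector<bool>> seen(h, std::vector<bool>(w, false));
    int count = 0;
    for (int r = 0; r < h; ++r) {
        for (int c = 0; c < w; ++c) {
            if (bin[r][c] == 0 || seen[r][c]) continue;
            ++count; // first pixel of a new blob
            std::queue<std::pair<int, int>> q;
            q.push({r, c});
            seen[r][c] = true;
            while (!q.empty()) { // visit the whole blob so it is counted once
                const std::pair<int, int> p = q.front();
                q.pop();
                for (int dr = -1; dr <= 1; ++dr)
                    for (int dc = -1; dc <= 1; ++dc) {
                        const int rr = p.first + dr, cc = p.second + dc;
                        if (rr < 0 || rr >= h || cc < 0 || cc >= w) continue;
                        if (bin[rr][cc] == 0 || seen[rr][cc]) continue;
                        seen[rr][cc] = true;
                        q.push({rr, cc});
                    }
            }
        }
    }
    return count;
}
```

Two pixels that touch only diagonally belong to the same blob under 8-connectivity. This is one reason the threshold on the distance map matters: it must be high enough that seeds of touching objects do not connect.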

Example 7: Watershed-based image segmentation

```cpp
#include <opencv2/opencv.hpp>
#include <climits>
#include <cstdio>

using namespace cv;
using namespace std;

Mat watershedCluster(Mat &image, int &numSegments);
// parameters: label image produced by the watershed, number of segments, original input image
void createDisplaySegments(Mat &segments, int numSegments, Mat &image);

int main(int argc, char** argv) {
    Mat src = imread("cvtest.png");
    if (src.empty()) {
        printf("could not load image...\n");
        return -1;
    }
    namedWindow("input image", WINDOW_AUTOSIZE);
    imshow("input image", src);

    int numSegments = 0;
    Mat markers = watershedCluster(src, numSegments);
    if (markers.empty()) { // no seeds were found
        printf("no segments found...\n");
        return -1;
    }
    createDisplaySegments(markers, numSegments, src);
    waitKey(0);
    return 0;
}

Mat watershedCluster(Mat &image, int &numComp) {
    // binarization
    Mat gray, binary;
    cvtColor(image, gray, COLOR_BGR2GRAY);
    threshold(gray, binary, 0, 255, THRESH_BINARY | THRESH_OTSU);

    // morphology and distance transform
    Mat k = getStructuringElement(MORPH_RECT, Size(3, 3), Point(-1, -1));
    morphologyEx(binary, binary, MORPH_OPEN, k, Point(-1, -1));
    Mat dist;
    distanceTransform(binary, dist, DistanceTypes::DIST_L2, 3, CV_32F);
    normalize(dist, dist, 0.0, 1.0, NORM_MINMAX);

    // build the seed image
    threshold(dist, dist, 0.1, 1.0, THRESH_BINARY);
    normalize(dist, dist, 0, 255, NORM_MINMAX);
    dist.convertTo(dist, CV_8UC1);

    // label the seeds
    vector<vector<Point>> contours;
    vector<Vec4i> hierarchy;
    findContours(dist, contours, hierarchy, RETR_CCOMP, CHAIN_APPROX_SIMPLE);
    if (contours.empty()) {
        return Mat();
    }
    Mat markers(dist.size(), CV_32S);
    markers = Scalar::all(0);
    for (int i = 0; i < (int)contours.size(); i++) {
        drawContours(markers, contours, i, Scalar(i + 1), -1, 8, hierarchy, INT_MAX);
    }
    circle(markers, Point(5, 5), 3, Scalar(255), -1); // background seed

    // watershed transform
    watershed(image, markers);
    numComp = (int)contours.size();
    return markers;
}

void createDisplaySegments(Mat &markers, int numSegments, Mat &image) {
    // generate one random color per segment
    vector<Vec3b> colors;
    for (int i = 0; i < numSegments; i++) {
        int r = theRNG().uniform(0, 255);
        int g = theRNG().uniform(0, 255);
        int b = theRNG().uniform(0, 255);
        colors.push_back(Vec3b((uchar)b, (uchar)g, (uchar)r));
    }

    // fill each segment with its color and display
    Mat dst = Mat::zeros(markers.size(), CV_8UC3);
    int index = 0;
    for (int row = 0; row < markers.rows; row++) {
        for (int col = 0; col < markers.cols; col++) {
            index = markers.at<int>(row, col);
            if (index > 0 && index <= numSegments) {
                dst.at<Vec3b>(row, col) = colors[index - 1];
            } else {
                dst.at<Vec3b>(row, col) = Vec3b(255, 255, 255); // background and boundaries
            }
        }
    }
    imshow("Watershed Segmentation Demo", dst);
}
```

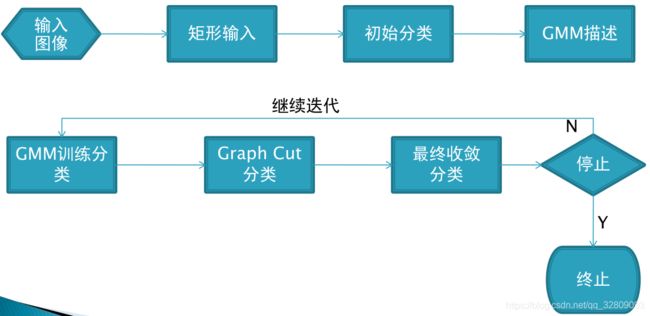

Basic GrabCut workflow

APIs:

- grabCut

- setMouseCallback

- onMouse(int event, int x, int y, int flags, void* param)

Application: cutting an object out of an image
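The mask that `grabCut` reads and updates uses four codes: `GC_BGD` = 0, `GC_FGD` = 1, `GC_PR_BGD` = 2, `GC_PR_FGD` = 3. The same values appear in the comments of Example 8's `setROIMask`. The plain-C++ sketch below shows how a user rectangle initializes such a mask, and why `mask & 1` extracts the foreground: both foreground codes are odd. The enum and the functions `rectMask`/`toBinary` are hypothetical mirrors, not OpenCV's API. Clipping the rectangle to the image is this sketch's own choice.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Local mirror of the four grabCut mask codes (values as documented for cv::grabCut).
enum GcLabel : std::uint8_t { kBgd = 0, kFgd = 1, kPrBgd = 2, kPrFgd = 3 };

// Row-major w x h mask: definite background everywhere except the user
// rectangle (clipped to the image), which is marked "probably foreground".
std::vector<std::uint8_t> rectMask(int w, int h, int rx, int ry, int rw, int rh) {
    assert(w > 0 && h > 0);
    std::vector<std::uint8_t> m(static_cast<std::size_t>(w) * h, kBgd);
    const int x0 = std::max(0, rx), y0 = std::max(0, ry);
    const int x1 = std::min(rx + rw, w), y1 = std::min(ry + rh, h); // exclusive ends
    for (int y = y0; y < y1; ++y)
        for (int x = x0; x < x1; ++x)
            m[static_cast<std::size_t>(y) * w + x] = kPrFgd;
    return m;
}

// Collapse the 4-valued mask to 0/1. The foreground codes (1, 3) are odd and
// the background codes (0, 2) are even, so the lowest bit alone decides.
std::vector<std::uint8_t> toBinary(const std::vector<std::uint8_t>& mask) {
    std::vector<std::uint8_t> out(mask.size());
    for (std::size_t i = 0; i < mask.size(); ++i)
        out[i] = static_cast<std::uint8_t>(mask[i] & 1);
    return out;
}
```

After the first `GC_INIT_WITH_RECT` call, grabCut rewrites pixels inside the rectangle to `GC_PR_FGD` or `GC_PR_BGD`. The lowest-bit test then keeps exactly the pixels currently judged foreground.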

Example 8: GrabCut principle and demo application

```cpp
#include <opencv2/opencv.hpp>
#include <algorithm>
#include <cstdio>

using namespace cv;
using namespace std;

int numRun = 0;             // number of grabCut iterations run so far
Rect rect;                  // rectangle selected with the mouse
bool init = false;          // true once grabCut has been initialized from the rectangle
Mat src, image;
Mat mask, bgModel, fgModel; // mask, background model, foreground model
const char* winTitle = "input image";

void onMouse(int event, int x, int y, int flags, void* param);
void setROIMask();
void showImage();
void runGrabCut();

int main(int argc, char** argv) {
    src = imread("tx.png", 1);
    if (src.empty()) {
        printf("could not load image...\n");
        return -1;
    }
    mask.create(src.size(), CV_8UC1);
    mask.setTo(Scalar::all(GC_BGD));
    namedWindow(winTitle, WINDOW_AUTOSIZE);
    setMouseCallback(winTitle, onMouse, 0); // register the mouse callback
    imshow(winTitle, src);

    while (true) {
        char c = (char)waitKey(0);
        if (c == 'n') {
            runGrabCut(); // run one GrabCut iteration
            numRun++;
            showImage();
            printf("current iteration count : %d\n", numRun);
        }
        if ((int)c == 27) { // Esc quits
            break;
        }
    }
    waitKey(0);
    return 0;
}

void showImage() {
    Mat result, binMask;
    // GC_FGD (1) and GC_PR_FGD (3) are odd, so the lowest bit marks (probable) foreground
    binMask = mask & 1;
    if (init) {
        src.copyTo(result, binMask); // keep only the foreground pixels
    } else {
        src.copyTo(result);          // copy src into result
    }
    imwrite("tx2.png", result);      // save the current result (rewritten on every redraw)
    rectangle(result, rect, Scalar(0, 0, 255), 2, 8); // draw the selection rectangle in red
    imshow(winTitle, result);
}

void setROIMask() {
    // GC_BGD    = 0  definite background
    // GC_FGD    = 1  definite foreground
    // GC_PR_BGD = 2  probable background
    // GC_PR_FGD = 3  probable foreground
    mask.setTo(GC_BGD);
    // clamp the rectangle to the image
    rect.x = max(0, rect.x);
    rect.y = max(0, rect.y);
    rect.width = min(rect.width, src.cols - rect.x);
    rect.height = min(rect.height, src.rows - rect.y);
    mask(rect).setTo(Scalar(GC_PR_FGD)); // inside the rectangle: probable foreground
}

// mouse event handler
void onMouse(int event, int x, int y, int flags, void* param) {
    switch (event)
    {
    case EVENT_LBUTTONDOWN: // left button pressed
        rect.x = x;         // top-left corner of the rectangle
        rect.y = y;
        rect.width = 1;     // initial rectangle width and height
        rect.height = 1;
        init = false;
        numRun = 0;
        break;
    case EVENT_MOUSEMOVE:   // mouse moved
        if (flags & EVENT_FLAG_LBUTTON) {
            rect = Rect(Point(rect.x, rect.y), Point(x, y));
            showImage();    // show the image with the rectangle
        }
        break;
    case EVENT_LBUTTONUP:   // left button released
        if (rect.width > 1 && rect.height > 1) {
            setROIMask();
            showImage();
        }
        break;
    default:
        break;
    }
}

// run GrabCut
void runGrabCut() {
    if (rect.width < 2 || rect.height < 2) {
        return;
    }
    if (init) {
        grabCut(src, mask, rect, bgModel, fgModel, 1); // continue from the current mask and models
    } else {
        grabCut(src, mask, rect, bgModel, fgModel, 1, GC_INIT_WITH_RECT); // first run: initialize from the rectangle
        init = true;
    }
}
```

After selecting a box with the mouse, press the n key to run the segmentation. Each further press runs one more iteration, and Esc quits.

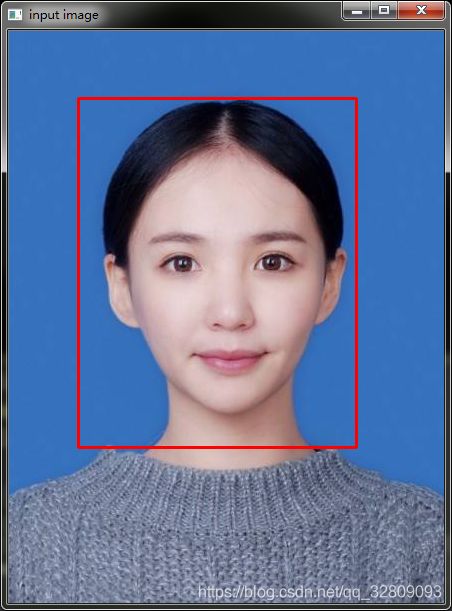

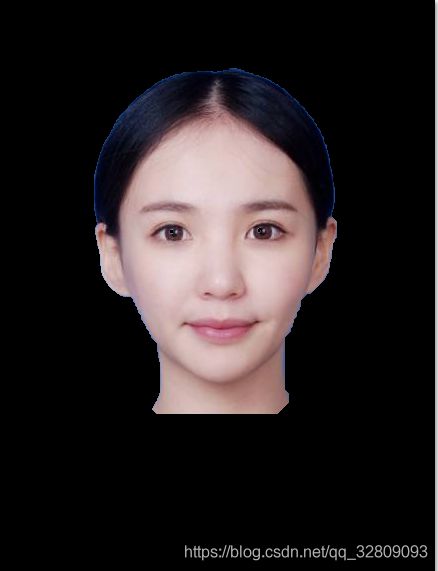

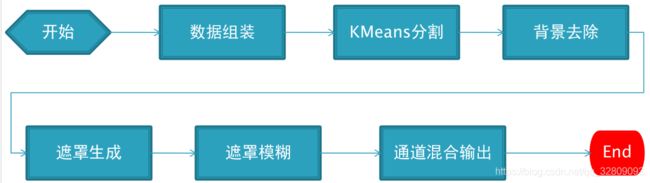

Case study: ID photo background replacement

Key points

K-Means

Background blending: Gaussian blur

Mask generation

Algorithm design steps
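The blending step can be sketched per channel. A mask value m in [0, 255] becomes a weight w = m / 255, and the output is fg * w + bg * (1 - w), so the eroded and blurred mask yields a thin band of mixed pixels instead of a hard cut. The sketch also smooths a mask row with the 3-tap kernel [1, 2, 1] / 4, which should match what OpenCV applies along each axis for a 3x3 Gaussian with sigma 0. Edge pixels are replicated here for simplicity. `blendChannel` and `blurRow121` are hypothetical names, and this plain C++ code is not the OpenCV implementation.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Blend one 8-bit channel with a soft mask value m in [0, 255]:
// m = 255 keeps fg, m = 0 takes bg, values in between mix linearly.
std::uint8_t blendChannel(std::uint8_t fg, std::uint8_t bg, std::uint8_t m) {
    const double w = m / 255.0;               // foreground weight
    const double v = fg * w + bg * (1.0 - w); // always within [0, 255]
    return static_cast<std::uint8_t>(v + 0.5); // round to nearest
}

// Smooth one mask row with the [1, 2, 1] / 4 kernel, replicating edge pixels.
std::vector<int> blurRow121(const std::vector<int>& row) {
    const std::size_t n = row.size();
    std::vector<int> out(n);
    for (std::size_t i = 0; i < n; ++i) {
        const int left = row[i > 0 ? i - 1 : i];
        const int right = row[i + 1 < n ? i + 1 : i];
        out[i] = (left + 2 * row[i] + right + 2) / 4; // +2 rounds to nearest
    }
    assert(out.size() == n);
    return out;
}
```

A hard mask edge 0, 0, 255, 255 becomes 0, 64, 191, 255, so the two boundary pixels get blend weights of about 0.25 and 0.75. Example 9 truncates the blended value instead of rounding it, which can differ by one gray level.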

Example 9: K-Means ID photo background replacement

#include

#include

using namespace cv;

using namespace std;

Mat mat_to_samples(Mat &image);

int main(int argc, char** argv) {

Mat src = imread("tx.png");

if (src.empty()) {

printf("could not load image...\n");

return -1;

}

namedWindow("输入图像", CV_WINDOW_AUTOSIZE);

imshow("输入图像", src);

// 组装数据

Mat points = mat_to_samples(src);

// 运行KMeans

int numCluster = 4;

Mat labels;

Mat centers;

TermCriteria criteria = TermCriteria(TermCriteria::EPS + TermCriteria::COUNT, 10, 0.1);

kmeans(points, numCluster, labels, criteria, 3, KMEANS_PP_CENTERS, centers);

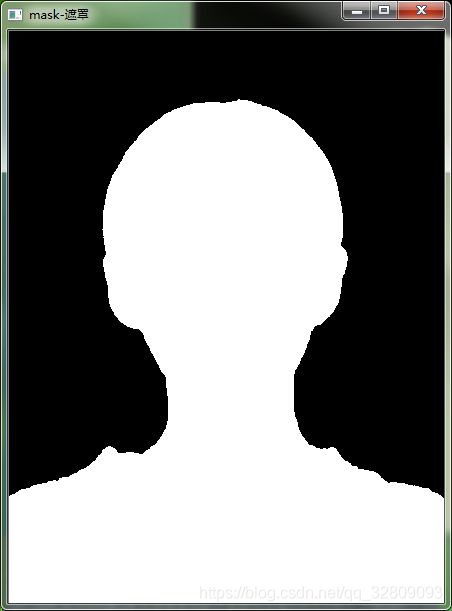

// 去背景+遮罩生成

Mat mask=Mat::zeros(src.size(), CV_8UC1);

int index = src.rows*2 + 2;

int cindex = labels.at(index, 0);

int height = src.rows;

int width = src.cols;

//Mat dst;

//src.copyTo(dst);

for (int row = 0; row < height; row++) {

for (int col = 0; col < width; col++) {

index = row*width + col;

int label = labels.at(index, 0);

if (label == cindex) { // 背景

//dst.at(row, col)[0] = 0;

//dst.at(row, col)[1] = 0;

//dst.at(row, col)[2] = 0;

mask.at(row, col) = 0;

} else {

mask.at(row, col) = 255;

}

}

}

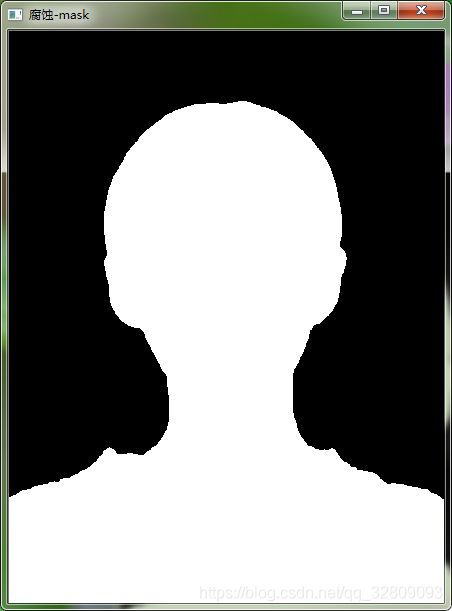

imshow("mask-遮罩", mask);

// 腐蚀 + 高斯模糊

Mat k = getStructuringElement(MORPH_RECT, Size(3, 3), Point(-1, -1));//用3*3像素进行腐蚀(减少3*3)

erode(mask, mask, k);

imshow("腐蚀-mask", mask);

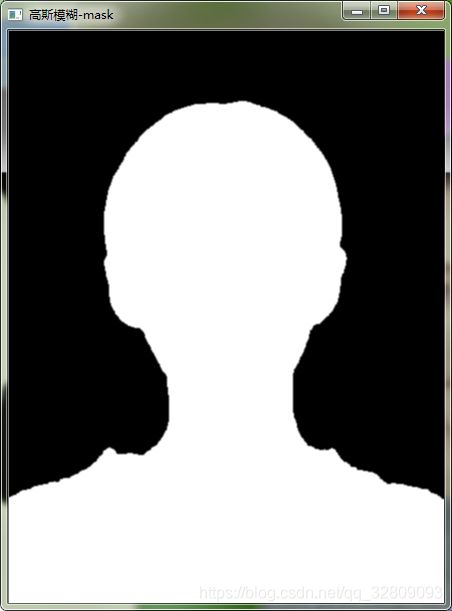

GaussianBlur(mask, mask, Size(3, 3), 0, 0);//用3*3像素进行高斯模糊(增加3*3) 所以边界不变

imshow("高斯模糊-mask", mask);

// 通道混合

RNG rng(12345);//随机数

Vec3b color;

color[0] = 217;//rng.uniform(0, 255);

color[1] = 60;// rng.uniform(0, 255);

color[2] = 160;// rng.uniform(0, 255);

Mat result(src.size(), src.type());

double w = 0.0;

int b = 0, g = 0, r = 0;

int b1 = 0, g1 = 0, r1 = 0;

int b2 = 0, g2 = 0, r2 = 0;

for (int row = 0; row < height; row++) {

for (int col = 0; col < width; col++) {

int m = mask.at(row, col);

if (m == 255) {

result.at(row, col) = src.at(row, col); // 前景

}

else if (m == 0) {

result.at(row, col) = color; // 背景

}

else {

w = m / 255.0;

b1 = src.at(row, col)[0];

g1 = src.at(row, col)[1];

r1 = src.at(row, col)[2];

b2 = color[0];

g2 = color[1];

r2 = color[2];

b = b1*w + b2*(1.0 - w);

g = g1*w + g2*(1.0 - w);

r = r1*w + r2*(1.0 - w);

result.at(row, col)[0] = b;

result.at(row, col)[1] = g;

result.at(row, col)[2] = r;

}

}

}

imshow("背景替换", result);

waitKey(0);

return 0;

}

Mat mat_to_samples(Mat &image) {

int w = image.cols;

int h = image.rows;

int samplecount = w*h;

int dims = image.channels();

Mat points(samplecount, dims, CV_32F, Scalar(10));

int index = 0;

for (int row = 0; row < h; row++) {

for (int col = 0; col < w; col++) {

index = row*w + col;

Vec3b bgr = image.at(row, col);

points.at(index, 0) = static_cast(bgr[0]);

points.at(index, 1) = static_cast(bgr[1]);

points.at(index, 2) = static_cast(bgr[2]);

}

}

return points;

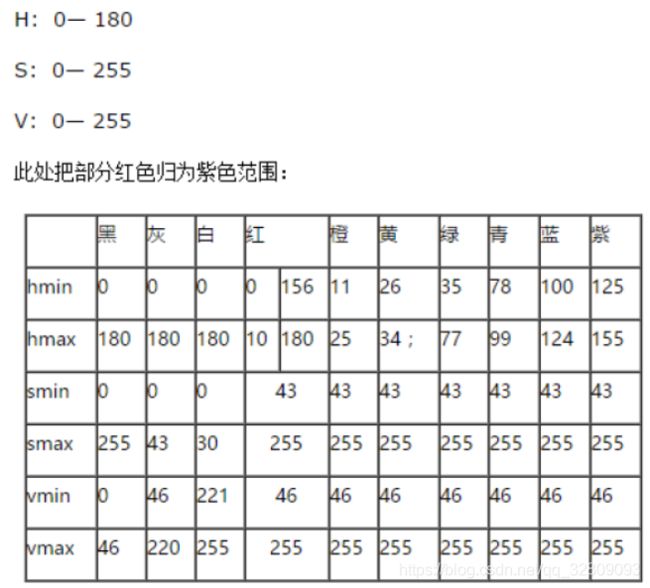

} 案例实战——绿幕视频背景替换

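Key points

Choosing the segmentation algorithm

Background blending: Gaussian blur

Mask generation

Algorithm choice

GMM or K-Means? Bad idea: far too slow for video!

Color-based processing

RGB and HSV color spaces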

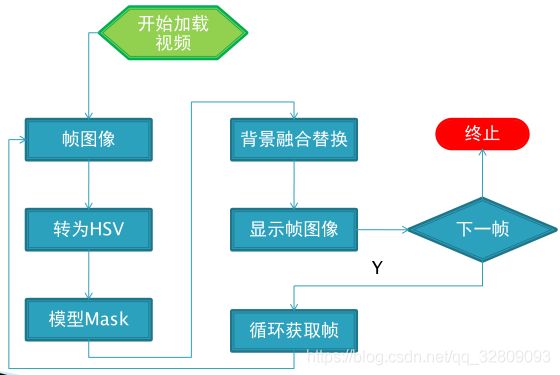
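HSV suits the green screen because the screen occupies a compact hue range across brightness levels, so a single range test on H, S and V isolates it. The sketch converts one BGR pixel to the 8-bit HSV convention OpenCV documents for `COLOR_BGR2HSV`: H is halved into [0, 180), and S and V lie in [0, 255]. It then applies the same bounds as Example 10, (35, 43, 46) to (60, 255, 255). `Hsv8`, `bgrToHsv8` and `isGreenScreen` are hypothetical names, and rounding may differ from OpenCV's by one unit.

```cpp
#include <algorithm>
#include <cassert>
#include <initializer_list>

// 8-bit HSV triple (hypothetical helper type).
struct Hsv8 { int h, s, v; };

// BGR -> HSV following the formulas documented for OpenCV's COLOR_BGR2HSV
// on 8-bit images; rounding may differ from OpenCV's by one unit.
Hsv8 bgrToHsv8(int b, int g, int r) {
    const int v = std::max({b, g, r});
    const int mn = std::min({b, g, r});
    const int diff = v - mn;
    const int s = (v == 0) ? 0 : (255 * diff + v / 2) / v; // saturation, rounded
    double h = 0.0;                                         // hue in degrees
    if (diff != 0) {
        if (v == r)      h = 60.0 * (g - b) / diff;
        else if (v == g) h = 120.0 + 60.0 * (b - r) / diff;
        else             h = 240.0 + 60.0 * (r - g) / diff;
        if (h < 0) h += 360.0;
    }
    const int h8 = static_cast<int>(h / 2.0 + 0.5) % 180; // degrees / 2 fits in 8 bits
    assert(s >= 0 && s <= 255);
    return Hsv8{h8, s, v};
}

// Same bounds as inRange(hsv, Scalar(35, 43, 46), Scalar(60, 255, 255), mask) in Example 10.
bool isGreenScreen(int b, int g, int r) {
    const Hsv8 p = bgrToHsv8(b, g, r);
    return p.h >= 35 && p.h <= 60 && p.s >= 43 && p.v >= 46;
}
```

Pure green lands at H = 60, the upper end of the range, which is presumably why the mask test in Example 10 notes tuning the upper hue bound to 60. A gray pixel fails on saturation alone, whatever its brightness.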

Algorithm design steps

Example 10: Green-screen video background replacement

If you have any questions about the materials, feel free to contact me on QQ at 1837393417 so we can discuss and learn together!

```cpp
#include <opencv2/opencv.hpp>
#include <cstdio>

using namespace cv;
using namespace std;

Mat replace_and_blend(Mat &frame, Mat &mask);
Mat background_01; // background 1
Mat background_02; // background 2

int main(int argc, char** argv) {
    background_01 = imread("bg_01.jpg");
    background_02 = imread("bg_02.jpg");
    if (background_01.empty()) {
        printf("could not load the background image...\n");
        return -1;
    }
    VideoCapture capture; // video capture
    capture.open("01.mp4");
    if (!capture.isOpened()) {
        printf("could not find the video file...\n");
        return -1;
    }
    const char* title = "input video";
    const char* resultWin = "result video";
    namedWindow(title, WINDOW_AUTOSIZE);
    namedWindow(resultWin, WINDOW_AUTOSIZE);
    Mat frame, hsv, mask;
    int count = 0;

    //////////////////// video read test ////////////////////
    //while (capture.read(frame)) { // read() returns false when no frame is left; the frame goes into `frame`
    //    imshow(title, frame);
    //    char c = waitKey(50);
    //    if (c == 27) {
    //        break;
    //    }
    //}

    //////////////////// mask test ////////////////////
    //while (capture.read(frame)) {
    //    cvtColor(frame, hsv, COLOR_BGR2HSV); // convert to HSV
    //    // select the original green background in HSV
    //    inRange(hsv, Scalar(35, 43, 46), Scalar(60, 255, 255), mask); // upper hue bound tuned to 60
    //    imshow("mask", mask); // show the mask
    //    count++;
    //    imshow(title, frame); // show the input video
    //    char c = waitKey(1);
    //    if (c == 27) {
    //        break;
    //    }
    //}
    ////////////////////////////////////////////////////

    while (capture.read(frame)) { // read() returns false when no frame is left
        cvtColor(frame, hsv, COLOR_BGR2HSV); // convert to HSV
        inRange(hsv, Scalar(35, 43, 46), Scalar(60, 255, 255), mask); // 255 where the pixel is green screen
        // morphology: close small gaps, erode with 3x3, then soften the edge with a 3x3 Gaussian blur
        Mat k = getStructuringElement(MORPH_RECT, Size(3, 3), Point(-1, -1));
        morphologyEx(mask, mask, MORPH_CLOSE, k);
        erode(mask, mask, k);
        GaussianBlur(mask, mask, Size(3, 3), 0, 0);
        // the blend reads the background row by row, so it must match the frame size
        if (background_01.size() != frame.size()) {
            resize(background_01, background_01, frame.size());
        }
        // replace and blend the background
        Mat result = replace_and_blend(frame, mask);
        imshow(resultWin, result); // show the processed video
        imshow(title, frame);      // show the input video
        char c = waitKey(1);
        if (c == 27) {
            break;
        }
    }
    waitKey(0);
    return 0;
}

// replace the background and blend the edges
Mat replace_and_blend(Mat &frame, Mat &mask) {
    Mat result = Mat::zeros(frame.size(), frame.type());
    int h = frame.rows;
    int w = frame.cols;
    int m = 0;
    double wt = 0;
    for (int row = 0; row < h; row++) {
        const uchar* current = frame.ptr<uchar>(row);       // current frame row
        const uchar* bgrow = background_01.ptr<uchar>(row); // background 1 row
        const uchar* maskrow = mask.ptr<uchar>(row);        // mask row
        uchar* targetrow = result.ptr<uchar>(row);          // output row
        for (int col = 0; col < w; col++) {
            m = *maskrow++;
            if (m == 255) {        // green screen: take the new background
                *targetrow++ = *bgrow++;
                *targetrow++ = *bgrow++;
                *targetrow++ = *bgrow++;
                current += 3;
            } else if (m == 0) {   // foreground: keep the frame pixel
                *targetrow++ = *current++;
                *targetrow++ = *current++;
                *targetrow++ = *current++;
                bgrow += 3;
            } else {               // edge band: weighted mix
                wt = m / 255.0;    // background weight
                for (int ch = 0; ch < 3; ch++) {
                    *targetrow++ = saturate_cast<uchar>(*bgrow++ * wt + *current++ * (1.0 - wt));
                }
            }
        }
    }
    return result;
}
```

Video read test: video playback

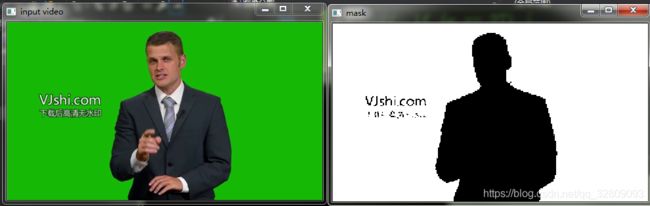

Mask test

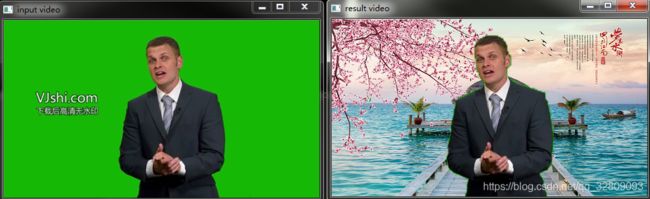

Video background replacement: playback of the replaced video