[Object Detection 4] Running the VOC2012 dataset with the TensorFlow Object Detection API

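I did not cover the mask_rcnn model here, because I do not yet know how to import the mask information. All other models can be trained by following the steps below. (mask_rcnn will get its own write-up.)

Note: the stock demo runs a pretrained model on the COCO dataset. This post instead takes a model pretrained on COCO and fine-tunes it on the VOC2012 data, producing a new frozen_inference_graph.pb (which stores the network structure and the weights). You can then rerun the jupyter-notebook demo after pointing the frozen_inference_graph.pb path at the newly generated file and switching the label map to the PASCAL one, pascal_label_map.pbtxt (in the data folder).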

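Reference blog

I. Download the VOC2012 dataset

Download link

II. Dataset structure

After the download finishes, extract the archive. The extracted folder is named VOCdevkit and contains a subfolder VOC2012, which in turn contains the following five folders.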

- Annotations

Annotations文件夹中存放的是xml格式的标签文件,每一个xml文件都对应于JPEGImages文件夹中的一张图片。

- ImageSets

其中Action下存放的是人的动作(例如running、jumping等等,这也是VOC challenge的一部分)VOC2007就没有Action,Layout下存放的是具有人体部位的数据(人的head、hand、feet等等,这也是VOC challenge的一部分)Main下存放的是图像物体识别的数据,总共分为20类。Main文件夹下包含了20个分类的***_train.txt、***_val.txt和***_trainval.txt。是两列数据,左边一列是对应图片的序号,右边一列是样本标签,-1表示负样本,+1表示正样本。

- JPEGImages

对应的图片信息

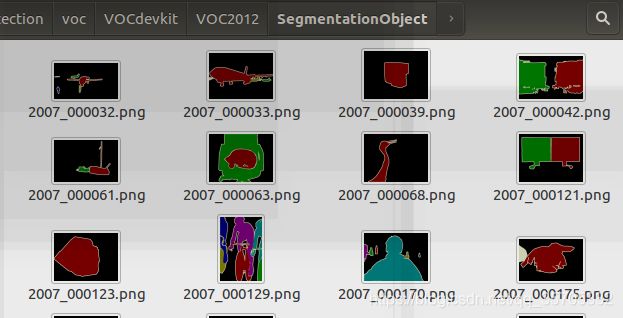

- SegmentationClass

语义分割的掩膜

- SegmentationObeject

4和5的区别是什么呢?待补充

- class segmentation: 标注出每一个像素的类别

- object segmentation: 标注出每一个像素属于哪一个物体
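The two-column layout of the files in ImageSets/Main can be read with a few lines of Python. This is a minimal sketch, assuming whitespace-separated columns; the function name `parse_main_split` and the sample image IDs are made up for illustration.

```python
def parse_main_split(lines):
    """Map image ID -> integer label from the lines of a Main/*.txt file."""
    labels = {}
    for line in lines:
        parts = line.split()
        if len(parts) != 2:
            continue  # skip blank or malformed lines
        image_id, label = parts
        labels[image_id] = int(label)
    return labels


# Hypothetical sample lines in the "<image id> <label>" layout described above.
sample = ["2008_000008 -1", "2008_000015  1", ""]
labels = parse_main_split(sample)
positives = [image_id for image_id, label in labels.items() if label == 1]
print(positives)
```

For a real file you would pass an open file object instead of the sample list, since iterating over a file yields its lines.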

III. Convert the VOC2012 dataset to TFRecord format

create_pascal_tf_record.py under object_detection exports the TFRecord files directly.

cd dir  # dir is the research directory under models_master
# From tensorflow/models/research
python3 object_detection/create_pascal_tf_record.py \
    --label_map_path=object_detection/data/pascal_label_map.pbtxt \
    --data_dir=VOCdevkit --year=VOC2012 --set=train \
    --output_path=pascal_train.record
python3 object_detection/create_pascal_tf_record.py \
    --label_map_path=object_detection/data/pascal_label_map.pbtxt \
    --data_dir=VOCdevkit --year=VOC2012 --set=val \
    --output_path=pascal_val.record

Update: the records produced above carry no mask information, so they cannot be used to train a mask_rcnn model.
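To check how many examples ended up in a generated .record file (useful later for the num_examples setting), the file can be scanned without TensorFlow. This is a rough sketch that relies on the TFRecord framing (an 8-byte little-endian length, a 4-byte CRC of the length, the payload, and a 4-byte CRC of the payload). It does not verify the CRCs, and the helper name `count_tfrecords` is my own.

```python
import os
import struct


def count_tfrecords(path):
    """Count the records in a TFRecord file by walking its length headers."""
    count = 0
    with open(path, "rb") as record_file:
        while True:
            header = record_file.read(12)  # 8-byte length + 4-byte CRC of the length
            if not header:
                return count
            if len(header) < 12:
                raise ValueError("truncated record header")
            (length,) = struct.unpack("<Q", header[:8])
            body = record_file.read(length + 4)  # payload + 4-byte CRC of the payload
            if len(body) < length + 4:
                raise ValueError("truncated record body")
            count += 1


# Only runs when the file from the conversion step is present.
if os.path.exists("pascal_val.record"):
    print(count_tfrecords("pascal_val.record"))
```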

IV. Copy pascal_label_map.pbtxt into the voc directory

pascal_label_map.pbtxt originally lives in the data folder under object_detection.

sudo cp pascal_label_map.pbtxt voc/
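To see what the label map contains without loading TensorFlow, the pbtxt text can be parsed with a regular expression. This is a minimal sketch, assuming the usual `item { id: ... name: '...' }` layout; `parse_label_map` and the two-item sample are illustrative stand-ins, not the API's own loader (the conversion script uses label_map_util.get_label_map_dict).

```python
import re

ITEM_PATTERN = re.compile(r"item\s*\{(.*?)\}", re.DOTALL)


def parse_label_map(text):
    """Return a dict mapping class name -> integer id."""
    mapping = {}
    for body in ITEM_PATTERN.findall(text):
        id_match = re.search(r"\bid:\s*(\d+)", body)
        name_match = re.search(r"\bname:\s*['\"]([^'\"]+)['\"]", body)
        if id_match and name_match:
            mapping[name_match.group(1)] = int(id_match.group(1))
    return mapping


# Shortened stand-in for pascal_label_map.pbtxt (the real file lists 20 classes).
SAMPLE_LABEL_MAP = """
item {
  id: 1
  name: 'aeroplane'
}

item {
  id: 2
  name: 'bicycle'
}
"""

label_ids = parse_label_map(SAMPLE_LABEL_MAP)
print(label_ids)
```

The number of entries in the real file should match the num_classes value set in the config.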

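V. Download a model and train

1. I downloaded faster_rcnn_resnet101_coco_11_06_2017.tar.gz.

2. Move it into voc and extract it. The extracted folder contains five files; rename the folder to train.

3. At this point voc contains the following files.

4. Create train_dir under voc.

5. Move object_detection/samples/configs/faster_rcnn_resnet101_coco.config into voc.

6. Edit the config file from the previous step in seven places: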

num_classes: 20

fine_tune_checkpoint: "voc/train/model.ckpt"

input_path: "voc/pascal_train.record"

label_map_path: "voc/pascal_label_map.pbtxt"

input_path: "voc/pascal_val.record"

label_map_path: "voc/pascal_label_map.pbtxt"

num_examples: 5823
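The seven edits above can also be applied with a small script rather than by hand. This is only a sketch: `set_field` is a hypothetical helper, the sample text is a shortened stand-in for the real config, and it assumes the training reader appears before the evaluation reader in the file.

```python
import re


def set_field(config_text, key, values):
    """Replace successive `key: ...` lines with the given values, in order."""
    remaining = list(values)
    pattern = re.compile(r"^([ \t]*" + re.escape(key) + r":[ \t]*).*$", re.MULTILINE)

    def substitute(match):
        if not remaining:
            return match.group(0)  # no more values: leave the line untouched
        return match.group(1) + remaining.pop(0)

    return pattern.sub(substitute, config_text)


# Shortened stand-in for faster_rcnn_resnet101_coco.config (placeholder values assumed).
SAMPLE_CONFIG = """model {
  faster_rcnn {
    num_classes: 90
  }
}
train_config {
  fine_tune_checkpoint: "PATH_TO_BE_CONFIGURED/model.ckpt"
}
train_input_reader {
  tf_record_input_reader {
    input_path: "PATH_TO_BE_CONFIGURED/mscoco_train.record"
  }
  label_map_path: "PATH_TO_BE_CONFIGURED/mscoco_label_map.pbtxt"
}
eval_config {
  num_examples: 8000
}
eval_input_reader {
  tf_record_input_reader {
    input_path: "PATH_TO_BE_CONFIGURED/mscoco_val.record"
  }
  label_map_path: "PATH_TO_BE_CONFIGURED/mscoco_label_map.pbtxt"
}
"""

patched = SAMPLE_CONFIG
patched = set_field(patched, "num_classes", ["20"])
patched = set_field(patched, "fine_tune_checkpoint", ['"voc/train/model.ckpt"'])
patched = set_field(patched, "input_path",
                    ['"voc/pascal_train.record"', '"voc/pascal_val.record"'])
patched = set_field(patched, "label_map_path", ['"voc/pascal_label_map.pbtxt"'] * 2)
patched = set_field(patched, "num_examples", ["5823"])
print(patched)
```

Editing the file as text keeps the example free of TensorFlow imports; it is worth diffing the result against the original before training.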

7. Open a terminal in object_detection and run the training command.

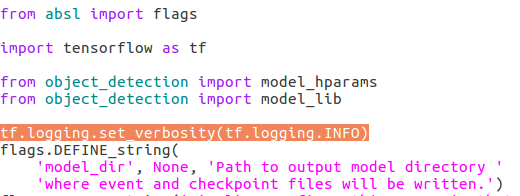

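Note: in the latest version of the models repository, train.py has been moved into the legacy folder. model_main.py under object_detection replaces train.py, but it prints no log output by default; to get logs, add tf.logging.set_verbosity(tf.logging.INFO) to it.

python3 model_main.py --train_dir voc/train_dir \
    --pipeline_config_path voc/faster_rcnn_resnet101_coco.config

(Why is train_dir still empty after training?)

Answer: replace ~~--train_dir voc/train_dir~~ with --model_dir voc/train_dir.

The earlier version trained with train.py, while training now uses model_main.py, whose flag definitions differ slightly.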
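A quick way to tell whether training is actually writing into the output directory is to look for checkpoint files there. This is a rough sketch, assuming checkpoints are saved as model.ckpt-<step>.index (the naming I would expect from TF1-style training); `latest_checkpoint_step` is a name I made up.

```python
import os
import re

CHECKPOINT_INDEX = re.compile(r"^model\.ckpt-(\d+)\.index$")


def latest_checkpoint_step(model_dir):
    """Return the highest checkpoint step found in model_dir, or None if there is none."""
    steps = []
    for name in os.listdir(model_dir):
        match = CHECKPOINT_INDEX.match(name)
        if match:
            steps.append(int(match.group(1)))
    return max(steps) if steps else None


# Only runs when the directory from this walkthrough exists.
if os.path.isdir("voc/train_dir"):
    print(latest_checkpoint_step("voc/train_dir"))
```

A result of None after training has run for a while suggests the output flag is pointing somewhere else.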

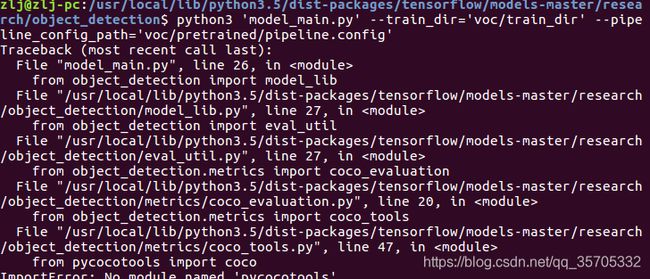

Error 1: from pycocotools import coco fails

Fix:

git clone https://github.com/pdollar/coco
cd coco/PythonAPI
# make -j3    (if make fails, use the next line instead)
python3 setup.py build_ext --inplace
# if it fails again and asks for python-tk, install it:
sudo apt-get install python3-tk

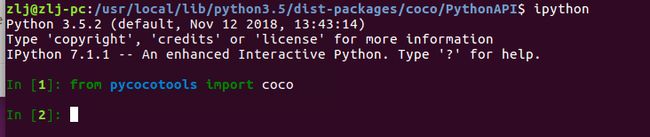

ipython

from pycocotools import coco

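Error 2: the import now worked! But when I ran training again, it still failed on from pycocotools import coco. Following online tutorials, I then added an environment variable.

The advice was: if the import works from inside the PythonAPI directory but the training program still fails, set the environment variable and run the import again. (I tried this and it did not help at all.)

The method found online:

Run gedit ~/.bashrc and append export PYTHONPATH=/home/john/coco/PythonAPI:$PYTHONPATH at the end, where the path is the absolute path of your PythonAPI directory.

In the end I brute-forced it: I copied the pycocotools folder from the coco folder into voc.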
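When an import works in one shell but not in the training run, it helps to check what the Python interpreter itself can see. The sketch below uses the standard importlib machinery; `ensure_importable` is a hypothetical helper, and the path in the usage note is the example path from the text above.

```python
import importlib
import importlib.util
import sys


def ensure_importable(module_name, fallback_dir):
    """Return True if module_name can be found, adding fallback_dir to sys.path if needed."""
    if importlib.util.find_spec(module_name) is not None:
        return True
    if fallback_dir not in sys.path:
        sys.path.insert(0, fallback_dir)  # only affects the current process
    importlib.invalidate_caches()
    return importlib.util.find_spec(module_name) is not None
```

For example, `ensure_importable("pycocotools", "/home/john/coco/PythonAPI")` called at the top of a script reports whether the package is visible to that process. Printing `sys.path` in the same place shows whether the PYTHONPATH setting was picked up at all.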

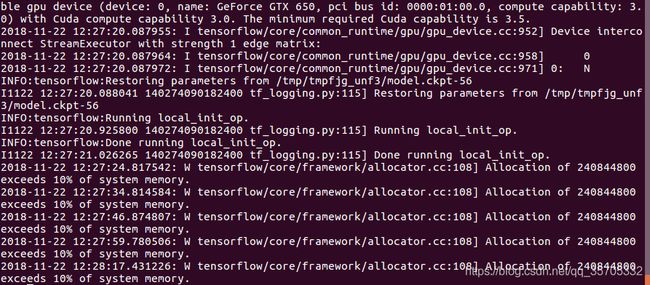

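Error 3: system memory exceeded

Fix 1: shrink the input images. The original minimum dimension is 600 and the maximum is 1024; I changed them to 300 and 512. This still did not solve the problem for me.

Fix 2: download a smaller model.

Fix 3: reduce batch_size. It was originally 24 and I ran out of GPU memory at run time, so to be safe I set it to 1. If 1 still fails in a similar way, consider a different machine.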
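To get a feel for how much Fix 1 shrinks the input, the resized shape can be estimated by hand. This is a simplified sketch of an aspect-ratio-preserving resize under a minimum and maximum dimension, not the API's actual implementation; `resized_shape` and the 375x500 example image are assumptions for illustration.

```python
def resized_shape(height, width, min_dimension, max_dimension):
    """Scale the short side to min_dimension unless the long side would exceed max_dimension."""
    scale = min_dimension / min(height, width)
    if round(max(height, width) * scale) > max_dimension:
        scale = max_dimension / max(height, width)
    return round(height * scale), round(width * scale)


original = resized_shape(375, 500, 600, 1024)
reduced = resized_shape(375, 500, 300, 512)
print(original, reduced)

# Ratio of pixel counts between the two settings.
ratio = (original[0] * original[1]) / (reduced[0] * reduced[1])
print(ratio)
```

Under these assumptions, halving both limits cuts the pixel count per image to about a quarter, which reduces activation memory but not the memory taken by the model weights.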

VI. Export and test

See the export and test sections of the mask_rcnn training post.

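VII. Summary and review

1. In the newer models repository, model_main.py (in object_detection/) replaces train.py for training, and the output directory flag model_dir replaces train_dir. When following older books, watch out for small differences like this between versions.

2. Download the dataset; this post uses VOC2012.

3. Convert it to TFRecord format. The required file is create_pascal_tf_record.py (the official script already supports the VOC2012 dataset).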

# From tensorflow/models/research
python3 object_detection/create_pascal_tf_record.py \
    --label_map_path=object_detection/data/pascal_label_map.pbtxt \
    --data_dir=VOCdevkit --year=VOC2012 --set=train \
    --output_path=pascal_train.record
python3 object_detection/create_pascal_tf_record.py \
    --label_map_path=object_detection/data/pascal_label_map.pbtxt \
    --data_dir=VOCdevkit --year=VOC2012 --set=val \
    --output_path=pascal_val.record

4. Download a model and edit the config file.

5. Export the frozen graph.

6. Test.

The official pascal2tf.py

Nothing in it has been modified!

# Copyright 2017 The TensorFlow Authors. All Rights Reserved.
#
# Licensed under the Apache License, Version 2.0 (the "License");
# you may not use this file except in compliance with the License.
# You may obtain a copy of the License at
#
#     http://www.apache.org/licenses/LICENSE-2.0
#
# Unless required by applicable law or agreed to in writing, software
# distributed under the License is distributed on an "AS IS" BASIS,
# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.
# See the License for the specific language governing permissions and
# limitations under the License.
# ==============================================================================

r"""Convert raw PASCAL dataset to TFRecord for object_detection.

Example usage:
    ./create_pascal_tf_record --data_dir=/home/user/VOCdevkit \
        --year=VOC2012 \
        --output_path=/home/user/pascal.record
"""
from __future__ import absolute_import
from __future__ import division
from __future__ import print_function

import hashlib
import io
import logging
import os

from lxml import etree
import PIL.Image
import tensorflow as tf

from object_detection.utils import dataset_util
from object_detection.utils import label_map_util


flags = tf.app.flags
flags.DEFINE_string('data_dir', '', 'Root directory to raw PASCAL VOC dataset.')
flags.DEFINE_string('set', 'train', 'Convert training set, validation set or '
                    'merged set.')
flags.DEFINE_string('annotations_dir', 'Annotations',
                    '(Relative) path to annotations directory.')
flags.DEFINE_string('year', 'VOC2007', 'Desired challenge year.')
flags.DEFINE_string('output_path', '', 'Path to output TFRecord')
flags.DEFINE_string('label_map_path', 'object_detection/data/pascal_label_map.pbtxt',
                    'Path to label map proto')
flags.DEFINE_boolean('ignore_difficult_instances', False, 'Whether to ignore '
                     'difficult instances')
FLAGS = flags.FLAGS

SETS = ['train', 'val', 'trainval', 'test']
YEARS = ['VOC2007', 'VOC2012', 'merged']

def dict_to_tf_example(data,
                       dataset_directory,
                       label_map_dict,
                       ignore_difficult_instances=False,
                       image_subdirectory='JPEGImages'):
  """Convert XML derived dict to tf.Example proto.

  Notice that this function normalizes the bounding box coordinates provided
  by the raw data.

  Args:
    data: dict holding PASCAL XML fields for a single image (obtained by
      running dataset_util.recursive_parse_xml_to_dict)
    dataset_directory: Path to root directory holding PASCAL dataset
    label_map_dict: A map from string label names to integers ids.
    ignore_difficult_instances: Whether to skip difficult instances in the
      dataset  (default: False).
    image_subdirectory: String specifying subdirectory within the
      PASCAL dataset directory holding the actual image data.

  Returns:
    example: The converted tf.Example.

  Raises:
    ValueError: if the image pointed to by data['filename'] is not a valid JPEG
  """
  img_path = os.path.join(data['folder'], image_subdirectory, data['filename'])
  full_path = os.path.join(dataset_directory, img_path)
  with tf.gfile.GFile(full_path, 'rb') as fid:
    encoded_jpg = fid.read()
  encoded_jpg_io = io.BytesIO(encoded_jpg)
  image = PIL.Image.open(encoded_jpg_io)
  if image.format != 'JPEG':
    raise ValueError('Image format not JPEG')
  key = hashlib.sha256(encoded_jpg).hexdigest()

  width = int(data['size']['width'])
  height = int(data['size']['height'])

  xmin = []
  ymin = []
  xmax = []
  ymax = []
  classes = []
  classes_text = []
  truncated = []
  poses = []
  difficult_obj = []
  for obj in data['object']:
    difficult = bool(int(obj['difficult']))
    if ignore_difficult_instances and difficult:
      continue

    difficult_obj.append(int(difficult))

    xmin.append(float(obj['bndbox']['xmin']) / width)
    ymin.append(float(obj['bndbox']['ymin']) / height)
    xmax.append(float(obj['bndbox']['xmax']) / width)
    ymax.append(float(obj['bndbox']['ymax']) / height)
    classes_text.append(obj['name'].encode('utf8'))
    classes.append(label_map_dict[obj['name']])
    truncated.append(int(obj['truncated']))
    poses.append(obj['pose'].encode('utf8'))

  example = tf.train.Example(features=tf.train.Features(feature={
      'image/height': dataset_util.int64_feature(height),
      'image/width': dataset_util.int64_feature(width),
      'image/filename': dataset_util.bytes_feature(
          data['filename'].encode('utf8')),
      'image/source_id': dataset_util.bytes_feature(
          data['filename'].encode('utf8')),
      'image/key/sha256': dataset_util.bytes_feature(key.encode('utf8')),
      'image/encoded': dataset_util.bytes_feature(encoded_jpg),
      'image/format': dataset_util.bytes_feature('jpeg'.encode('utf8')),
      'image/object/bbox/xmin': dataset_util.float_list_feature(xmin),
      'image/object/bbox/xmax': dataset_util.float_list_feature(xmax),
      'image/object/bbox/ymin': dataset_util.float_list_feature(ymin),
      'image/object/bbox/ymax': dataset_util.float_list_feature(ymax),
      'image/object/class/text': dataset_util.bytes_list_feature(classes_text),
      'image/object/class/label': dataset_util.int64_list_feature(classes),
      'image/object/difficult': dataset_util.int64_list_feature(difficult_obj),
      'image/object/truncated': dataset_util.int64_list_feature(truncated),
      'image/object/view': dataset_util.bytes_list_feature(poses),
  }))
  return example

def main(_):
  if FLAGS.set not in SETS:
    raise ValueError('set must be in : {}'.format(SETS))
  if FLAGS.year not in YEARS:
    raise ValueError('year must be in : {}'.format(YEARS))

  data_dir = FLAGS.data_dir
  years = ['VOC2007', 'VOC2012']
  if FLAGS.year != 'merged':
    years = [FLAGS.year]

  writer = tf.python_io.TFRecordWriter(FLAGS.output_path)

  label_map_dict = label_map_util.get_label_map_dict(FLAGS.label_map_path)

  for year in years:
    logging.info('Reading from PASCAL %s dataset.', year)
    examples_path = os.path.join(data_dir, year, 'ImageSets', 'Main','aeroplane_' + FLAGS.set + '.txt')
    annotations_dir = os.path.join(data_dir, year, FLAGS.annotations_dir)
    examples_list = dataset_util.read_examples_list(examples_path)
    for idx, example in enumerate(examples_list):
      if idx % 100 == 0:
        logging.info('On image %d of %d', idx, len(examples_list))
      path = os.path.join(annotations_dir, example + '.xml')
      with tf.gfile.GFile(path, 'r') as fid:
        xml_str = fid.read()
      xml = etree.fromstring(xml_str)
      data = dataset_util.recursive_parse_xml_to_dict(xml)['annotation']

      tf_example = dict_to_tf_example(data, FLAGS.data_dir, label_map_dict,
                                      FLAGS.ignore_difficult_instances)
      writer.write(tf_example.SerializeToString())

  writer.close()


if __name__ == '__main__':
  tf.app.run()
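The script's docstring notes that it normalizes the bounding box coordinates. The same arithmetic can be tried on a single annotation using only the standard library. This is an illustrative sketch: the XML string is a made-up, trimmed annotation (real files carry more fields), and `normalized_boxes` is not part of the official script.

```python
import xml.etree.ElementTree as ET

# Hypothetical, trimmed-down annotation in the PASCAL VOC layout.
SAMPLE_XML = """<annotation>
  <filename>example.jpg</filename>
  <size><width>500</width><height>375</height><depth>3</depth></size>
  <object>
    <name>dog</name>
    <bndbox><xmin>100</xmin><ymin>75</ymin><xmax>250</xmax><ymax>300</ymax></bndbox>
  </object>
</annotation>"""


def normalized_boxes(xml_text):
    """Return one dict per object with box coordinates divided by the image size."""
    root = ET.fromstring(xml_text)
    width = int(root.find("size/width").text)
    height = int(root.find("size/height").text)
    boxes = []
    for obj in root.findall("object"):
        box = obj.find("bndbox")
        boxes.append({
            "name": obj.find("name").text,
            "xmin": float(box.find("xmin").text) / width,
            "ymin": float(box.find("ymin").text) / height,
            "xmax": float(box.find("xmax").text) / width,
            "ymax": float(box.find("ymax").text) / height,
        })
    return boxes


boxes = normalized_boxes(SAMPLE_XML)
print(boxes)
```

x coordinates are divided by the image width and y coordinates by the height, so every stored value falls between 0 and 1 regardless of image size.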