运用Keras框架对图书评论进行情感分析

写完毕业论文很久了,现在开始来写这篇博客

我的本科毕业论文是《融合图书评论情感分析、图书评分和用户评分的图书推荐系统》

其中一部分就运用到了自然语言处理中的情感分析,我用的是深度学习的方法解决,用的深度学习的Keras框架

语料数据来源于公开的ChineseNlpCorpus的数据集online_shopping_10_cats,截取其中的图书评论数据作为后面长短记忆神经网络的训练集。项目地址:https://github.com/liuhuanyong/ChineseNLPCorpus

1、情感分析的语料处理

在对图书评论的数据进行情感分析之前,去掉语料中的标点符号、特殊符号、英文字母、数字等没用的信息,这里使用的是正则表达式去除,运用jieba汉语分词库对评论文本进行分词。之后去掉一些停用词,使用的是哈工大的停用词库,将哈工大停用词库的数据读取成集合,每次对评论文本分词后的词语判断是否存在于停用词的集合当中,如果存在则去掉,否则进入下一步。最后形成一个常用词的词袋。

import collections

import pickle

import re

import jieba

# 数据过滤

def regex_filter(s_line):

# 剔除英文、数字,以及空格

special_regex = re.compile(r"[a-zA-Z0-9\s]+")

# 剔除英文标点符号和特殊符号

en_regex = re.compile(r"[.…{|}#$%&\'()*+,!-_./:~^;<=>?@★●,。]+")

# 剔除中文标点符号

zn_regex = re.compile(r"[《》、,“”;~?!:()【】]+")

s_line = special_regex.sub(r"", s_line)

s_line = en_regex.sub(r"", s_line)

s_line = zn_regex.sub(r"", s_line)

return s_line

# 加载停用词

def stopwords_list(file_path):

stopwords = [line.strip() for line in open(file_path, 'r', encoding='utf-8').readlines()]

return stopwords

#主函数开始

word_freqs = collections.Counter() # 词频

stopword = stopwords_list("stopWords.txt") #加载停用词

max_len = 0

with open("Corpus.txt", "r", encoding="utf-8",errors='ignore') as f:

for line in f:

comment , label = line.split(",")

sentence = comment.replace("\n","")

# 数据预处理

sentence = regex_filter(sentence) #去掉评论中的数字、字符、空格

words = jieba.cut(sentence) #使用jieba进行中文句子分词

x = 0

for word in words:

word_freqs[word] += 1

x += 1 #记录每个词语的词频

max_len = max(max_len, x) #将句子分词后,记录最长的句子长度

f.close() #关闭文件

with open("BookComments.txt", "r", encoding="utf-8",errors='ignore') as file:

for line in file:

line = line.replace("\n","")

bookid,cm = line.split(",")

comment_list = cm.split(";")

for comment in comment_list:

sentence = regex_filter(comment) #去掉评论中的数字、字符、空格

words = jieba.cut(sentence) #使用jieba进行中文句子分词

x = 0

for word in words:

word_freqs[word] += 1

x += 1 #记录每个词语的词频

max_len = max(max_len, x) #将句子分词后,记录最长的句子长度

file.close()

print(max_len)

print('nb_words ', len(word_freqs)) #输出词袋的大小

2、生成字典

对语料进行处理之后,对每个词语统计词频,取高频率的词,将每个高频词对应于唯一的一个数字编号,将字典写入文件中保存。

## 准备数据

MAX_FEATURES = 40000 # 最大词频数

vocab_size = min(MAX_FEATURES, len(word_freqs)) + 2

# 构建词频字典

word2index = {x[0]: i+2 for i, x in enumerate(word_freqs.most_common(MAX_FEATURES))}

word2index["PAD"] = 0 #增加一个"PAD"用于后面补0的词

word2index["UNK"] = 1 #增加一个"UNK"用于不在字典中的词

# 将字典写入文件中保存

with open('word_dict.pickle', 'wb') as handle:

pickle.dump(word2index, handle, protocol=pickle.HIGHEST_PROTOCOL)

3、构建LSTM模型

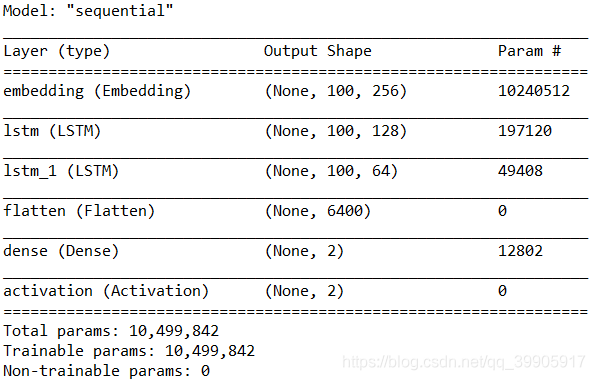

遍历数据集的每一条评论,将评论分词,之后去掉各种无用符号和常用词,通过上一节的字典,将词语序列转换成数字序列。之后划分训练集和测试集。先将数字序列通过Embedding嵌入层进行压缩,转变为词向量[12],之后构建隐藏层大小为128和64的LSTM,通过Flatten层将数据压平,进入Dense全连接层,最后进入激活层,之后构建模型,拟合数据。其中优化器选择adam,损失函数选择categorical_crossentropy。

#训练模型,找出模型的最佳迭代次数,即为4轮最佳

import pickle

from tensorflow.keras.layers import Flatten,Activation,Dense, SpatialDropout1D,Embedding,LSTM

from tensorflow.keras.models import Sequential

from tensorflow.keras.preprocessing import sequence

from sklearn.model_selection import train_test_split

from sklearn.metrics import accuracy_score, confusion_matrix, classification_report

import jieba #用来分词

import numpy as np

import pandas as pd

import matplotlib.pyplot as plt

#https://blog.csdn.net/qq_40663357/article/details/102382992?utm_source=app

# 加载分词字典

with open('word_dict.pickle', 'rb') as handle:

word2index = pickle.load(handle)

### 准备数据

MAX_FEATURES = 40002 # 最大词频数

MAX_SENTENCE_LENGTH = 100 # 句子最大长度

num_recs = 0 # 样本数

with open("Corpus.txt", "r", encoding="utf-8",errors='ignore') as f:

for line in f: #遍历数据集的每一行

num_recs += 1

f.close()

# 初始化句子数组和label数组

X = np.empty(num_recs,dtype=list)

y = np.zeros(num_recs)

i=0

with open("Corpus.txt", "r", encoding="utf-8",errors='ignore') as f:

for line in f:

comment , label = line.split(",")

sentence = comment.replace(' ', '')

words = jieba.cut(sentence)

seqs = []

for word in words:

# 在词频中

if word in word2index:

seqs.append(word2index[word])

else:

seqs.append(word2index["UNK"]) # 不在词频内的补为UNK

X[i] = seqs

y[i] = int(label)

i += 1

f.close()

# 把句子转换成数字序列,并对句子进行统一长度,长的截断,短的补0

X = sequence.pad_sequences(X, maxlen=MAX_SENTENCE_LENGTH)

# 使用pandas对label进行one-hot编码

y1 = pd.get_dummies(y).values

print(X.shape)

print(y1.shape)

# 数据划分

Xtrain, Xtest, ytrain, ytest = train_test_split(X, y1, test_size=0.3, random_state=0)

## 网络构建

EMBEDDING_SIZE = 256 # 词向量维度

HIDDEN_LAYER_SIZE = 128 # 隐藏层大小

BATCH_SIZE = 64 # 每批大小

NUM_EPOCHS = 10 # 训练周期数

# 创建一个实例

model = Sequential()

# 构建词向量

model.add(Embedding(MAX_FEATURES, EMBEDDING_SIZE,input_length=MAX_SENTENCE_LENGTH))

model.add(LSTM(HIDDEN_LAYER_SIZE, dropout=0.1, return_sequences=True))

model.add(LSTM(64, return_sequences=True))

#model.add(layers.Dropout(0.1))

model.add(Flatten())

model.add(Dense(2)) #[0, 1] or [1, 0]

model.add(Activation('softmax'))

model.compile(loss='categorical_crossentropy', optimizer='adam',metrics=['accuracy'])

model.summary()

history=model.fit(Xtrain, ytrain, epochs=10, batch_size=BATCH_SIZE, validation_data=(Xtest, ytest))

model.save('my_model.h5')

acc = history.history['accuracy']

val_acc = history.history['val_accuracy']

loss = history.history['loss']

val_loss = history.history['val_loss']

epochs = range(1, len(acc) + 1)

plt.plot(epochs, acc, 'bo', label='Training acc')

plt.plot(epochs, val_acc, 'b', label='Validation acc')

plt.title('Training and validation accuracy')

plt.legend()

plt.figure()

plt.plot(epochs, loss, 'bo', label='Training loss')

plt.plot(epochs, val_loss, 'b', label='Validation loss')

plt.title('Training and validation loss')

plt.legend()

plt.show()