Task 1: Introduction and Word Vectors (with code) (Stanford CS224N NLP with Deep Learning Winter 2019)

Task 1: Introduction and Word Vectors

Contents

- Task 1: Introduction and Word Vectors

- Lecture Plan

- 1. How do we represent the meaning of a word?

- How do we have usable meaning in a computer?

- Problems with resources like WordNet

- Representing words as discrete symbols

- Problem with words as discrete symbols

- Representing words by their context

- Word vectors

- Word meaning as a neural word vector – visualization

- 2. Word2vec: Overview

- Word2vec: objective function

- Word2Vec Overview with Vectors

- Word2vec: prediction function

- Training a model by optimizing parameters

- To train the model: Compute all vector gradients!

- 3. Word2vec derivations of gradient

- Chain Rule

- Interactive Whiteboard Session!

- Whiteboard derivation

- Calculating all gradients!

- Word2vec: More details

- 4. Optimization: Gradient Descent

- Gradient Descent

- Stochastic Gradient Descent

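- Hands-on practice

- References

Theory

- The subject matter of NLP research

- How to represent the meaning of a word

- The basic principles of the Word2Vec method

School: Stanford

Teacher: Prof. Christopher Manning

Library: PyTorch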

Lecture Plan

- The course (10 mins)

- Human language and word meaning (15 mins)

- Word2vec introduction (15 mins)

- Word2vec objective function gradients (25 mins)

- Optimization basics (5 mins)

- Looking at word vectors (10 mins or less)

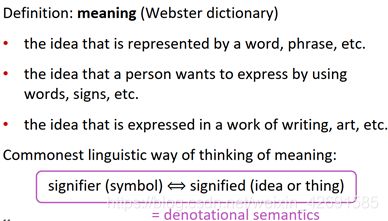

1. How do we represent the meaning of a word?

How do we have usable meaning in a computer?

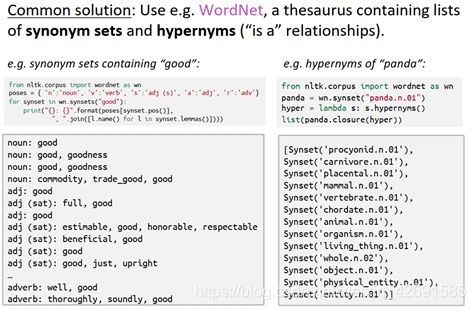

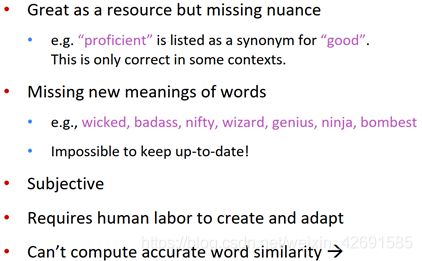

Problems with resources like WordNet

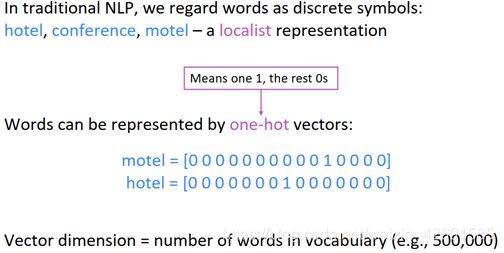

Representing words as discrete symbols

Problem with words as discrete symbols

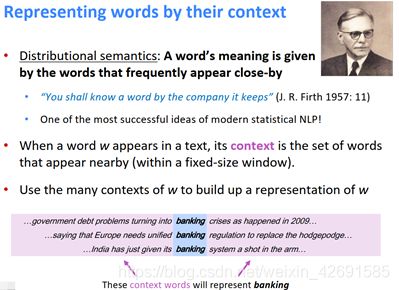
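As a minimal sketch of the problem named in this heading, the snippet below builds one-hot vectors over a hypothetical three-word vocabulary (the words and helper names are illustrative assumptions, not taken from the slides). It shows that any two distinct one-hot vectors have a dot product of zero, so the representation carries no notion of similarity.

```python
import numpy as np

# Hypothetical toy vocabulary, chosen only for illustration
vocab = ["motel", "hotel", "banana"]
word_to_index = {word: i for i, word in enumerate(vocab)}

def one_hot(word):
    """Return the one-hot vector for `word` over the toy vocabulary."""
    vec = np.zeros(len(vocab))
    vec[word_to_index[word]] = 1.0
    return vec

motel, hotel = one_hot("motel"), one_hot("hotel")

# Distinct one-hot vectors are orthogonal: their dot product is always 0,
# no matter how related the two words are in meaning
similarity = float(motel @ hotel)
print(similarity)
```

Dense word vectors avoid this by letting related words point in similar directions, which is what the following sections build towards.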

Representing words by their context

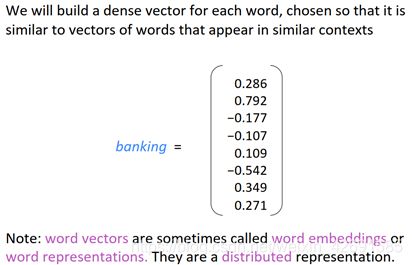

Word vectors

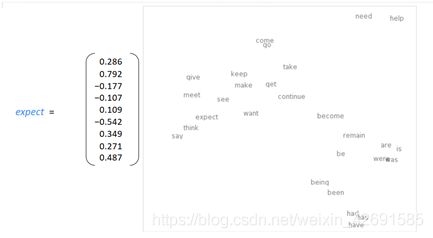

Word meaning as a neural word vector – visualization

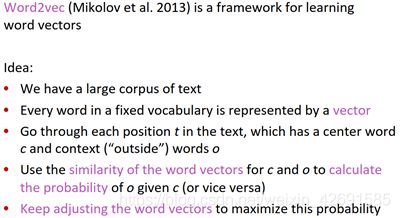

2. Word2vec: Overview

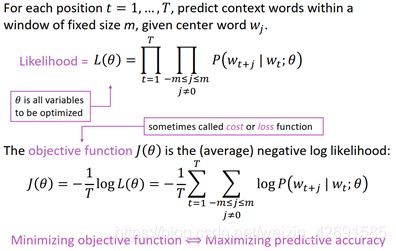

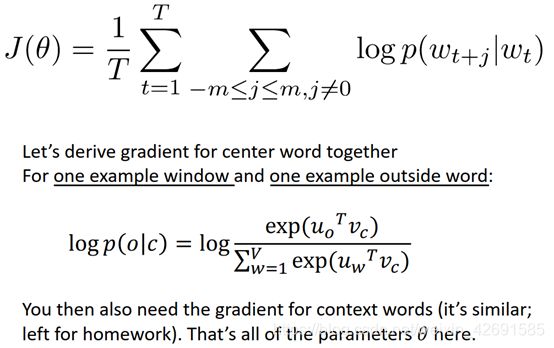

Word2vec: objective function

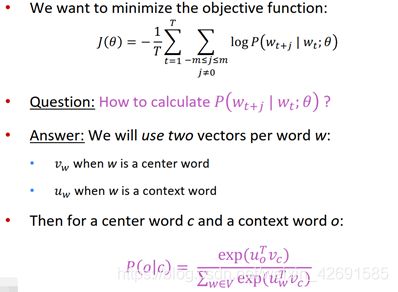
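For reference, the skip-gram objective is usually written as below. This is the standard formulation, stated here on the assumption that the slide uses the same notation: $T$ is the corpus length, $m$ the window size, $w_t$ the word at position $t$, and $\theta$ all model parameters.

```latex
% Likelihood: probability of every context word within a window of size m,
% given the center word at each position t
L(\theta) = \prod_{t=1}^{T} \prod_{\substack{-m \le j \le m \\ j \ne 0}} P(w_{t+j} \mid w_t ; \theta)

% Objective: average negative log-likelihood, to be minimized
J(\theta) = -\frac{1}{T} \log L(\theta)
          = -\frac{1}{T} \sum_{t=1}^{T} \sum_{\substack{-m \le j \le m \\ j \ne 0}} \log P(w_{t+j} \mid w_t ; \theta)
```

Minimizing $J(\theta)$ is equivalent to maximizing the likelihood $L(\theta)$.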

Word2Vec Overview with Vectors

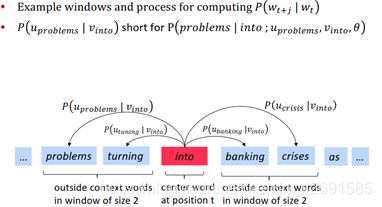

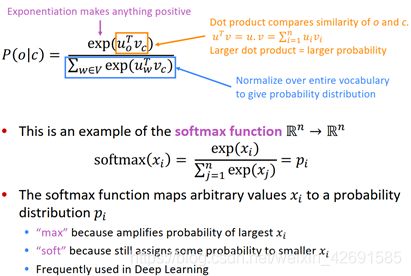

Word2vec: prediction function

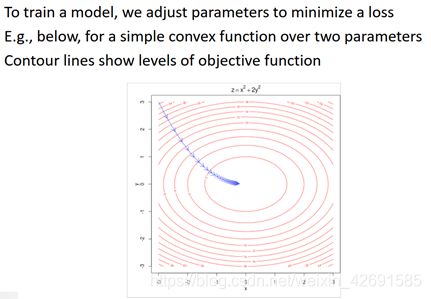

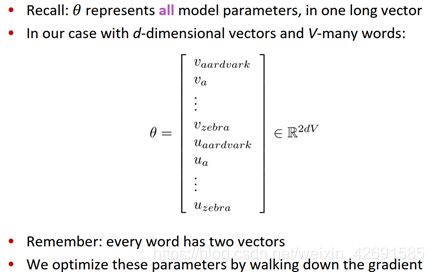
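The prediction function can be sketched in NumPy as a softmax over dot products between a center vector and every context vector. The matrices `U` and `V`, the vocabulary size, and the dimension below are random placeholders assumed for illustration only, not trained values.

```python
import numpy as np

def softmax(scores):
    """Numerically stable softmax over a 1-D array of scores."""
    shifted = scores - np.max(scores)  # subtracting the max avoids overflow
    exp_scores = np.exp(shifted)
    return exp_scores / exp_scores.sum()

rng = np.random.default_rng(0)
vocab_size, dim = 5, 3                    # hypothetical sizes
U = rng.normal(size=(vocab_size, dim))    # context ("outside") vectors u_w
V = rng.normal(size=(vocab_size, dim))    # center vectors v_w

center = 2                                # index of the center word c
# P(o | c) for every candidate context word o: softmax of u_o . v_c
probs = softmax(U @ V[center])
print(probs)
```

The exponential makes every score positive, and the normalization turns the scores into a probability distribution over the vocabulary.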

Training a model by optimizing parameters

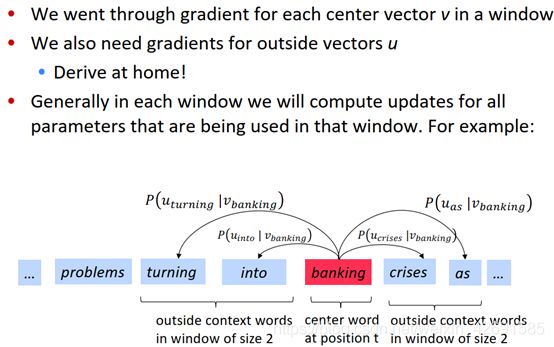

To train the model: Compute all vector gradients!

3. Word2vec derivations of gradient

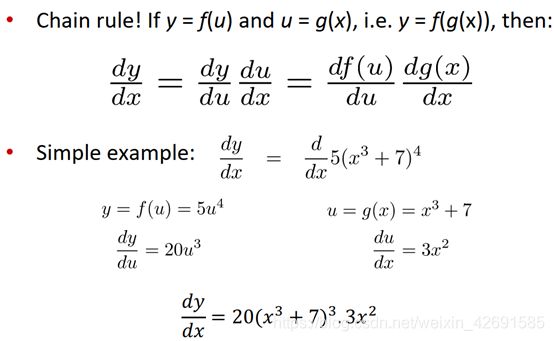

Chain Rule

Interactive Whiteboard Session!

Whiteboard derivation
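A sketch of the derivation for the center vector, assuming the softmax form $P(o \mid c) = \exp(u_o^\top v_c) / \sum_{w \in V} \exp(u_w^\top v_c)$, with $u_w$ the context vectors, $v_c$ the center vector, and $V$ the vocabulary:

```latex
\log P(o \mid c) = u_o^\top v_c - \log \sum_{w \in V} \exp(u_w^\top v_c)

% First term: the derivative of a dot product with respect to v_c
\frac{\partial}{\partial v_c} \, u_o^\top v_c = u_o

% Second term: chain rule through log, then through exp
\frac{\partial}{\partial v_c} \log \sum_{w \in V} \exp(u_w^\top v_c)
  = \frac{\sum_{x \in V} \exp(u_x^\top v_c) \, u_x}{\sum_{w \in V} \exp(u_w^\top v_c)}
  = \sum_{x \in V} P(x \mid c) \, u_x

% Result: observed context vector minus the expected context vector
\frac{\partial}{\partial v_c} \log P(o \mid c) = u_o - \sum_{x \in V} P(x \mid c) \, u_x
```

The gradient vanishes when the model's expected context vector matches the observed one.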

Calculating all gradients!

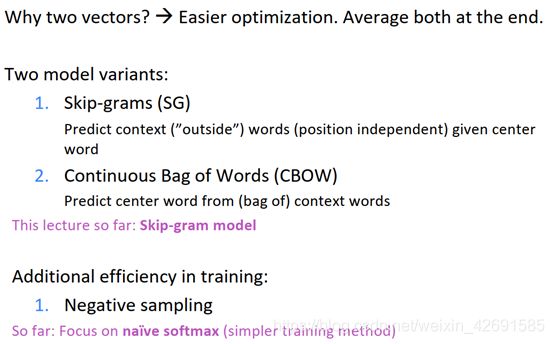
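One way to sanity-check a hand-derived gradient is to compare it with a finite-difference estimate. The sketch below does this for the center-vector gradient of $\log P(o \mid c)$, namely $u_o - \sum_x P(x \mid c)\,u_x$. The function names, sizes, and random vectors are assumptions made for illustration.

```python
import numpy as np

def log_prob(U, v_c, o):
    """log P(o | c) under the softmax model, computed stably."""
    scores = U @ v_c
    m = scores.max()
    return scores[o] - (m + np.log(np.exp(scores - m).sum()))

def grad_center(U, v_c, o):
    """Analytic gradient of log P(o | c) w.r.t. v_c: u_o - sum_x P(x|c) u_x."""
    scores = U @ v_c
    probs = np.exp(scores - scores.max())
    probs /= probs.sum()
    return U[o] - probs @ U

rng = np.random.default_rng(0)
U = rng.normal(size=(6, 4))   # hypothetical context vectors (6 words, 4 dims)
v_c = rng.normal(size=4)      # hypothetical center vector
o = 3                         # index of the observed context word

analytic = grad_center(U, v_c, o)

# Central finite differences, one coordinate at a time
eps = 1e-6
numeric = np.zeros_like(v_c)
for i in range(v_c.size):
    step = np.zeros_like(v_c)
    step[i] = eps
    numeric[i] = (log_prob(U, v_c + step, o) - log_prob(U, v_c - step, o)) / (2 * eps)

max_abs_diff = float(np.max(np.abs(analytic - numeric)))
print(max_abs_diff)
```

A very small difference indicates that the analytic formula and the numerical estimate agree.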

Word2vec: More details

4. Optimization: Gradient Descent

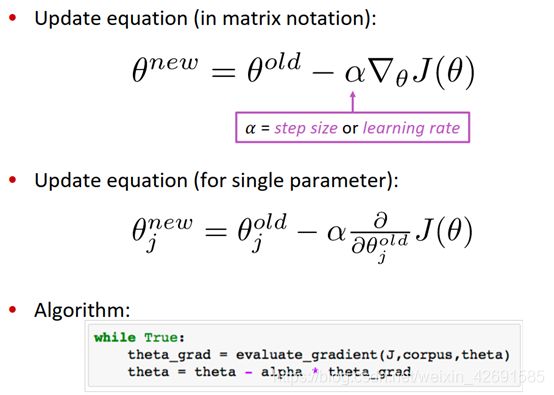

Gradient Descent
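The update rule $\theta^{new} = \theta^{old} - \alpha \nabla_\theta J(\theta)$ can be sketched on a toy objective. The quadratic `J`, the step size `alpha`, and the iteration count below are illustrative assumptions, not the word2vec loss.

```python
import numpy as np

def J(theta):
    """Hypothetical toy objective with its minimum at theta = (3, 3)."""
    return float(np.sum((theta - 3.0) ** 2))

def grad_J(theta):
    """Gradient of the toy objective."""
    return 2.0 * (theta - 3.0)

theta = np.zeros(2)   # initial parameters
alpha = 0.1           # step size (learning rate)

for _ in range(100):
    # Take a small step in the direction of the negative gradient
    theta = theta - alpha * grad_J(theta)

print(theta, J(theta))
```

For word2vec, `theta` would hold all center and context vectors, and computing the full gradient would require a pass over every window in the corpus.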

Stochastic Gradient Descent
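Stochastic gradient descent replaces the full-corpus gradient with the gradient from one sampled example (for word2vec, one sampled window) and updates after each sample. The sketch below shows the idea on a hypothetical noise-free least-squares problem, swapped in for the word2vec loss to keep the example short. The data, step size, and step count are assumptions.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical noise-free toy data: y = X @ true_w
true_w = np.array([2.0, -1.0])
X = rng.normal(size=(200, 2))
y = X @ true_w

w = np.zeros(2)   # parameters to learn
alpha = 0.05      # step size (learning rate)

for step in range(2000):
    i = rng.integers(len(X))       # sample a single training example
    error = X[i] @ w - y[i]
    grad = 2.0 * error * X[i]      # gradient of (x_i . w - y_i)^2 w.r.t. w
    w = w - alpha * grad           # update immediately after each sample

print(w)
```

Each update is a noisy estimate of the full gradient, but it is far cheaper to compute, so many more updates fit in the same amount of time.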

Hands-on practice

# Gensim word vector visualization of various word vectors
import numpy as np

# Matplotlib for plotting
import matplotlib.pyplot as plt
plt.style.use('ggplot')

from sklearn.manifold import TSNE
from sklearn.decomposition import PCA

from gensim.test.utils import datapath, get_tmpfile
from gensim.models import KeyedVectors
from gensim.scripts.glove2word2vec import glove2word2vec

# Convert the GloVe file format to the word2vec file format
# (raw strings keep the Windows backslashes literal)
glove_file = datapath(r'D:\Python-text\nlp_text\nlp_datawhale\task01\glove.6B\glove.6B.100d.txt')
word2vec_glove_file = get_tmpfile(r"D:\Python-text\nlp_text\nlp_datawhale\task01\glove.6B\glove.6B.100d.word2vec.txt")
print(glove2word2vec(glove_file, word2vec_glove_file))

# Load the pretrained word vector model
model = KeyedVectors.load_word2vec_format(word2vec_glove_file)

# Words most similar to "obama"

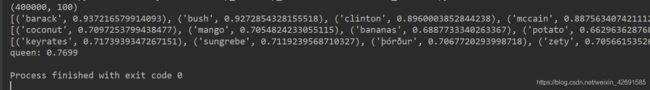

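print(model.most_similar('obama'))

# Words most similar to "banana"
print(model.most_similar('banana'))

# Words least similar to "banana"; pass a list, because gensim 3.x iterates
# over a bare string given as `negative` character by character
print(model.most_similar(negative=['banana']))

# Analogy: man is to king as woman is to ?
result = model.most_similar(positive=['woman', 'king'], negative=['man'])
print("{}: {:.4f}".format(*result[0]))

def analogy(x1, x2, y1):
    """Return the word y2 that best completes x1 : x2 :: y1 : y2."""
    result = model.most_similar(positive=[y1, x2], negative=[x1])
    return result[0][0]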

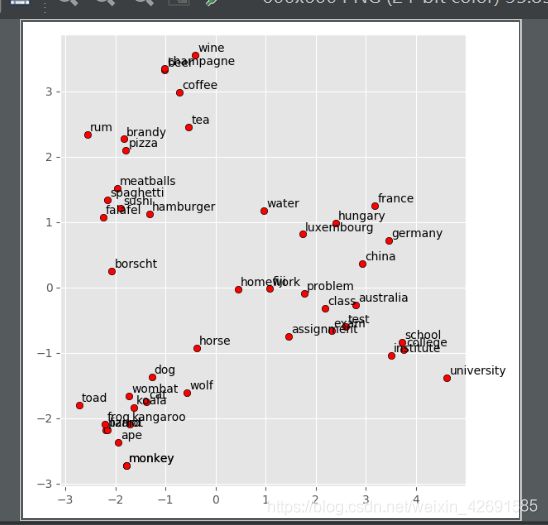

# Scatter plot visualization of neural word vectors
def display_pca_scatterplot(model, words=None, sample=0):
    if words is None:
        # model.vocab is the gensim 3.x API; gensim 4.x uses model.key_to_index
        if sample > 0:
            words = np.random.choice(list(model.vocab.keys()), sample)
        else:
            words = [word for word in model.vocab]

    word_vectors = np.array([model[w] for w in words])

    # Project the vectors onto their first two principal components
    twodim = PCA().fit_transform(word_vectors)[:, :2]

    plt.figure(figsize=(6, 6))
    plt.scatter(twodim[:, 0], twodim[:, 1], edgecolors='k', c='r')
    for word, (x, y) in zip(words, twodim):
        plt.text(x + 0.05, y + 0.05, word)

display_pca_scatterplot(model,
                        ['coffee', 'tea', 'beer', 'wine', 'brandy', 'rum', 'champagne', 'water',
                         'spaghetti', 'borscht', 'hamburger', 'pizza', 'falafel', 'sushi', 'meatballs',
                         'dog', 'horse', 'cat', 'monkey', 'parrot', 'koala', 'lizard',
                         'frog', 'toad', 'monkey', 'ape', 'kangaroo', 'wombat', 'wolf',
                         'france', 'germany', 'hungary', 'luxembourg', 'australia', 'fiji', 'china',
                         'homework', 'assignment', 'problem', 'exam', 'test', 'class',
                         'school', 'college', 'university', 'institute'])
plt.show()

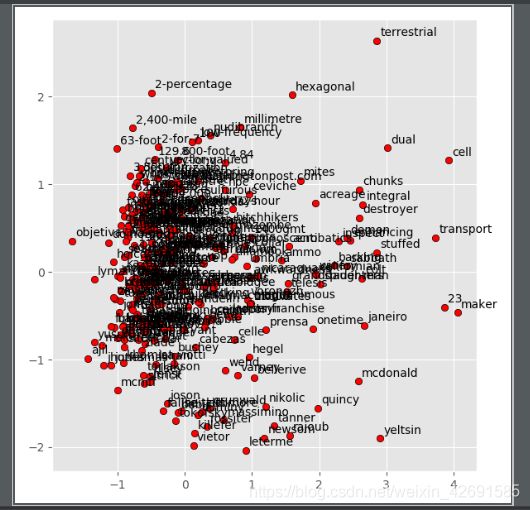

# Scatter plot of 300 randomly sampled words

display_pca_scatterplot(model, sample=300)

plt.show()

References

Stanford CS224N 2019 course page: https://web.stanford.edu/class/archive/cs/cs224n/cs224n.1194/

Bilibili video: https://www.bilibili.com/video/BV1s4411N7fC?p=2