TensorFlow RNN in Practice

RNN Principles Review

# Review of how a simple RNN works

h[t] = fw(h[t-1], x[t]) #fw is some function with parameters W

h[t] = tanh(W[h,h]*h[t-1] + W[x,h]*x[t]) #to be specific

y[t] = W[h,y]*h[t]
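To make the recurrence above concrete, here is a minimal NumPy sketch of the forward pass. The function name `rnn_forward`, the tensor sizes, and the random weights are illustrative assumptions, not part of the original post; bias terms are omitted because the formulas above have none.

```python
import numpy as np

def rnn_forward(xs, W_hh, W_xh, W_hy, h0):
    """Run a simple RNN over one sequence.

    xs: [T, input_dim], W_hh: [hidden, hidden], W_xh: [hidden, input_dim],
    W_hy: [out, hidden], h0: [hidden]. Returns all hidden states and outputs.
    """
    h = h0
    hs, ys = [], []
    for x_t in xs:
        # h[t] = tanh(W[h,h]*h[t-1] + W[x,h]*x[t])
        h = np.tanh(W_hh @ h + W_xh @ x_t)
        # y[t] = W[h,y]*h[t]
        ys.append(W_hy @ h)
        hs.append(h)
    return np.stack(hs), np.stack(ys)

rng = np.random.default_rng(0)
T, input_dim, hidden_dim, out_dim = 5, 3, 4, 2  # illustrative sizes only
xs = rng.normal(size=(T, input_dim))
W_hh = rng.normal(size=(hidden_dim, hidden_dim)) * 0.1
W_xh = rng.normal(size=(hidden_dim, input_dim)) * 0.1
W_hy = rng.normal(size=(out_dim, hidden_dim)) * 0.1

hs, ys = rnn_forward(xs, W_hh, W_xh, W_hy, np.zeros(hidden_dim))
print(hs.shape, ys.shape)  # (5, 4) (5, 2)
```

The same weights are reused at every time step; only the hidden state `h` changes as the sequence is consumed.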

RNN in Practice: Sentiment Classification

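import os

# Must be set before TensorFlow is imported to silence its C++ info/warning logs.
os.environ['TF_CPP_MIN_LOG_LEVEL'] = '2'

import numpy as np
import tensorflow as tf
from tensorflow import keras
from tensorflow.keras import layers

# Fix the random seeds so that runs are repeatable.
tf.random.set_seed(22)
np.random.seed(22)
assert tf.__version__.startswith('2.')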

batchsz = 128  # batch size
total_words = 10000  # vocabulary size
max_review_len = 80  # length of each review (longer ones are truncated, shorter ones padded)
embedding_len = 100  # embedding vector length

(x_train, y_train), (x_test, y_test) = keras.datasets.imdb.load_data(num_words=total_words)
# After padding, x_train: [b, 80], x_test: [b, 80]
x_train = keras.preprocessing.sequence.pad_sequences(x_train, maxlen=max_review_len)
x_test = keras.preprocessing.sequence.pad_sequences(x_test, maxlen=max_review_len)
db_train = tf.data.Dataset.from_tensor_slices((x_train, y_train))
# The last batch may be smaller than batchsz, so drop it.
db_train = db_train.shuffle(1000).batch(batchsz, drop_remainder=True)
db_test = tf.data.Dataset.from_tensor_slices((x_test, y_test))
db_test = db_test.batch(batchsz, drop_remainder=True)

class MyRNN(keras.Model):

    def __init__(self, units):
        super(MyRNN, self).__init__()
        # [b, 80] => [b, 80, 100], embedding operation
        self.embedding = layers.Embedding(total_words, embedding_len, input_length=max_review_len)
        # [b, 80, 100], h_dim: 64
        # unroll=True unrolls the time loop for speed (at the cost of memory).
        # return_sequences=True makes the first layer output its hidden state at
        # every time step, so the second layer receives a full sequence as input.
        self.rnn = keras.Sequential([
            layers.SimpleRNN(units, dropout=0.5, return_sequences=True, unroll=True),
            layers.SimpleRNN(units, dropout=0.5, unroll=True)
        ])
        # fc, [b, 80, 100] => [b, 64] => [b, 1]
        self.outlayer = layers.Dense(1)

    def call(self, inputs, training=None):
        # net(x, training=True): train mode
        # net(x, training=False): test mode
        # input: [b, 80]
        x = inputs
        # embedding: [b, 80] => [b, 80, 100]
        x = self.embedding(x)
        # x: [b, 80, 100] => [b, 64]
        x = self.rnn(x, training=training)
        # out: [b, 64] => [b, 1]
        x = self.outlayer(x)
        # p(y is pos|x)
        prob = tf.sigmoid(x)
        return prob

def main():
    units = 64  # number of RNN units (hidden state size)
    epochs = 10  # number of training epochs
    model = MyRNN(units)
    model.compile(optimizer=keras.optimizers.Adam(0.001),
                  loss=tf.losses.BinaryCrossentropy(),
                  metrics=['accuracy'])
    model.fit(db_train, epochs=epochs, validation_data=db_test)  # training
    model.evaluate(db_test)  # test


if __name__ == '__main__':
    main()
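The script fixes every review to `max_review_len` tokens by truncating long reviews and padding short ones. As a rough illustration of what that step does, the NumPy sketch below mimics the default behavior of `pad_sequences` (zeros added at the front, excess tokens dropped from the front). The helper name `pad_or_truncate` and the toy sequences are hypothetical and only serve the example.

```python
import numpy as np

def pad_or_truncate(seqs, maxlen, value=0):
    """Pre-pad short sequences and pre-truncate long ones to exactly maxlen."""
    out = np.full((len(seqs), maxlen), value, dtype=np.int32)
    for i, seq in enumerate(seqs):
        if len(seq) == 0:
            continue  # an empty sequence stays all padding
        kept = seq[-maxlen:]        # keep only the last maxlen tokens
        out[i, -len(kept):] = kept  # right-align, so padding sits on the left
    return out

reviews = [[5, 8, 2], [1, 2, 3, 4, 5, 6]]  # toy word-index sequences
print(pad_or_truncate(reviews, maxlen=4))
# [[0 5 8 2]
#  [3 4 5 6]]
```

Keeping the end of each review means the RNN always reads the most recent tokens last, right before the final hidden state is handed to the classifier.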

Gradient Clipping (Preventing Exploding Gradients)

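# model, x, y, criteon (the loss function) and optimizer are assumed to be defined elsewhere.
with tf.GradientTape() as tape:
    logits = model(x)
    loss = criteon(y, logits)
grads = tape.gradient(loss, model.trainable_variables)
grads = [tf.clip_by_norm(g, 15) for g in grads]  # clip each gradient tensor to an L2 norm of at most 15
optimizer.apply_gradients(zip(grads, model.trainable_variables))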
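To show what the clipping step computes, here is a small NumPy sketch of per-tensor norm clipping, the operation `tf.clip_by_norm` performs, next to joint clipping in the style of `tf.clip_by_global_norm`. The NumPy functions and the toy gradients are illustrative stand-ins written for this example, not the TensorFlow implementations.

```python
import numpy as np

def clip_by_norm(g, clip_norm):
    """Rescale g so that its L2 norm is at most clip_norm."""
    norm = np.linalg.norm(g)
    if norm <= clip_norm:
        return g
    return g * (clip_norm / norm)

def clip_by_global_norm(grads, clip_norm):
    """Rescale all gradients by one shared factor based on their joint L2 norm."""
    global_norm = np.sqrt(sum(np.sum(g ** 2) for g in grads))
    scale = clip_norm / max(global_norm, clip_norm)
    return [g * scale for g in grads], global_norm

grads = [np.array([30.0, 40.0]), np.array([3.0, 4.0])]  # L2 norms 50 and 5

# Per-tensor clipping: only the first gradient exceeds 15 and is scaled down.
per_tensor = [clip_by_norm(g, 15) for g in grads]
print([float(np.linalg.norm(g)) for g in per_tensor])

# Joint clipping: both gradients shrink by the same factor.
joint, gnorm = clip_by_global_norm(grads, 15)
print(float(gnorm), [float(np.linalg.norm(g)) for g in joint])
```

Per-tensor clipping changes the relative scale between gradient tensors, while joint clipping keeps the direction of the overall update and only shortens it. Which one suits a given model is a judgment call.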

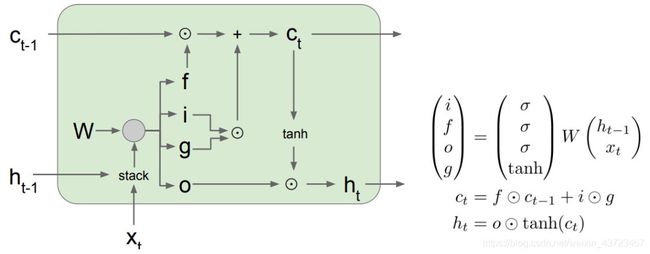

LSTM (Mitigating Vanishing Gradients): Principles

There are three gates: the forget gate $f$, the input gate $i$, and the output gate $o$. For the input and output variables: $c_{t-1}$ is the incoming memory (a new addition, introduced to counter vanishing gradients and strengthen the network's ability to remember), $x_t$ is the input, $h_{t-1}$ is the output of the previous time step, and $c_t$ is the memory passed on to the next time step.

- Forget gate: $f_t = \sigma(W_f \times [h_{t-1}, x_t] + b_f)$

- Input gate: $i_t = \sigma(W_i \times [h_{t-1}, x_t] + b_i)$

- Output gate: $o_t = \sigma(W_o \times [h_{t-1}, x_t] + b_o)$

- Filtered input: $\widetilde{c_t} = \tanh(W_c \times [h_{t-1}, x_t] + b_c)$, whose result is the filtered input

The new memory is therefore "the part of the previous memory that the forget gate keeps" plus "the part of the new filtered input that the input gate keeps", that is, $c_t = f_t \times c_{t-1} + i_t \times \widetilde{c_t}$. The new output $h$ is what the output gate keeps of the new memory after it has passed through tanh, that is, $h_t = o_t \times \tanh(c_t)$. In these two formulas $\times$ denotes an element-wise product.
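The gate equations above can be sketched as a single LSTM time step in NumPy. The function name `lstm_step`, the `params` dictionary layout, and the sizes are assumptions made for illustration; Keras stores its LSTM weights in a different, fused layout.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def lstm_step(x_t, h_prev, c_prev, params):
    """One LSTM time step following the gate equations in this section."""
    z = np.concatenate([h_prev, x_t])                     # [h_{t-1}, x_t]
    f_t = sigmoid(params["W_f"] @ z + params["b_f"])      # forget gate
    i_t = sigmoid(params["W_i"] @ z + params["b_i"])      # input gate
    o_t = sigmoid(params["W_o"] @ z + params["b_o"])      # output gate
    c_tilde = np.tanh(params["W_c"] @ z + params["b_c"])  # filtered input
    c_t = f_t * c_prev + i_t * c_tilde                    # new memory (element-wise)
    h_t = o_t * np.tanh(c_t)                              # new output (element-wise)
    return h_t, c_t

rng = np.random.default_rng(0)
input_dim, hidden_dim = 3, 4  # illustrative sizes only
params = {}
for gate in "fioc":
    params[f"W_{gate}"] = rng.normal(size=(hidden_dim, hidden_dim + input_dim)) * 0.1
    params[f"b_{gate}"] = np.zeros(hidden_dim)

h, c = np.zeros(hidden_dim), np.zeros(hidden_dim)
for x_t in rng.normal(size=(5, input_dim)):
    h, c = lstm_step(x_t, h, c, params)
print(h.shape, c.shape)  # (4,) (4,)
```

Note that the memory update is additive: when $f_t$ is close to 1, $c_{t-1}$ passes through almost unchanged, which is what lets information and gradients survive over many time steps.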

LSTM in Practice

The implementation is the same as the RNN one above; only the two layer lines need to change.

class MyLSTM(keras.Model):

    def __init__(self, units):
        super(MyLSTM, self).__init__()
        # [b, 80] => [b, 80, 100], embedding operation
        self.embedding = layers.Embedding(total_words, embedding_len, input_length=max_review_len)
        # [b, 80, 100], h_dim: 64
        # unroll=True unrolls the time loop for speed (at the cost of memory).
        # return_sequences=True makes the first layer output its hidden state at
        # every time step, so the second layer receives a full sequence as input.
        self.lstm = keras.Sequential([
            layers.LSTM(units, dropout=0.5, return_sequences=True, unroll=True),
            layers.LSTM(units, dropout=0.5, unroll=True)
        ])
        # fc, [b, 80, 100] => [b, 64] => [b, 1]
        self.outlayer = layers.Dense(1)

    def call(self, inputs, training=None):
        # net(x, training=True): train mode
        # net(x, training=False): test mode
        # input: [b, 80]
        x = inputs
        # embedding: [b, 80] => [b, 80, 100]
        x = self.embedding(x)
        # x: [b, 80, 100] => [b, 64]
        x = self.lstm(x, training=training)
        # out: [b, 64] => [b, 1]
        x = self.outlayer(x)
        # p(y is pos|x)
        prob = tf.sigmoid(x)
        return prob
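Swapping `SimpleRNN` for `LSTM` changes only two lines, but it roughly quadruples the recurrent weights, because each LSTM layer holds four weight sets (three gates plus the filtered input) where the simple RNN holds one. The back-of-the-envelope sketch below assumes each layer uses a bias, as Keras does by default; the helper names are hypothetical.

```python
def simple_rnn_params(input_dim, units):
    # input kernel [input_dim, units] + recurrent kernel [units, units] + bias [units]
    return units * (input_dim + units + 1)

def lstm_params(input_dim, units):
    # four such weight sets: forget gate, input gate, output gate, filtered input
    return 4 * simple_rnn_params(input_dim, units)

embedding_len, units = 100, 64  # values used in this post
for name, count in [("SimpleRNN", simple_rnn_params), ("LSTM", lstm_params)]:
    first = count(embedding_len, units)  # first layer reads the embeddings
    second = count(units, units)         # second layer reads the first layer's outputs
    print(name, first, second)
# SimpleRNN 10560 8256
# LSTM 42240 33024
```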
