pytorch 实现RNN文本分类

除了模型不一样,其他部分和TextCNN实现过程都是一样的,可以参考下面的链接。这里就不详细解释了。

pytorch 实现 textCNN.

导入包

import torch

import torch.nn as nn

import torch.nn.functional as F

import pandas as pd

import jieba

import os

from torch.nn import init

from torchtext import data

from torchtext.vocab import Vectors

import time

数据预处理

# 分词

def tokenizer(text):

return [word for word in jieba.lcut(text) if word not in stop_words]

# 去停用词

def get_stop_words():

file_object = open('data/stopwords.txt',encoding='utf-8')

stop_words = []

for line in file_object.readlines():

line = line[:-1]

line = line.strip()

stop_words.append(line)

return stop_words

stop_words = get_stop_words() # 加载停用词表

text = data.Field(sequential=True,

lower=True,

tokenize=tokenizer,

stop_words=stop_words)

label = data.Field(sequential=False)

train, val = data.TabularDataset.splits(

path='data/',

skip_header=True,

train='train.tsv',

validation='validation.tsv',

format='tsv',

fields=[('index', None), ('label', label), ('text', text)],

)

加载词向量

import gensim

model = gensim.models.KeyedVectors.load_word2vec_format('data/myvector.vector', binary=False)

cache = 'data/.vector_cache'

if not os.path.exists(cache):

os.mkdir(cache)

vectors = Vectors(name='data/myvector.vector', cache=cache)

# 指定Vector缺失值的初始化方式,没有命中的token的初始化方式

#vectors.unk_init = nn.init.xavier_uniform_ 加上这句会报错

text.build_vocab(train, val, vectors=vectors)#加入测试集的vertor

label.build_vocab(train, val)

embedding_dim = text.vocab.vectors.size()[-1]

vectors = text.vocab.vectors

构造迭代器

batch_size=128

train_iter, val_iter = data.Iterator.splits(

(train, val),

sort_key=lambda x: len(x.text),

batch_sizes=(batch_size, len(val)), # 训练集设置batch_size,验证集整个集合用于测试

)

vocab_size = len(text.vocab)

label_num = len(label.vocab)

模型

class BiRNN(nn.Module):

def __init__(self, vocab_size, embedding_dim, num_hiddens, num_layers):

super(BiRNN, self).__init__()

self.word_embeddings = nn.Embedding(vocab_size, embedding_dim) # embedding之后的shape: torch.Size([200, 8, 300])

self.word_embeddings = self.word_embeddings.from_pretrained(vectors, freeze=False)

# bidirectional设为True即得到双向循环神经网络

self.encoder = nn.LSTM(input_size=embedding_dim,

hidden_size=num_hiddens,

num_layers=num_layers,

batch_first=True,

bidirectional=True)

# 初始时间步和最终时间步的隐藏状态作为全连接层输入

self.decoder = nn.Linear(2*num_hiddens, 2)

def forward(self, inputs):

# 输入x的维度为(batch_size, max_len), max_len可以通过torchtext设置或自动获取为训练样本的最大长度

embeddings = self.word_embeddings(inputs)

# 经过embedding,x的维度为(batch_size, time_step, input_size=embedding_dim)

# rnn.LSTM只传入输入embeddings,因此只返回最后一层的隐藏层在各时间步的隐藏状态。

# outputs形状是(batch_size, seq_length, hidden_size*2)

outputs, _ = self.encoder(embeddings) # output, (h, c)

# 我们只需要最后一步的输出,即(batch_size, -1, output_size)

outs = self.decoder(outputs[:, -1, :])

return outs

打印出来看看。

embedding_dim, num_hiddens, num_layers = 100, 64, 1

net = BiRNN(vocab_size, embedding_dim, num_hiddens, num_layers)

print(net)

训练

计算准确率的函数。

def evaluate_accuracy(data_iter, net):

acc_sum, n = 0.0, 0

with torch.no_grad():

for batch_idx, batch in enumerate(train_iter):

X, y = batch.text, batch.label

X = X.permute(1, 0)

y.data.sub_(1) #X转置 y为啥要减1

if isinstance(net, torch.nn.Module):

net.eval() # 评估模式, 这会关闭dropout

acc_sum += (net(X).argmax(dim=1) == y).float().sum().item()

net.train() # 改回训练模式

else: # 自定义的模型, 3.13节之后不会用到, 不考虑GPU

if('is_training' in net.__code__.co_varnames): # 如果有is_training这个参数

# 将is_training设置成False

acc_sum += (net(X, is_training=False).argmax(dim=1) == y).float().sum().item()

else:

acc_sum += (net(X).argmax(dim=1) == y).float().sum().item()

n += y.shape[0]

return acc_sum / n

这个几乎是通用的。

def train(train_iter, test_iter, net, loss, optimizer, num_epochs):

batch_count = 0

for epoch in range(num_epochs):

train_l_sum, train_acc_sum, n, start = 0.0, 0.0, 0, time.time()

for batch_idx, batch in enumerate(train_iter):

X, y = batch.text, batch.label

X = X.permute(1, 0)

y.data.sub_(1) #X转置 y为啥要减1

y_hat = net(X)

l = loss(y_hat, y)

optimizer.zero_grad()

l.backward()

optimizer.step()

train_l_sum += l.item()

train_acc_sum += (y_hat.argmax(dim=1) == y).sum().item()

n += y.shape[0]

batch_count += 1

test_acc = evaluate_accuracy(test_iter, net)

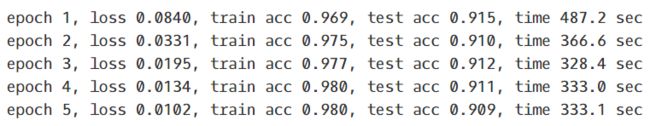

print(

'epoch %d, loss %.4f, train acc %.3f, test acc %.3f, time %.1f sec'

% (epoch + 1, train_l_sum / batch_count, train_acc_sum / n,

test_acc, time.time() - start))

训练过程也是。

lr, num_epochs = 0.01, 3

optimizer = torch.optim.Adam(net.parameters(), lr=lr)

loss = nn.CrossEntropyLoss()

train(train_iter, val_iter, net, loss, optimizer, num_epochs)