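Hadoop Fully Distributed Configuration

1. Install CentOS 7.0

1. For the installation steps, see the document "Installing Linux on VMware" (available on this blog)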

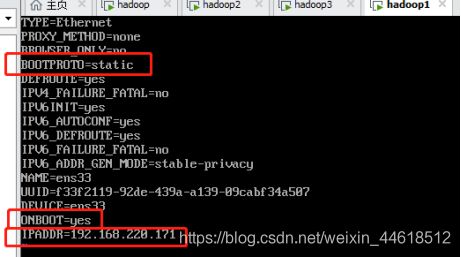

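2. After the installation, configure the network interface

vi /etc/sysconfig/network-scripts/ifcfg-ens33

# The interface name ens33 may differ on your machine; use the name the system assigned when Linux was installed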
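The edited file itself is not reproduced in this post, so as a rough sketch, the static-address settings usually changed in ifcfg-ens33 look like the lines printed below. The helper name `print_static_ip_settings`, the netmask, and the gateway/DNS address `192.168.220.2` are assumptions for illustration (a common default on VMware NAT networks); only the address `192.168.220.171` comes from this guide.

```shell
# Print the static-IP lines to set in ifcfg-ens33.
# Usage: print_static_ip_settings <ip> <gateway>
# NOTE: the netmask and the use of the gateway as DNS1 are assumptions;
# adjust them to match your own virtual network.
print_static_ip_settings() {
    cat <<EOF
BOOTPROTO=static
ONBOOT=yes
IPADDR=$1
NETMASK=255.255.255.0
GATEWAY=$2
DNS1=$2
EOF
}

print_static_ip_settings 192.168.220.171 192.168.220.2
```

Compare the printed lines with the existing file and change or add the matching keys by hand rather than overwriting the whole file, since it also contains settings such as the device name and UUID.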

3. When the configuration is done, restart the network

systemctl restart network

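4. Stop the firewall and disable it (so the firewall service no longer starts at boot)

systemctl stop firewalld # stop the firewall

systemctl disable firewalld # disable the firewall at boot

systemctl status firewalld # check the firewall status; it should be reported as inactive

2. Connect to Linux with MobaXterm

3. Change the hostname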

vi /etc/hostname

hostnamectl set-hostname hadoop1 # alternatively, use this command so the change takes effect immediately

4. Edit the host list (/etc/hosts)

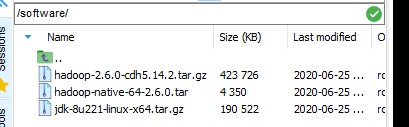

5. Create a software folder and upload the required installation packages to it

6. Extract the archives into the /opt directory

tar -zxvf hadoop-2.6.0-cdh5.14.2.tar.gz -C /opt

tar -zxvf jdk-8u221-linux-x64.tar.gz -C /opt

7. Rename the extracted directories

mv /opt/hadoop-2.6.0-cdh5.14.2/ /opt/hadoop

mv /opt/jdk1.8.0_221/ /opt/jdk8

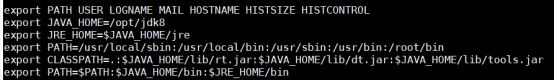

8. Configure the Java environment (in /etc/profile)
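The lines to add appear only in a screenshot, so as a minimal sketch, assuming the JDK was renamed to /opt/jdk8 as in step 7, the entries appended to the end of /etc/profile would typically be:

```shell
# Assumed entries for the end of /etc/profile (the JDK path comes from step 7).
export JAVA_HOME=/opt/jdk8
# Make java, javac, and jps available on the command line.
export PATH=$PATH:$JAVA_HOME/bin
```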

9. Apply the Java environment configuration

source /etc/profile

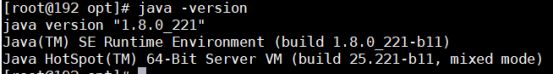

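10. Test whether the Java environment is configured correctly (for example, with java -version)

11. Generate an SSH key pair and add the public key to authorized_keys

ssh-keygen -t rsa -P ""

cat /root/.ssh/id_rsa.pub >> /root/.ssh/authorized_keys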

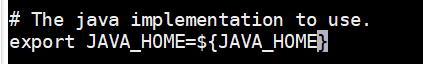

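12. Configure JAVA_HOME for Hadoop (note that etc here is the directory under hadoop, not /etc)

cd /opt/hadoop/etc/hadoop

vi hadoop-env.sh

Change the default JAVA_HOME line so that it points to the JDK installed above, /opt/jdk8.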
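If you prefer not to edit the file by hand, the same change can be sketched as a small helper. The function name `set_hadoop_java_home` is hypothetical, and the sketch assumes GNU sed (as shipped with CentOS) and an existing line that starts with `export JAVA_HOME=`.

```shell
# Point the JAVA_HOME line of a hadoop-env.sh file at the given JDK directory.
# Usage: set_hadoop_java_home <path-to-hadoop-env.sh> <jdk-dir>
set_hadoop_java_home() {
    # Replace any existing "export JAVA_HOME=..." line in place.
    sed -i "s|^export JAVA_HOME=.*|export JAVA_HOME=$2|" "$1"
    # Print the resulting line so the change can be checked.
    grep '^export JAVA_HOME=' "$1"
}
```

For this guide the call would be `set_hadoop_java_home /opt/hadoop/etc/hadoop/hadoop-env.sh /opt/jdk8`.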

13. Configure core-site.xml

fs.defaultFS

hdfs://192.168.220.171:9000

# Storage location for Hadoop temporary data

hadoop.tmp.dir

/opt/hadoop/tmp

hadoop.proxyuser.root.hosts

*

hadoop.proxyuser.root.groups

*
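The name/value pairs above go inside `<property>` elements in core-site.xml. As a sketch, the helper below writes a complete file containing exactly those four properties; the function name `write_core_site` is hypothetical, and the helper overwrites any existing core-site.xml in the target directory.

```shell
# Write a core-site.xml with the four properties listed above into <conf-dir>.
# Usage: write_core_site <conf-dir>
write_core_site() {
    cat > "$1/core-site.xml" <<'EOF'
<?xml version="1.0" encoding="UTF-8"?>
<configuration>
  <!-- Default file system: the NameNode address -->
  <property>
    <name>fs.defaultFS</name>
    <value>hdfs://192.168.220.171:9000</value>
  </property>
  <!-- Storage location for Hadoop temporary data -->
  <property>
    <name>hadoop.tmp.dir</name>
    <value>/opt/hadoop/tmp</value>
  </property>
  <!-- Let the root user act as a proxy user from any host and for any group -->
  <property>
    <name>hadoop.proxyuser.root.hosts</name>
    <value>*</value>
  </property>
  <property>
    <name>hadoop.proxyuser.root.groups</name>
    <value>*</value>
  </property>
</configuration>
EOF
}
```

For this guide the call would be `write_core_site /opt/hadoop/etc/hadoop`.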

14. Configure hdfs-site.xml

# Number of replicas

dfs.replication

1

# Address of the SecondaryNameNode

dfs.namenode.secondary.http-address

hadoop1:50090
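In hdfs-site.xml these two settings are written as `<property>` elements. The sketch below takes the replica count and the SecondaryNameNode host as arguments, because the cluster section later changes both values; the function name `write_hdfs_site` is hypothetical, and the helper overwrites any existing hdfs-site.xml.

```shell
# Write an hdfs-site.xml with the replica count and SecondaryNameNode address.
# Usage: write_hdfs_site <conf-dir> <replication> <secondary-namenode-host>
write_hdfs_site() {
    cat > "$1/hdfs-site.xml" <<EOF
<?xml version="1.0" encoding="UTF-8"?>
<configuration>
  <!-- Number of replicas kept for each block -->
  <property>
    <name>dfs.replication</name>
    <value>$2</value>
  </property>
  <!-- HTTP address of the SecondaryNameNode -->
  <property>
    <name>dfs.namenode.secondary.http-address</name>
    <value>$3:50090</value>
  </property>
</configuration>
EOF
}
```

For the single-node setup in this step the call would be `write_hdfs_site /opt/hadoop/etc/hadoop 1 hadoop1`.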

15. Configure mapred-site.xml

The file ships as mapred-site.xml.template by default; rename it to mapred-site.xml

mapreduce.framework.name

yarn

mapreduce.jobhistory.address

hadoop1:10020

mapreduce.jobhistory.webapp.address

hadoop1:19888
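As with the other files, the three pairs above become `<property>` elements. A minimal sketch follows; the function name `write_mapred_site` is hypothetical, and the helper overwrites any existing mapred-site.xml.

```shell
# Write a mapred-site.xml with the three properties listed above into <conf-dir>.
# Usage: write_mapred_site <conf-dir>
write_mapred_site() {
    cat > "$1/mapred-site.xml" <<'EOF'
<?xml version="1.0" encoding="UTF-8"?>
<configuration>
  <!-- Run MapReduce jobs on YARN -->
  <property>
    <name>mapreduce.framework.name</name>
    <value>yarn</value>
  </property>
  <!-- JobHistory server RPC address -->
  <property>
    <name>mapreduce.jobhistory.address</name>
    <value>hadoop1:10020</value>
  </property>
  <!-- JobHistory server web UI address -->
  <property>
    <name>mapreduce.jobhistory.webapp.address</name>
    <value>hadoop1:19888</value>
  </property>
</configuration>
EOF
}
```

For this guide the call would be `write_mapred_site /opt/hadoop/etc/hadoop`.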

16. Configure yarn-site.xml

yarn.nodemanager.aux-services

mapreduce_shuffle

yarn.nodemanager.aux-services.mapreduce_shuffle.class

org.apache.hadoop.mapred.ShuffleHandler

yarn.resourcemanager.hostname

hadoop1

yarn.log-aggregation-enable

true

yarn.log-aggregation.retain-seconds

604800
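The YARN settings above can be sketched as a complete yarn-site.xml in the same way. The function name `write_yarn_site` is hypothetical, and the helper overwrites any existing file. The sketch writes the shuffle class key as `yarn.nodemanager.aux-services.mapreduce_shuffle.class`, the form Hadoop 2.x uses for an auxiliary service named `mapreduce_shuffle`.

```shell
# Write a yarn-site.xml with the five properties listed above into <conf-dir>.
# Usage: write_yarn_site <conf-dir>
write_yarn_site() {
    cat > "$1/yarn-site.xml" <<'EOF'
<?xml version="1.0" encoding="UTF-8"?>
<configuration>
  <!-- Auxiliary shuffle service needed by MapReduce -->
  <property>
    <name>yarn.nodemanager.aux-services</name>
    <value>mapreduce_shuffle</value>
  </property>
  <property>
    <name>yarn.nodemanager.aux-services.mapreduce_shuffle.class</name>
    <value>org.apache.hadoop.mapred.ShuffleHandler</value>
  </property>
  <!-- Host that runs the ResourceManager -->
  <property>
    <name>yarn.resourcemanager.hostname</name>
    <value>hadoop1</value>
  </property>
  <!-- Aggregate container logs and keep them for 7 days (604800 seconds) -->
  <property>
    <name>yarn.log-aggregation-enable</name>
    <value>true</value>
  </property>
  <property>
    <name>yarn.log-aggregation.retain-seconds</name>
    <value>604800</value>
  </property>
</configuration>
EOF
}
```

For this guide the call would be `write_yarn_site /opt/hadoop/etc/hadoop`.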

17. Edit the slaves file (vi slaves)

18. Configure the Hadoop environment variables (in /etc/profile)

export HADOOP_HOME=/opt/hadoop

export HADOOP_MAPRED_HOME=$HADOOP_HOME

export HADOOP_COMMON_HOME=$HADOOP_HOME

export HADOOP_HDFS_HOME=$HADOOP_HOME

export YARN_HOME=$HADOOP_HOME

export HADOOP_COMMON_LIB_NATIVE_DIR=$HADOOP_HOME/lib/native

export HADOOP_OPTS="-Djava.library.path=$HADOOP_HOME/lib"

export PATH=$PATH:$HADOOP_HOME/sbin:$HADOOP_HOME/bin

19. Apply the configuration

source /etc/profile

20. Check that the configuration took effect (for example, with hadoop version)

21. Format HDFS

hadoop namenode -format

21. Start Hadoop

start-all.sh

22. Start the history server

mr-jobhistory-daemon.sh start historyserver

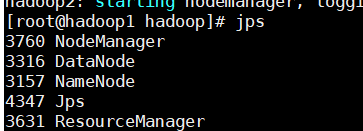

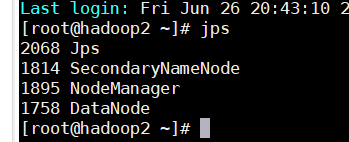

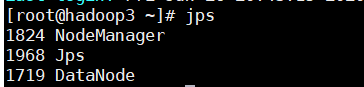

23. Check the Hadoop processes (with jps); normally the following should be running

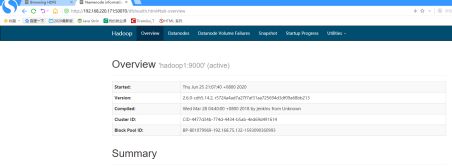
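The expected process list is shown only in the screenshot. As a sketch, the helper below checks `jps` output against the daemons that `start-all.sh` and the history server normally start on a single node; the function name `missing_daemons` and the exact list are assumptions, not taken from the screenshot.

```shell
# Read `jps` output on stdin and print every expected daemon that is missing.
# Usage: jps | missing_daemons
missing_daemons() {
    # jps prints "<pid> <ClassName>"; keep only the class names.
    names="$(awk '{print $2}')"
    for daemon in NameNode DataNode SecondaryNameNode \
                  ResourceManager NodeManager JobHistoryServer; do
        # Match whole lines so "NameNode" does not match "SecondaryNameNode".
        if ! printf '%s\n' "$names" | grep -qx "$daemon"; then
            echo "$daemon"
        fi
    done
}
```

Running `jps | missing_daemons` prints nothing when all six daemons are up; any name it prints is a daemon whose log under /opt/hadoop/logs is worth checking.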

24. That completes the configuration; now open the web interfaces in a browser

HDFS page: http://192.168.220.171:50070

YARN management UI: http://192.168.220.171:8088

JobHistory UI: http://192.168.220.171:19888/

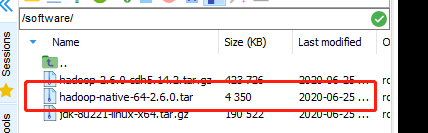

Troubleshooting:


Extract hadoop-native-64-2.6.0.tar from the software folder into both hadoop/lib and hadoop/lib/native

tar -xvf hadoop-native-64-2.6.0.tar -C /opt/hadoop/lib

tar -xvf hadoop-native-64-2.6.0.tar -C /opt/hadoop/lib/native/

Note: if the archive name ends in .tar only, use -xvf; if it ends in .tar.gz, use -zxvf

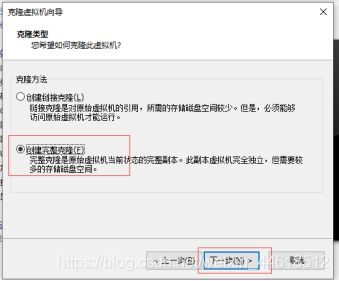

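Cluster Configuration

1. Clone two virtual machines

In VMware, select the virtual machine to clone, right-click it, and choose Manage, then Clone

After cloning, regenerate the MAC address of each clone's network adapter

1. Configure the network interface (each machine needs a different IP address; my three machines use 171, 172, and 173 as the last octet)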

vi /etc/sysconfig/network-scripts/ifcfg-ens33

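2. When the configuration is done, restart the network

systemctl restart network

3. Stop the firewall and disable it (so the firewall service no longer starts at boot)

systemctl stop firewalld # stop the firewall

systemctl disable firewalld # disable the firewall at boot

systemctl status firewalld # check the firewall status; it should be reported as inactive

2. Change the hostnames of the two clones (here they are hadoop2 and hadoop3)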

vi /etc/hostname

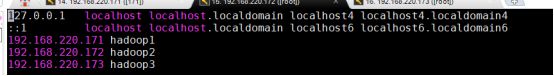

3. Update the host list (/etc/hosts) on all virtual machines
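The entries themselves are not listed in this post. As a sketch, assuming the addresses 171, 172, and 173 map to hadoop1, hadoop2, and hadoop3 in that order, the helper below appends them to a hosts file and skips any hostname that is already present. The function name `add_cluster_hosts` is hypothetical.

```shell
# Append the three cluster entries to a hosts file, skipping hostnames
# that already appear at the end of a line.
# Usage: add_cluster_hosts <hosts-file>
add_cluster_hosts() {
    while read -r ip name; do
        # Only append when no existing line already ends with this hostname.
        if ! grep -qE "[[:space:]]$name\$" "$1" 2>/dev/null; then
            echo "$ip $name" >> "$1"
        fi
    done <<EOF
192.168.220.171 hadoop1
192.168.220.172 hadoop2
192.168.220.173 hadoop3
EOF
}
```

Run `add_cluster_hosts /etc/hosts` on each of the three machines; running it a second time changes nothing.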

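4. Generate an SSH key pair on every virtual machine and add the public key to authorized_keys (171 is the example here; configure 172 and 173 the same way)

ssh-keygen -t rsa -P ""

cat ~/.ssh/id_rsa.pub >> ~/.ssh/authorized_keys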

5. Set up passwordless remote login on every virtual machine

ssh-copy-id -i ~/.ssh/id_rsa.pub -p22 root@192.168.220.172

ssh-copy-id -i ~/.ssh/id_rsa.pub -p22 root@192.168.220.173

6. Log in remotely (it is best to reboot first; the setup works if no password is requested)

ssh -p22 root@192.168.220.172

ssh -p22 root@192.168.220.173

7. Repeat the same configuration on the other two virtual machines

8. Edit the Hadoop configuration files

cd /opt/hadoop/etc/hadoop

vi slaves

Note: hadoop1 is the master node and is normally not listed here; since this cluster is only for learning, it does not matter
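The file content is not shown in this post, but the note implies that all three hosts are listed, one per line. A minimal sketch, with the hypothetical helper name `write_slaves`:

```shell
# Write one worker hostname per line into <conf-dir>/slaves,
# replacing the previous content of the file.
# Usage: write_slaves <conf-dir> <host>...
write_slaves() {
    conf_dir="$1"
    shift
    printf '%s\n' "$@" > "$conf_dir/slaves"
}
```

For the three-node cluster in this guide the call would be `write_slaves /opt/hadoop/etc/hadoop hadoop1 hadoop2 hadoop3`; leave out hadoop1 if the master should not also run a DataNode and NodeManager.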

vi hdfs-site.xml

Note: set the replica count (dfs.replication) to 3 and move the SecondaryNameNode to hadoop2

9. Copy the files just edited to the other two virtual machines

scp /opt/hadoop/etc/hadoop/hdfs-site.xml root@hadoop2:/opt/hadoop/etc/hadoop/hdfs-site.xml

scp /opt/hadoop/etc/hadoop/hdfs-site.xml root@hadoop3:/opt/hadoop/etc/hadoop/hdfs-site.xml

scp /opt/hadoop/etc/hadoop/slaves root@hadoop3:/opt/hadoop/etc/hadoop/slaves

scp /opt/hadoop/etc/hadoop/slaves root@hadoop2:/opt/hadoop/etc/hadoop/slaves

11. Delete the tmp folder, which is the directory set as hadoop.tmp.dir in core-site.xml (do this on all three machines)

cd /opt/hadoop

rm -rf tmp

12. Format HDFS

hadoop namenode -format

13. Start Hadoop

start-all.sh