Elastic:利用 Elastic Stack 来分析浏览器历史访问数据

在今天的文章中,我将展示一个如果使用 Elastic Stack 来对我们的电脑上的浏览数据进行分析。浏览器的历史数据保存于一个叫做 SQLite 的数据库中。下面的所有浏览器将设置和历史记录数据保存到SQLite数据库。

我们可以通过编程的语言把这个数据文件读出来,并变为可以被 Elastic Stack 摄入的文件格式,从而把数据导入到 Elasticsearch 中,并使用 Kibana 对数据进行分析。

整个项目的源码在地址 https://github.com/liu-xiao-guo/elastic-stack-browser-history

准备工作

我们首先使用如下的命令把 github 上的代码下载下来:

git clone https://github.com/liu-xiao-guo/elastic-stack-browser-history然后我们进入到该项目的根目录中:

$ pwd

/Users/liuxg/python/elastic-stack-browser-history

liuxg:elastic-stack-browser-history liuxg$ tree -L 2

.

├── LICENSE

├── README.md

├── docker-compose-ingest.yml

├── docker-compose.yml

├── elastic-stack

│ └── config

├── images

│ ├── BrowserHistory.png

│ ├── BrowserHistoryDashboard.png

│ ├── brave-logo.png

│ ├── chrome-logo.png

│ ├── edge-logo.png

│ ├── edge_logo.png

│ ├── firefox-logo.png

│ └── safari-logo.png

└── scripts

├── __pycache__

├── browser_history.py

└── local.py从上面我们可以看出来有一个叫做 scripts 的子目录,它里面含有一个叫做 browser_history.py 的 python 文件:

browser_history.py

import json

import os

import sqlite3

import time

from local import LOG_DIR

from pathlib import Path

# Update the list based on the Browsers you use

browsers = ['brave','chrome','firefox','safari','edge']

USER_PATH = str(Path.home())

def get_path(browser,browser_params):

if browser == 'brave':

BRAVE_PATH = "/Library/Application Support/BraveSoftware/Brave-Browser/Default/History"

hist_path = USER_PATH + BRAVE_PATH

hist_sql = "SELECT datetime(last_visit_time/1000000-11644473600,'unixepoch','localtime') AS visit_date, url, title AS url_title FROM urls ORDER BY visit_date DESC"

browser_params['browser_name'] = browser

browser_params['hist_path'] = hist_path

browser_params['hist_sql'] = hist_sql

return browser_params

elif browser == 'chrome':

CHROME_PATH = "/Library/Application Support/Google/Chrome/Default/History"

hist_path = USER_PATH + CHROME_PATH

hist_sql = "SELECT datetime(last_visit_time/1000000-11644473600,'unixepoch','localtime') AS visit_date, url, title AS url_title FROM urls ORDER BY visit_date DESC"

browser_params['browser_name'] = browser

browser_params['hist_path'] = hist_path

browser_params['hist_sql'] = hist_sql

return browser_params

elif browser == 'edge':

EDGE_PATH = "/Library/Application Support/Microsoft Edge/Default/History"

hist_path = USER_PATH + EDGE_PATH

hist_sql = "SELECT datetime(last_visit_time/1000000-11644473600,'unixepoch','localtime') AS visit_date, url, title AS url_title FROM urls ORDER BY visit_date DESC"

browser_params['browser_name'] = browser

browser_params['hist_path'] = hist_path

browser_params['hist_sql'] = hist_sql

return browser_params

elif browser == 'firefox':

FIREFOX_PATH = "/Library/Application Support/Firefox/Profiles/qs6kpgry.default-release/places.sqlite"

hist_path = USER_PATH + FIREFOX_PATH

hist_sql = "SELECT datetime(moz_historyvisits.visit_date/1000000,'unixepoch','localtime') AS visit_date, moz_places.url AS url, moz_places.title AS url_title FROM moz_places, moz_historyvisits WHERE moz_places.id = moz_historyvisits.place_id ORDER BY visit_date DESC"

browser_params['browser_name'] = browser

browser_params['hist_path'] = hist_path

browser_params['hist_sql'] = hist_sql

return browser_params

elif browser == 'safari':

SAFARI_PATH = "/Library/Safari/History.db"

hist_path = USER_PATH + SAFARI_PATH

hist_sql = "SELECT datetime(visit_time + 978307200, 'unixepoch', 'localtime') AS visit_date, url, title AS url_title FROM history_visits INNER JOIN history_items ON history_items.id = history_visits.history_item ORDER BY visit_date DESC"

browser_params['browser_name'] = browser

browser_params['hist_path'] = hist_path

browser_params['hist_sql'] = hist_sql

return browser_params

def dict_factory(cursor, row):

d = {}

for idx, col in enumerate(cursor.description):

d[col[0]] = row[idx]

return d

def get_browser_hist(browser_params,timestr):

bn = browser_params['browser_name']

# connect to the SQlite databases

print("browser: " + bn)

print("hist_path: " + browser_params['hist_path'])

try:

connection = sqlite3.connect(browser_params['hist_path'])

except:

print("Connection to SQLite: " + bn + " failed!")

print("It may not be installed or not closed")

return

connection.row_factory = dict_factory

cursor = connection.cursor()

try:

cursor.execute(browser_params['hist_sql'])

except:

browser_open = ("\t" + bn + "!! " +"is open. Please close your browser and retry.")

print(browser_open.upper())

tables = cursor.fetchall()

results = len(tables)

print("{}: Results {}".format(bn, results))

print()

if results >0:

dict_bn = {"browser":bn}

jsonFile = open(LOG_DIR + "/"+ bn + "-" + timestr + ".json","w")

for v in tables:

v.update(dict_bn)

jsonFile.write(json.dumps(v) + '\n')

def main():

timestr = time.strftime("%Y%m%d-%H%M%S")

for browser in browsers:

print("Starting {}".format(browser))

browser_params = {}

path = get_path(browser,browser_params)

if path:

get_browser_hist(browser_params,timestr)

main()上面的历史数据路径是在我的 MacOS 电脑上的。你需要根据自己的实际使用电脑的操作系统来进行修改这些路径。在我使用 terminal 进行导入数据时,我发现 terminal 的权限不够,我需要做如下的配置才可以:

我们可以进入到 scripts 的目录中,打入如下的命令:

$ python3 browser_history.py 在我的电脑上显示:

$ python3 browser_history.py

Starting brave

browser: brave

hist_path: /Users/liuxg/Library/Application Support/BraveSoftware/Brave-Browser/Default/History

Connection to SQLite: brave failed!

It may not be installed or not closed

Starting chrome

browser: chrome

hist_path: /Users/liuxg/Library/Application Support/Google/Chrome/Default/History

chrome: Results 28647

Starting firefox

browser: firefox

hist_path: /Users/liuxg/Library/Application Support/Firefox/Profiles/qs6kpgry.default-release/places.sqlite

firefox: Results 9

Starting safari

browser: safari

hist_path: /Users/liuxg/Library/Safari/History.db

safari: Results 325

Starting edge

browser: edge

hist_path: /Users/liuxg/Library/Application Support/Microsoft Edge/Default/History

Connection to SQLite: edge failed!

It may not be installed or not closed上面显示我有三个浏览器:chrome, safari 及 firefox。针对这几个浏览器,通过 python 的脚本把 SQLite 中的数据导入到项目根目录下 data\logs 目录下的 .json 文件:

$ pwd

/Users/liuxg/python/elastic-stack-browser-history

liuxg:elastic-stack-browser-history liuxg$ ls

LICENSE docker-compose-ingest.yml images

README.md docker-compose.yml scripts

data elastic-stack

liuxg:elastic-stack-browser-history liuxg$ ls data/logs

chrome-20200819-160808.json safari-20200819-160808.json

firefox-20200819-160808.json

如上图所示,我们可以看到有三个生产的 json 文件生成,分别对应于我电脑上的三个浏览器。在这三个文件中,它们分别含有各个浏览器所对应的访问的历史数据:

{"visit_date": "2020-08-19 16:04:37", "url": "https://github.com/liu-xiao-guo/elastic-stack-browser-history", "url_title": "liu-xiao-guo/elastic-stack-browser-history: Import your browser history into Elastic Stack", "browser": "chrome"}

{"visit_date": "2020-08-19 16:04:36", "url": "https://github.com/liu-xiao-guo/elastic-stack-browser-history/tree/main/scripts", "url_title": "elastic-stack-browser-history/scripts at main \u00b7 liu-xiao-guo/elastic-stack-browser-history", "browser": "chrome"}

{"visit_date": "2020-08-19 16:03:59", "url": "https://github.com/liu-xiao-guo/elastic-stack-browser-history/blob/main/scripts/browser_history.py", "url_title": "elastic-stack-browser-history/browser_history.py at main \u00b7 liu-xiao-guo/elastic-stack-browser-history", "browser": "chrome"}

{"visit_date": "2020-08-19 16:03:46", "url": "https://github.com/?q=brow", "url_title": "GitHub", "browser": "chrome"}它们的数据如上面所示。每天记录如下:

{

"visit_date": "2020-08-19 16:04:37",

"url": "https://github.com/liu-xiao-guo/elastic-stack-browser-history",

"url_title": "liu-xiao-guo/elastic-stack-browser-history: Import your browser history into Elastic Stack",

"browser": "chrome"

}整个上面的数据显然不是很结构化的数据,我们需要使用 Logstash 或其它的方法来对这个结构的数据来进行结构化,比如我们分析每个记录的 domain 名称等等。

启动 Elastic Stack

在今天的练习中,我们将使用 docker 来安装 Elastic Stack:

- Elasticsearch

- Kibana

- Logstash

我们可以通过 docker-compose.yml 文件来启动这三个 docker。在项目的根目录中,我们会发现一个叫做 docker-compose-ingest.yml 的文件:

docker-compose-ingest.yml

version: '2'

services:

#Elasticsearch container

elasticsearch:

image: docker.elastic.co/elasticsearch/elasticsearch:${ELASTIC_VERSION}

environment:

# - cluster.name=docker-pocket

- bootstrap.memory_lock=true

- "ES_JAVA_OPTS=-Xms512m -Xmx512m"

- "discovery.type=single-node"

ulimits:

memlock:

soft: -1

hard: -1

mem_limit: 1g

volumes:

- esdata1:/usr/share/elasticsearch/data

ports:

- 9200:9200

networks:

- esnet

#Logstash container

logstash:

image: docker.elastic.co/logstash/logstash:${ELASTIC_VERSION}

volumes:

- ./elastic-stack/config/logstash/logstash.yml:/usr/share/logstash/config/logstash.yml

- ./elastic-stack/config/logstash/pipeline:/usr/share/logstash/pipeline

- ./data/logs:/usr/share/data

ports:

- 5000:5000

- 9600:9600

environment:

LS_JAVA_OPTS: "-Xmx256m -Xms256m"

networks:

- esnet

#Kibana container

kibana:

image: docker.elastic.co/kibana/kibana:${ELASTIC_VERSION}

ports:

- 5601:5601

networks:

- esnet

volumes:

esdata1:

driver: local

networks:

esnet:上面的文件非常简单。我们可以看到一个被定义的变量 ELASTIC_VERSION。这个变量被定义于 .env 文件中:

ELASTIC_VERSION=7.8.0也就是说,我们将使用 7.8.0 版本来进行安装。

由于我们要使用 Logstash 来对我们的数据进行处理,并导入到 Elasticsearch 中,如上图所示,它的配置文件在位置:

./elastic-stack/config/logstash/pipeline定义:

logstash.conf

# Logstash Input Plugin

# File: https://www.elastic.co/guide/en/logstash/current/plugins-inputs-file.html

# JSON Codec: https://www.elastic.co/guide/en/logstash/current/plugins-codecs-json.html

input {

file {

path => [ "/usr/share/data/*.json"]

# sincedb_path => "/usr/share/data/sincedb"

start_position => "beginning"

codec => "json"

}

}

# Logstash Filter Plugins

# Grok: https://www.elastic.co/guide/en/logstash/current/plugins-filters-grok.html

# Date: https://www.elastic.co/guide/en/logstash/current/plugins-filters-date.html

# Mutate: https://www.elastic.co/guide/en/logstash/current/plugins-filters-mutate.html

# Urldecode: https://www.elastic.co/guide/en/logstash/current/plugins-filters-urldecode.html

filter {

date {

match => ["visit_date","yyyy-MM-dd HH:mm:ss"]

timezone =>"America/Los_Angeles"

target => ["@timestamp"]

locale => "en"

}

if [url] {

grok {

break_on_match => "false"

match => { "[url]" => "%{URIPROTO:url_proto}://%{URIHOST:domain}%{URIPATH:url_path}%{URIPARAM:url_params}?" }

keep_empty_captures => "false"

}

if "." not in [domain] {

if ":" in [domain] {

mutate {

split => ["domain",":"]

add_field => ["site_domain", "%{[domain][0]}" ]

}

mutate {

replace => {

"domain" => "%{[domain][0]}"

}

}

}

else {

mutate {

copy => ["domain","site_domain"]

}

}

}

else {

mutate {

copy => {"domain" => "domain_copy"}

}

mutate {

split => ["domain_copy","."]

add_field => ["sub_domain", "%{[domain_copy][0]}" ]

add_field => ["site_domain", "%{[domain_copy][-2]}.%{[domain_copy][-1]}" ]

}

mutate {

# Join together whats left as the class name.

remove_field => ["domain_copy"]

}

}

urldecode {

field => "url_path"

}

}

}

# Logstash Output Plugins

# Elasticsearch: https://www.elastic.co/guide/en/logstash/current/plugins-outputs-elasticsearch.html

# StdOut: https://www.elastic.co/guide/en/logstash/current/plugins-outputs-stdout.html

output {

elasticsearch {

hosts => [ 'elasticsearch:9200' ]

manage_template => "false"

index => "logstash-browser"

}

stdout { }

}经过 Logstash 的处理,它会把之前的 json 文件中每一个文档都进行提取,结构化,并导入到 Elasticsearch 中。从上面我们可以看到被导入的索引的名字叫做 logstash-browser。

我们通过如下的方式来进行启动 Elastic Stack。在项目的根目录中:

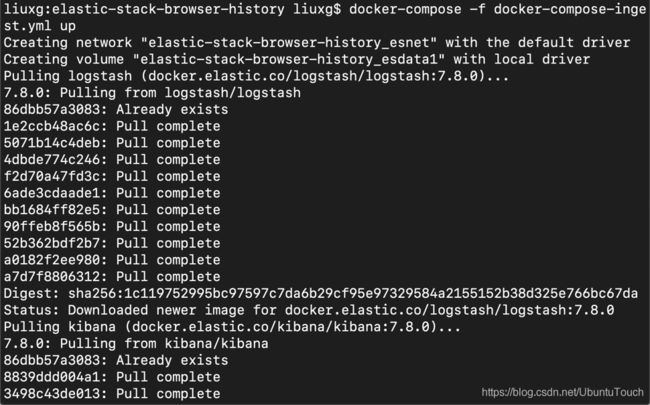

docker-compose -f docker-compose-ingest.yml up这样我们就启动了 Elastic Stack。如果你还没有下载过 7.8.0 的镜像的话,你需要耐心等待下载所有需要的镜像。等安装完后,Elasticsearch, Kibana 及 Logtash 将会被自动启动:

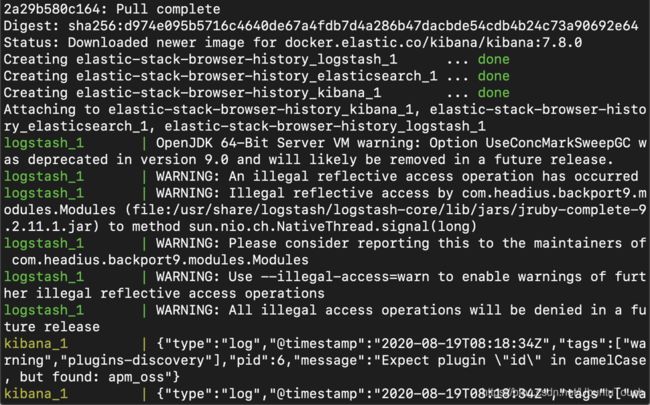

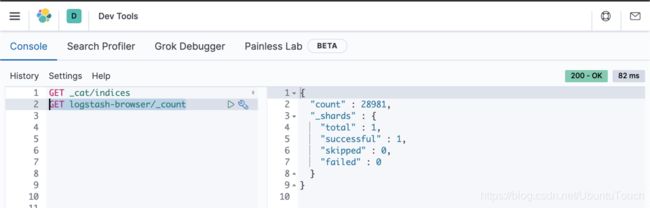

如果一切正常的话,你可以在浏览器器中查看 Kibana:

Hooray ! 我们的 Elastic Stack 已经成功起来了。

分析历史数据

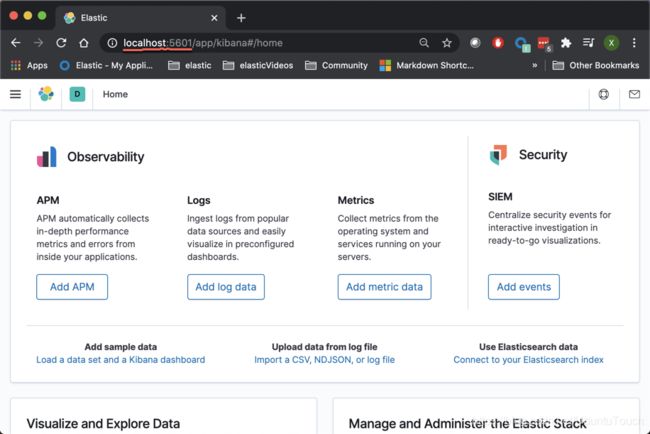

我们首先打开 Kibana,并查看被导入的索引:

GET logstash-browser/_count上面显示已经被导入的文档数目。我们需要为这个索引建立 index pattern。在这里我就不累述了。在我之前的很多教程中已经讲过了。

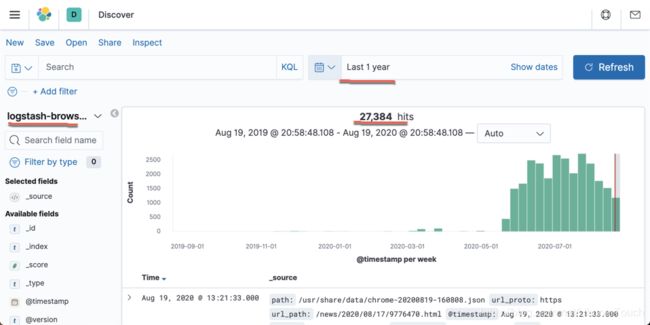

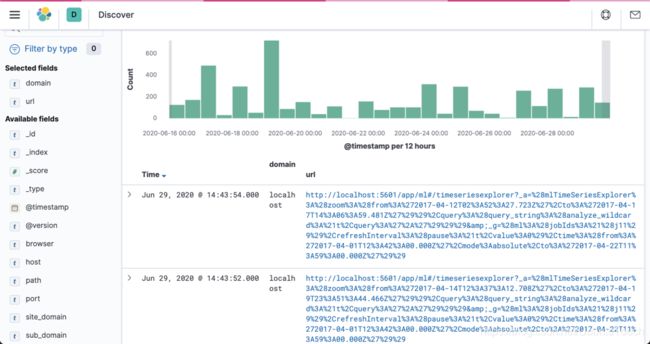

我们可以打开 Discover 来分分析数据:

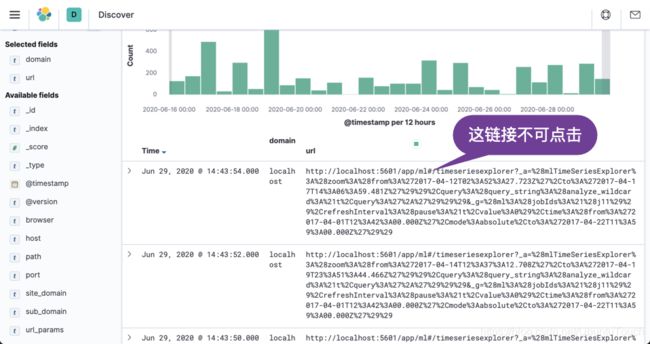

点击上面的 Add 按钮,添加 domain。我们也可以按照同样的方法来添加 url:

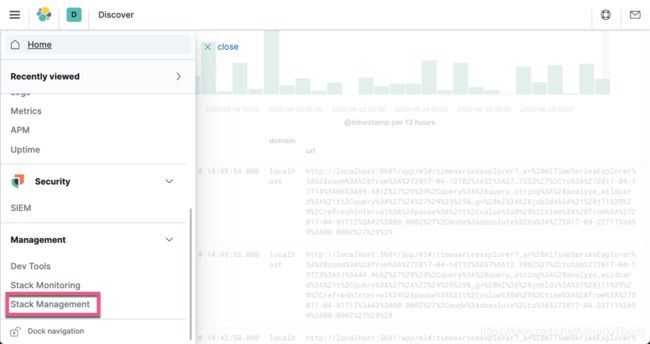

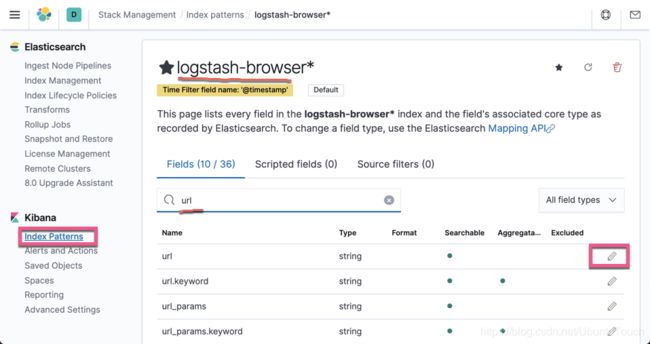

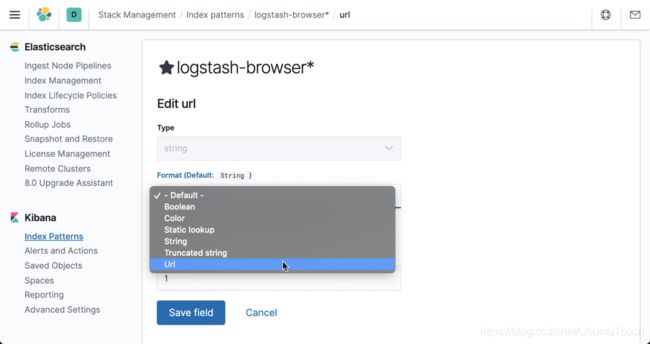

上面的 url 是不可以被点击的。我们可以按照如下的步骤,把 url 这个字段的格式进行改变:

选择 url 并保存。我们再次回到之前的 Discover 界面:

这次,我们可以看到在 url 这里显示的是可以被点击的链接了。

我们可以在 Discover 中进行我们想要的搜索。

可视化

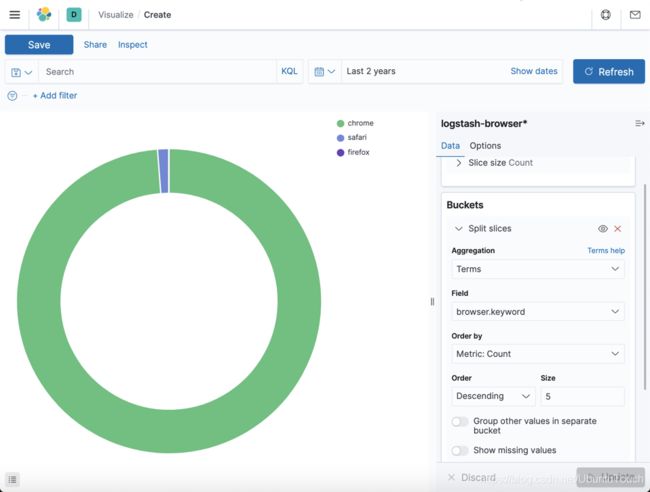

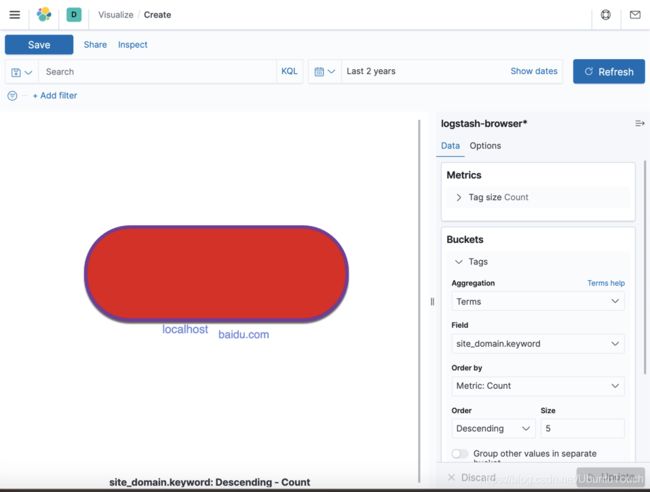

我们可以运用 Kibana 所提供的可视化工具对我们的数据进行可视化分析。

上面显示我使用的 browser 的情况。我主要是使用 chrome browser:

我最常用的几个 domains。

我最常常访问的几个网站。

在项目中,有一个如下的文件:

$ pwd

/Users/liuxg/python/elastic-stack-browser-history

liuxg:elastic-stack-browser-history liuxg$ ls elastic-stack/config/kibana/BrowserHistory-Kibana.ndjson

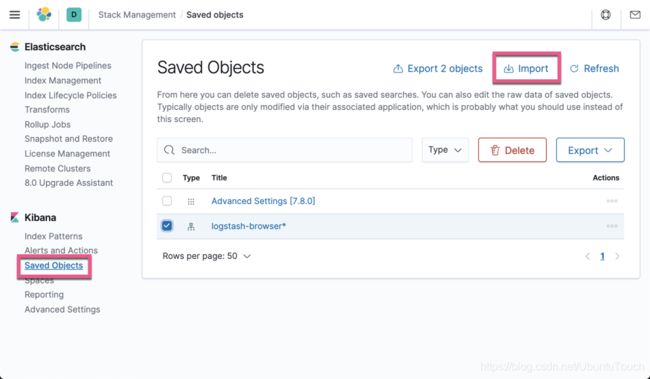

elastic-stack/config/kibana/BrowserHistory-Kibana.ndjson这个文件是已经被制作好的一个 dashboard 的文件。我们可以在 Kibana 中直接进行导入:

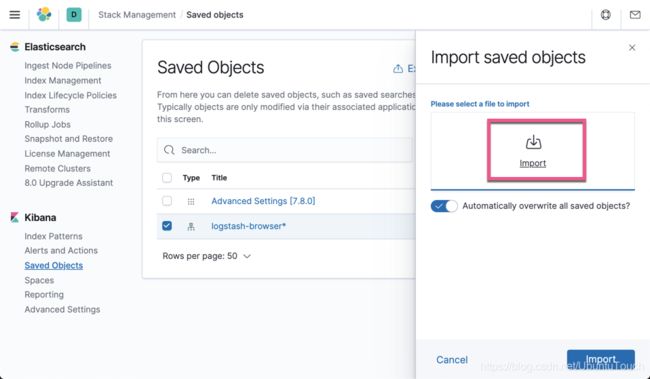

选择我们项目里的 BrowserHistory-Kibana.ndjson 文件,并选择 import:

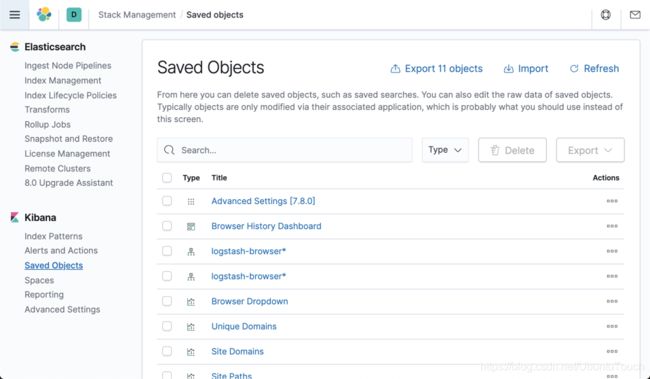

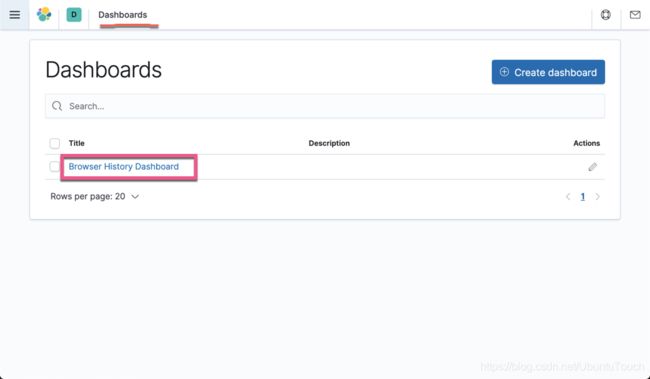

我们看到如上的图。我们进入到 dashboard:

我们将看到所有的访问的统计情况。