TensorFlow 2.0 from Beginner to Advanced series: 7_TensorFlow distributed training

7_TensorFlow distributed training

- 1. Theory

- 1.1 GPU settings

- 1.1.1 API list

- 1.2 Distribution strategies

- 1.2.1 MirroredStrategy

- 1.2.2 CentralStorageStrategy

- 1.2.3 MultiWorkerMirroredStrategy

- 1.2.4 TPUStrategy

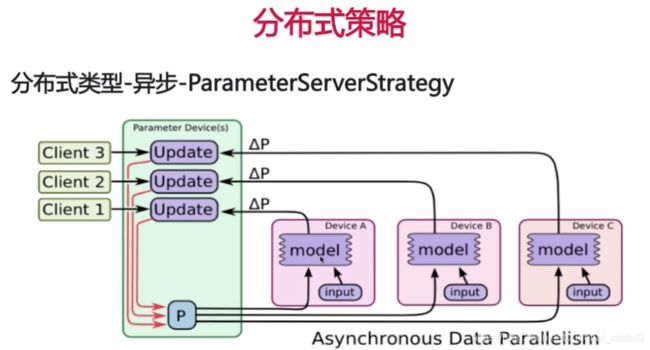

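- 1.2.5 ParameterServerStrategy

- 2. Hands-on

- 2.1 GPU settings in practice

- 2.1.1 Using GPUs in practice

- 2.2 Using multiple GPUs (manual placement and distribution strategies)

- 2.2.1 Manual GPU placement in practice

- 2.3 Distributed training in practice

- 2.3.1 Distribution strategies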

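1. Theory

1.1 GPU settings

- By default, TensorFlow uses every visible GPU and reserves almost all of the memory on each one, which wastes resources

- How to avoid wasting memory and compute

  - Memory growth

  - Virtual devices

- Using multiple GPUs

  - Virtual GPUs & physical GPUs

  - Manual placement & distribution strategies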

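1.1.1 API list

- tf.debugging.set_log_device_placement (logs which device each operation and variable is placed on)

- tf.config.experimental.set_visible_devices (sets the devices visible to the current process)

- tf.config.experimental.list_logical_devices (lists all logical devices; if a physical device is a disk, a logical device is a partition on it)

- tf.config.experimental.list_physical_devices (lists the physical devices; one entry per GPU in the machine)

- tf.config.experimental.set_memory_growth (memory growth: the program takes GPU memory as it needs it instead of reserving all of it)

- tf.config.experimental.VirtualDeviceConfiguration (defines a logical partition of a physical device)

- tf.config.set_soft_device_placement (lets TensorFlow pick a device automatically when the requested one does not exist or cannot run the operation)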

1.2 Distribution strategies

Why distributed training is needed:

- The dataset is too large

- The model is too complex

Distribution strategies supported by TensorFlow:

- MirroredStrategy

- CentralStorageStrategy

- MultiWorkerMirroredStrategy

- TPUStrategy

- ParameterServerStrategy

1.2.1 MirroredStrategy

1.2.2 CentralStorageStrategy

1.2.3 MultiWorkerMirroredStrategy

1.2.4 TPUStrategy

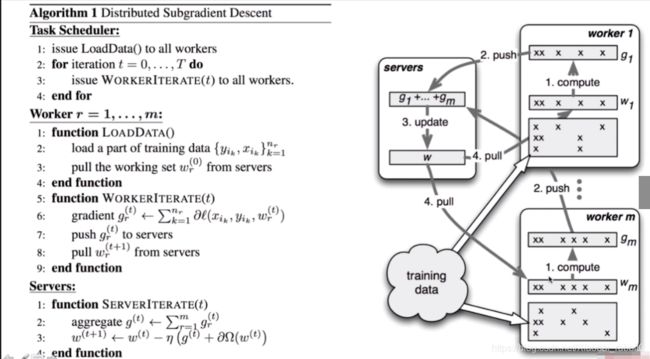

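1.2.5 ParameterServerStrategy

Pseudocode walkthrough:

Types of distributed training:

Pros and cons of synchronous and asynchronous training:

Synchronous:

Asynchronous: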
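
To make the synchronous/asynchronous distinction concrete, here is a toy simulation in plain Python. It is only a sketch under stated assumptions, not TensorFlow's implementation: the model is a single weight `w` fitted to `y = 3x`, there are two hypothetical workers, and the function names `sync_step` and `async_step` are made up for illustration. In the synchronous step every worker uses the same weights and the gradients are averaged before one shared update; in the asynchronous step each worker pushes its own update computed from weights that may already be stale.

```python
# Toy comparison of synchronous and asynchronous data-parallel SGD.
# Model: y = w * x with squared error; the true weight is 3.0.

def gradient(w, shard):
    """Mean gradient of (w*x - y)^2 over one worker's shard."""
    return sum(2.0 * (w * x - y) * x for x, y in shard) / len(shard)

def sync_step(w, shards, lr):
    """All workers read the same w; their gradients are averaged, then applied once."""
    grads = [gradient(w, shard) for shard in shards]
    return w - lr * sum(grads) / len(grads)

def async_step(w, shards, lr):
    """Every worker computes its gradient from the same stale w and pushes it on its own."""
    stale_w = w
    for shard in shards:
        w = w - lr * gradient(stale_w, shard)
    return w

data = [(x, 3.0 * x) for x in (0.5, 1.0, 1.5, 2.0)]
shards = [data[:2], data[2:]]  # two hypothetical workers, one shard each

first_sync = sync_step(0.0, shards, lr=0.1)
first_async = async_step(0.0, shards, lr=0.1)

w_sync = w_async = 0.0
for _ in range(50):
    w_sync = sync_step(w_sync, shards, lr=0.1)
    w_async = async_step(w_async, shards, lr=0.1)

print(first_sync, first_async, w_sync, w_async)
```

Both variants reach the true weight on this tiny problem, but the first step already differs: the asynchronous version applies every worker's gradient separately, so its effective step is larger and depends on how stale the weights were.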

2. Hands-on

2.1 GPU settings in practice

2.1.1 Using GPUs in practice

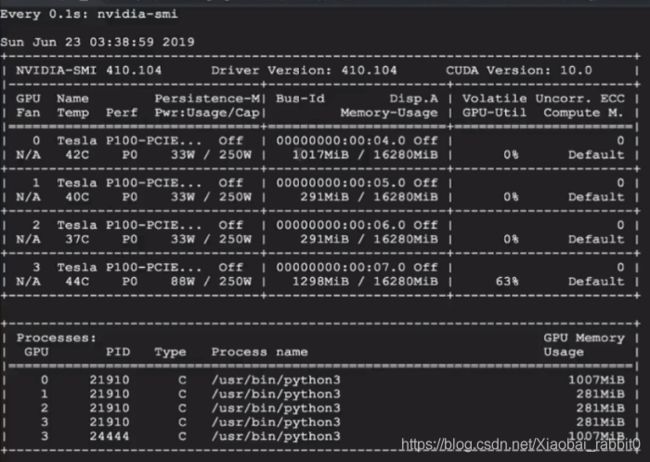

To see how many GPUs the machine has, run: nvidia-smi

GPU setting: memory growth

tf_gpu_1

import matplotlib as mpl

import matplotlib.pyplot as plt

%matplotlib inline

import numpy as np

import sklearn

import pandas as pd

import os

import sys

import time

import tensorflow as tf

from tensorflow import keras

print(tf.__version__)

print(sys.version_info)

for module in mpl, np, pd, sklearn, tf, keras:
    print(module.__name__, module.__version__)

# Log which device each operation and variable is placed on
tf.debugging.set_log_device_placement(True)
gpus = tf.config.experimental.list_physical_devices('GPU')
# Memory growth must be set before the GPUs are initialized, otherwise an error is raised
for gpu in gpus:
    tf.config.experimental.set_memory_growth(gpu, True)  # allocate GPU memory on demand

print(len(gpus))

logical_gpus = tf.config.experimental.list_logical_devices('GPU')

print(len(logical_gpus))

fashion_mnist = keras.datasets.fashion_mnist

(x_train_all, y_train_all), (x_test, y_test) = fashion_mnist.load_data()

x_valid, x_train = x_train_all[:5000], x_train_all[5000:]

y_valid, y_train = y_train_all[:5000], y_train_all[5000:]

print(x_valid.shape, y_valid.shape)

print(x_train.shape, y_train.shape)

print(x_test.shape, y_test.shape)

from sklearn.preprocessing import StandardScaler

scaler = StandardScaler()

x_train_scaled = scaler.fit_transform(
    x_train.astype(np.float32).reshape(-1, 1)).reshape(-1, 28, 28, 1)
x_valid_scaled = scaler.transform(
    x_valid.astype(np.float32).reshape(-1, 1)).reshape(-1, 28, 28, 1)
x_test_scaled = scaler.transform(
    x_test.astype(np.float32).reshape(-1, 1)).reshape(-1, 28, 28, 1)

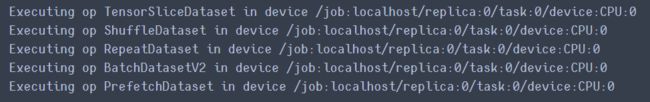

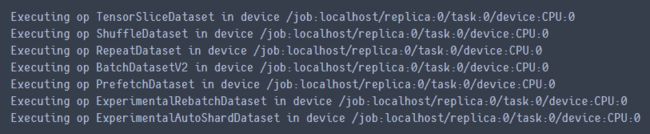

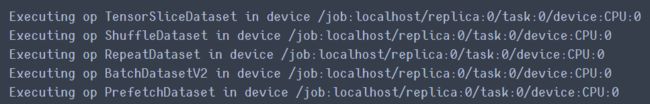

Build the dataset:

def make_dataset(images, labels, epochs, batch_size, shuffle=True):
    dataset = tf.data.Dataset.from_tensor_slices((images, labels))
    if shuffle:
        dataset = dataset.shuffle(10000)
    dataset = dataset.repeat(epochs).batch(batch_size).prefetch(50)
    return dataset

batch_size = 128
epochs = 100
# Build the training dataset
train_dataset = make_dataset(x_train_scaled, y_train, epochs, batch_size)

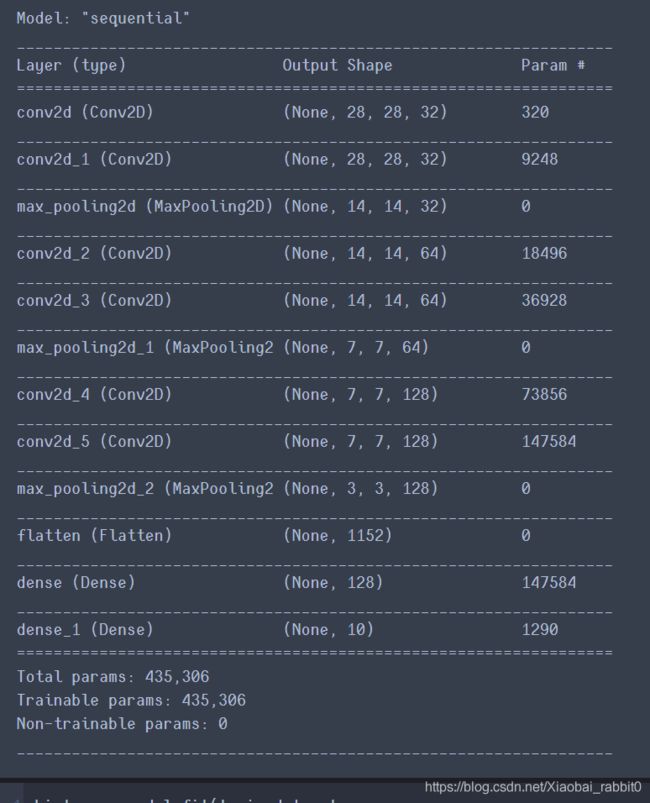

model = keras.models.Sequential()
model.add(keras.layers.Conv2D(filters=32, kernel_size=3,
                              padding='same',
                              activation='relu',
                              input_shape=(28, 28, 1)))
model.add(keras.layers.Conv2D(filters=32, kernel_size=3,
                              padding='same',
                              activation='relu'))
model.add(keras.layers.MaxPool2D(pool_size=2))
model.add(keras.layers.Conv2D(filters=64, kernel_size=3,
                              padding='same',
                              activation='relu'))
model.add(keras.layers.Conv2D(filters=64, kernel_size=3,
                              padding='same',
                              activation='relu'))
model.add(keras.layers.MaxPool2D(pool_size=2))
model.add(keras.layers.Conv2D(filters=128, kernel_size=3,
                              padding='same',
                              activation='relu'))
model.add(keras.layers.Conv2D(filters=128, kernel_size=3,
                              padding='same',
                              activation='relu'))
model.add(keras.layers.MaxPool2D(pool_size=2))
model.add(keras.layers.Flatten())
model.add(keras.layers.Dense(128, activation='relu'))
model.add(keras.layers.Dense(10, activation="softmax"))

model.compile(loss="sparse_categorical_crossentropy",
              optimizer="sgd",
              metrics=["accuracy"])
model.summary()

history = model.fit(train_dataset,
                    steps_per_epoch=x_train_scaled.shape[0] // batch_size,
                    epochs=10)
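
The `steps_per_epoch` value and the `repeat(epochs)` call interact, so it can help to check the arithmetic by hand. The sketch below uses plain Python and only the numbers from the code above (60,000 Fashion-MNIST training images minus the 5,000 held out for validation); the variable names `repeat_epochs`, `fit_epochs`, `steps_requested` and `batches_available` are introduced here for illustration only.

```python
import math

num_train = 55000    # 60,000 training images minus 5,000 validation images
batch_size = 128
repeat_epochs = 100  # the `epochs` argument passed to make_dataset
fit_epochs = 10      # the `epochs` argument passed to model.fit

# Number of full batches that make up one pass over the training data.
steps_per_epoch = num_train // batch_size

# Total number of batches model.fit will pull from the dataset.
steps_requested = steps_per_epoch * fit_epochs

# repeat() is applied before batch(), so examples are batched across epoch
# boundaries and only the very last batch can be smaller than batch_size.
batches_available = math.ceil(num_train * repeat_epochs / batch_size)

print(steps_per_epoch, steps_requested, batches_available)
```

Because the dataset is repeated 100 times while `fit` only runs 10 epochs, the dataset holds far more batches than training consumes, so `fit` does not run out of data.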

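Check GPU usage:

To monitor the nvidia-smi output in real time, run: watch -n 0.1 -x nvidia-smi

(-n sets the refresh interval, here 0.1 s; -x makes watch execute the given command directly instead of through a shell)

Using a different GPU (by default only the first GPU does the computing)

tf_gpu_2-visible_gpu

Approach: make only one chosen GPU visible and hide the others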

import matplotlib as mpl

import matplotlib.pyplot as plt

%matplotlib inline

import numpy as np

import sklearn

import pandas as pd

import os

import sys

import time

import tensorflow as tf

from tensorflow import keras

print(tf.__version__)

print(sys.version_info)

for module in mpl, np, pd, sklearn, tf, keras:
    print(module.__name__, module.__version__)

tf.debugging.set_log_device_placement(True)
gpus = tf.config.experimental.list_physical_devices('GPU')
# Choose which GPU is visible (index 3 is the last GPU on this 4-GPU machine)
tf.config.experimental.set_visible_devices(gpus[3], 'GPU')
for gpu in gpus:
    tf.config.experimental.set_memory_growth(gpu, True)

print(len(gpus))

logical_gpus = tf.config.experimental.list_logical_devices('GPU')

print(len(logical_gpus))

Check GPU usage:

Splitting a GPU into logical devices

tf_gpu_3-virtual_device

tf.debugging.set_log_device_placement(True)
gpus = tf.config.experimental.list_physical_devices('GPU')
tf.config.experimental.set_visible_devices(gpus[1], 'GPU')
# Split GPU 1 into two logical GPUs with a 3072 MB memory limit each
tf.config.experimental.set_virtual_device_configuration(
    gpus[1],
    [tf.config.experimental.VirtualDeviceConfiguration(memory_limit=3072),
     tf.config.experimental.VirtualDeviceConfiguration(memory_limit=3072)])

print(len(gpus))

logical_gpus = tf.config.experimental.list_logical_devices('GPU')

print(len(logical_gpus))

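The output shows 4 physical GPUs and 2 logical GPUs, both carved out of GPU 1

The rest of the code is the same as above

2.2 Using multiple GPUs (manual placement and distribution strategies)

2.2.1 Manual GPU placement in practice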

import matplotlib as mpl

import matplotlib.pyplot as plt

%matplotlib inline

import numpy as np

import sklearn

import pandas as pd

import os

import sys

import time

import tensorflow as tf

from tensorflow import keras

print(tf.__version__)

print(sys.version_info)

for module in mpl, np, pd, sklearn, tf, keras:
    print(module.__name__, module.__version__)

tf.debugging.set_log_device_placement(True)
gpus = tf.config.experimental.list_physical_devices('GPU')
for gpu in gpus:
    tf.config.experimental.set_memory_growth(gpu, True)

print(len(gpus))

logical_gpus = tf.config.experimental.list_logical_devices('GPU')

print(len(logical_gpus))

fashion_mnist = keras.datasets.fashion_mnist

(x_train_all, y_train_all), (x_test, y_test) = fashion_mnist.load_data()

x_valid, x_train = x_train_all[:5000], x_train_all[5000:]

y_valid, y_train = y_train_all[:5000], y_train_all[5000:]

print(x_valid.shape, y_valid.shape)

print(x_train.shape, y_train.shape)

print(x_test.shape, y_test.shape)

from sklearn.preprocessing import StandardScaler

scaler = StandardScaler()

x_train_scaled = scaler.fit_transform(
    x_train.astype(np.float32).reshape(-1, 1)).reshape(-1, 28, 28, 1)
x_valid_scaled = scaler.transform(
    x_valid.astype(np.float32).reshape(-1, 1)).reshape(-1, 28, 28, 1)
x_test_scaled = scaler.transform(
    x_test.astype(np.float32).reshape(-1, 1)).reshape(-1, 28, 28, 1)

def make_dataset(images, labels, epochs, batch_size, shuffle=True):
    dataset = tf.data.Dataset.from_tensor_slices((images, labels))
    if shuffle:
        dataset = dataset.shuffle(10000)
    dataset = dataset.repeat(epochs).batch(batch_size).prefetch(50)
    return dataset

batch_size = 128
epochs = 100
train_dataset = make_dataset(x_train_scaled, y_train, epochs, batch_size)

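tf.device is used here to place different layers of the network on different GPUs. The benefit: when a model is too large for a single GPU, splitting it this way lets it fit across several GPUs and train normally. However, this gives no parallelism: the layers on different GPUs still run one after another.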

model = keras.models.Sequential()
with tf.device(logical_gpus[0].name):
    model.add(keras.layers.Conv2D(filters=32, kernel_size=3,
                                  padding='same',
                                  activation='relu',
                                  input_shape=(28, 28, 1)))
    model.add(keras.layers.Conv2D(filters=32, kernel_size=3,
                                  padding='same',
                                  activation='relu'))
    model.add(keras.layers.MaxPool2D(pool_size=2))
    model.add(keras.layers.Conv2D(filters=64, kernel_size=3,
                                  padding='same',
                                  activation='relu'))
    model.add(keras.layers.Conv2D(filters=64, kernel_size=3,
                                  padding='same',
                                  activation='relu'))
    model.add(keras.layers.MaxPool2D(pool_size=2))

with tf.device(logical_gpus[1].name):
    model.add(keras.layers.Conv2D(filters=128, kernel_size=3,
                                  padding='same',
                                  activation='relu'))
    model.add(keras.layers.Conv2D(filters=128, kernel_size=3,
                                  padding='same',
                                  activation='relu'))
    model.add(keras.layers.MaxPool2D(pool_size=2))
    model.add(keras.layers.Flatten())

with tf.device(logical_gpus[2].name):
    model.add(keras.layers.Dense(128, activation='relu'))
    model.add(keras.layers.Dense(10, activation="softmax"))

model.compile(loss="sparse_categorical_crossentropy",
              optimizer="sgd",
              metrics=["accuracy"])
model.summary()
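
To see why manual placement alone gives no speed-up, a back-of-the-envelope timing model is enough. The sketch below is plain Python with made-up numbers: the per-group compute times in `stage_ms` are hypothetical and device-to-device transfer time is ignored. It only illustrates that when each layer group waits for the previous one, the time per batch is the sum of the stage times and every GPU sits idle most of the time.

```python
# Hypothetical compute time (ms) per batch for the three layer groups,
# one group per GPU, in the order the data flows through them.
stage_ms = {"GPU:0": 6.0, "GPU:1": 3.0, "GPU:2": 1.0}

# Each group needs the previous group's output, so the groups run one
# after another and the per-batch latency is the sum of the stage times.
batch_latency_ms = sum(stage_ms.values())

# Fraction of the time each device is actually computing.
utilization = {dev: t / batch_latency_ms for dev, t in stage_ms.items()}

for dev, frac in utilization.items():
    print(f"{dev}: busy {frac:.0%} of the time")
```

In this toy setup the three GPUs together do the work one GPU could do in the same time (if the model fit on it), which is the motivation for the distribution strategies in the next section.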

2.3 Distributed training in practice

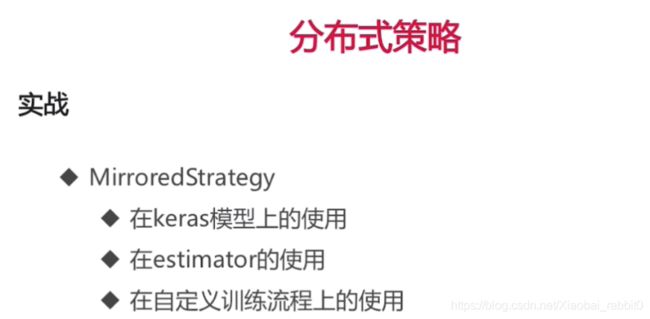

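2.3.1 Distribution strategies

- The hands-on part uses MirroredStrategy (beginners rarely have access to very large datasets, so a single machine with multiple GPUs is enough)

Using MirroredStrategy with a Keras model (tf_distributed_keras_baseline)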

import matplotlib as mpl

import matplotlib.pyplot as plt

%matplotlib inline

import numpy as np

import sklearn

import pandas as pd

import os

import sys

import time

import tensorflow as tf

from tensorflow import keras

print(tf.__version__)

print(sys.version_info)

for module in mpl, np, pd, sklearn, tf, keras:
    print(module.__name__, module.__version__)

tf.debugging.set_log_device_placement(True)
gpus = tf.config.experimental.list_physical_devices('GPU')
tf.config.experimental.set_visible_devices(gpus[3], 'GPU')
for gpu in gpus:
    tf.config.experimental.set_memory_growth(gpu, True)

print(len(gpus))

logical_gpus = tf.config.experimental.list_logical_devices('GPU')

print(len(logical_gpus))

fashion_mnist = keras.datasets.fashion_mnist

(x_train_all, y_train_all), (x_test, y_test) = fashion_mnist.load_data()

x_valid, x_train = x_train_all[:5000], x_train_all[5000:]

y_valid, y_train = y_train_all[:5000], y_train_all[5000:]

print(x_valid.shape, y_valid.shape)

print(x_train.shape, y_train.shape)

print(x_test.shape, y_test.shape)

from sklearn.preprocessing import StandardScaler

scaler = StandardScaler()

x_train_scaled = scaler.fit_transform(
    x_train.astype(np.float32).reshape(-1, 1)).reshape(-1, 28, 28, 1)
x_valid_scaled = scaler.transform(
    x_valid.astype(np.float32).reshape(-1, 1)).reshape(-1, 28, 28, 1)
x_test_scaled = scaler.transform(
    x_test.astype(np.float32).reshape(-1, 1)).reshape(-1, 28, 28, 1)

def make_dataset(images, labels, epochs, batch_size, shuffle=True):
    dataset = tf.data.Dataset.from_tensor_slices((images, labels))
    if shuffle:
        dataset = dataset.shuffle(10000)
    dataset = dataset.repeat(epochs).batch(batch_size).prefetch(50)
    return dataset

batch_size = 256
epochs = 100
train_dataset = make_dataset(x_train_scaled, y_train, epochs, batch_size)

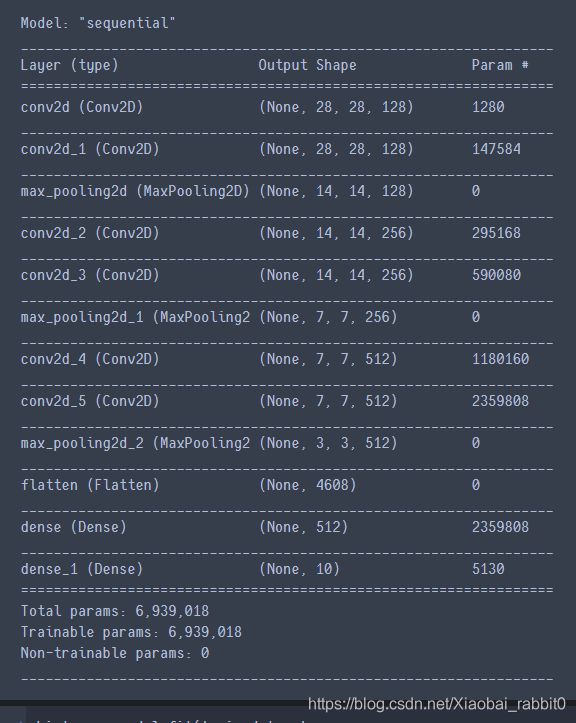

model = keras.models.Sequential()
model.add(keras.layers.Conv2D(filters=128, kernel_size=3,
                              padding='same',
                              activation='relu',
                              input_shape=(28, 28, 1)))
model.add(keras.layers.Conv2D(filters=128, kernel_size=3,
                              padding='same',
                              activation='relu'))
model.add(keras.layers.MaxPool2D(pool_size=2))
model.add(keras.layers.Conv2D(filters=256, kernel_size=3,
                              padding='same',
                              activation='relu'))
model.add(keras.layers.Conv2D(filters=256, kernel_size=3,
                              padding='same',
                              activation='relu'))
model.add(keras.layers.MaxPool2D(pool_size=2))
model.add(keras.layers.Conv2D(filters=512, kernel_size=3,
                              padding='same',
                              activation='relu'))
model.add(keras.layers.Conv2D(filters=512, kernel_size=3,
                              padding='same',
                              activation='relu'))
model.add(keras.layers.MaxPool2D(pool_size=2))
model.add(keras.layers.Flatten())
model.add(keras.layers.Dense(512, activation='relu'))
model.add(keras.layers.Dense(10, activation="softmax"))

model.compile(loss="sparse_categorical_crossentropy",
              optimizer="sgd",
              metrics=["accuracy"])
model.summary()

history = model.fit(train_dataset,
                    steps_per_epoch=x_train_scaled.shape[0] // batch_size,
                    epochs=10)

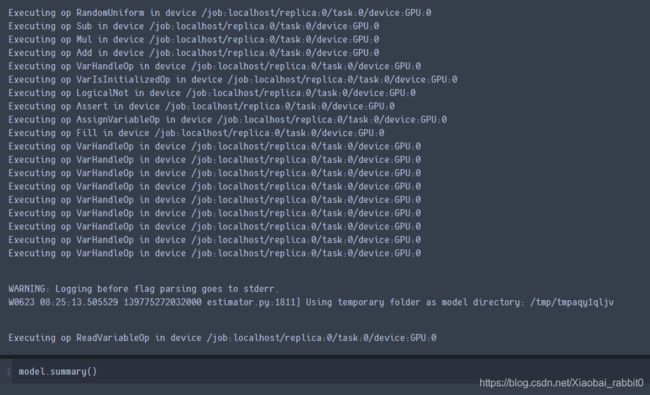

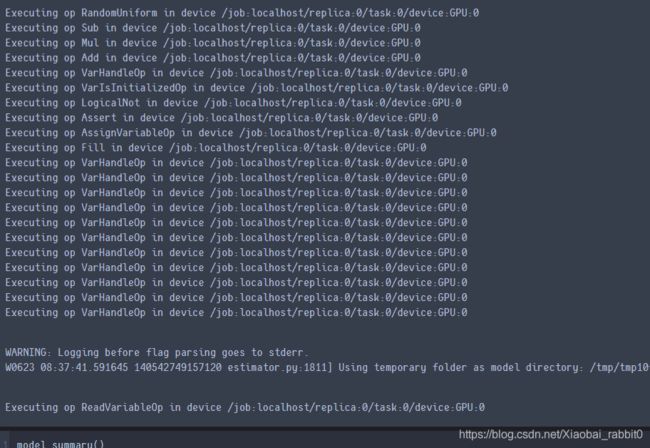

tf.debugging.set_log_device_placement(True)
gpus = tf.config.experimental.list_physical_devices('GPU')
for gpu in gpus:
    tf.config.experimental.set_memory_growth(gpu, True)

print(len(gpus))

logical_gpus = tf.config.experimental.list_logical_devices('GPU')

print(len(logical_gpus))

strategy = tf.distribute.MirroredStrategy()  # distribution strategy

# Build and compile the model inside the strategy scope so its variables are mirrored
with strategy.scope():
    model = keras.models.Sequential()
    model.add(keras.layers.Conv2D(filters=128, kernel_size=3,
                                  padding='same',
                                  activation='relu',
                                  input_shape=(28, 28, 1)))
    model.add(keras.layers.Conv2D(filters=128, kernel_size=3,
                                  padding='same',
                                  activation='relu'))
    model.add(keras.layers.MaxPool2D(pool_size=2))
    model.add(keras.layers.Conv2D(filters=256, kernel_size=3,
                                  padding='same',
                                  activation='relu'))
    model.add(keras.layers.Conv2D(filters=256, kernel_size=3,
                                  padding='same',
                                  activation='relu'))
    model.add(keras.layers.MaxPool2D(pool_size=2))
    model.add(keras.layers.Conv2D(filters=512, kernel_size=3,
                                  padding='same',
                                  activation='relu'))
    model.add(keras.layers.Conv2D(filters=512, kernel_size=3,
                                  padding='same',
                                  activation='relu'))
    model.add(keras.layers.MaxPool2D(pool_size=2))
    model.add(keras.layers.Flatten())
    model.add(keras.layers.Dense(512, activation='relu'))
    model.add(keras.layers.Dense(10, activation="softmax"))

    model.compile(loss="sparse_categorical_crossentropy",
                  optimizer="sgd",
                  metrics=["accuracy"])

history = model.fit(train_dataset,
                    steps_per_epoch=x_train_scaled.shape[0] // batch_size,
                    epochs=10)

(tf_distributed_keras_baseline)

model = keras.models.Sequential()
model.add(keras.layers.Conv2D(filters=128, kernel_size=3,
                              padding='same',
                              activation='relu',
                              input_shape=(28, 28, 1)))
model.add(keras.layers.Conv2D(filters=128, kernel_size=3,
                              padding='same',
                              activation='relu'))
model.add(keras.layers.MaxPool2D(pool_size=2))
model.add(keras.layers.Conv2D(filters=256, kernel_size=3,
                              padding='same',
                              activation='relu'))
model.add(keras.layers.Conv2D(filters=256, kernel_size=3,
                              padding='same',
                              activation='relu'))
model.add(keras.layers.MaxPool2D(pool_size=2))
model.add(keras.layers.Conv2D(filters=512, kernel_size=3,
                              padding='same',
                              activation='relu'))
model.add(keras.layers.Conv2D(filters=512, kernel_size=3,
                              padding='same',
                              activation='relu'))
model.add(keras.layers.MaxPool2D(pool_size=2))
model.add(keras.layers.Flatten())
model.add(keras.layers.Dense(512, activation='relu'))
model.add(keras.layers.Dense(10, activation="softmax"))

model.compile(loss="sparse_categorical_crossentropy",
              optimizer="sgd",
              metrics=["accuracy"])

# Convert the Keras model to an Estimator
estimator = keras.estimator.model_to_estimator(model)

# Training now goes through estimator.train() instead of model.fit
estimator.train(
    input_fn=lambda: make_dataset(
        x_train_scaled, y_train, epochs, batch_size),
    max_steps=5000)

Making the estimator distributed (tf_distributed_keras)

Using MirroredStrategy with an estimator

model = keras.models.Sequential()
model.add(keras.layers.Conv2D(filters=128, kernel_size=3,
                              padding='same',
                              activation='relu',
                              input_shape=(28, 28, 1)))
model.add(keras.layers.Conv2D(filters=128, kernel_size=3,
                              padding='same',
                              activation='relu'))
model.add(keras.layers.MaxPool2D(pool_size=2))
model.add(keras.layers.Conv2D(filters=256, kernel_size=3,
                              padding='same',
                              activation='relu'))
model.add(keras.layers.Conv2D(filters=256, kernel_size=3,
                              padding='same',
                              activation='relu'))
model.add(keras.layers.MaxPool2D(pool_size=2))
model.add(keras.layers.Conv2D(filters=512, kernel_size=3,
                              padding='same',
                              activation='relu'))
model.add(keras.layers.Conv2D(filters=512, kernel_size=3,
                              padding='same',
                              activation='relu'))
model.add(keras.layers.MaxPool2D(pool_size=2))
model.add(keras.layers.Flatten())
model.add(keras.layers.Dense(512, activation='relu'))
model.add(keras.layers.Dense(10, activation="softmax"))

model.compile(loss="sparse_categorical_crossentropy",
              optimizer="sgd",
              metrics=["accuracy"])

strategy = tf.distribute.MirroredStrategy()
config = tf.estimator.RunConfig(
    train_distribute=strategy)
estimator = keras.estimator.model_to_estimator(model, config=config)

Using MirroredStrategy in a custom training loop (tf_distributed_customized_training)

def make_dataset(images, labels, epochs, batch_size, shuffle=True):
    dataset = tf.data.Dataset.from_tensor_slices((images, labels))
    if shuffle:
        dataset = dataset.shuffle(10000)
    dataset = dataset.repeat(epochs).batch(batch_size).prefetch(50)
    return dataset

strategy = tf.distribute.MirroredStrategy()

with strategy.scope():
    batch_size_per_replica = 256
    # Global batch size = per-replica batch size x number of logical GPUs
    batch_size = batch_size_per_replica * len(logical_gpus)
    train_dataset = make_dataset(x_train_scaled, y_train, 1, batch_size)
    valid_dataset = make_dataset(x_valid_scaled, y_valid, 1, batch_size)
    # Wrap the datasets so each global batch is split across the replicas
    train_dataset_distribute = strategy.experimental_distribute_dataset(
        train_dataset)
    valid_dataset_distribute = strategy.experimental_distribute_dataset(
        valid_dataset)

with strategy.scope():
    model = keras.models.Sequential()
    model.add(keras.layers.Conv2D(filters=128, kernel_size=3,
                                  padding='same',
                                  activation='relu',
                                  input_shape=(28, 28, 1)))
    model.add(keras.layers.Conv2D(filters=128, kernel_size=3,
                                  padding='same',
                                  activation='relu'))
    model.add(keras.layers.MaxPool2D(pool_size=2))
    model.add(keras.layers.Conv2D(filters=256, kernel_size=3,
                                  padding='same',
                                  activation='relu'))
    model.add(keras.layers.Conv2D(filters=256, kernel_size=3,
                                  padding='same',
                                  activation='relu'))
    model.add(keras.layers.MaxPool2D(pool_size=2))
    model.add(keras.layers.Conv2D(filters=512, kernel_size=3,
                                  padding='same',
                                  activation='relu'))
    model.add(keras.layers.Conv2D(filters=512, kernel_size=3,
                                  padding='same',
                                  activation='relu'))
    model.add(keras.layers.MaxPool2D(pool_size=2))
    model.add(keras.layers.Flatten())
    model.add(keras.layers.Dense(512, activation='relu'))
    model.add(keras.layers.Dense(10, activation="softmax"))

# customized training loop.
# 1. define loss functions
# 2. define function train_step
# 3. define function test_step
# 4. for-loop training loop

with strategy.scope():
    # batch_size is the global batch size; each replica gets
    # batch_size / #GPUs examples, e.g. a global batch of 64 on 4 GPUs is 16 per GPU.
    loss_func = keras.losses.SparseCategoricalCrossentropy(
        reduction=keras.losses.Reduction.NONE)

    def compute_loss(labels, predictions):
        per_replica_loss = loss_func(labels, predictions)
        # Divide by the global batch size so that summing over replicas
        # gives the mean loss of the whole batch.
        return tf.nn.compute_average_loss(per_replica_loss,
                                          global_batch_size=batch_size)

    test_loss = keras.metrics.Mean(name="test_loss")
    train_accuracy = keras.metrics.SparseCategoricalAccuracy(
        name='train_accuracy')
    test_accuracy = keras.metrics.SparseCategoricalAccuracy(
        name='test_accuracy')

    # `lr` is a deprecated alias; `learning_rate` is the supported argument name
    optimizer = keras.optimizers.SGD(learning_rate=0.01)

    def train_step(inputs):
        images, labels = inputs
        with tf.GradientTape() as tape:
            predictions = model(images, training=True)
            loss = compute_loss(labels, predictions)
        gradients = tape.gradient(loss, model.trainable_variables)
        optimizer.apply_gradients(zip(gradients, model.trainable_variables))
        train_accuracy.update_state(labels, predictions)
        return loss

    @tf.function
    def distributed_train_step(inputs):
        # experimental_run_v2 was renamed to strategy.run in TensorFlow 2.2
        per_replica_average_loss = strategy.experimental_run_v2(
            train_step, args=(inputs,))
        return strategy.reduce(tf.distribute.ReduceOp.SUM,
                               per_replica_average_loss,
                               axis=None)

    def test_step(inputs):
        images, labels = inputs
        predictions = model(images)
        t_loss = loss_func(labels, predictions)
        test_loss.update_state(t_loss)
        test_accuracy.update_state(labels, predictions)

    @tf.function
    def distributed_test_step(inputs):
        strategy.experimental_run_v2(
            test_step, args=(inputs,))

epochs = 10
for epoch in range(epochs):
    total_loss = 0.0
    num_batches = 0
    # Iterate over the distributed dataset so each replica gets its own slice of the batch
    for x in train_dataset_distribute:
        start_time = time.time()
        total_loss += distributed_train_step(x)
        run_time = time.time() - start_time
        num_batches += 1
        print('\rtotal: %3.3f, num_batches: %d, '
              'average: %3.3f, time: %3.3f'
              % (total_loss, num_batches,
                 total_loss / num_batches, run_time),
              end='')
    train_loss = total_loss / num_batches
    for x in valid_dataset_distribute:
        distributed_test_step(x)

    print('\rEpoch: %d, Loss: %3.3f, Acc: %3.3f, '
          'Val_Loss: %3.3f, Val_Acc: %3.3f'
          % (epoch + 1, train_loss, train_accuracy.result(),
             test_loss.result(), test_accuracy.result()))
    # Reset the metrics so the next epoch starts from zero
    test_loss.reset_states()
    train_accuracy.reset_states()
    test_accuracy.reset_states()
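
The custom loop divides each replica's loss by the global batch size (in `compute_loss`) and then combines the replicas with a SUM reduction. The plain-Python check below shows why that pairing yields the mean loss of the whole batch. It is a sketch with made-up numbers: the per-example losses and the split into two replicas are hypothetical, and no TensorFlow is involved.

```python
# Hypothetical per-example losses for one global batch of 8 examples.
per_example_loss = [0.2, 0.4, 0.6, 0.8, 1.0, 1.2, 1.4, 1.6]
global_batch_size = len(per_example_loss)

# Two hypothetical replicas, each receiving half of the global batch.
replica_batches = [per_example_loss[:4], per_example_loss[4:]]

# Each replica divides the sum of its losses by the GLOBAL batch size.
per_replica_loss = [sum(batch) / global_batch_size for batch in replica_batches]

# Summing over replicas then equals the mean over the global batch.
reduced_loss = sum(per_replica_loss)
global_mean = sum(per_example_loss) / global_batch_size

# Dividing by the per-replica batch size instead overcounts by the replica count.
wrong_loss = sum(sum(batch) / len(batch) for batch in replica_batches)

print(reduced_loss, global_mean, wrong_loss)
```

The same reasoning applies to the gradients: since they are summed across replicas, scaling the loss by the global batch size keeps the update equivalent to a single-device step on the full batch.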