Small programs with Python and dlib (4): face swapping

# coding=utf-8
import cv2
import dlib
import os
import numpy as np
import glob


class NoFace(Exception):
    pass

class Facechanger(object):
    def __init__(self, which_predictor='68', windowname=' exchange face'):
        # which_predictor is accepted but not used: the landmark index ranges
        # below assume the 68-point model, which is always the one loaded
        self.current_path = os.getcwd()
        # os.path.join avoids the invalid "\s" escape and works on any OS
        self.predictor_68_path = os.path.join(self.current_path, "shape_predictor_68_face_landmarks.dat")
        self.predictor_5_points_path = os.path.join(self.current_path, "shape_predictor_5_face_landmarks.dat")
        self.detector = dlib.get_frontal_face_detector()
        self.predictor = dlib.shape_predictor(self.predictor_68_path)
        self.SCALE_FACTOR = 1
        self.FEATHER_AMOUNT = 11
        self.COLOUR_CORRECT_BLUR_FRAC = 0.6
        # index ranges into the 68 landmarks
        self.FACE_POINTS = list(range(17, 68))
        self.MOUTH_POINTS = list(range(48, 61))
        self.RIGHT_BROW_POINTS = list(range(17, 22))
        self.LEFT_BROW_POINTS = list(range(22, 27))
        self.RIGHT_EYE_POINTS = list(range(36, 42))
        self.LEFT_EYE_POINTS = list(range(42, 48))
        self.NOSE_POINTS = list(range(27, 35))
        self.JAW_POINTS = list(range(0, 17))
        # points used to align the two faces
        self.ALIGN_POINTS = (self.LEFT_BROW_POINTS + self.RIGHT_EYE_POINTS + self.LEFT_EYE_POINTS +
                             self.RIGHT_BROW_POINTS + self.NOSE_POINTS + self.MOUTH_POINTS)
        # groups of points whose convex hulls form the overlay mask (a single group here)
        self.OVERLAY_POINTS = [self.LEFT_BROW_POINTS + self.RIGHT_EYE_POINTS + self.LEFT_EYE_POINTS +
                               self.RIGHT_BROW_POINTS + self.NOSE_POINTS + self.MOUTH_POINTS]
        cv2.namedWindow(windowname, cv2.WINDOW_AUTOSIZE)
        self.windowname = windowname
        self.cap = cv2.VideoCapture(0)

    def det_landmark(self):
        # dets = self.detector(self.frame, 1)
        face1 = self.dets[0]
        face2 = self.dets[1]
        return face1, face2

    def matrix(self, image, face):
        # landmark coordinates as an N x 2 matrix, one (x, y) row per point
        return np.matrix([[p.x, p.y] for p in self.predictor(image, face).parts()])

    def transformation_from_points(self, points1, points2):
        points1 = points1.astype(np.float64)
        points2 = points2.astype(np.float64)
        # remove translation
        c1 = np.mean(points1, axis=0)
        c2 = np.mean(points2, axis=0)
        points1 -= c1
        points2 -= c2
        # remove scale
        s1 = np.std(points1)
        s2 = np.std(points2)
        points1 /= s1
        points2 /= s2
        # the rotation comes from the SVD of the correlation matrix
        U, S, Vt = np.linalg.svd(points1.T * points2)
        R = (U * Vt).T
        # assemble the 3 x 3 transform: scaled rotation plus translation
        return np.vstack([np.hstack(((s2 / s1) * R, c2.T - (s2 / s1) * R * c1.T)), np.matrix([0., 0., 1.])])

    def warp_image(self, image, M, dshape):
        output_image = np.zeros(dshape, dtype=image.dtype)
        cv2.warpAffine(image, M[:2], (dshape[1], dshape[0]), dst=output_image, flags=cv2.WARP_INVERSE_MAP,
                       borderMode=cv2.BORDER_TRANSPARENT)
        return output_image

    def correct_colours(self, im1, im2, landmarks1):  # colour correction
        # the blur kernel size scales with the distance between the eye centres
        blur_amount = self.COLOUR_CORRECT_BLUR_FRAC * np.linalg.norm(
            np.mean(landmarks1[self.LEFT_EYE_POINTS], axis=0) -
            np.mean(landmarks1[self.RIGHT_EYE_POINTS], axis=0))
        blur_amount = int(blur_amount)
        if blur_amount % 2 == 0:
            blur_amount += 1  # GaussianBlur needs an odd kernel size
        im1_blur = cv2.GaussianBlur(im1, (blur_amount, blur_amount), 0)
        im2_blur = cv2.GaussianBlur(im2, (blur_amount, blur_amount), 0)
        # avoid dividing by values close to zero
        im2_blur += (128 * (im2_blur <= 1.0)).astype(im2_blur.dtype)
        return (im2.astype(np.float64) * im1_blur.astype(np.float64) /
                im2_blur.astype(np.float64))

    def draw_convex_hull(self, img, points, color):
        points = cv2.convexHull(points)
        cv2.fillConvexPoly(img, points, color)

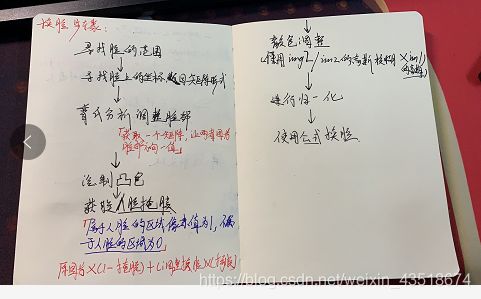

    def get_face_mask(self, img, landmarks):  # build the face mask
        img = np.zeros(img.shape[:2], dtype=np.float64)
        for group in self.OVERLAY_POINTS:
            self.draw_convex_hull(img, landmarks[group], color=1)
        # stack into the image's original layout (three channels in the last dimension)
        img = np.array([img, img, img]).transpose((1, 2, 0))
        # grow the mask slightly, then feather its edge
        img = (cv2.GaussianBlur(img, (self.FEATHER_AMOUNT, self.FEATHER_AMOUNT), 0) > 0) * 1.0
        img = cv2.GaussianBlur(img, (self.FEATHER_AMOUNT, self.FEATHER_AMOUNT), 0)
        return img

    def run(self):
        while True:
            ret, self.frame1 = self.cap.read()
            if not ret:
                # stop when the camera returns no frame instead of looping forever
                break
            b, g, r = cv2.split(self.frame1)  # split channels; OpenCV reads frames as B, G, R
            self.frame = cv2.merge([r, g, b])  # merge back as R, G, B
            self.dets = self.detector(self.frame, 1)
            if len(self.dets) == 2:
                self.face1, self.face2 = self.det_landmark()
                self.point1 = self.matrix(self.frame1, self.face1)
                self.point2 = self.matrix(self.frame1, self.face2)
                self.mask1 = self.get_face_mask(self.frame1, self.point1)
                self.mask2 = self.get_face_mask(self.frame1, self.point2)
                self.tran1 = self.transformation_from_points(self.point1[self.ALIGN_POINTS],
                                                             self.point2[self.ALIGN_POINTS])
                self.tran2 = self.transformation_from_points(self.point2[self.ALIGN_POINTS],
                                                             self.point1[self.ALIGN_POINTS])
                self.warped_mask1 = self.warp_image(self.mask2, self.tran1, self.frame1.shape)
                self.warped_mask2 = self.warp_image(self.mask1, self.tran2, self.frame1.shape)
                self.combined_mask1 = np.max([self.mask1, self.warped_mask1], axis=0)
                self.combined_mask2 = np.max([self.mask2, self.warped_mask2], axis=0)
                self.warped_img2 = self.warp_image(self.frame1, self.tran1, self.frame1.shape)
                self.warped_img1 = self.warp_image(self.frame1, self.tran2, self.frame1.shape)
                self.warped_corrected_img2 = self.correct_colours(self.frame1, self.warped_img2, self.point1)
                self.warped_corrected_img1 = self.correct_colours(self.frame1, self.warped_img1, self.point2)
                # paste face 2 onto face 1, then face 1 onto face 2
                self.output = (self.frame1 * (1.0 - self.combined_mask1) +
                               self.warped_corrected_img2 * self.combined_mask1)
                self.output = (self.output * (1.0 - self.combined_mask2) +
                               self.warped_corrected_img1 * self.combined_mask2)
                # colour correction can push values outside 0..255, so clip before display
                cv2.imshow(self.windowname, np.clip(self.output, 0, 255).astype(np.uint8))
            else:
                # a swap needs exactly two faces; otherwise show the raw frame
                cv2.imshow(self.windowname, self.frame1)
            k = cv2.waitKey(5)
            if k & 0xFF == ord('q'):
                break
        self.cap.release()
        cv2.destroyAllWindows()


if __name__ == '__main__':
    current = Facechanger(which_predictor='68', windowname=' exchange face')
    current.run()
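
The compositing step at the end of run() is a per-pixel linear blend: where the feathered mask is 1 the warped face is used, where it is 0 the original frame is kept, and values in between mix the two. The following is only a minimal sketch of that idea with NumPy, using tiny synthetic arrays in place of camera frames. The names blend, base, and overlay are illustrative and do not appear in the class above.

```python
import numpy as np


def blend(base, overlay, mask):
    """Per-pixel linear blend: mask=1 takes overlay, mask=0 keeps base."""
    base = base.astype(np.float64)
    overlay = overlay.astype(np.float64)
    out = base * (1.0 - mask) + overlay * mask
    # Clip to the displayable range and convert back to 8-bit.
    return np.clip(out, 0, 255).astype(np.uint8)


# Synthetic 2 x 2 three-channel "images" stand in for real frames.
base = np.full((2, 2, 3), 100, dtype=np.uint8)
overlay = np.full((2, 2, 3), 200, dtype=np.uint8)

# Mask values: 0 keeps base, 1 takes overlay, 0.5 mixes both equally.
mask = np.zeros((2, 2, 3), dtype=np.float64)
mask[0, 1] = 1.0
mask[1, 0] = 0.5

result = blend(base, overlay, mask)
print(result[:, :, 0])
```

The Gaussian feathering in get_face_mask is what produces the in-between mask values near the face boundary, which is why the pasted face has no hard edge.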

Most of the code is adapted from the original post: https://blog.csdn.net/hongbin_xu/article/details/78878745

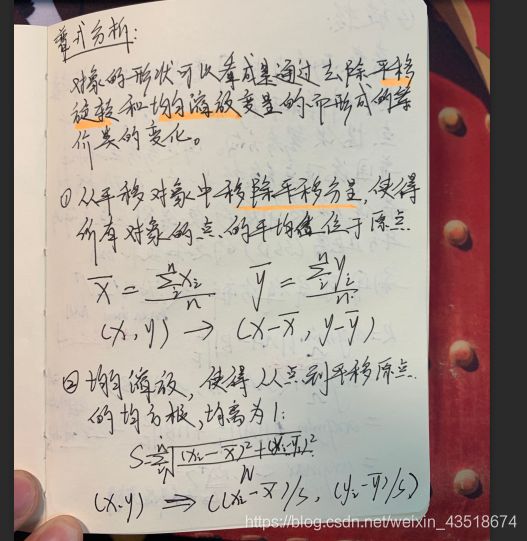

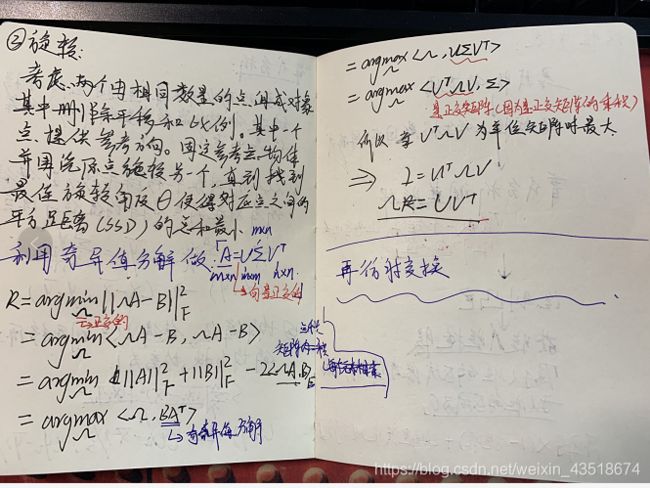

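transformation_from_points(self, points1, points2): this method uses Procrustes analysis to obtain the affine transformation matrix that maps one set of landmarks onto the other. For background, see: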

https://en.wikipedia.org/wiki/Orthogonal_Procrustes_problem

https://en.wikipedia.org/wiki/Transformation_matrix#Affine_transformations
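
To make the Procrustes step concrete, here is a small sketch that repeats the same steps (centre, normalise by the standard deviation, take the rotation from an SVD) on plain NumPy arrays and checks that a known rotation, scale, and translation are recovered. The function name similarity_from_points and the test points are assumptions made for illustration. Like the method above, the sketch does not guard against the reflection case.

```python
import numpy as np


def similarity_from_points(points1, points2):
    """Return a 3x3 matrix that maps points1 onto points2 in the least-squares sense."""
    p1 = np.asarray(points1, dtype=np.float64)
    p2 = np.asarray(points2, dtype=np.float64)
    # Remove translation by centring both point sets.
    c1, c2 = p1.mean(axis=0), p2.mean(axis=0)
    p1, p2 = p1 - c1, p2 - c2
    # Remove scale by dividing by the overall standard deviation.
    s1, s2 = p1.std(), p2.std()
    p1, p2 = p1 / s1, p2 / s2
    # Orthogonal Procrustes: the rotation comes from the SVD of p1^T p2.
    U, S, Vt = np.linalg.svd(p1.T @ p2)
    R = (U @ Vt).T
    scale = s2 / s1
    M = np.eye(3)
    M[:2, :2] = scale * R
    M[:2, 2] = c2 - scale * R @ c1
    return M


# Build a target point set from a known transform: rotate 30 degrees,
# scale by 2, translate by (5, -3).
theta = np.deg2rad(30)
R_true = np.array([[np.cos(theta), -np.sin(theta)],
                   [np.sin(theta), np.cos(theta)]])
src = np.array([[0.0, 0.0], [1.0, 0.0], [0.0, 1.0], [2.0, 3.0]])
dst = 2.0 * src @ R_true.T + np.array([5.0, -3.0])

M = similarity_from_points(src, dst)
# Apply M to the source points in homogeneous coordinates.
mapped = (M @ np.column_stack([src, np.ones(len(src))]).T).T[:, :2]
print(np.allclose(mapped, dst))
```

In the face swap, points1 and points2 are the ALIGN_POINTS landmarks of the two faces, and the first two rows of the resulting matrix are what warp_image passes to cv2.warpAffine.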