Analysis of Important Client Parameters in HBase-client-0.98.4

Important parameters

See: org.apache.hadoop.hbase.HConstants

hbase.rpc.timeout: the timeout for a single RPC request. Default: public static int DEFAULT_HBASE_RPC_TIMEOUT = 60000;

hbase.client.operation.timeout: unlike hbase.rpc.timeout, which bounds a single RPC call, this value bounds the total time of one operation (from the first call until the operation fails after n retries). Default: public static final int DEFAULT_HBASE_CLIENT_OPERATION_TIMEOUT = Integer.MAX_VALUE;

hbase.client.pause: the wait time before a failed call is retried; the wait grows as the number of retries increases. Default: public static long DEFAULT_HBASE_CLIENT_PAUSE = 100;

hbase.client.retries.number: the number of retries on failure. Default: public static int DEFAULT_HBASE_CLIENT_RETRIES_NUMBER = 31;

hbase.client.scanner.timeout.period: the timeout on the interval between two consecutive scan operations issued by the HBase client. Default: public static int DEFAULT_HBASE_CLIENT_SCANNER_TIMEOUT_PERIOD = 60000;
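To make the role of these defaults concrete, here is a minimal sketch of a lookup that falls back to a default when a key is not set. It uses a plain `java.util.Properties` as a stand-in for the real Hadoop `Configuration` object. The class `ClientTimeoutDefaults` and the helper `getLong` are hypothetical; only the keys and default values come from the list above.

```java
import java.util.Properties;

// Hypothetical stand-in for reading HBase client settings; this is not the HBase API.
final class ClientTimeoutDefaults {
    // Defaults copied from the HConstants values quoted above.
    static final long RPC_TIMEOUT = 60000L;
    static final long OPERATION_TIMEOUT = Integer.MAX_VALUE;
    static final long PAUSE = 100L;
    static final long RETRIES_NUMBER = 31L;
    static final long SCANNER_TIMEOUT_PERIOD = 60000L;

    // Returns the configured value for key, or the fallback when the key is absent.
    static long getLong(Properties conf, String key, long fallback) {
        String raw = conf.getProperty(key);
        return raw == null ? fallback : Long.parseLong(raw.trim());
    }

    public static void main(String[] args) {
        Properties conf = new Properties();
        // Override a single key; every other key keeps its default.
        conf.setProperty("hbase.rpc.timeout", "20000");

        System.out.println("rpc timeout = "
                + getLong(conf, "hbase.rpc.timeout", RPC_TIMEOUT));
        System.out.println("operation timeout = "
                + getLong(conf, "hbase.client.operation.timeout", OPERATION_TIMEOUT));
        System.out.println("pause = "
                + getLong(conf, "hbase.client.pause", PAUSE));
        System.out.println("retries = "
                + getLong(conf, "hbase.client.retries.number", RETRIES_NUMBER));
    }
}
```

With the default operation timeout of Integer.MAX_VALUE, a single operation is in practice limited by the retry count and the pauses between retries rather than by the operation timeout itself.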

Source code analysis

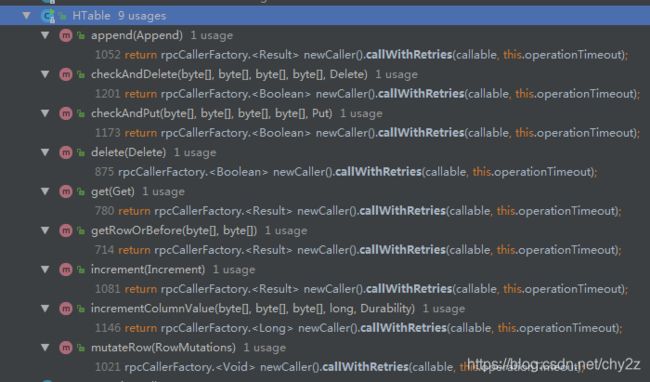

See: org.apache.hadoop.hbase.client.HTable

As the code shows, hbase.client.operation.timeout applies to operations such as get, append, increment, delete, and put.

Detailed analysis

    rpcCallerFactory.newCaller().callWithRetries(callable, this.operationTimeout)

The source is as follows:

    /**
     * Retries if invocation fails.
     * @param callTimeout Timeout for this call
     * @param callable The {@link RetryingCallable} to run.
     * @return an object of type T
     * @throws IOException if a remote or network exception occurs
     * @throws RuntimeException other unspecified error
     */
    @edu.umd.cs.findbugs.annotations.SuppressWarnings
        (value = "SWL_SLEEP_WITH_LOCK_HELD", justification = "na")
    public synchronized T callWithRetries(RetryingCallable<T> callable, int callTimeout)
    throws IOException, RuntimeException {
      this.callTimeout = callTimeout;
      List<RetriesExhaustedException.ThrowableWithExtraContext> exceptions =
        new ArrayList<RetriesExhaustedException.ThrowableWithExtraContext>();
      this.globalStartTime = EnvironmentEdgeManager.currentTimeMillis();
      for (int tries = 0;; tries++) {
        long expectedSleep = 0;
        try {
          beforeCall();
          callable.prepare(tries != 0); // if called with false, check table status on ZK
          return callable.call();
        } catch (Throwable t) {
          if (LOG.isTraceEnabled()) {
            LOG.trace("Call exception, tries=" + tries + ", retries=" + retries + ", retryTime=" +
                (EnvironmentEdgeManager.currentTimeMillis() - this.globalStartTime) + "ms", t);
          }
          // translateException throws exception when should not retry: i.e. when request is bad.
          t = translateException(t);
          callable.throwable(t, retries != 1);
          RetriesExhaustedException.ThrowableWithExtraContext qt =
              new RetriesExhaustedException.ThrowableWithExtraContext(t,
                  EnvironmentEdgeManager.currentTimeMillis(), toString());
          exceptions.add(qt);
          ExceptionUtil.rethrowIfInterrupt(t);
          if (tries >= retries - 1) {
            throw new RetriesExhaustedException(tries, exceptions);
          }
          // If the server is dead, we need to wait a little before retrying, to give
          // a chance to the regions to be
          // tries hasn't been bumped up yet so we use "tries + 1" to get right pause time
          expectedSleep = callable.sleep(pause, tries + 1);

          // If, after the planned sleep, there won't be enough time left, we stop now.
          long duration = singleCallDuration(expectedSleep);
          if (duration > this.callTimeout) {
            String msg = "callTimeout=" + this.callTimeout + ", callDuration=" + duration +
                ": " + callable.getExceptionMessageAdditionalDetail();
            throw (SocketTimeoutException)(new SocketTimeoutException(msg).initCause(t));
          }
        } finally {
          afterCall();
        }
        try {
          Thread.sleep(expectedSleep);
        } catch (InterruptedException e) {
          throw new InterruptedIOException("Interrupted after " + tries + " tries on " + retries);
        }
      }
    }

As this shows, hbase.client.pause (the pause field) and hbase.client.retries.number (the retries field) are used here as well.
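The interplay between the retry count, the pause, and the operation timeout can be sketched in isolation. The class below is a simplified, hypothetical model of the loop above, not the HBase implementation: it uses a fixed pause instead of the backoff table, does not translate exceptions, and the names `RetrySketch` and `operationTimeout` are chosen for illustration.

```java
import java.io.IOException;
import java.io.InterruptedIOException;
import java.util.concurrent.Callable;

// Simplified, hypothetical model of a retrying caller; not the HBase class itself.
final class RetrySketch {
    // Runs op up to `retries` times, sleeping `pause` ms between attempts.
    // Stops early when the elapsed time plus the planned sleep would exceed
    // operationTimeout, mirroring the "duration > callTimeout" check above.
    static <T> T callWithRetries(Callable<T> op, int retries, long pause, long operationTimeout)
            throws IOException {
        long start = System.currentTimeMillis();
        for (int tries = 0;; tries++) {
            try {
                return op.call();
            } catch (Exception e) {
                if (tries >= retries - 1) {
                    throw new IOException("Failed after " + (tries + 1) + " attempts", e);
                }
                long duration = (System.currentTimeMillis() - start) + pause;
                if (duration > operationTimeout) {
                    throw new IOException("operationTimeout=" + operationTimeout
                            + ", callDuration=" + duration, e);
                }
            }
            try {
                Thread.sleep(pause);
            } catch (InterruptedException e) {
                throw new InterruptedIOException("Interrupted after " + tries + " tries");
            }
        }
    }

    public static void main(String[] args) throws Exception {
        final int[] calls = {0};
        // Fails twice, then succeeds on the third attempt.
        String result = callWithRetries(() -> {
            if (++calls[0] < 3) {
                throw new IOException("transient failure");
            }
            return "ok";
        }, 5, 10L, 1000L);
        System.out.println(result + " after " + calls[0] + " calls"); // ok after 3 calls
    }
}
```

The sketch makes the two exit conditions visible: the loop ends either when the attempts are used up or when the next sleep would push the operation past its total time budget, whichever comes first.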

How is the retry wait time calculated?

    expectedSleep = callable.sleep(pause, tries + 1);

    @Override
    public long sleep(long pause, int tries) {
      return ConnectionUtils.getPauseTime(pause, tries);
    }

    public static long getPauseTime(final long pause, final int tries) {
      int ntries = tries;
      if (ntries >= HConstants.RETRY_BACKOFF.length) {
        ntries = HConstants.RETRY_BACKOFF.length - 1;
      }

      long normalPause = pause * HConstants.RETRY_BACKOFF[ntries];
      long jitter = (long)(normalPause * RANDOM.nextFloat() * 0.01f); // 1% possible jitter
      return normalPause + jitter;
    }

How region information is located

    callable.prepare(tries != 0);

This eventually calls

    metaLocation = locateRegion(parentTable, metaKey, true, false);
    ServerName servername = MetaRegionTracker.blockUntilAvailable(zkw, hci.rpcTimeout);

As this shows, hbase.rpc.timeout (rpcTimeout) is used here as well.

Everything above concerns single operations. Next, let's look at which of the important parameters affect batch operations.

    Result[] get(List<Get> gets) throws IOException;

Source code execution flow

1) org.apache.hadoop.hbase.client.HTable

    /**
     * {@inheritDoc}
     */
    @Override
    public Result[] get(List<Get> gets) throws IOException {
      if (gets.size() == 1) {
        return new Result[]{get(gets.get(0))};
      }
      try {
        Object [] r1 = batch((List)gets);

        // translate.
        Result [] results = new Result[r1.length];
        int i=0;
        for (Object o : r1) {
          // batch ensures if there is a failure we get an exception instead
          results[i++] = (Result) o;
        }

        return results;
      } catch (InterruptedException e) {
        throw (InterruptedIOException)new InterruptedIOException().initCause(e);
      }
    }

Exit point: batch((List)gets);

2) org.apache.hadoop.hbase.client.HConnectionManager

    @Override
    @Deprecated
    public <R> void processBatchCallback(
      List<? extends Row> list,
      TableName tableName,
      ExecutorService pool,
      Object[] results,
      Batch.Callback<R> callback)
      throws IOException, InterruptedException {

      // To fulfill the original contract, we have a special callback. This callback
      // will set the results in the Object array.
      ObjectResultFiller<R> cb = new ObjectResultFiller<R>(results, callback);
      AsyncProcess<?> asyncProcess = createAsyncProcess(tableName, pool, cb, conf);

      // We're doing a submit all. This way, the originalIndex will match the initial list.
      asyncProcess.submitAll(list);
      asyncProcess.waitUntilDone();

      if (asyncProcess.hasError()) {
        throw asyncProcess.getErrors();
      }
    }

Exit point: asyncProcess.submitAll(list);

3) org.apache.hadoop.hbase.client.AsyncProcess

    private void submit(List<Action<Row>> initialActions,
                        List<Action<Row>> currentActions, int numAttempt,
                        final HConnectionManager.ServerErrorTracker errorsByServer) {
      if (numAttempt > 1){
        retriesCnt.incrementAndGet();
      }

      // group per location => regions server
      final Map<HRegionLocation, MultiAction<Row>> actionsByServer =
          new HashMap<HRegionLocation, MultiAction<Row>>();

      NonceGenerator ng = this.hConnection.getNonceGenerator();
      for (Action<Row> action : currentActions) {
        HRegionLocation loc = findDestLocation(action.getAction(), action.getOriginalIndex());
        if (loc != null) {
          addAction(loc, action, actionsByServer, ng);
        }
      }

      if (!actionsByServer.isEmpty()) {
        sendMultiAction(initialActions, actionsByServer, numAttempt, errorsByServer);
      }
    }

Exit point: sendMultiAction(initialActions, actionsByServer, numAttempt, errorsByServer);

Key code analysis:

    HRegionLocation loc = findDestLocation(action.getAction(), action.getOriginalIndex());

Looking up the region location may have to be retried. The wait between attempts uses this.pause, which comes from hbase.client.pause:

    Thread.sleep(ConnectionUtils.getPauseTime(this.pause, tries));
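Both the single-operation path and this region lookup derive their sleep from ConnectionUtils.getPauseTime, so it helps to see what the pauses add up to. The sketch below recomputes them without the random jitter. The contents of `RETRY_BACKOFF` are not shown in this article; the table used here is an assumption about the 0.98 line and should be checked against HConstants in your version. `BackoffSketch`, `pauseTime`, and `totalSleep` are hypothetical names.

```java
// Hypothetical re-creation of the pause computation, without the 1% random jitter.
final class BackoffSketch {
    // ASSUMPTION: multipliers believed to match HConstants.RETRY_BACKOFF in 0.98;
    // verify against the HConstants source of the version you run.
    static final int[] RETRY_BACKOFF = {1, 2, 3, 5, 10, 20, 40, 100, 100, 100, 100, 200, 200};

    // Base pause times the multiplier for this try, clamped to the last table
    // entry once tries runs past the end of the table.
    static long pauseTime(long pause, int tries) {
        int ntries = Math.min(tries, RETRY_BACKOFF.length - 1);
        return pause * RETRY_BACKOFF[ntries];
    }

    // Sum of the sleeps taken by a callWithRetries-style loop before it gives up:
    // one sleep after each failed attempt except the last, each using tries + 1.
    static long totalSleep(long pause, int retries) {
        long total = 0;
        for (int tries = 0; tries < retries - 1; tries++) {
            total += pauseTime(pause, tries + 1);
        }
        return total;
    }

    public static void main(String[] args) {
        long pause = 100L; // default hbase.client.pause
        for (int tries = 1; tries <= 5; tries++) {
            System.out.println("tries=" + tries + " pause=" + pauseTime(pause, tries) + "ms");
        }
        // 31 is the default hbase.client.retries.number.
        System.out.println("total sleep = " + totalSleep(pause, 31) + "ms");
    }
}
```

Under these assumptions, the default pause of 100 ms and 31 retries add up to 448000 ms (about 448 seconds) of sleeping alone, before counting the time spent in the RPC calls themselves. This is why lowering hbase.client.retries.number or setting hbase.client.operation.timeout matters when a client must fail fast.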

4) org.apache.hadoop.hbase.client.AsyncProcess

    public void sendMultiAction(final List<Action<Row>> initialActions,
                                Map<HRegionLocation, MultiAction<Row>> actionsByServer,
                                final int numAttempt,
                                final HConnectionManager.ServerErrorTracker errorsByServer) {
      // Send the queries and add them to the inProgress list
      // This iteration is by server (the HRegionLocation comparator is by server portion only).
      for (Map.Entry<HRegionLocation, MultiAction<Row>> e : actionsByServer.entrySet()) {
        final HRegionLocation loc = e.getKey();
        final MultiAction<Row> multiAction = e.getValue();
        incTaskCounters(multiAction.getRegions(), loc.getServerName());
        Runnable runnable = Trace.wrap("AsyncProcess.sendMultiAction", new Runnable() {
          @Override
          public void run() {
            MultiResponse res;
            try {
              MultiServerCallable<Row> callable = createCallable(loc, multiAction);
              try {
                res = createCaller(callable).callWithoutRetries(callable, timeout);
              } catch (IOException e) {
                // The service itself failed . It may be an error coming from the communication
                // layer, but, as well, a functional error raised by the server.
                receiveGlobalFailure(initialActions, multiAction, loc, numAttempt, e,
                    errorsByServer);
                return;
              } catch (Throwable t) {
                // This should not happen. Let's log & retry anyway.
                LOG.error("#" + id + ", Caught throwable while calling. This is unexpected." +
                    " Retrying. Server is " + loc.getServerName() + ", tableName=" + tableName, t);
                receiveGlobalFailure(initialActions, multiAction, loc, numAttempt, t,
                    errorsByServer);
                return;
              }

              // Nominal case: we received an answer from the server, and it's not an exception.
              receiveMultiAction(initialActions, multiAction, loc, res, numAttempt, errorsByServer);

            } finally {
              decTaskCounters(multiAction.getRegions(), loc.getServerName());
            }
          }
        });

        try {
          this.pool.submit(runnable);
        } catch (RejectedExecutionException ree) {
          // This should never happen. But as the pool is provided by the end user, let's secure
          // this a little.
          decTaskCounters(multiAction.getRegions(), loc.getServerName());
          LOG.warn("#" + id + ", the task was rejected by the pool. This is unexpected." +
              " Server is " + loc.getServerName(), ree);
          // We're likely to fail again, but this will increment the attempt counter, so it will
          // finish.
          receiveGlobalFailure(initialActions, multiAction, loc, numAttempt, ree, errorsByServer);
        }
      }
    }

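Key code analysis:

1) this.pool.submit(runnable); shows that a thread pool is used to run multiple RPC operations in parallel.

2) res = createCaller(callable).callWithoutRetries(callable, timeout); shows that the timeout here comes from hbase.rpc.timeout, and that callWithoutRetries itself does not retry the RPC when it fails.

3) receiveGlobalFailure(initialActions, multiAction, loc, numAttempt, e, errorsByServer); retries the RPC after a failure, which in effect is a recursive call back into sendMultiAction. numAttempt is bounded by hbase.client.retries.number.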
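The three points above can be illustrated with a small standalone model: actions are grouped per server, each group is sent once on a pool thread, and a failed group is resubmitted with an incremented attempt counter until the limit is reached. This is a hypothetical sketch, not AsyncProcess itself. The `Rpc` interface, `runBatch`, and the server names are invented for illustration, and the pause before a resubmit and the region lookup are left out.

```java
import java.io.IOException;
import java.util.Arrays;
import java.util.HashMap;
import java.util.List;
import java.util.Map;
import java.util.concurrent.CountDownLatch;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.atomic.AtomicInteger;

// Hypothetical model of the batch path: parallel send per server, resubmit on failure.
final class BatchSketch {
    // Stand-in for one multi-action RPC to a single server.
    interface Rpc {
        void send(String server, List<String> rows) throws Exception;
    }

    // Sends every group in parallel and returns how many groups still failed
    // after maxAttempts attempts.
    static int runBatch(Map<String, List<String>> rowsByServer, Rpc rpc, int maxAttempts,
                        ExecutorService pool) throws InterruptedException {
        AtomicInteger failedGroups = new AtomicInteger();
        CountDownLatch done = new CountDownLatch(rowsByServer.size());
        for (Map.Entry<String, List<String>> e : rowsByServer.entrySet()) {
            submit(e.getKey(), e.getValue(), rpc, 1, maxAttempts, pool, failedGroups, done);
        }
        done.await(); // comparable in spirit to waitUntilDone()
        return failedGroups.get();
    }

    private static void submit(String server, List<String> rows, Rpc rpc, int numAttempt,
                               int maxAttempts, ExecutorService pool,
                               AtomicInteger failedGroups, CountDownLatch done) {
        pool.submit(() -> {
            try {
                rpc.send(server, rows); // a single attempt, no retry inside the call
                done.countDown();
            } catch (Exception ex) {
                if (numAttempt >= maxAttempts) {
                    failedGroups.incrementAndGet();
                    done.countDown();
                } else {
                    // The retry is a resubmission with the attempt counter bumped.
                    submit(server, rows, rpc, numAttempt + 1, maxAttempts, pool,
                            failedGroups, done);
                }
            }
        });
    }

    public static void main(String[] args) throws Exception {
        Map<String, List<String>> rowsByServer = new HashMap<>();
        rowsByServer.put("rs1", Arrays.asList("row-a", "row-b"));
        rowsByServer.put("rs2", Arrays.asList("row-c"));
        AtomicInteger rs1Calls = new AtomicInteger();
        // rs1 fails on its first attempt only; rs2 always succeeds.
        Rpc flaky = (server, rows) -> {
            if (server.equals("rs1") && rs1Calls.incrementAndGet() == 1) {
                throw new IOException("rs1 unavailable on first attempt");
            }
        };
        ExecutorService pool = Executors.newFixedThreadPool(2);
        try {
            System.out.println("failed groups: " + runBatch(rowsByServer, flaky, 3, pool));
        } finally {
            pool.shutdown();
        }
    }
}
```

Keeping the retry outside the individual call, as the sketch does, means a slow or failing server only delays its own group while the groups for healthy servers complete independently.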

From the source code analysis above we can conclude:

The get, append, increment, and delete operations depend on hbase.client.operation.timeout, hbase.rpc.timeout, hbase.client.pause, and hbase.client.retries.number.

Batch operations depend on hbase.rpc.timeout, hbase.client.pause, and hbase.client.retries.number.