How Does BoltDB Write Its Data?

A-Ha!

Here are three questions that came up while I was reading the BoltDB source code. I'll walk through our testing procedure to dig into the core of BoltDB's write mechanism.

Code link: yhyddr/quicksilver

First

First, my mentor posed a question: "Does BoltDB create a temporary file when it starts a read/write transaction?"

According to the quickstart, all data is stored in a single file on disk. Generally speaking, such a file has a linear structure, so inserting, updating, or deleting data means rearranging the space the file occupies. A temporary file is often used for this, for example the .swp file that Vim creates.

Let’s check whether BoltDB uses this method.

FileSystem Notify

We can use fsnotify to watch all the changes in a given directory. Here’s the code:

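package main

import (
	"log"

	"github.com/fsnotify/fsnotify"
)

func main() {
	watcher, err := fsnotify.NewWatcher()
	if err != nil {
		log.Fatal(err)
	}
	defer watcher.Close()

	// Watch the current directory, where the database file will live.
	if err := watcher.Add("./"); err != nil {
		log.Fatal(err)
	}

	// Log every event until the program is interrupted.
	for {
		select {
		case e, ok := <-watcher.Events:
			if !ok {
				return
			}
			log.Println(e.Op.String(), e.Name)
		case err, ok := <-watcher.Errors:
			if !ok {
				return
			}
			log.Println(err)
		}
	}
}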

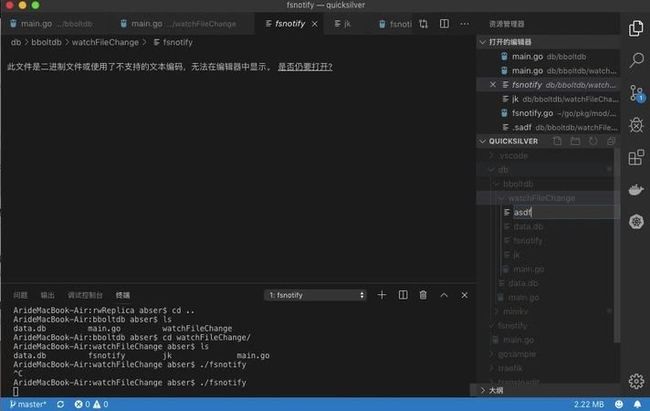

The testing procedure is as follows:

Our changes show up as records on the terminal. For example, when I create a file named asdf, the program logs CREATE asdf.

Update BoltDB File

Next, we need to write code that stores some data. This updates the BoltDB file and tells us whether a temporary file is used to replace the original. Watching the current directory should be enough: BoltDB is a simple key-value database, and it appears to touch only the database file in the current directory, not any system directory.

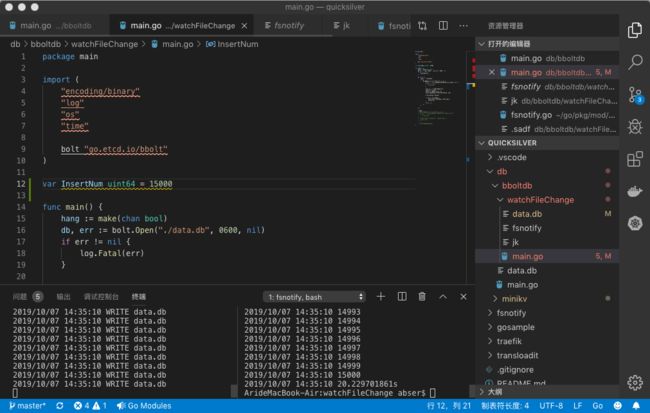

We wrote the code below to insert data:

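package main

import (
	"encoding/binary"
	"log"
	"time"

	bolt "go.etcd.io/bbolt"
)

// InsertNum controls how many records are inserted before the program exits.
// It is a uint64 so it can be compared with the value from NextSequence.
var InsertNum uint64 = 15000

func main() {
	db, err := bolt.Open("./data.db", 0600, nil)
	if err != nil {
		log.Fatal(err)
	}
	defer db.Close()

	start := time.Now()
	for {
		var num uint64
		// Each iteration is one read/write transaction inserting one record.
		err := db.Update(func(tx *bolt.Tx) error {
			b, err := tx.CreateBucketIfNotExists([]byte("cats"))
			if err != nil {
				return err
			}
			num, err = b.NextSequence()
			if err != nil {
				return err
			}
			log.Println(num)
			// Use the 8-byte big-endian sequence number as both key and value.
			byteid := make([]byte, 8)
			binary.BigEndian.PutUint64(byteid, num)
			return b.Put(byteid, byteid)
		})
		if err != nil {
			log.Fatal(err)
		}
		// Stop after the last transaction has committed and report the time.
		if num >= InsertNum {
			log.Println(time.Since(start))
			return
		}
	}
}

Note that the variable InsertNum controls how many records are inserted.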

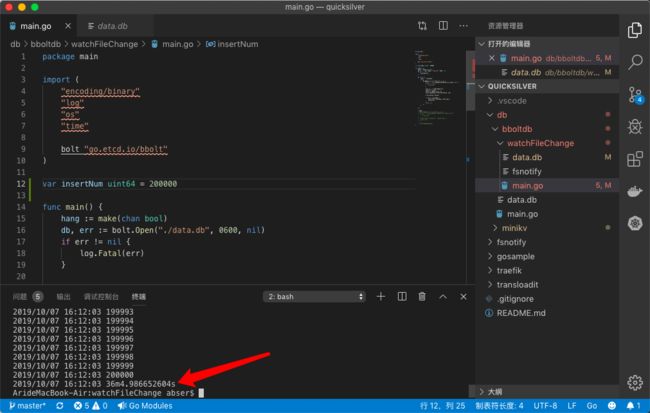

Then we run it. (Be sure to start the fsnotify watcher first.)

We inserted 900 records, which took 20 s, and found that only the data.db file was written. So we seem to have an answer to the first question: No!

We could also get the answer from the source code.

Second

Once we knew the answer to the first question, my mentor quickly asked the second.

How much data can a BoltDB file store, and how large can it get?

We want to know how much.

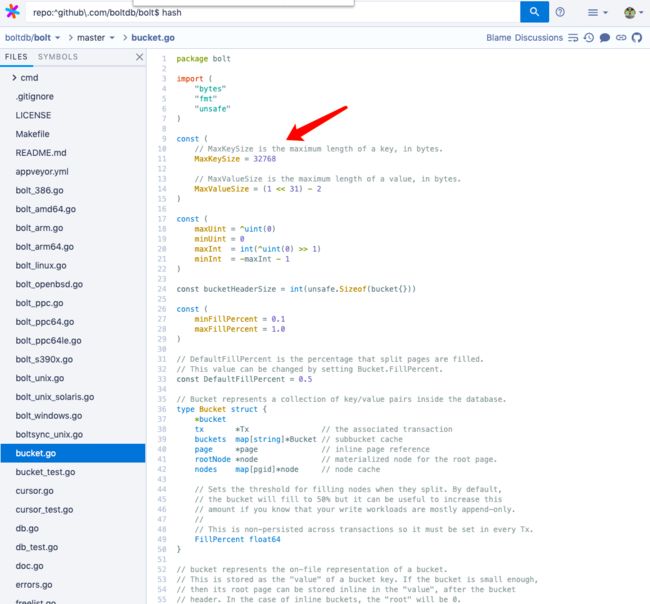

Let's find it in the source code. BoltDB declares these sizes at the top of a Go file:

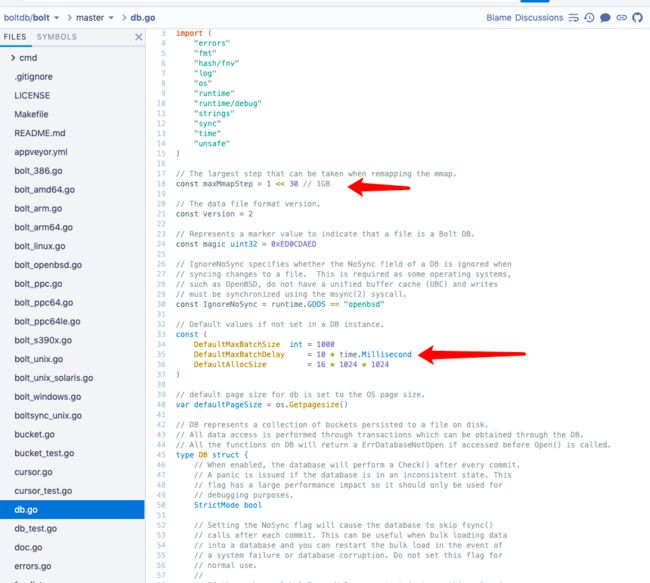

From this we learn that minFillPercent and maxFillPercent are 0.1 and 1.0. From db.go we also learn that the BoltDB page size is determined by the OS page size.

This means a page may be only partially filled.
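To see what these numbers mean in bytes, here is a small sketch that clamps a fill percent to the 0.1 to 1.0 range and multiplies it by the OS page size. The function splitThreshold and the constant defaultFillPercent (0.5, the 50% limit discussed later in this article) are names I made up for illustration, and the clamping is my reading of how the two bounds are meant to be used, not code copied from BoltDB.

```go
package main

import (
	"fmt"
	"os"
)

const (
	minFillPercent     = 0.1 // lower bound quoted above
	maxFillPercent     = 1.0 // upper bound quoted above
	defaultFillPercent = 0.5 // assumption: the 50% limit discussed later
)

// splitThreshold (hypothetical helper) returns how many bytes of a page
// may be filled for the given fill percent, after clamping the percent
// to [minFillPercent, maxFillPercent].
func splitThreshold(pageSize int, fillPercent float64) int {
	if fillPercent < minFillPercent {
		fillPercent = minFillPercent
	} else if fillPercent > maxFillPercent {
		fillPercent = maxFillPercent
	}
	return int(float64(pageSize) * fillPercent)
}

func main() {
	pageSize := os.Getpagesize()
	fmt.Println("OS page size:", pageSize)
	fmt.Println("bytes usable at default fill:", splitThreshold(pageSize, defaultFillPercent))
}
```

With a 4096-byte page and a fill percent of 0.5, the threshold comes out to 2048 bytes, which is why a page can end up only half used.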

Importantly, maxMmapStep = 1 << 30, which is 1 GB, is the largest step used when remapping.
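The sketch below shows one way a mapping size could grow with such a step limit. It is modeled on my understanding of the BoltDB source, and two details are assumptions on my part rather than facts from this article: the size doubles starting from 32 KB until it reaches 1 GB, and beyond that it is rounded up to the next multiple of 1 GB. The function name nextMmapSize is hypothetical.

```go
package main

import "fmt"

const maxMmapStep = 1 << 30 // 1 GB, the value quoted above

// nextMmapSize (hypothetical helper) returns a mapping size large enough
// to hold size bytes.
func nextMmapSize(size int64) int64 {
	// Assumption: below 1 GB, round up to a power of two, starting at 32 KB.
	for i := uint(15); i <= 30; i++ {
		if size <= 1<<i {
			return 1 << i
		}
	}
	// Above 1 GB, round up to the next multiple of maxMmapStep.
	if rem := size % maxMmapStep; rem > 0 {
		size += maxMmapStep - rem
	}
	return size
}

func main() {
	for _, size := range []int64{1, 40000, 8500000, 1 << 30, 1<<30 + 1} {
		fmt.Printf("file of %d bytes -> map %d bytes\n", size, nextMmapSize(size))
	}
}
```

Note that this is the size of the memory mapping, not the size of the file on disk.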

We already know there is no temporary file, which means BoltDB uses memory, through mmap, to hold its working data.

Using mmap means that your BoltDB file should not be larger than the memory space that can be allocated for the mapping.

Third

From the previous questions, we learned a lot about BoltDB. But my mentor posed one more question:

For a node, the author sets a 50% capacity limit; beyond that, the node is split into two. Does our data then use only 50% of the space that a BoltDB database file holds?

The limit exists because the node split strategy keeps the size of each node from overflowing the OS page size.

Answering that question requires knowing the on-disk layout of a BoltDB file, which I'll explain in detail in my next article. Of course, we know the space ratio should not exceed 0.5.

For now, I'll just share some measurements.

I first inserted 100000 records, each with an auto-increment key and the same value.

Records 1 -> 100000 total 977784 bytes, because BoltDB stores them in the file as bytes.

We found that the data file used 8.5 MB of space.

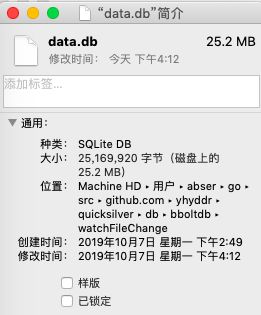

Records 100000 -> 200000: the file expanded to 25.2 MB when I inserted roughly the 110000th record.

In the end, the file was still 25.2 MB when it held 200000 records.
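As a quick check on the first measurement, the space ratio can be computed from the two numbers given above. This is just arithmetic, and it assumes that the 8.5 figure means 8.5 MB with 10^6 bytes per MB; the function name spaceRatio is my own.

```go
package main

import "fmt"

// spaceRatio (hypothetical helper) returns the fraction of the file
// occupied by the raw key/value bytes.
func spaceRatio(payloadBytes, fileBytes float64) float64 {
	return payloadBytes / fileBytes
}

func main() {
	const payload = 977784             // bytes for records 1 -> 100000, from the text
	const fileSize = 8.5 * 1000 * 1000 // assumption: 8.5 MB, 10^6 bytes per MB
	fmt.Printf("space ratio: %.3f\n", spaceRatio(payload, fileSize))
	// prints "space ratio: 0.115"
}
```

So for the first 100000 records, the raw data takes up a bit more than a tenth of the file, well under 0.5.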