Configuring OpenCV in Visual Studio

During configuration I ran into problems with the Debug build; I solved them by following this blogger's method:

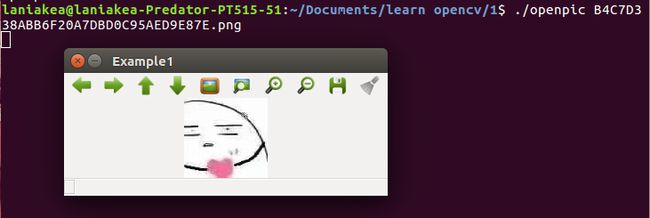

Loading an image

Example2-2

The code:

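```cpp
#include <opencv2/opencv.hpp> // pulls in every supported OpenCV module

int main(int argc, char** argv) {
    if (argc < 2) {
        return -1; // no image path given on the command line
    }
    // -1 == cv::IMREAD_UNCHANGED: load the file as-is (keeps alpha channel and bit depth)
    cv::Mat img = cv::imread(argv[1], -1);
    if (img.empty()) {
        return -1; // file missing or not a readable image
    }
    cv::namedWindow("Example1", cv::WINDOW_AUTOSIZE);
    cv::imshow("Example1", img);
    cv::waitKey(0); // wait indefinitely for a key press
    cv::destroyWindow("Example1");
    return 0;
}
```

Because all of these names live in the `cv` namespace, the following is equivalent: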

#include

using namespace cv;

int main(int argc, char** argv) {

Mat img = imread(argv[1], -1);

if (img.empty()) {

return -1;

}

namedWindow("Example1", WINDOW_AUTOSIZE);

imshow("Example1", img);

waitKey(0);

destroyWindow("Example1");

return 0;

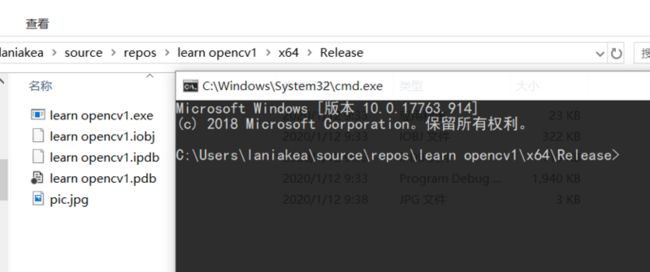

} 然后就可以运行了,上面贴出的网页博主只设置了debug,因为我的代码是按照learning OpenCV3中来的,里面加载图片是在命令框中进行的,如果是在window下,还要再设置一下release,和配debug过程一样的。

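Now let me try the same thing on Ubuntu, and dust off CMake, which I haven't used in a long time.

First create a .cpp file; mine is called openpic.cpp.

Then write a CMakeLists.txt with the following content: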

```cmake
cmake_minimum_required(VERSION 3.5.1)
project(openpic)
find_package(OpenCV REQUIRED)
add_executable(openpic openpic.cpp)
target_link_libraries(openpic ${OpenCV_LIBS})
```

Then run the following commands in the terminal, one after the other:

```shell
cmake .
make
```

Loading a video

Example2-3

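```cpp
#include "opencv2/highgui/highgui.hpp"
#include "opencv2/imgproc/imgproc.hpp"
#include <string>

int main(int argc, char** argv) {
    if (argc < 2) return -1;                 // expects the video path as argv[1]
    cv::namedWindow("Example3", cv::WINDOW_AUTOSIZE);
    cv::VideoCapture cap;
    cap.open(std::string(argv[1]));          // argv[1]: path + file name of the video
    if (!cap.isOpened()) return -1;          // could not open the video
    cv::Mat frame;
    for (;;) {
        cap >> frame;                        // read frame by frame until all are consumed
        if (frame.empty()) break;            // ran out of film
        cv::imshow("Example3", frame);
        if (cv::waitKey(33) >= 0) break;     // show each frame ~33 ms; any key quits
    }
    return 0;
}
```

The CMakeLists.txt for this program: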

```cmake
cmake_minimum_required(VERSION 3.5.1)
project(openvideo)
find_package(OpenCV REQUIRED)
add_executable(openvideo openvideo.cpp)
target_link_libraries(openvideo ${OpenCV_LIBS})
```

Then build it and run it on a video file (here red.avi):

```shell
cmake .
make
./openvideo red.avi
```
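The loop above waits a fixed 33 ms per frame, which corresponds to roughly 30 frames per second. A minimal sketch of deriving that delay from the video's own frame rate instead (plain C++; the helper name `frameDelayMs` is hypothetical, and the fps value would come from something like `cap.get(cv::CAP_PROP_FPS)`):

```cpp
#include <cmath>

// Hypothetical helper: milliseconds to pass to cv::waitKey() so that playback
// runs at roughly `fps` frames per second. Falls back to 33 ms (about 30 fps,
// as in Example2-3) when the frame rate is unknown (<= 0 or NaN).
int frameDelayMs(double fps) {
    if (!(fps > 0.0)) return 33;
    int ms = static_cast<int>(std::lround(1000.0 / fps));
    return ms < 1 ? 1 : ms; // waitKey(0) would block forever, so never return 0
}
```

Usage would be `cv::waitKey(frameDelayMs(cap.get(cv::CAP_PROP_FPS)))`. The real playback rate still ends up slightly below fps, because decoding and `imshow()` also take time.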

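Adding controls to the video player

- Add a progress bar (a slider trackbar)
- Press S to single-step (show one frame, then pause)
- Press R to run
- Whenever the user moves the progress bar, switch to single-step (paused) mode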

Example2-4

```cpp
#include "opencv2/highgui/highgui.hpp"
#include "opencv2/imgproc/imgproc.hpp"
#include <iostream>
#include <string>

using namespace std;

// By convention, the g_ prefix marks a global variable
int g_slider_position = 0;
// g_run: as long as it is nonzero, new frames are shown.
// >0: show that many frames, then pause; <0: run continuously.
int g_run = 1;      // start out in single-step mode
int g_dontset = 0;  // 1 while the program (not the user) moves the slider
cv::VideoCapture g_cap;

void onTrackbarSlide(int pos, void*) {
    g_cap.set(cv::CAP_PROP_POS_FRAMES, pos);  // seek to the chosen frame
    if (!g_dontset) {  // the slider was moved by the user
        g_run = 1;     // switch to single-step mode
    }
    g_dontset = 0;
}

int main(int argc, char** argv) {
    if (argc < 2) return -1;
    cv::namedWindow("Example2_4", cv::WINDOW_AUTOSIZE);
    g_cap.open(string(argv[1]));
    int frames = (int)g_cap.get(cv::CAP_PROP_FRAME_COUNT);  // total number of frames
    int tmpw = (int)g_cap.get(cv::CAP_PROP_FRAME_WIDTH);    // width
    int tmph = (int)g_cap.get(cv::CAP_PROP_FRAME_HEIGHT);   // height
    cout << "Video has " << frames << " frames of dimensions("
         << tmpw << ", " << tmph << ")." << endl;
    // Calibrate the slider trackbar
    cv::createTrackbar("Position",          // trackbar label
                       "Example2_4",        // name of the window to place it in
                       &g_slider_position,  // variable bound to the slider
                       frames,              // maximum value (total frame count)
                       onTrackbarSlide      // callback (pass 0 if not needed)
    );
    cv::Mat frame;
    for (;;) {
        if (g_run != 0) {
            g_cap >> frame;
            if (frame.empty()) break;
            int current_pos = (int)g_cap.get(cv::CAP_PROP_POS_FRAMES);
            g_dontset = 1;  // the callback fired by setTrackbarPos() must not change modes
            cv::setTrackbarPos("Position", "Example2_4", current_pos);
            cv::imshow("Example2_4", frame);
            g_run -= 1;
        }
        char c = (char)cv::waitKey(10);
        if (c == 's') {  // single step
            g_run = 1;
            cout << "Single step, run = " << g_run << endl;
        }
        if (c == 'r') {  // run mode
            g_run = -1;
            cout << "Run mode, run = " << g_run << endl;
        }
        if (c == 27) break;  // Esc quits
    }
    return 0;
}
```

Add a global variable that holds the position of the progress bar.

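Add a callback that updates this variable and seeks the video to the requested position.

When the user adjusts the position with the progress bar, set g_run = 1 (single-step mode).

Requirement: as the video advances, the position of the progress bar should advance too.

When the program itself moves the slider, the g_dontset flag keeps this from triggering single-step mode.

The callback routine void onTrackbarSlide(int pos, void *) is passed a 32-bit integer pos: the new slider position.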
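The interplay of g_run and g_dontset is easiest to check without a window. Below is a minimal model of that logic in plain C++ with no OpenCV calls; the struct `PlayerState` and its methods are hypothetical names. It assumes, as the g_dontset flag in Example2-4 does, that `cv::setTrackbarPos()` fires the trackbar callback.

```cpp
// Hypothetical model of the g_run / g_dontset logic in Example2-4.
struct PlayerState {
    int run = 1;           // >0: show that many more frames; <0: run forever; 0: paused
    bool dontset = false;  // true while the program itself moves the slider

    // One loop iteration: returns true if a new frame should be shown.
    bool stepFrame() {
        if (run == 0) return false;  // paused
        if (run > 0) run -= 1;       // single-step: count down to 0
        return true;                 // run < 0 stays negative: continuous playback
    }
    // Mirrors onTrackbarSlide(): a user drag switches to single-step mode.
    void onTrackbarSlide() {
        if (!dontset) run = 1;
        dontset = false;
    }
    // Mirrors the main loop updating the slider via setTrackbarPos().
    void programMovesSlider() {
        dontset = true;
        onTrackbarSlide();  // assumed: setTrackbarPos() invokes the callback
    }
    // Mirrors the 's' and 'r' key handling.
    void onKey(char c) {
        if (c == 's') run = 1;
        if (c == 'r') run = -1;
    }
};
```

One deliberate difference: the original keeps decrementing g_run in run mode (-1, -2, ...), while the sketch leaves it at -1, which has the same effect without ever drifting toward integer overflow.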

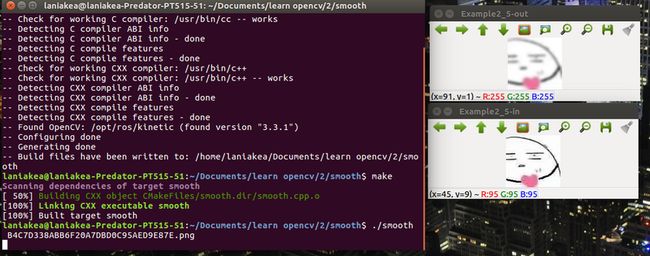

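A simple transformation: smoothing

Many basic vision tasks involve applying filters to a video stream. Later we will process every frame of a video this way; for now we start with the simplest case, smoothing a single image.

Example2-5

Smoothing reduces the information content of an image by convolving it with a Gaussian or a similar function.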
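To make the Gaussian idea concrete, here is a minimal plain-C++ sketch (no OpenCV; the name `gaussianKernel1D` is hypothetical) of the 1-D weights such a filter uses. The formula is the one documented for `cv::getGaussianKernel()` when sigma > 0. A 2-D Gaussian is separable, so a call like `cv::GaussianBlur(image, out, cv::Size(5, 5), 3, 3)` amounts to filtering with a kernel like this along x and then along y. The size must be odd so that the kernel has a central tap.

```cpp
#include <cmath>
#include <vector>

// Hypothetical helper: 1-D Gaussian kernel of odd size ksize and standard
// deviation sigma, normalized so the weights sum to 1:
//   G(i) = alpha * exp(-(i - (ksize - 1) / 2)^2 / (2 * sigma^2)),  i = 0..ksize-1
std::vector<double> gaussianKernel1D(int ksize, double sigma) {
    std::vector<double> k(ksize);
    const double center = (ksize - 1) / 2.0;
    double sum = 0.0;
    for (int i = 0; i < ksize; ++i) {
        const double d = i - center;
        k[i] = std::exp(-(d * d) / (2.0 * sigma * sigma));
        sum += k[i];
    }
    for (double& w : k) w /= sum;  // alpha = 1 / sum
    return k;
}
```

With sigma = 3 and a 5-tap kernel the weights are fairly flat (the outer taps are still large), which is why the example blurs twice to get a visible effect.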

#include

int main(int argc, char** argv) {

cv::Mat image = cv::imread(argv[1], -1);

// Create some windows to show the input

// and output images in.

//

cv::namedWindow("Example2_5-in", cv::WINDOW_AUTOSIZE);

cv::namedWindow("Example2_5-out", cv::WINDOW_AUTOSIZE);

// Create a window to show our input image

//

cv::imshow("Example2_5-in", image);

// Create an image to hold the smoothed output

//

cv::Mat out;

// Do the smoothing

// ( Note: Could use GaussianBlur(), blur(), medianBlur() or bilateralFilter(). )

//

cv::GaussianBlur(image, out, cv::Size(5, 5), 3, 3);

//blurred by a 5 × 5 Gaussian convolution filter

//Gaussian kernel should always be given in odd numbers

//input : image ; written to out

cv::GaussianBlur(out, out, cv::Size(5, 5), 3, 3);

// Show the smoothed image in the output window

//

cv::imshow("Example2_5-out", out);

// Wait for the user to hit a key, windows will self destruct

//

cv::waitKey(0);

return 0;

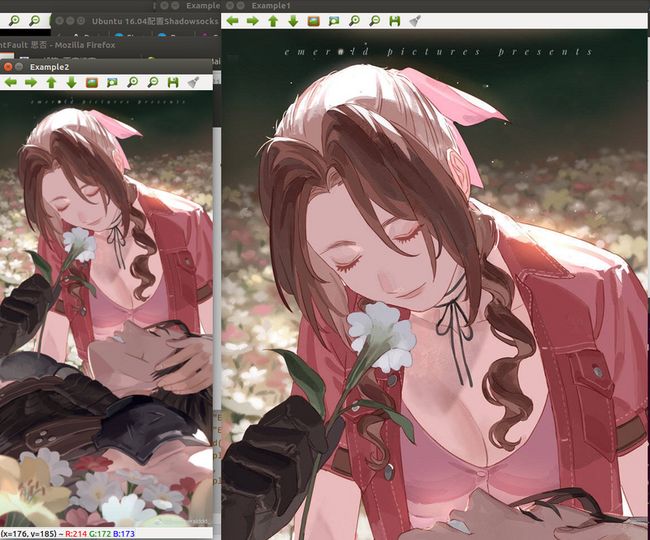

} 略复杂一点的

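This one uses Gaussian blurring to downsample an image by a factor of 2.

Downsampling means shrinking the image. It is used to:

1. make the image fit the display area;
2. build progressively smaller versions of the image, i.e. a scale space (image pyramid).

Signal processing and the Nyquist-Shannon sampling theorem explain why the blur is needed. Downsampling a signal (here: keeping every other pixel) amounts to multiplying it by a series of delta functions ("spikes"), and this sampling introduces high frequencies into the result (aliasing). To avoid this, we first band-limit the signal with a low-pass filter, so that all remaining frequencies lie below half the new sampling rate. The Gaussian blur is such a low-pass filter.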
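The aliasing argument is easy to see on a 1-D signal. The minimal plain-C++ sketch below (the names `decimate`, `pyrDown1D` and `reflect101` are hypothetical) compares naive decimation with a 1-D analogue of `cv::pyrDown()`, which low-pass filters with the 5-tap binomial kernel [1 4 6 4 1]/16 (OpenCV's pyrDown uses the 2-D outer product of this kernel) before dropping every other sample. Border handling mimics OpenCV's default reflect-101 mode.

```cpp
#include <cstddef>
#include <vector>

// Reflect-101 border index (..., 2, 1 | 0, 1, 2, ... | n-2, n-3, ...).
inline std::size_t reflect101(long i, long n) {
    if (n == 1) return 0;
    while (i < 0 || i >= n) {
        if (i < 0) i = -i;
        if (i >= n) i = 2 * (n - 1) - i;
    }
    return static_cast<std::size_t>(i);
}

// Naive decimation: keep every other sample with no filtering (aliases).
std::vector<double> decimate(const std::vector<double>& s) {
    std::vector<double> out;
    for (std::size_t i = 0; i < s.size(); i += 2) out.push_back(s[i]);
    return out;
}

// 1-D analogue of pyrDown: smooth with [1 4 6 4 1]/16, then keep every
// other sample. The output length is (n + 1) / 2, as in cv::pyrDown().
std::vector<double> pyrDown1D(const std::vector<double>& s) {
    const double k[5] = {1, 4, 6, 4, 1};
    const long n = static_cast<long>(s.size());
    std::vector<double> out;
    for (long i = 0; i < n; i += 2) {
        double acc = 0.0;
        for (long j = -2; j <= 2; ++j) acc += k[j + 2] * s[reflect101(i + j, n)];
        out.push_back(acc / 16.0);
    }
    return out;
}
```

On the alternating signal 0, 255, 0, 255, ... (the highest frequency a sampled signal can carry), `decimate()` returns all zeros, a "flat black" result that has nothing to do with the input, while `pyrDown1D()` returns 127.5 everywhere, the signal's true average.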

Example 2-6

cv::pyrDown()

#include

int main(int argc, char** argv) {

cv::Mat img1, img2;

cv::namedWindow("Example1", cv::WINDOW_AUTOSIZE);

cv::namedWindow("Example2", cv::WINDOW_AUTOSIZE);

img1 = cv::imread(argv[1]);

cv::imshow("Example1", img1);

cv::pyrDown(img1, img2);

cv::imshow("Example2", img2);

cv::waitKey(0);

return 0;

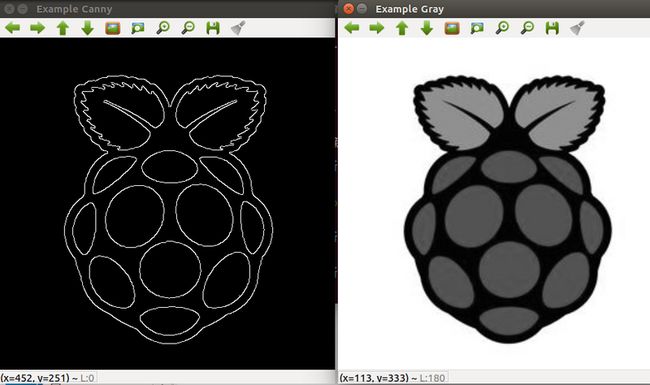

}; 边缘检测

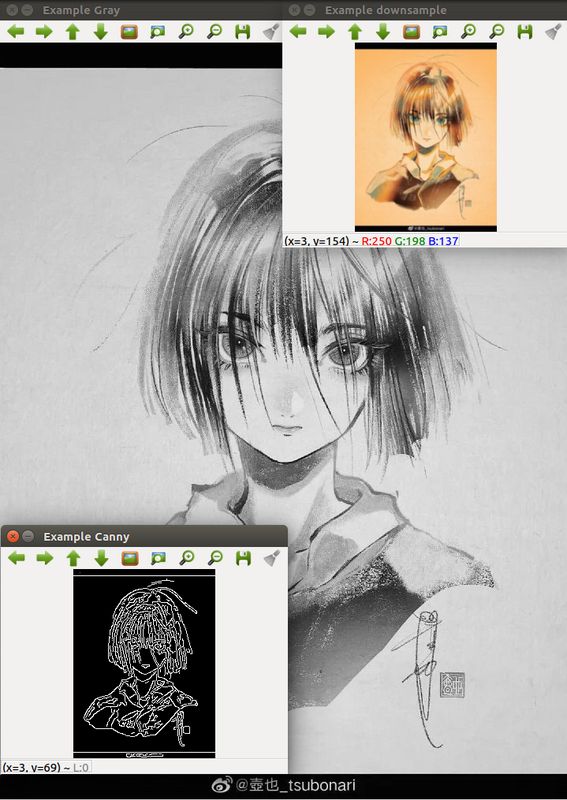

Example2-7

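- Canny edge detector

`cv::Canny()` is given a single-channel image here, so the picture is first converted to grayscale.

`cv::cvtColor()` with the conversion code `cv::COLOR_BGR2GRAY` turns a (BGR) color image into a grayscale one.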
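`cv::COLOR_BGR2GRAY` computes a weighted sum of the three channels, Y = 0.299 R + 0.587 G + 0.114 B (the weights listed in OpenCV's color conversion documentation). A minimal plain-C++ sketch of that formula for one pixel in OpenCV's B, G, R order (`bgrToGray` is a hypothetical name). OpenCV itself uses a fixed-point implementation, so individual values may differ from this sketch by 1.

```cpp
#include <cmath>
#include <cstdint>

// Hypothetical helper: gray value of one 8-bit pixel given in B, G, R
// channel order, rounded to the nearest integer.
std::uint8_t bgrToGray(std::uint8_t b, std::uint8_t g, std::uint8_t r) {
    const double y = 0.114 * b + 0.587 * g + 0.299 * r;
    return static_cast<std::uint8_t>(std::lround(y));
}
```

Green dominates the weighting, so a pure green pixel comes out much brighter in the gray image than a pure blue one.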

```cpp
#include <opencv2/opencv.hpp>

int main(int argc, char** argv) {
    if (argc < 2) return -1;
    cv::Mat img_rgb, img_gry, img_cny;  // img_rgb actually holds BGR data
    cv::namedWindow("Example Gray", cv::WINDOW_AUTOSIZE);
    cv::namedWindow("Example Canny", cv::WINDOW_AUTOSIZE);
    img_rgb = cv::imread(argv[1]);
    if (img_rgb.empty()) return -1;
    cv::cvtColor(img_rgb, img_gry, cv::COLOR_BGR2GRAY);
    cv::imshow("Example Gray", img_gry);
    // Hysteresis thresholds 10 and 100, 3x3 Sobel aperture, L2 gradient norm
    cv::Canny(img_gry, img_cny, 10, 100, 3, true);
    cv::imshow("Example Canny", img_cny);
    cv::waitKey(0);
    return 0;
}
```

Example2-8

Downsample twice, then run Canny: several subroutines chained into a simple image pipeline.

```cpp
#include <opencv2/opencv.hpp>

int main(int argc, char** argv) {
    if (argc < 2) return -1;
    cv::Mat img_rgb, img_gry, img_cny, img_pyr, img_pyr2;
    cv::namedWindow("Example Gray", cv::WINDOW_AUTOSIZE);
    cv::namedWindow("Example Canny", cv::WINDOW_AUTOSIZE);
    img_rgb = cv::imread(argv[1]);
    if (img_rgb.empty()) return -1;
    cv::cvtColor(img_rgb, img_gry, cv::COLOR_BGR2GRAY);
    cv::pyrDown(img_gry, img_pyr);    // 1/2 size
    cv::pyrDown(img_pyr, img_pyr2);   // 1/4 size
    cv::imshow("Example Gray", img_gry);
    cv::Canny(img_pyr2, img_cny, 10, 100, 3, true);
    cv::imshow("Example Canny", img_cny);
    cv::waitKey(0);
    return 0;
}
```

Example 2-9

```cpp
#include <opencv2/opencv.hpp>
#include <iostream>

int main(int argc, char** argv) {
    if (argc < 2) return -1;
    int x = 16, y = 32;
    cv::Mat img_rgb, img_gry, img_cny, img_pyr, img_pyr2, img_pyr3;
    cv::namedWindow("Example Gray", cv::WINDOW_AUTOSIZE);
    cv::namedWindow("Example Canny", cv::WINDOW_AUTOSIZE);
    cv::namedWindow("Example downsample", cv::WINDOW_AUTOSIZE);
    img_rgb = cv::imread(argv[1]);
    if (img_rgb.empty()) return -1;

    // at<>() takes (row, column), i.e. (y, x); channels are stored as B, G, R
    cv::Vec3b intensity = img_rgb.at<cv::Vec3b>(y, x);
    uchar blue = intensity[0];
    uchar green = intensity[1];
    uchar red = intensity[2];
    std::cout << "At (x,y) = (" << x << ", " << y
              << "): (blue, green, red) = (" << (unsigned int)blue << ", "
              << (unsigned int)green << ", " << (unsigned int)red << ")" << std::endl;

    cv::cvtColor(img_rgb, img_gry, cv::COLOR_BGR2GRAY);
    std::cout << "Gray pixel there is: "
              << (unsigned int)img_gry.at<uchar>(y, x) << std::endl;

    cv::pyrDown(img_gry, img_pyr);
    cv::pyrDown(img_pyr, img_pyr2);
    cv::imshow("Example Gray", img_gry);
    cv::Canny(img_pyr2, img_cny, 10, 100, 3, true);

    x /= 4; y /= 4;  // two pyrDown() calls shrink each axis by 4
    std::cout << "Pyramid2 pixel there is: "
              << (unsigned int)img_pyr2.at<uchar>(y, x) << std::endl;
    img_cny.at<uchar>(y, x) = 128;  // set the Canny pixel there to 128 (row y, column x)

    // Also downsample the color image twice for comparison
    cv::pyrDown(img_rgb, img_pyr3);
    cv::pyrDown(img_pyr3, img_pyr3);
    cv::imshow("Example downsample", img_pyr3);
    cv::imshow("Example Canny", img_cny);
    cv::waitKey(0);
    return 0;
}
```

Reading from a camera

Reading from a camera is analogous to reading from a file: `cv::VideoCapture` also accepts an integer camera ID instead of a file name. The example below reads a color video, displays it, and writes its log-polar transform to a new video file.

Example 2-10

```cpp
#include <opencv2/opencv.hpp>
#include <iostream>

int main(int argc, char* argv[]) {
    if (argc < 3) {
        std::cerr << "usage: " << argv[0] << " <input video> <output video>" << std::endl;
        return -1;
    }
    cv::namedWindow("Example2_11", cv::WINDOW_AUTOSIZE);
    cv::namedWindow("Log_Polar", cv::WINDOW_AUTOSIZE);

    // (Note: could capture from a camera by giving a camera id as an int.)
    cv::VideoCapture capture(argv[1]);
    if (!capture.isOpened()) return -1;

    double fps = capture.get(cv::CAP_PROP_FPS);  // frame rate of the source video
    cv::Size size(
        (int)capture.get(cv::CAP_PROP_FRAME_WIDTH),
        (int)capture.get(cv::CAP_PROP_FRAME_HEIGHT)
    );

    // Every frame is converted to log-polar format and written out
    cv::VideoWriter writer;
    writer.open(argv[2],                                     // name of the new file
                cv::VideoWriter::fourcc('M', 'J', 'P', 'G'), // codec (Motion JPEG)
                fps,                                         // replay frame rate
                size                                         // frame size
    );
    if (!writer.isOpened()) return -1;

    cv::Mat logpolar_frame, bgr_frame;
    for (;;) {
        capture >> bgr_frame;
        if (bgr_frame.empty()) break;  // end if done
        cv::imshow("Example2_11", bgr_frame);
        // cv::logPolar() is deprecated in newer OpenCV in favor of cv::warpPolar()
        cv::logPolar(
            bgr_frame,                 // input color frame
            logpolar_frame,            // output log-polar frame
            cv::Point2f(               // center point for the log-polar transformation
                bgr_frame.cols / 2.0f, // x
                bgr_frame.rows / 2.0f  // y
            ),
            40,                        // magnitude (scale parameter)
            cv::WARP_FILL_OUTLIERS     // fill outliers with zero
        );
        cv::imshow("Log_Polar", logpolar_frame);
        writer << logpolar_frame;
        char c = (char)cv::waitKey(10);
        if (c == 27) break;  // allow the user to break out with Esc
    }
    capture.release();
    return 0;
}
```

Terms: an argument is a value passed to a function or program; the replay frame rate is the rate at which the written video plays back.
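`cv::logPolar()` resamples the image so that one axis is ρ = M · ln(r), where r is the distance from the chosen center and M is the magnitude parameter (40 above), and the other axis is the angle around the center. A minimal plain-C++ sketch of that coordinate mapping for a single point (`LogPolarPoint` and `toLogPolar` are hypothetical names; the angle is returned in degrees in image coordinates, where y grows downward, rather than as OpenCV's row index):

```cpp
#include <cmath>

struct LogPolarPoint {
    double rho;     // M * ln(r): distance from the center on a log scale
    double phiDeg;  // angle around the center in degrees, in [0, 360)
};

// Hypothetical helper: maps pixel (x, y) to log-polar coordinates about the
// center (cx, cy) with magnitude M. Undefined at the center itself (r = 0).
LogPolarPoint toLogPolar(double x, double y, double cx, double cy, double M) {
    const double kPi = 3.14159265358979323846;
    const double dx = x - cx, dy = y - cy;
    const double r = std::sqrt(dx * dx + dy * dy);
    double phi = std::atan2(dy, dx) * 180.0 / kPi;
    if (phi < 0) phi += 360.0;
    return {M * std::log(r), phi};
}
```

Because of the logarithm, equal steps along ρ correspond to equal ratios of distance from the center: scaling the original image about the center becomes a shift along ρ, and rotating it about the center becomes a cyclic shift along the angle axis.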