今日头条新闻爬取+storm流处理存储(3)——storm流处理部分

项目简介

本项目整体分为三个部分来进行

- 今日头条新闻爬取

- 将爬取下来的新闻正文部分进行实体分析,并将结果可视化

- 用storm框架将爬取的新闻数据存入mysql

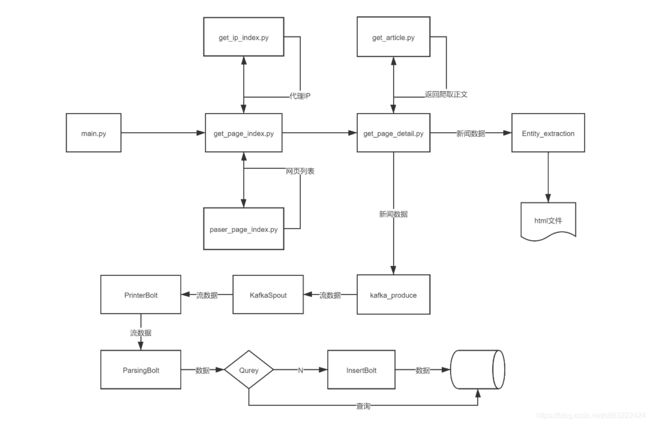

本文主要介绍今日头条新闻爬取的部分,下面给出整个项目的框架

今日头条爬取部分可以参考:爬取部分

实体分析部分可以参考:实体分析

项目下载地址:今日头条爬取+实体分析+storm流处理

流处理部分

下面主要介绍一下流处理这部分主要的工作以及主要编写的类,及各类的主要作用,具体还可以结合上面的数据处理框架来看。

数据处理流程

- 数据源将生成的数据输入到kafka

- 用KafkaSpout接收数据

- 将接收到的数据输出到控制台

- 将传过来的json格式的流数据处理成一个个独立的字段

- 将数据存入mysql数据库

主要编写的类及各类的作用

- KafkaTopology :拓扑主类

- PrinterBolt : 将传过来的数据输出到控制台

- ParsingBolt :将传过来的json格式的流数据处理成一个个独立的字段

- InsertBolt :将数据存入mysql数据库

- Qurey:mysql的查询函数,主要是在出入数据库之前先判断数据是否已经在数据库中

代码部分

pom.xml

<?xml version="1.0" encoding="UTF-8"?>

<project xmlns="http://maven.apache.org/POM/4.0.0"

xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"

xsi:schemaLocation="http://maven.apache.org/POM/4.0.0 http://maven.apache.org/xsd/maven-4.0.0.xsd">

<modelVersion>4.0.0</modelVersion>

<groupId>com.ssh</groupId>

<artifactId>storm_kafka</artifactId>

<version>1.0-SNAPSHOT</version>

<properties>

<kafka.version>0.11.0.0</kafka.version>

<storm.version>1.1.1</storm.version>

</properties>

<dependencies>

<dependency>

<groupId>org.apache.kafka</groupId>

<artifactId>kafka_2.12</artifactId>

<version>${kafka.version}</version>

<exclusions>

<exclusion>

<groupId>javax.jms</groupId>

<artifactId>jms</artifactId>

</exclusion>

<exclusion>

<groupId>com.sun.jdmk</groupId>

<artifactId>jmxtools</artifactId>

</exclusion>

<exclusion>

<groupId>com.sun.jmx</groupId>

<artifactId>jmxri</artifactId>

</exclusion>

</exclusions>

</dependency>

<dependency>

<groupId>org.apache.storm</groupId>

<artifactId>storm-kafka</artifactId>

<version>${storm.version}</version>

</dependency>

<dependency>

<groupId>org.apache.storm</groupId>

<artifactId>storm-core</artifactId>

<version>${storm.version}</version>

<exclusions>

<exclusion>

<groupId>org.slf4j</groupId>

<artifactId>log4j-over-slf4j</artifactId>

</exclusion>

</exclusions>

</dependency>

<dependency>

<groupId>commons-collections</groupId>

<artifactId>commons-collections</artifactId>

<version>3.2.1</version>

</dependency>

<dependency>

<groupId>com.google.guava</groupId>

<artifactId>guava</artifactId>

<version>15.0</version>

</dependency>

<dependency>

<groupId>mysql</groupId>

<artifactId>mysql-connector-java</artifactId>

<version>8.0.12</version>

</dependency>

<dependency>

<groupId>net.sf.json-lib</groupId>

<artifactId>json-lib</artifactId>

<version>2.4</version>

<classifier>jdk15</classifier>

</dependency>

</dependencies>

<build>

<plugins>

<plugin>

<groupId>org.apache.maven.plugins</groupId>

<artifactId>maven-compiler-plugin</artifactId>

<version>3.5.1</version>

<configuration>

<source>1.8</source>

<target>1.8</target>

</configuration>

</plugin>

<plugin>

<groupId>org.codehaus.mojo</groupId>

<artifactId>exec-maven-plugin</artifactId>

<version>1.2.1</version>

<executions>

<execution>

<goals>

<goal>exec</goal>

</goals>

</execution>

</executions>

<configuration>

<executable>java</executable>

<includeProjectDependencies>true</includeProjectDependencies>

<includePluginDependencies>false</includePluginDependencies>

<classpathScope>compile</classpathScope>

<mainClass>com.learningstorm.kafka.KafkaTopology</mainClass>

</configuration>

</plugin>

<plugin>

<artifactId>maven-assembly-plugin</artifactId>

<configuration>

<descriptorRefs>

<descriptorRef>jar-with-dependencies</descriptorRef>

</descriptorRefs>

<archive>

<manifest>

<mainClass></mainClass>

</manifest>

</archive>

</configuration>

<executions>

<execution>

<id>make-assembly</id>

<phase>package</phase>

<goals>

<goal>single</goal>

</goals>

</execution>

</executions>

</plugin>

</plugins>

</build>

</project>

KafkaTopology.java

import org.apache.storm.Config;

import org.apache.storm.LocalCluster;

import org.apache.storm.generated.AlreadyAliveException;

import org.apache.storm.generated.InvalidTopologyException;

import org.apache.storm.kafka.KafkaSpout;

import org.apache.storm.kafka.SpoutConfig;

import org.apache.storm.kafka.StringScheme;

import org.apache.storm.kafka.ZkHosts;

import org.apache.storm.spout.SchemeAsMultiScheme;

import org.apache.storm.topology.TopologyBuilder;

public class KafkaTopology {

public static void main(String[] args) throws AlreadyAliveException, InvalidTopologyException {

// zookeeper hosts for the Kafka cluster

ZkHosts zkHosts = new ZkHosts("192.168.161.100:2501");

SpoutConfig kafkaConfig = new SpoutConfig(zkHosts, "today_news", "", "id7");

kafkaConfig.scheme = new SchemeAsMultiScheme(new StringScheme());

TopologyBuilder builder = new TopologyBuilder();

builder.setSpout("KafkaSpout", new KafkaSpout(kafkaConfig), 1);

builder.setBolt("PrinterBolt", new PrinterBolt(), 1).globalGrouping("KafkaSpout");

builder.setBolt("SentenceBolt", new ParsingBolt(), 1).globalGrouping("PrinterBolt");

builder.setBolt("CountBolt", new InsertBolt(), 1).globalGrouping("SentenceBolt");

//本地模式

LocalCluster cluster = new LocalCluster();

Config conf = new Config();

// Submit topology for execution

cluster.submitTopology("KafkaToplogy", conf, builder.createTopology());

try {

System.out.println("Waiting to consume from kafka");

Thread.sleep(3000000);

} catch (Exception exception) {

System.out.println("Thread interrupted exception : " + exception);

}

// kill the KafkaTopology

cluster.killTopology("KafkaToplogy");

// shut down the storm test cluster

cluster.shutdown();

}

}

PrinterBolt.java

import org.apache.storm.task.OutputCollector;

import org.apache.storm.task.TopologyContext;

import org.apache.storm.topology.OutputFieldsDeclarer;

import org.apache.storm.topology.base.BaseRichBolt;

import org.apache.storm.tuple.Fields;

import org.apache.storm.tuple.Tuple;

import org.apache.storm.tuple.Values;

import java.util.Map;

public class PrinterBolt extends BaseRichBolt {

/**

*

*/

private static final long serialVersionUID = 9063211371729556973L;

private OutputCollector collector;

@Override

public void execute(Tuple input) {

String sentence = input.getString(0);

System.out.println("Received Data: " + sentence);

this.collector.emit(new Values(sentence));

}

@Override

public void declareOutputFields(OutputFieldsDeclarer declarer) {

declarer.declare(new Fields("data"));

}

@SuppressWarnings("rawtypes")

@Override

public void prepare(Map stormConf, TopologyContext context, OutputCollector collector) {

this.collector=collector;

}

}

ParsingBolt.java

import org.apache.storm.task.OutputCollector;

import org.apache.storm.task.TopologyContext;

import org.apache.storm.topology.OutputFieldsDeclarer;

import org.apache.storm.topology.base.BaseRichBolt;

import org.apache.storm.tuple.Fields;

import org.apache.storm.tuple.Tuple;

import java.util.Map;

import net.sf.json.JSONArray;

import net.sf.json.JSONObject;

import org.apache.storm.tuple.Values;

public class ParsingBolt extends BaseRichBolt {

/**

*

*/

private static final long serialVersionUID = 9063211371729556973L;

private OutputCollector collector;

@Override

public void execute(Tuple tuple) {

System.out.println("Start parsing");

String word = tuple.getStringByField("data");

JSONArray json = JSONArray.fromObject(word); // 首先把字符串转成 JSONArray 对象

JSONObject job = json.getJSONObject(0); // 遍历 jsonarray 数组,把每一个对象转成 json 对象

try {

System.out.print("url:" + job.get("url") + '\n');

System.out.print("type:" + job.get("type") + '\n');

System.out.print("title:" + job.get("title") + '\n');

System.out.print("time:" + job.get("time") + '\n');

System.out.print("news:" + job.get("news") + '\n');

System.out.print("comments_count:" + job.get("comments_count") + '\n');

System.out.print("source:" + job.get("source") + '\n');

System.out.print("coverImg:" + job.get("coverImg") + '\n');

System.out.print("keywords:" + job.get("keywords") + '\n');

System.out.print('\n');

}

catch (Exception e){

System.out.print("参数越界");

// 处理 Class.forName 错误

e.printStackTrace();

}

Qurey test=new Qurey();

if (test.match(job.get("title").toString())) {

this.collector.emit(new Values(job.get("url").toString(),job.get("type").toString(),

job.get("title").toString(),job.get("time").toString(),job.get("news").toString(),

job.get("comments_count").toString(),job.get("source").toString(),

job.get("coverImg").toString(),job.get("keywords").toString()));

}

}

@Override

public void declareOutputFields(OutputFieldsDeclarer declarer) {

declarer.declare(new Fields("url","type","title","time","news","comments_count","source","coverImg","keywords"));

}

@SuppressWarnings("rawtypes")

@Override

public void prepare(Map stormConf, TopologyContext context, OutputCollector collector){

this.collector=collector;

}

}

InsertBolt.java

import org.apache.storm.task.OutputCollector;

import org.apache.storm.task.TopologyContext;

import org.apache.storm.topology.OutputFieldsDeclarer;

import org.apache.storm.topology.base.BaseRichBolt;

import org.apache.storm.tuple.Tuple;

import java.sql.*;

import java.util.Map;

public class InsertBolt extends BaseRichBolt{

public void prepare(Map map, TopologyContext topologyContext, OutputCollector outputCollector) {

}

// MySQL 8.0 以上版本 - JDBC 驱动名及数据库 URL

static final String JDBC_DRIVER = "com.mysql.cj.jdbc.Driver";

static final String DB_URL = "jdbc:mysql://localhost:3306/News?useSSL=false&serverTimezone=UTC";

// 数据库的用户名与密码,需要根据自己的设置

static final String USER = "root";

static final String PASS = "root";

public void execute(Tuple tuple) {

Connection conn = null;

Statement stmt = null;

try{

// 注册 JDBC 驱动

Class.forName(JDBC_DRIVER);

// 打开链接

System.out.println("连接数据库...");

conn = DriverManager.getConnection(DB_URL,USER,PASS);

String sql = "insert into article_more values(?,?,?,?,?,?,?,?,?,?)";//数据库操作语句(插入)

PreparedStatement pst = conn.prepareStatement(sql);

pst.setString(1, tuple.getStringByField("url"));

pst.setString(2, tuple.getStringByField("type"));

pst.setString(3, tuple.getStringByField("title"));

pst.setString(4, tuple.getStringByField("time"));

pst.setString(5, tuple.getStringByField("news"));

pst.setString(6, tuple.getStringByField("comments_count"));

pst.setString(7, tuple.getStringByField("source"));

pst.setString(8, tuple.getStringByField("coverImg"));

pst.setString(9, tuple.getStringByField("keywords"));

pst.setInt(10, 0);

pst.executeUpdate();

conn.close();

System.out.println("成功插入新闻");

}catch(SQLException se){

// 处理 JDBC 错误

se.printStackTrace();

}catch(Exception e){

// 处理 Class.forName 错误

e.printStackTrace();

}finally{

// 关闭资源

try{

if(stmt!=null) stmt.close();

}catch(SQLException se2){

}// 什么都不做

try{

if(conn!=null) conn.close();

}catch(SQLException se){

se.printStackTrace();

}

}

}

public void declareOutputFields(OutputFieldsDeclarer outputFieldsDeclarer) {

}

}

Qurey.java

import java.sql.*;

public class Qurey {

// MySQL 8.0 以下版本 - JDBC 驱动名及数据库 URL

// static final String JDBC_DRIVER = "com.mysql.jdbc.Driver";

// static final String DB_URL = "jdbc:mysql://localhost:3306/News";

// MySQL 8.0 以上版本 - JDBC 驱动名及数据库 URL

static final String JDBC_DRIVER = "com.mysql.cj.jdbc.Driver";

static final String DB_URL = "jdbc:mysql://localhost:3306/News?useSSL=false&serverTimezone=UTC";

// 数据库的用户名与密码,需要根据自己的设置

static final String USER = "root";

static final String PASS = "root";

public boolean match(String title) {

Connection conn = null;

Statement stmt = null;

try{

// 注册 JDBC 驱动

Class.forName(JDBC_DRIVER);

// 打开链接

conn = DriverManager.getConnection(DB_URL,USER,PASS);

// 执行查询

stmt = conn.createStatement();

String sql;

sql = "SELECT id FROM article_more where title='"+title+"'"+";";

ResultSet rs = stmt.executeQuery(sql);

if(rs.next()){

return false;

}

else {

return true;

}

// 完成后关闭

}catch(SQLException se){

// 处理 JDBC 错误

se.printStackTrace();

}catch(Exception e){

// 处理 Class.forName 错误

e.printStackTrace();

}finally{

// 关闭资源

try{

if(stmt!=null) stmt.close();

}catch(SQLException se2){

}// 什么都不做

try{

if(conn!=null) conn.close();

}catch(SQLException se){

se.printStackTrace();

}

}

return false;

}

public static void main(String[] args) {

Qurey a=new Qurey();

boolean result=a.match("b");

System.out.println(result);

}

}

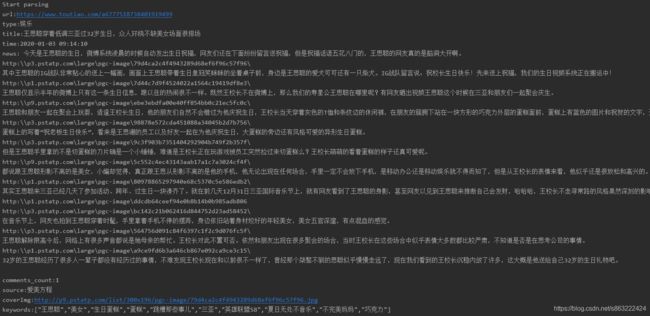

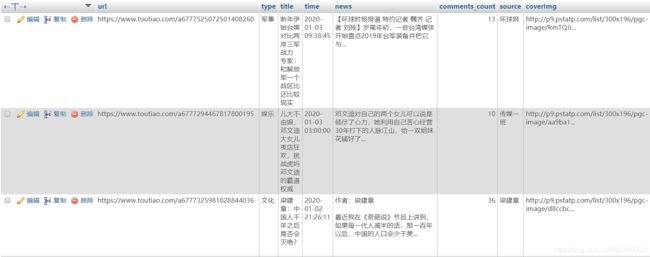

简单演示

总结

到此,整个项目算是完成了,整个项目实现的功能其实还是比较浅的,主要是将网络爬虫和kafka消息队列以及storm结合了起来,尽管使用了流处理,但是这里没有涉及到复杂的计算,性能其实没有发挥出来,这里对新闻数据其实可以做更多有意义的数据挖掘,这里也是留待以后来进行吧。