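1. Architectural comparison of GPU and CPU

2. Can a GPU accelerate my application?

3. Computational efficiency of GPU vs. CPU

4. General workflow for GPU computing in MATLAB

5. Hardware and software configuration for GPU computing

5.1 Hardware and drivers

5.2 Software

6. Example MATLAB code: GPU vs. CPU performance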

1. Architectural comparison of GPU and CPU

Original text:

Multicore machines and hyper-threading technology have enabled scientists, engineers, and financial analysts to speed up computationally intensive applications in a variety of disciplines. Today, another type of hardware promises even higher computational performance: the graphics processing unit (GPU).

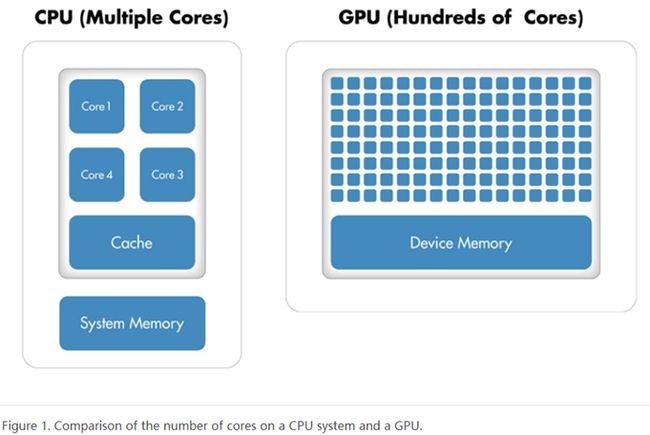

Originally used to accelerate graphics rendering, GPUs are increasingly applied to scientific calculations. Unlike a traditional CPU, which includes no more than a handful of cores, a GPU has a massively parallel array of integer and floating-point processors, as well as dedicated, high-speed memory. A typical GPU comprises hundreds of these smaller processors (Figure 1).

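Note 1: As the figure above shows, a CPU has far fewer cores than a GPU. Each CPU core is very powerful, but a typical GPU contains hundreds of small processors that work in parallel. That makes the GPU highly efficient when it processes large amounts of data: many hands make light work.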
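To compare the core counts on your own machine, you could use a short sketch like the one below. It assumes the Parallel Computing Toolbox and a supported NVIDIA GPU are available. `gpuDevice` reports the number of streaming multiprocessors, not individual CUDA cores. Each multiprocessor contains many cores, and the exact number depends on the GPU architecture.

```matlab
% Compare the CPU threads MATLAB uses with the GPU's multiprocessors.
% Assumes Parallel Computing Toolbox and a CUDA-capable NVIDIA GPU.
nCpuThreads = maxNumCompThreads;   % current number of computational threads on the CPU
g = gpuDevice;                     % handle to the currently selected GPU
fprintf('CPU: %d computational threads\n', nCpuThreads);
fprintf('GPU: %s, %d multiprocessors, compute capability %s\n', ...
        g.Name, g.MultiprocessorCount, g.ComputeCapability);
fprintf('GPU memory: %.1f GB\n', g.TotalMemory / 2^30);   % TotalMemory is in bytes
```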

2. Can a GPU accelerate my application?

Original text:

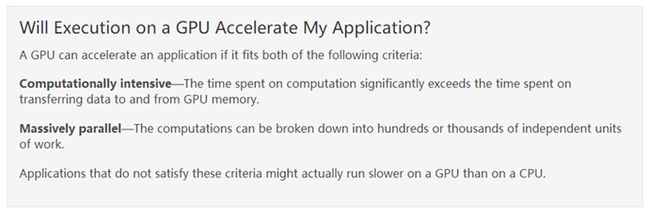

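Note 2: Of these two conditions, I consider the second to be the basic prerequisite for GPU computing. A task can take full advantage of the GPU's hardware only if it can be split into many small, independent pieces, and that is where the speedup comes from. The first condition reflects the fact that GPU computing requires copying data into GPU memory, which takes time. If the transfer takes a large share of the total time compared with the computation itself, GPU computing is not recommended. Below is a screenshot I took that shows a concrete GPU computing workflow.

To estimate the order of magnitude of the transfer time, transfer a matrix B to the GPU with the gpuArray function and back with the gather function, doing nothing else between the two transfers. Repeat this a few thousand times and take the average time. Then change the size of B and repeat. Finally, plot the size of B on the horizontal axis against the average transfer time on the vertical axis.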
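The measurement procedure described above can be sketched as follows. The list of sizes and the repetition count `nReps` are illustrative assumptions, not values from the original. With large matrices and thousands of repetitions the script can take a while, so you may want to lower `nReps` first. `gather` returns an ordinary CPU array, so it blocks until the data has arrived, and `tic`/`toc` therefore captures the full round trip.

```matlab
% Average CPU -> GPU -> CPU round-trip time vs. matrix size.
% Assumes Parallel Computing Toolbox; sizes and nReps are hypothetical choices.
sizes = [100 200 500 1000 2000];   % side length n of the n-by-n matrix B
nReps = 1000;                      % "a few thousand" repetitions; reduce if too slow
avgTime = zeros(size(sizes));
for k = 1:numel(sizes)
    B = rand(sizes(k));            % n-by-n double matrix in CPU memory
    t = zeros(1, nReps);
    for r = 1:nReps
        tic;
        Bg = gpuArray(B);          % copy CPU -> GPU memory
        Bc = gather(Bg);           % copy GPU -> CPU; blocks until the data is back
        t(r) = toc;
    end
    avgTime(k) = mean(t);
end
numBytes = 8 * sizes.^2;           % each double occupies 8 bytes
loglog(numBytes, avgTime, 'o-');
xlabel('Size of B (bytes)');
ylabel('Average round-trip transfer time (s)');
grid on;
```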

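Because I rarely use GPU computing these days and no longer have the software installed, I can't easily provide results here. Interested readers are encouraged to try it themselves.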

3. Computational efficiency of GPU vs. CPU

Original text:

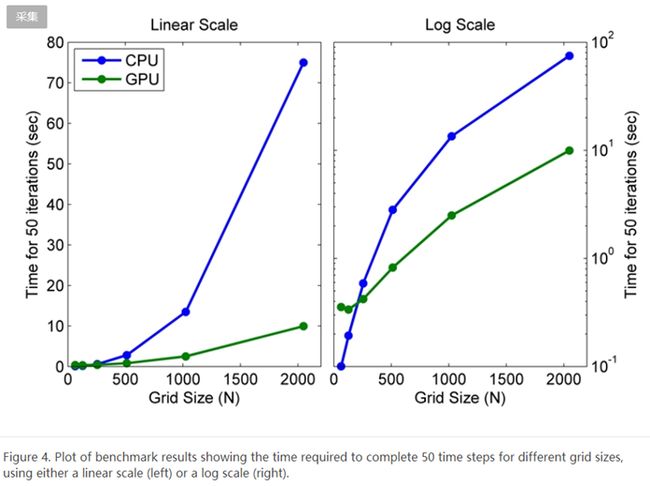

To evaluate the benefits of using the GPU to solve second-order wave equations, we ran a benchmark study in which we measured the amount of time the algorithm took to execute 50 time steps for grid sizes of 64, 128, 512, 1024, and 2048 on an Intel® Xeon® Processor X5650 and then using an NVIDIA® Tesla™ C2050 GPU.

For a grid size of 2048, the algorithm shows a 7.5x decrease in compute time from more than a minute on the CPU to less than 10 seconds on the GPU (Figure 4). The log scale plot shows that the CPU is actually faster for small grid sizes. As the technology evolves and matures, however, GPU solutions are increasingly able to handle smaller problems, a trend that we expect to continue.

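Note 3: This figure is very intuitive. Seeing it was what first made me realize how powerful GPU computing can be. It shows that the larger the problem, the bigger the GPU's advantage over the CPU, whereas for small problems the CPU is actually faster. I think there are two likely reasons (for reference only). First, a small amount of data may not keep the GPU's many cores busy, and each individual GPU core is relatively slow, so the overall computation is slower. Second, as discussed in section 2, data transfer then takes up a relatively large share of the total time.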
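To look for the crossover point on your own hardware, you could time the same computation at several sizes with `timeit` (CPU) and `gputimeit` (GPU). As far as I know, both are available from R2013b onward. This is a sketch with two assumptions: the workload is the element-wise expression from the example code in section 6 rather than the article's wave-equation solver, and the sizes are arbitrary. `gputimeit` waits for the GPU to finish, so it measures execution time rather than only the time needed to queue the work. The inputs are moved to the GPU before timing, so transfer cost is excluded here.

```matlab
% CPU vs. GPU execution time for increasing matrix sizes.
% Assumes Parallel Computing Toolbox; sizes and workload are illustrative.
sizes = [64 128 256 512 1024 2048];
tCpu = zeros(size(sizes));
tGpu = zeros(size(sizes));
f = @(X, Y) Y ./ (X .* (X + Y) + eps);   % same as F = B./(A.*(A+B)+eps) with X = A, Y = B
for k = 1:numel(sizes)
    A = rand(sizes(k));  B = rand(sizes(k));
    tCpu(k) = timeit(@() f(A, B));       % CPU timing
    Ag = gpuArray(A);    Bg = gpuArray(B);   % transfer happens outside the timed region
    tGpu(k) = gputimeit(@() f(Ag, Bg));  % GPU timing, synchronized
end
loglog(sizes, tCpu, 'o-', sizes, tGpu, 's-');
legend('CPU', 'GPU', 'Location', 'northwest');
xlabel('Matrix side length n (n-by-n)');
ylabel('Execution time (s)');
grid on;
speedup = tCpu ./ tGpu   % values > 1 mean the GPU was faster at that size
```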

4. General workflow for GPU computing in MATLAB

Note 4: The marked area in the figure above corresponds to the data transfer discussed in section 2.
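In code, the same workflow looks roughly like the minimal sketch below, which assumes the Parallel Computing Toolbox. You create the data on the CPU, transfer it with `gpuArray`, compute, and bring the result back with `gather`. The matrix size and the expression are arbitrary examples. Steps 2 and 4 are the data transfers.

```matlab
% General gpuArray workflow: CPU data -> GPU -> compute -> CPU result.
% Assumes Parallel Computing Toolbox; size and expression are arbitrary.
A = rand(1000);            % 1) data starts in CPU memory
Ag = gpuArray(A);          % 2) transfer to GPU memory
Bg = Ag .* Ag + 2 * Ag;    % 3) operations on gpuArray inputs run on the GPU
Cg = sum(Bg(:));           %    supported built-ins (here sum) also run on the GPU
C = gather(Cg);            % 4) transfer the result back to CPU memory
class(Cg)                  % 'gpuArray'
class(C)                   % 'double'
```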

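5. Hardware and software configuration for GPU computing

5.1 Hardware and drivers

Computer: Lenovo Yangtian M4400

OS: Windows 7 x64

GPU: NVIDIA GeForce GT 740M, dedicated graphics with 2 GB of memory

Driver: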
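You can also read the driver information from within MATLAB, as in the sketch below, which assumes the Parallel Computing Toolbox is installed. In `gpuDevice`, `DriverVersion` is the CUDA driver version in use, and `ToolkitVersion` is the CUDA version the current MATLAB release was built with. That value does not necessarily match a separately installed CUDA Toolkit.

```matlab
% Print GPU driver information as seen by MATLAB.
% Assumes Parallel Computing Toolbox.
n = gpuDeviceCount;                      % number of CUDA devices MATLAB can see
fprintf('GPUs detected: %d\n', n);
if n > 0
    g = gpuDevice;                       % currently selected device
    fprintf('Name:            %s\n', g.Name);
    fprintf('Driver version:  %g\n', g.DriverVersion);
    fprintf('Toolkit version: %g\n', g.ToolkitVersion);
    fprintf('Device supported by this MATLAB release: %d\n', g.DeviceSupported);
end
```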

5.2 Software

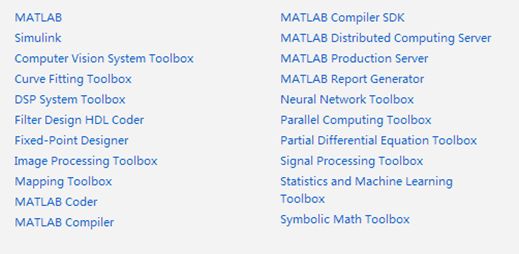

MATLAB R2015a, with the Parallel Computing Toolbox installed (required)

Visual Studio 2013, with only the C++ foundation classes installed

CUDA 7.5.18, with only the Toolkit installed
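A quick smoke test for this setup might look like the sketch below. It checks for a Parallel Computing Toolbox license with `license('test', 'Distrib_Computing_Toolbox')`. It then runs a tiny computation on the GPU and catches the error MATLAB raises when no usable GPU is found. As far as I know, plain `gpuArray` computations need only the NVIDIA driver. Visual Studio and the CUDA Toolkit matter when you compile your own CUDA kernels.

```matlab
% Smoke test: is the toolbox licensed and can MATLAB compute on the GPU?
hasLicense = license('test', 'Distrib_Computing_Toolbox');   % 1 if licensed, else 0
try
    g = gpuDevice;                        % errors if no usable GPU is available
    x = gpuArray(1:10);
    y = gather(x .* x);                   % compute on the GPU, copy the result back
    gpuOk = isequal(y, (1:10) .* (1:10)); % compare with the CPU result
catch err
    gpuOk = false;
    fprintf('GPU check failed: %s\n', err.message);
end
fprintf('Toolbox licensed: %d, GPU computation works: %d\n', hasLicense, gpuOk);
```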

6. Example MATLAB code: GPU vs. CPU performance

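%% First, compare element-wise add/multiply/divide on 200x200 matrices: CPU version
t = zeros(1,100);                  % per-iteration times
A = rand(200,200); B = rand(200,200);
for i = 1:100
    tic;
    D = A + B; E = A .* D; F = B ./ (E + eps);
    t(i) = toc;
end
mean(t)
% Result of the original run: ans = 2.4812e-04

%% Same computation on the GPU (requires Parallel Computing Toolbox)
t1 = zeros(1,100);                 % toc returns an ordinary double, so no gpuArray is needed here
A1 = gpuArray(rand(200,200));
B1 = gpuArray(rand(200,200));
g = gpuDevice;                     % handle to the current GPU, used for synchronization
for i = 1:100
    tic;
    D1 = A1 + B1; E1 = A1 .* D1; F1 = B1 ./ (E1 + eps);
    wait(g);                       % gpuArray operations run asynchronously; wait for them before toc
    t1(i) = toc;
end
mean(t1)
% Result of the original run (measured without wait(g)): ans = 1.2260e-04,
% i.e. nearly twice as fast as the CPU; with wait(g) the measured GPU time may be higher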

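%% Then increase the matrix size to 2000x2000: CPU version
t = zeros(1,100);
A = rand(2000,2000); B = rand(2000,2000);
for i = 1:100
    tic;
    D = A + B; E = A .* D; F = B ./ (E + eps);
    t(i) = toc;
end
mean(t)
% Result of the original run: ans = 0.0337

%% GPU version
t1 = zeros(1,100);
A1 = gpuArray(rand(2000,2000));
B1 = gpuArray(rand(2000,2000));
g = gpuDevice;
for i = 1:100
    tic;
    D1 = A1 + B1; E1 = A1 .* D1; F1 = B1 ./ (E1 + eps);
    wait(g);                       % wait for the GPU to finish before stopping the timer
    t1(i) = toc;
end
mean(t1)
% Result of the original run (measured without wait(g)): ans = 1.1730e-04
% That run gave mean(t)/mean(t1) = 287.1832, i.e. "287x faster". However, the GPU time
% barely changed although the matrices are 100x larger, which suggests the unsynchronized
% loop mostly measured the time to queue the operations; rerun with wait(g) for a realistic ratio.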
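Section 2 pointed out that transfers cost time. The sketch below shows an end-to-end comparison that also counts moving `A` and `B` to the GPU and moving the result back. It uses the same expression as `D`, `E`, `F` above. Using `timeit`/`gputimeit` instead of `tic`/`toc` loops is my own choice, not the original author's. `gputimeit` synchronizes with the GPU before it stops timing.

```matlab
% End-to-end CPU vs. GPU comparison at 2000x2000, with and without transfers.
% Assumes Parallel Computing Toolbox.
n = 2000;
A = rand(n); B = rand(n);
f = @(X, Y) Y ./ (X .* (X + Y) + eps);   % F = B./(A.*(A+B)+eps), as in the loops above

tCpu = timeit(@() f(A, B));                                      % CPU computation

Ag = gpuArray(A); Bg = gpuArray(B);
tGpuCompute = gputimeit(@() f(Ag, Bg));                          % GPU computation only
tGpuTotal = gputimeit(@() gather(f(gpuArray(A), gpuArray(B))));  % upload + compute + download

fprintf('CPU:                      %.4f s\n', tCpu);
fprintf('GPU, compute only:        %.4f s\n', tGpuCompute);
fprintf('GPU, incl. transfers:     %.4f s\n', tGpuTotal);
fprintf('Speedup, compute only:    %.1fx\n', tCpu / tGpuCompute);
fprintf('Speedup, incl. transfers: %.1fx\n', tCpu / tGpuTotal);
```

If the speedup including transfers is much smaller than the compute-only speedup, the first condition from section 2 (transfer time small compared with computation) is not well met for this workload.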

Reference: https://ww2.mathworks.cn/company/newsletters/articles/gpu-programming-in-matlab.html