Unity URP Shader Programming 104: Implementing a Lens Flare

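SRP pipelines do not support Unity's original LensFlare setup, so to use a lens flare under URP or HDRP you have to implement the feature yourself.

This section walks through the LensFlare feature used in FontainebleauDemo, an official HDRP demo project. The module runs under URP with only minor changes, but targeting mobile devices requires further modifications, and there is also room for performance optimization.

This is not the only way to implement a lens flare. The same effect can be produced in a screen-space post-processing pass, but post-processing is too expensive for mobile devices and is not recommended there.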

设计思路

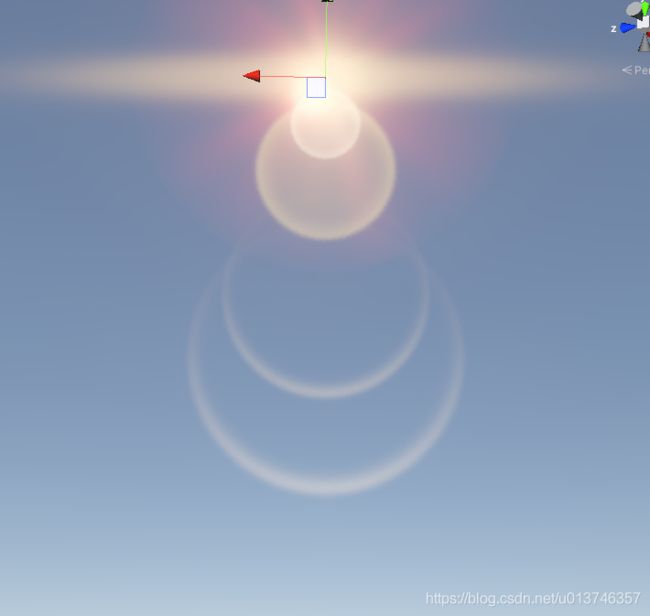

镜头光晕效果本质上是由多个面片,按照设定的顺序,大小叠加出来的效果。

如下图所示

有了这个思路,我们的LensFlare就需要如下几个功能:

- 一个CSharp脚本用来根据需要(LensFlare的设置)生成指定数量的面片(Quad),并且每个面片要有所需的大小,角度等。

- 用来渲染面片的着色器,使用类似粒子特效的透明材质,可选Alpha Blend的模式(Additive,Premultiply,DefaultTransparent)。

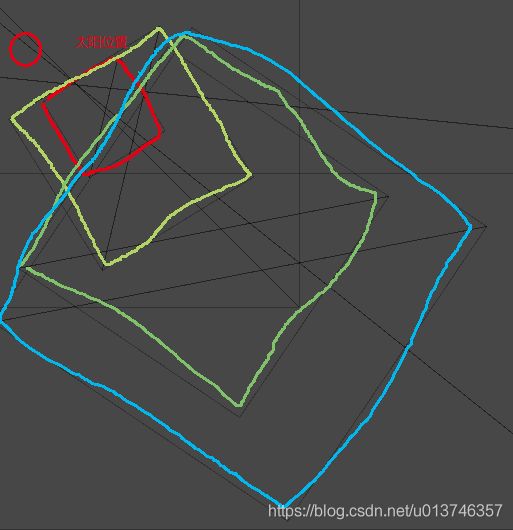

- 要实现遮罩,避免太阳被遮挡时,依然能看到镜头光晕。

对于这三个功能,我们出于性能考虑做一点点变动。

- 因为镜头光晕会根据摄像机的朝向进行移动,面片的大小和旋转以及位置都通过GPU在顶点着色器中进行处理,避免在CPU中频繁创建生成mesh。

- 遮罩的实现,使用在顶点着色器中使用均匀的采样点对深度图进行多次采样,来得到遮罩比例。
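The occlusion idea can be sketched on the CPU in plain C#. This is only an illustration of the technique, not the shader code: `sampleDepth` is a hypothetical callback standing in for the depth-texture lookup, the sample offsets are supplied by the caller, and the sketch works directly in [0,1] UV space rather than in normalized device coordinates.

```csharp
using System;
using System.Collections.Generic;

public static class OcclusionSketch
{
    // Returns the visible fraction (0..1) of a disc around (centerU, centerV).
    // sampleDepth is a hypothetical stand-in for the depth-texture lookup:
    // it takes a UV in [0,1]^2 and returns the linear scene depth at that point.
    public static float VisibleFraction(
        float centerU, float centerV, float radius, float lightDepth,
        IReadOnlyList<(float x, float y)> offsets,
        Func<float, float, float> sampleDepth)
    {
        if (offsets.Count == 0) return 0f;

        int visible = 0;
        foreach (var (ox, oy) in offsets)
        {
            float u = centerU + ox * radius;
            float v = centerV + oy * radius;

            // Samples that fall outside the screen contribute nothing.
            if (u < 0f || u > 1f || v < 0f || v > 1f) continue;

            // The light is visible at this sample if nothing lies in front of it.
            if (sampleDepth(u, v) >= lightDepth) visible++;
        }
        return (float)visible / offsets.Count;
    }
}
```

Multiplying the flare color by this fraction makes the flare fade out gradually as the light source moves behind geometry, instead of switching off abruptly.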

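Optimization

The original implementation can be optimized, or adapted for mobile, in the following ways:

- The current implementation draws each quad as a separate submesh. Instead, all flare textures can be packed into a single atlas addressed through per-quad UVs and drawn as one mesh, which reduces the flare to a single draw call. The original lens flare in the built-in pipeline is implemented this way.

- Some low-end mobile devices do not support sampling the depth texture in the vertex shader. The sampling can be moved to the fragment shader, although this adds cost.

- The current implementation passes several configuration parameters to the shader through the mesh's extra UV channels (uv1, uv2, uv3) and stores them as float4. float4 UVs may not be supported on mobile, so they need to be changed to float2, and that can leave too few channels, since this setup relies on at most four UV sets (uv0, uv1, uv2, uv3). Some of the parameters passed through UVs therefore have to be eliminated:

- Some parameters, such as the flare's center in world space, can simply be dropped. The shader can recover it by transforming the object-space origin (0,0,0) into world space.

- Other parameters are shared by every quad, for example Near Start Distance / Near End Distance / Far Start Distance / Far End Distance. These four can be hard-coded in the shader or placed in a constant buffer so that they do not occupy an extra UV channel.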
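The atlas idea from the first item can be sketched as follows. This is a minimal illustration under assumptions that are not in the original project: the flare textures are packed into a uniform `columns` x `rows` grid, cells are numbered row by row, and `FlareAtlasSketch` and `CellUVs` are hypothetical names. The corners are returned in the same order as the uv0 values the script assigns to each quad.

```csharp
using System;

public static class FlareAtlasSketch
{
    // Returns the four UVs for atlas cell `index` in a uniform columns x rows grid.
    // Corner order matches the script's uv0 layout:
    // (uMin, vMax), (uMax, vMax), (uMax, vMin), (uMin, vMin).
    public static (float u, float v)[] CellUVs(int index, int columns, int rows)
    {
        if (columns <= 0 || rows <= 0)
            throw new ArgumentOutOfRangeException(nameof(columns), "Grid must be at least 1x1.");
        if (index < 0 || index >= columns * rows)
            throw new ArgumentOutOfRangeException(nameof(index));

        int col = index % columns;
        int row = index / columns;

        float uMin = (float)col / columns;
        float uMax = (float)(col + 1) / columns;
        float vMin = (float)row / rows;
        float vMax = (float)(row + 1) / rows;

        return new[]
        {
            (uMin, vMax),
            (uMax, vMax),
            (uMax, vMin),
            (uMin, vMin),
        };
    }
}
```

With per-quad UVs like these, every quad can share one material and one submesh, which is what makes the single draw call possible.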

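These optimizations are not included in the code below. If you use this feature in a real mobile project, you will need to apply them yourself.

The complete code follows.

The C# script: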

```csharp
using System.Collections;
using System.Collections.Generic;
using UnityEngine;

[ExecuteInEditMode]
[RequireComponent(typeof(MeshRenderer))]
[RequireComponent(typeof(MeshFilter))]
public class LensFlare : MonoBehaviour
{
    [SerializeField, HideInInspector]
    MeshRenderer m_MeshRenderer;

    [SerializeField, HideInInspector]
    MeshFilter m_MeshFilter;

    [SerializeField]
    Light m_Light;

    [Header("Global Settings")]
    public float OcclusionRadius = 1.0f;
    public float NearFadeStartDistance = 1.0f;
    public float NearFadeEndDistance = 3.0f;
    public float FarFadeStartDistance = 10.0f;
    public float FarFadeEndDistance = 50.0f;

    [Header("Flare Element Settings")]
    [SerializeField]
    public List<FlareSettings> Flares;

    void Awake()
    {
        if (m_MeshFilter == null)
            m_MeshFilter = GetComponent<MeshFilter>();
        if (m_MeshRenderer == null)
            m_MeshRenderer = GetComponent<MeshRenderer>();

        m_Light = GetComponent<Light>();

        m_MeshFilter.hideFlags = HideFlags.None;
        m_MeshRenderer.hideFlags = HideFlags.None;

        if (Flares == null)
            Flares = new List<FlareSettings>();

        m_MeshFilter.mesh = InitMesh();
    }

    void OnEnable()
    {
        UpdateGeometry();
    }

    // Use this for initialization
    void Start()
    {
        m_Light = GetComponent<Light>();
    }

    void OnValidate()
    {
        UpdateGeometry();
        UpdateMaterials();
    }

    // Update is called once per frame
    void Update()
    {
        // Lazy: re-upload the per-vertex parameters every frame.
        UpdateVaryingAttributes();
    }

    Mesh InitMesh()
    {
        Mesh m = new Mesh();
        m.MarkDynamic();
        return m;
    }

    // One material per flare element, matching one submesh per element.
    void UpdateMaterials()
    {
        // OnValidate can run before Awake has cached the components.
        if (m_MeshRenderer == null || Flares == null)
            return;

        Material[] mats = new Material[Flares.Count];
        int i = 0;
        foreach (FlareSettings f in Flares)
        {
            mats[i] = f.Material;
            i++;
        }
        m_MeshRenderer.sharedMaterials = mats;
    }

    // Rebuilds the quads: four vertices and one submesh per flare element.
    void UpdateGeometry()
    {
        // OnValidate can run before Awake has cached the components or created the mesh.
        if (m_MeshFilter == null || Flares == null)
            return;
        Mesh m = m_MeshFilter.sharedMesh;
        if (m == null)
            return;

        // Clear first so stale triangles never reference vertices
        // that no longer exist when the number of flares shrinks.
        m.Clear();

        // Positions: a unit quad per element; the vertex shader scales and places it.
        List<Vector3> vertices = new List<Vector3>();
        foreach (FlareSettings s in Flares)
        {
            vertices.Add(new Vector3(-1, -1, 0));
            vertices.Add(new Vector3(1, -1, 0));
            vertices.Add(new Vector3(1, 1, 0));
            vertices.Add(new Vector3(-1, 1, 0));
        }
        m.SetVertices(vertices);

        // UVs
        List<Vector2> uvs = new List<Vector2>();
        foreach (FlareSettings s in Flares)
        {
            uvs.Add(new Vector2(0, 1));
            uvs.Add(new Vector2(1, 1));
            uvs.Add(new Vector2(1, 0));
            uvs.Add(new Vector2(0, 0));
        }
        m.SetUVs(0, uvs);

        // Variable data
        m.SetColors(GetLensFlareColor());
        m.SetUVs(1, GetLensFlareData());
        m.SetUVs(2, GetWorldPositionAndRadius());
        m.SetUVs(3, GetDistanceFadeData());

        m.subMeshCount = Flares.Count;

        // Triangles: two per quad, one submesh per element.
        for (int i = 0; i < Flares.Count; i++)
        {
            int[] tris = new int[6];
            tris[0] = (i * 4) + 0;
            tris[1] = (i * 4) + 1;
            tris[2] = (i * 4) + 2;
            tris[3] = (i * 4) + 2;
            tris[4] = (i * 4) + 3;
            tris[5] = (i * 4) + 0;
            m.SetTriangles(tris, i);
        }

        // Use the occlusion radius as bounds so the renderer is not culled too early.
        Bounds b = m.bounds;
        b.extents = new Vector3(OcclusionRadius, OcclusionRadius, OcclusionRadius);
        m.bounds = b;
        m.UploadMeshData(false);
    }

    // Refreshes only the per-vertex parameters; the topology is left untouched.
    void UpdateVaryingAttributes()
    {
        if (m_MeshFilter == null || Flares == null)
            return;
        Mesh m = m_MeshFilter.sharedMesh;
        if (m == null)
            return;

        m.SetColors(GetLensFlareColor());
        m.SetUVs(1, GetLensFlareData());
        m.SetUVs(2, GetWorldPositionAndRadius());
        m.SetUVs(3, GetDistanceFadeData());

        Bounds b = m.bounds;
        b.extents = new Vector3(OcclusionRadius, OcclusionRadius, OcclusionRadius);
        m.bounds = b;
        m.name = "LensFlare (" + gameObject.name + ")";
    }

    List<Color> GetLensFlareColor()
    {
        List<Color> colors = new List<Color>();
        foreach (FlareSettings s in Flares)
        {
            Color c = (s.MultiplyByLightColor && m_Light != null) ? s.Color * m_Light.color * m_Light.intensity : s.Color;
            colors.Add(c);
            colors.Add(c);
            colors.Add(c);
            colors.Add(c);
        }
        return colors;
    }

    // uv1: X = ray position, Y = rotation (negative means auto), ZW = size.
    List<Vector4> GetLensFlareData()
    {
        List<Vector4> lfData = new List<Vector4>();
        foreach (FlareSettings s in Flares)
        {
            Vector4 data = new Vector4(s.RayPosition, s.AutoRotate ? -1 : Mathf.Abs(s.Rotation), s.Size.x, s.Size.y);
            lfData.Add(data); lfData.Add(data); lfData.Add(data); lfData.Add(data);
        }
        return lfData;
    }

    // uv3: near/far fade distances, identical for every vertex.
    List<Vector4> GetDistanceFadeData()
    {
        List<Vector4> fadeData = new List<Vector4>();
        foreach (FlareSettings s in Flares)
        {
            Vector4 data = new Vector4(NearFadeStartDistance, NearFadeEndDistance, FarFadeStartDistance, FarFadeEndDistance);
            fadeData.Add(data); fadeData.Add(data); fadeData.Add(data); fadeData.Add(data);
        }
        return fadeData;
    }

    // uv2: world-space center (XYZ) and occlusion radius (W).
    List<Vector4> GetWorldPositionAndRadius()
    {
        List<Vector4> worldPos = new List<Vector4>();
        Vector3 pos = transform.position;
        Vector4 value = new Vector4(pos.x, pos.y, pos.z, OcclusionRadius);
        foreach (FlareSettings s in Flares)
        {
            worldPos.Add(value); worldPos.Add(value); worldPos.Add(value); worldPos.Add(value);
        }
        return worldPos;
    }

    void OnDrawGizmosSelected()
    {
        Gizmos.color = new Color(1, 0, 0, 0.3f);
        Gizmos.DrawSphere(transform.position, OcclusionRadius);
        Gizmos.color = Color.red;
        Gizmos.DrawWireSphere(transform.position, OcclusionRadius);
    }

    [System.Serializable]
    public class FlareSettings
    {
        public float RayPosition;
        public Material Material;
        [ColorUsage(true, true)]
        public Color Color;
        public bool MultiplyByLightColor;
        public Vector2 Size;
        public float Rotation;
        public bool AutoRotate;

        public FlareSettings()
        {
            RayPosition = 0.0f;
            Color = Color.white;
            MultiplyByLightColor = true;
            Size = new Vector2(0.3f, 0.3f);
            Rotation = 0.0f;
            AutoRotate = false;
        }
    }
}
```

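The shader code consists of two parts:

- LensFlareCommon.hlsl, which contains the shared functionality used here

- LensFlareAdditive.shader / LensFlareLerp.shader / LensFlarePremultiplied.shader, which each apply a different blend mode and are otherwise identical

LensFlareCommon.hlsl: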

```hlsl
struct appdata
{
    float4 vertex : POSITION;
    float2 uv : TEXCOORD0;
    float4 color : COLOR;

    // LensFlare Data :
    // * X = RayPos
    // * Y = Rotation (< 0 = Auto)
    // * ZW = Size (Width, Height) in Screen Height Ratio
    nointerpolation float4 lensflare_data : TEXCOORD1;

    // World Position (XYZ) and Radius(W) :
    nointerpolation float4 worldPosRadius : TEXCOORD2;

    // LensFlare FadeData :
    // * X = Near Start Distance
    // * Y = Near End Distance
    // * Z = Far Start Distance
    // * W = Far End Distance
    nointerpolation float4 lensflare_fadeData : TEXCOORD3;
};

struct v2f
{
    float2 uv : TEXCOORD0;
    float4 vertex : SV_POSITION;
    float4 color : COLOR;
};

TEXTURE2D(_MainTex);
SAMPLER(sampler_MainTex);
TEXTURE2D(_CameraDepthTexture);
SAMPLER(sampler_CameraDepthTexture);
float _OccludedSizeScale;

// thanks, internets
static const uint DEPTH_SAMPLE_COUNT = 32;
static const float2 samples[DEPTH_SAMPLE_COUNT] = {
    float2(0.658752441406,-0.0977704077959),
    float2(0.505380451679,-0.862896621227),
    float2(-0.678673446178,0.120453640819),
    float2(-0.429447203875,-0.501827657223),
    float2(-0.239791020751,0.577527523041),
    float2(-0.666824519634,-0.745214760303),
    float2(0.147858589888,-0.304675519466),
    float2(0.0334240831435,0.263438135386),
    float2(-0.164710089564,-0.17076793313),
    float2(0.289210408926,0.0226817727089),
    float2(0.109557107091,-0.993980526924),
    float2(-0.999996423721,-0.00266989553347),
    float2(0.804284930229,0.594243884087),
    float2(0.240315377712,-0.653567194939),
    float2(-0.313934922218,0.94944447279),
    float2(0.386928111315,0.480902403593),
    float2(0.979771316051,-0.200120285153),
    float2(0.505873680115,-0.407543361187),
    float2(0.617167234421,0.247610524297),
    float2(-0.672138273716,0.740425646305),
    float2(-0.305256098509,-0.952270269394),
    float2(0.493631094694,0.869671344757),
    float2(0.0982239097357,0.995164275169),
    float2(0.976404249668,0.21595069766),
    float2(-0.308868765831,0.150203511119),
    float2(-0.586166858673,-0.19671548903),
    float2(-0.912466347218,-0.409151613712),
    float2(0.0959918648005,0.666364192963),
    float2(0.813257217407,-0.581904232502),
    float2(-0.914829492569,0.403840065002),
    float2(-0.542099535465,0.432246923447),
    float2(-0.106764614582,-0.618209302425)
};

// Returns the fraction (0..1) of depth samples around screenPos that are not in front of `depth`.
float GetOcclusion(float2 screenPos, float depth, float radius, float ratio)
{
    float contrib = 0.0f;
    float sample_Contrib = 1.0 / DEPTH_SAMPLE_COUNT;
    float2 ratioScale = float2(1 / ratio, 1.0);

    for (uint i = 0; i < DEPTH_SAMPLE_COUNT; i++)
    {
        float2 pos = screenPos + (samples[i] * radius * ratioScale);
        pos = pos * 0.5 + 0.5; // [-1,1] -> [0,1]
        pos.y = 1 - pos.y;

        // Samples that fall outside the screen contribute nothing.
        if (pos.x >= 0 && pos.x <= 1 && pos.y >= 0 && pos.y <= 1)
        {
            float sampledDepth = LinearEyeDepth(SAMPLE_TEXTURE2D_LOD(_CameraDepthTexture, sampler_CameraDepthTexture, pos, 0).r, _ZBufferParams);
            //float sampledDepth = LinearEyeDepth(SampleCameraDepth(pos), _ZBufferParams);
            if (sampledDepth >= depth)
                contrib += sample_Contrib;
        }
    }
    return contrib;
}

v2f vert(appdata v)
{
    v2f o;

    float4 clip = TransformWorldToHClip(GetCameraRelativePositionWS(v.worldPosRadius.xyz));
    // clip.w equals the view-space depth, i.e. the distance from the camera along its forward axis.
    // The world-to-view matrix contains no scaling, so this is also a distance in world units.
    float depth = clip.w;

    float3 cameraUp = normalize(mul(UNITY_MATRIX_V, float4(0, 1, 0, 0))).xyz;
    float4 extent = TransformWorldToHClip(GetCameraRelativePositionWS(v.worldPosRadius.xyz + cameraUp * v.worldPosRadius.w));

    // Center of the sun / lens flare.
    float2 screenPos = clip.xy / clip.w; // perspective divide, range [-1,1]
    // Center of the top edge of the occlusion sphere.
    float2 extentPos = extent.xy / extent.w; // perspective divide, range [-1,1]
    float radius = distance(screenPos, extentPos);

    // Screen aspect ratio (width / height).
    float ratio = _ScreenParams.x / _ScreenParams.y;

    // Visible fraction of the light source.
    float occlusion = GetOcclusion(screenPos, depth - v.worldPosRadius.w, radius, ratio);

    // Fade the flare in and out according to the configured distances.
    float4 d = v.lensflare_fadeData;
    float distanceFade = saturate((depth - d.x) / (d.y - d.x));
    distanceFade *= 1.0f - saturate((depth - d.z) / (d.w - d.z));

    // position and rotate
    float angle = v.lensflare_data.y;
    if (angle < 0) // Automatic
    {
        float2 dir = normalize(screenPos);
        angle = atan2(dir.y, dir.x) + 1.57079632679; // + PI/2: arbitrary, we need V to face the source, not U
    }

    // Final quad size from the visible fraction and the configured size.
    float2 quad_size = lerp(_OccludedSizeScale, 1.0f, occlusion) * v.lensflare_data.zw;
    if (distanceFade * occlusion == 0.0f) // if either one or other is zeroed
        quad_size = float2(0, 0); // clip

    float2 local = v.vertex.xy * quad_size;

    // Rotate the quad with the 2x2 rotation matrix R:
    // {
    //   cos(θ), -sin(θ)
    //   sin(θ),  cos(θ)
    // }
    // applied to the vertex offset in screen space.
    local = float2(
        local.x * cos(angle) + local.y * (-sin(angle)),
        local.x * sin(angle) + local.y * cos(angle));

    // Adjust for the aspect ratio, otherwise the quad is stretched.
    local.x /= ratio;

    // RayPos moves the element along the line through the light and the screen center.
    float2 rayOffset = -screenPos * v.lensflare_data.x;

    o.vertex.w = v.vertex.w;
    o.vertex.xy = screenPos + local + rayOffset;
    o.vertex.z = 1;
    o.uv = v.uv;
    o.color = v.color * occlusion * distanceFade * saturate(length(screenPos * 2));
    return o;
}
```
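The distance fade computed in `vert` is easy to check outside the shader. The following plain C# sketch mirrors the two `saturate` expressions; `DistanceFadeSketch` and `Fade` are hypothetical names used only for this illustration, and the parameters correspond to the X, Y, Z, W components of `lensflare_fadeData`.

```csharp
using System;

public static class DistanceFadeSketch
{
    // Equivalent of HLSL saturate(): clamp to [0, 1].
    static float Saturate(float x) => Math.Max(0f, Math.Min(1f, x));

    // Fades in between nearStart and nearEnd, fades out between farStart and farEnd.
    public static float Fade(float depth, float nearStart, float nearEnd, float farStart, float farEnd)
    {
        float fadeIn = Saturate((depth - nearStart) / (nearEnd - nearStart));
        float fadeOut = 1f - Saturate((depth - farStart) / (farEnd - farStart));
        return fadeIn * fadeOut;
    }
}
```

With the script's default values (1, 3, 10, 50), a light source 2 units from the camera gets a factor of 0.5, one at 5 units gets 1, one at 30 units gets 0.5, and anything beyond 50 units gets 0.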

LensFlareAdditive.shader:

```shaderlab
Shader "zhangguangmu/tutorial/lensflare/LensFlareAdditive"
{
    Properties
    {
        _MainTex("Texture", 2D) = "white" {}
        _OccludedSizeScale("Occluded Size Scale", Float) = 1.0
    }

    SubShader
    {
        // The render queue is a SubShader-level tag named "Queue".
        Tags { "Queue" = "Transparent" }

        Pass
        {
            Blend One One   // additive
            ColorMask RGB
            ZWrite Off
            Cull Off
            ZTest Always    // occlusion is handled in the vertex shader

            HLSLPROGRAM
            #pragma target 5.0
            #pragma vertex vert
            #pragma fragment frag

            #include "Packages/com.unity.render-pipelines.universal/ShaderLibrary/Lighting.hlsl"
            #include "LensFlareCommon.hlsl"

            real4 frag(v2f i) : SV_Target
            {
                float4 col = SAMPLE_TEXTURE2D(_MainTex, sampler_MainTex, i.uv);
                return col * i.color;
            }
            ENDHLSL
        }
    }
}
```
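Only the additive variant is listed above. As a rough guide to how the three variants differ, the sketch below writes out the per-channel blend arithmetic in plain C#. The additive case corresponds to the `Blend One One` state shown in the listing; the factors for the other two are assumptions inferred from the file names (`Blend One OneMinusSrcAlpha` for Premultiplied, `Blend SrcAlpha OneMinusSrcAlpha` for Lerp) and should be checked against the actual shader files. `BlendSketch` is a hypothetical name.

```csharp
public static class BlendSketch
{
    // Blend One One: the flare is simply added to what is already on screen.
    public static float Additive(float src, float dst) => src + dst;

    // Assumed Blend One OneMinusSrcAlpha: src is expected to be pre-multiplied by its alpha.
    public static float Premultiplied(float src, float srcAlpha, float dst) =>
        src + dst * (1f - srcAlpha);

    // Assumed Blend SrcAlpha OneMinusSrcAlpha: classic alpha interpolation.
    public static float Lerp(float src, float srcAlpha, float dst) =>
        src * srcAlpha + dst * (1f - srcAlpha);
}
```

Additive blending can only brighten the image, which suits glow-like flare elements, while the other two modes let an element partially cover the background.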