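Learning from the OpenCV sources/samples/cpp examples

Yesterday I finished reading the three PDFs that ship with OpenCV: opencv_cheatsheet.pdf, opencv_tutorials.pdf and opencv_user.pdf. If you have already read Qianmo (浅墨)'s book 《OpenCV3编程入门》 (an introduction to OpenCV3 programming), you can mostly skip opencv_tutorials.pdf, because the book is based on it. However, you may want to do fisheye image correction, stitching and panoramic roaming, or your research direction may be the same as mine. In that case, read Chapter 5 of opencv_tutorials.pdf on camera calibration and chessboard calibration, because Qianmo's book leaves that material out.

Then I started reading the cpp files related to image correction in D:\myopencv\opencv\sources\samples\cpp.

(1) brief_match_test.cpp

I modified brief_match_test.cpp slightly and then ran it. Wherever I changed something, I turned the original line into a comment and wrote my version after it: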

#include "opencv2/core/core.hpp"

#include "opencv2/calib3d/calib3d.hpp"

#include "opencv2/features2d/features2d.hpp"

#include "opencv2/imgproc/imgproc.hpp"

#include "opencv2/highgui/highgui.hpp"

#include <iostream>

#include <vector>

using namespace cv;

using namespace std;

//Copy (x,y) location of descriptor matches found from KeyPoint data structures into Point2f vectors

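static void matches2points(const vector<DMatch>& matches, const vector<KeyPoint>& kpts_train,

const vector<KeyPoint>& kpts_query, vector<Point2f>& pts_train, vector<Point2f>& pts_query)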

{

pts_train.clear();

pts_query.clear();

pts_train.reserve(matches.size());

pts_query.reserve(matches.size());

for (size_t i = 0; i < matches.size(); i++)

{

const DMatch& match = matches[i];

pts_query.push_back(kpts_query[match.queryIdx].pt);

pts_train.push_back(kpts_train[match.trainIdx].pt);

}

}

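static double match(const vector<KeyPoint>& /*kpts_train*/, const vector<KeyPoint>& /*kpts_query*/, DescriptorMatcher& matcher,

const Mat& train, const Mat& query, vector<DMatch>& matches)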

{

double t = (double)getTickCount();

matcher.match(query, train, matches); //Using features2d

return ((double)getTickCount() - t) / getTickFrequency();

}

static void help()

{

cout << "This program shows how to use BRIEF descriptor to match points in features2d" << endl <<

"It takes in two images, finds keypoints and matches them displaying matches and final homography warped results" << endl <<

"Usage: " << endl <<

"image1 image2 " << endl <<

"Example: " << endl <<

"box.png box_in_scene.png " << endl;

}

const char* keys =

{

"{1| |box.png |the first image}"

"{2| |box_in_scene.png|the second image}"

};

int main(int argc, const char ** argv)

{

help();

CommandLineParser parser(argc, argv, keys);

//string im1_name = parser.get<string>("1");

//string im2_name = parser.get<string>("2");

//Mat im1 = imread(im1_name, CV_LOAD_IMAGE_GRAYSCALE);

//Mat im2 = imread(im2_name, CV_LOAD_IMAGE_GRAYSCALE);

Mat im1=imread("1.jpg",0);

Mat im2=imread("2.jpg",0);

if (im1.empty() || im2.empty())

{

cout << "could not open one of the images..." << endl;

cout << "the cmd parameters have next current value: " << endl;

parser.printParams();

return 1;

}

double t = (double)getTickCount();

FastFeatureDetector detector(50);

BriefDescriptorExtractor extractor(32); //this is really 32 x 8 matches since they are binary matches packed into bytes

vector<KeyPoint> kpts_1, kpts_2;

detector.detect(im1, kpts_1);

detector.detect(im2, kpts_2);

t = ((double)getTickCount() - t) / getTickFrequency();

//cout << "found " << kpts_1.size() << " keypoints in " << im1_name << endl << "found " << kpts_2.size()

// << " keypoints in " << im2_name << endl << "took " << t << " seconds." << endl;

cout << "found " << kpts_1.size() << " keypoints in " << "im1" << endl << "found " << kpts_2.size()

<< " keypoints in " << "im2" << endl << "took " << t << " seconds." << endl;

Mat desc_1, desc_2;

cout << "computing descriptors..." << endl;

t = (double)getTickCount();

extractor.compute(im1, kpts_1, desc_1);

extractor.compute(im2, kpts_2, desc_2);

t = ((double)getTickCount() - t) / getTickFrequency();

cout << "done computing descriptors... took " << t << " seconds" << endl;

//Do matching using features2d

cout << "matching with BruteForceMatcher<Hamming>" << endl;

BFMatcher matcher_popcount(NORM_HAMMING);

vector<DMatch> matches_popcount;

double pop_time = match(kpts_1, kpts_2, matcher_popcount, desc_1, desc_2, matches_popcount);

cout << "done BruteForceMatcher<Hamming> matching. took " << pop_time << " seconds" << endl;

vector<Point2f> mpts_1, mpts_2;

matches2points(matches_popcount, kpts_1, kpts_2, mpts_1, mpts_2); //Extract a list of the (x,y) location of the matches

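vector<char> outlier_mask; //stays empty while findHomography below is commented out, so drawMatches draws every match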

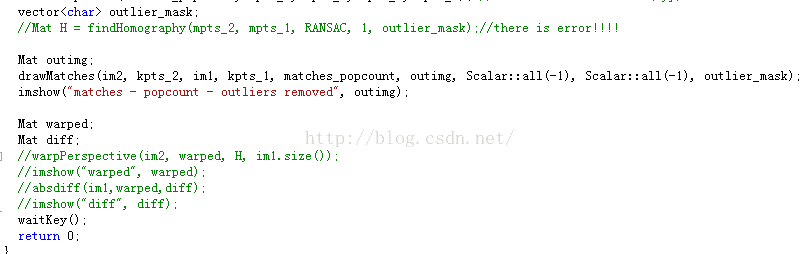

//Mat H = findHomography(mpts_2, mpts_1, RANSAC, 1, outlier_mask); //this call raised a runtime error

Mat outimg;

drawMatches(im2, kpts_2, im1, kpts_1, matches_popcount, outimg, Scalar::all(-1), Scalar::all(-1), outlier_mask);

imshow("matches - popcount - outliers removed", outimg);

Mat warped;

Mat diff;

//warpPerspective(im2, warped, H, im1.size());

//imshow("warped", warped);

//absdiff(im1,warped,diff);

//imshow("diff", diff);

waitKey();

return 0;

}

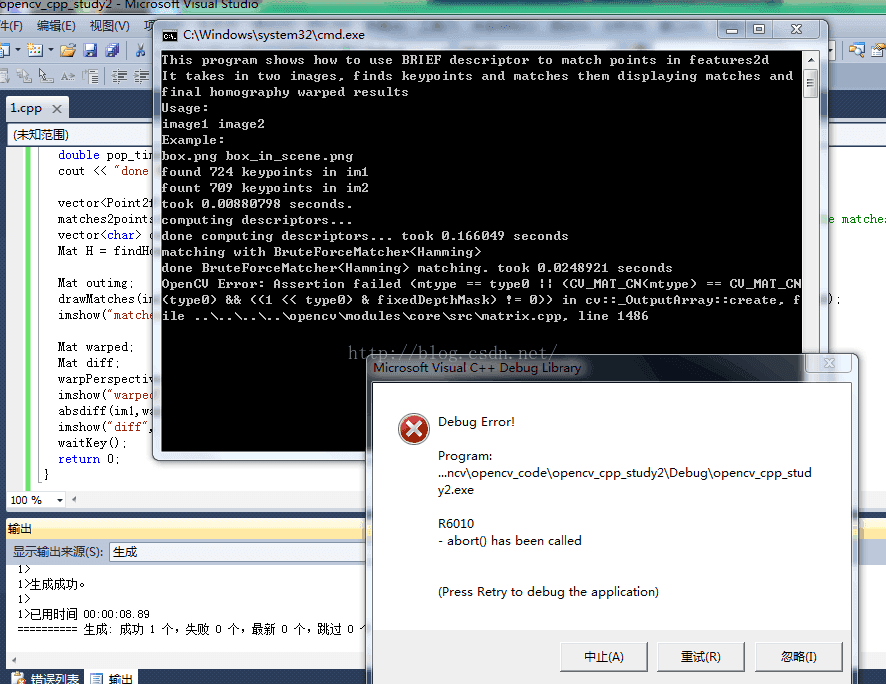

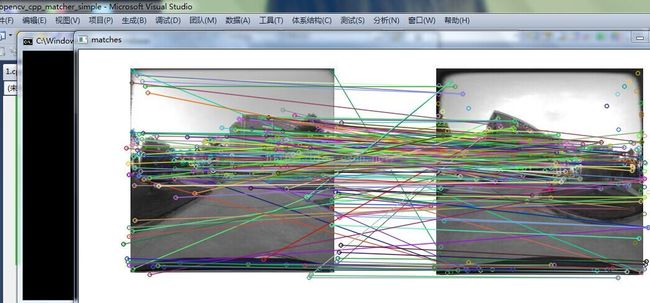
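The sketch below shows roughly what BFMatcher(NORM_HAMMING) does with the BRIEF descriptors in the code above. BriefDescriptorExtractor(32) produces 32 bytes (256 bits) per keypoint. The Hamming distance between two descriptors is the number of bits that differ. This is only a standard-library illustration. BinaryDesc, SimpleMatch, hammingDistance and bruteForceMatch are hypothetical names, not OpenCV API.

```cpp
#include <bitset>
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <limits>
#include <vector>

// One binary descriptor; BriefDescriptorExtractor(32) gives 32 bytes = 256 bits.
using BinaryDesc = std::vector<std::uint8_t>;

// Hamming distance = number of bit positions where the two descriptors differ.
int hammingDistance(const BinaryDesc& a, const BinaryDesc& b) {
    assert(a.size() == b.size());
    int dist = 0;
    for (std::size_t i = 0; i < a.size(); ++i)
        dist += static_cast<int>(std::bitset<8>(a[i] ^ b[i]).count());  // XOR marks differing bits
    return dist;
}

// Simplified stand-in for cv::DMatch.
struct SimpleMatch {
    int queryIdx;
    int trainIdx;
    int distance;
};

// Brute force: compare every query descriptor with every train descriptor
// and keep the nearest one (on ties, the first one found is kept here).
std::vector<SimpleMatch> bruteForceMatch(const std::vector<BinaryDesc>& query,
                                         const std::vector<BinaryDesc>& train) {
    std::vector<SimpleMatch> matches;
    if (train.empty()) return matches;
    for (std::size_t q = 0; q < query.size(); ++q) {
        SimpleMatch best{static_cast<int>(q), -1, std::numeric_limits<int>::max()};
        for (std::size_t t = 0; t < train.size(); ++t) {
            int d = hammingDistance(query[q], train[t]);
            if (d < best.distance) {
                best.trainIdx = static_cast<int>(t);
                best.distance = d;
            }
        }
        matches.push_back(best);
    }
    return matches;
}
```

In the sample, match() passes desc_2 as the query set and desc_1 as the train set. So the analogous call here would be bruteForceMatch(rows of desc_2, rows of desc_1).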

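You can see many mismatched pairs. The matches obtained so far, whether with SIFT, SURF or anything else, are only a preliminary set of correspondences between the two images. Normally RANSAC should then be run to keep only the reliable pairs. I didn't do that because it caused an error. Suppose I don't make my change, i.e. I leave the findHomography and warpPerspective lines in the code above uncommented and run RANSAC as the original cpp does. Then this happens: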


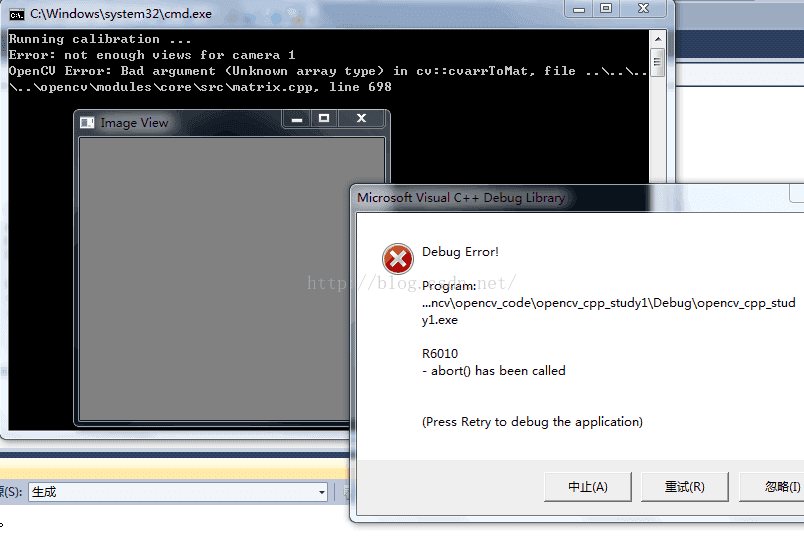

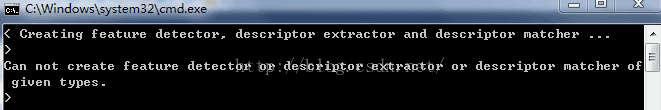

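That is the error I get. I don't know the exact cause yet. I only know that it starts at the RANSAC call, which is why I commented it out earlier. I have since found a fix: change the call to Mat H = findHomography(mpts_2, mpts_1, RANSAC);, i.e. drop the trailing mask argument. This also drops the reprojection threshold of 1, so findHomography falls back to its default of 3. The program then opens four display windows: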


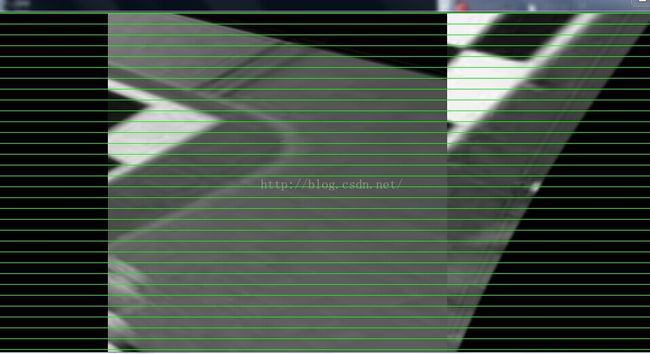

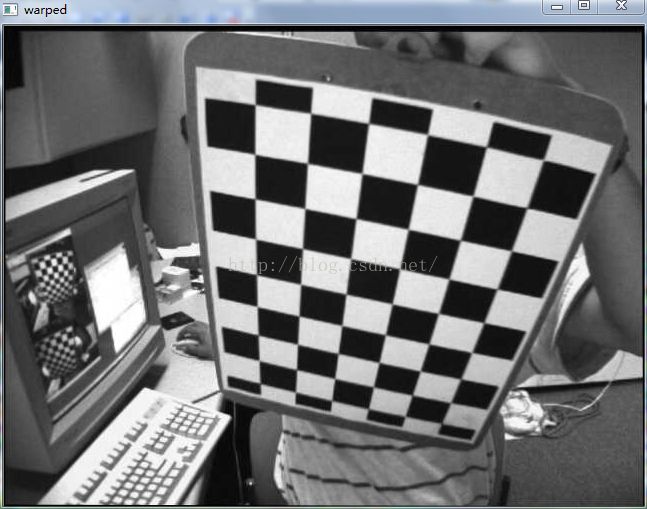

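This is the perspective transform found by Mat H = findHomography(mpts_2, mpts_1, RANSAC);. Next, warpPerspective(im2, warped, H, im1.size()); applies H to one of the images (im2), so that the difference between the two images can be displayed.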
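Here is how H maps a point. findHomography returns a 3x3 matrix. A point (x, y) is mapped by multiplying H with the homogeneous vector (x, y, 1) and dividing by the third component. The sketch below is minimal, and Homography, Pt and applyHomography are illustrative names, not OpenCV types. warpPerspective itself works backwards: by default it inverts H and, for each destination pixel, samples the source image at the inverse-mapped position.

```cpp
#include <array>
#include <cassert>
#include <cmath>

// Row-major 3x3 homography, like the Mat H returned by findHomography.
using Homography = std::array<std::array<double, 3>, 3>;

struct Pt {
    double x;
    double y;
};

// Map a point through H in homogeneous coordinates:
// [x' y' w]^T = H * [x y 1]^T, then divide by w.
Pt applyHomography(const Homography& H, Pt p) {
    double xh = H[0][0] * p.x + H[0][1] * p.y + H[0][2];
    double yh = H[1][0] * p.x + H[1][1] * p.y + H[1][2];
    double w  = H[2][0] * p.x + H[2][1] * p.y + H[2][2];
    assert(std::fabs(w) > 1e-12 && "point maps to infinity");
    return {xh / w, yh / w};
}
```

A pure translation has the bottom row (0, 0, 1), so w stays 1. A non-zero bottom row is what makes the mapping perspective.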


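absdiff(im1, warped, diff); computes the per-pixel absolute difference |im1 - warped| and writes it into the third argument, diff. It is often described as taking the absolute difference between the current frame and the background. Here, bright areas in diff are where the two images still differ after warping.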
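For two single-channel 8-bit images, the arithmetic behind absdiff is just a per-pixel |a - b|. The small sketch below uses a hypothetical GrayImage struct standing in for cv::Mat:

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <cstdlib>
#include <vector>

// A single-channel 8-bit image stored row by row (hypothetical helper type).
struct GrayImage {
    int rows;
    int cols;
    std::vector<std::uint8_t> data;  // rows * cols values
};

// Per-pixel |a - b|, the same arithmetic absdiff performs for 8-bit input.
GrayImage absDiff(const GrayImage& a, const GrayImage& b) {
    assert(a.rows == b.rows && a.cols == b.cols);  // absdiff also needs equal sizes
    assert(a.data.size() == b.data.size());
    GrayImage out{a.rows, a.cols, std::vector<std::uint8_t>(a.data.size())};
    for (std::size_t i = 0; i < a.data.size(); ++i) {
        int d = std::abs(static_cast<int>(a.data[i]) - static_cast<int>(b.data[i]));
        out.data[i] = static_cast<std::uint8_t>(d);  // d is in [0, 255], no clipping needed
    }
    return out;
}
```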


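As for why Mat H = findHomography(mpts_2, mpts_1, RANSAC); works, I'm still not entirely sure. The mask parameter of findHomography defaults to empty. When a mask is passed, its input values are ignored: the mask is an output that the function fills with inlier/outlier flags. In drawMatches(), on the other hand, passing a mask means only the matches with non-zero mask entries are drawn; otherwise all matches are drawn. This part is still muddled for me: why does it only work with an empty mask?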
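One way to untangle the mask question starts with how RANSAC uses it. findHomography fills the mask with 1 for inlier pairs and 0 for outliers. drawMatches then draws only the pairs whose matchesMask entry is non-zero.

A likely cause of the error, which I have not confirmed, is the element type. In OpenCV 2.4, findHomography creates its mask as 8-bit unsigned (CV_8U), whereas vector<char> corresponds to CV_8S, which can trip OpenCV's type assertion. If so, the fix is to receive the mask in a vector<uchar>, then copy it into a vector<char> for drawMatches.

The sketch below shows how such a mask arises. To keep it short, it fits a pure 2D translation instead of a homography. So it only demonstrates RANSAC's hypothesize-and-verify loop, not OpenCV's implementation. All names are hypothetical.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <cstdint>
#include <random>
#include <vector>

struct P2 {
    double x;
    double y;
};

// Simplified RANSAC: the model is a pure translation (dx, dy), not a full
// homography, so one correspondence is enough to form a hypothesis.
// Returns an inlier mask: 1 = inlier, 0 = outlier (the same convention as
// the mask findHomography writes).
std::vector<std::uint8_t> ransacTranslation(const std::vector<P2>& src,
                                            const std::vector<P2>& dst,
                                            double threshold, int iterations,
                                            unsigned seed = 42) {
    assert(src.size() == dst.size());
    std::vector<std::uint8_t> bestMask(src.size(), 0);
    if (src.empty()) return bestMask;
    std::mt19937 rng(seed);
    std::size_t bestCount = 0;
    for (int it = 0; it < iterations; ++it) {
        // 1. Hypothesis from one randomly chosen correspondence.
        std::size_t k = rng() % src.size();
        double dx = dst[k].x - src[k].x;
        double dy = dst[k].y - src[k].y;
        // 2. Consensus: mark pairs whose residual is within the threshold.
        std::vector<std::uint8_t> mask(src.size(), 0);
        std::size_t count = 0;
        for (std::size_t i = 0; i < src.size(); ++i) {
            double ex = src[i].x + dx - dst[i].x;
            double ey = src[i].y + dy - dst[i].y;
            if (std::sqrt(ex * ex + ey * ey) <= threshold) {
                mask[i] = 1;
                ++count;
            }
        }
        // 3. Keep the hypothesis with the largest consensus set.
        if (count > bestCount) {
            bestCount = count;
            bestMask = mask;
        }
    }
    return bestMask;
}

// drawMatches expects a vector<char> matchesMask; copy the 0/1 flags across.
std::vector<char> toMatchesMask(const std::vector<std::uint8_t>& inlierMask) {
    return std::vector<char>(inlierMask.begin(), inlierMask.end());
}
```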

(2) 3calibration.cpp

When I ran this one I got an error. I debugged it step by step, but the error remained, and I didn't really understand the program:

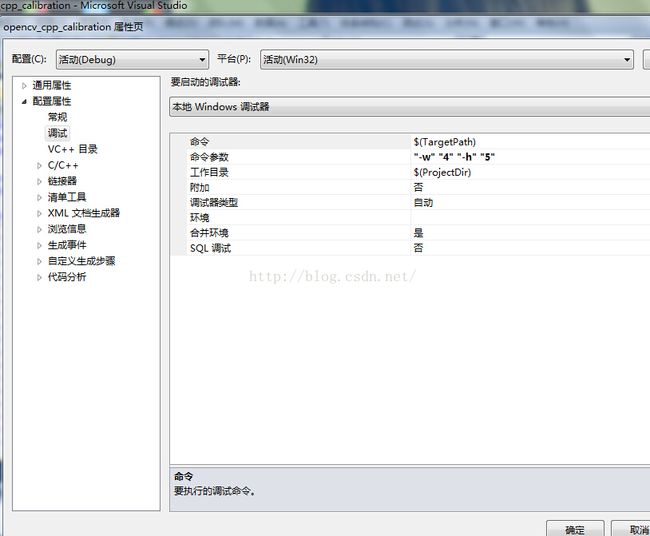

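I thought about it for a long time without finding the reason, so I shamelessly asked my classmate Wang Zhizhou (王志周). The problem was the arguments passed to main(). I had been passing three chessboard images, but, as help() explains, you are supposed to pass -w and -h plus wd.xml. I generate that XML file at the beginning of main() and put the images in the same directory.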

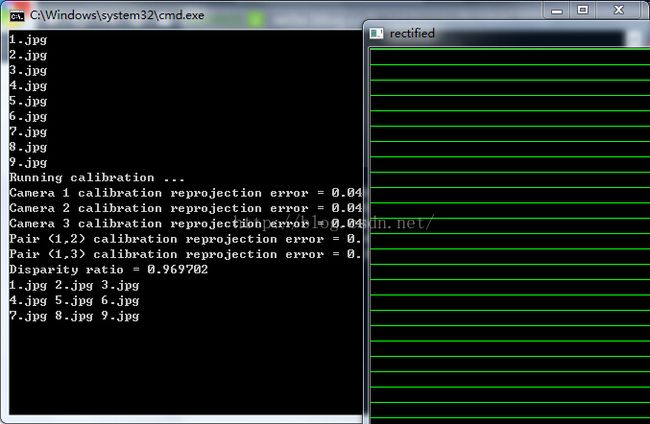

The result after running:

Each time I press Enter, the rectified window changes and the black box changes with it, until the last image has been processed.

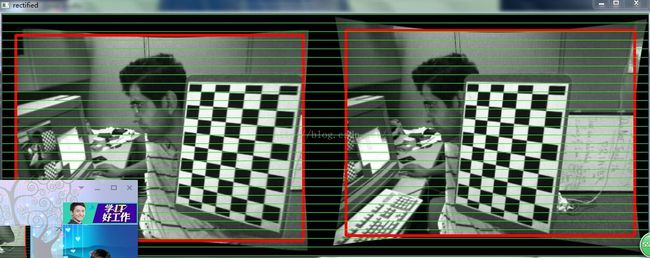

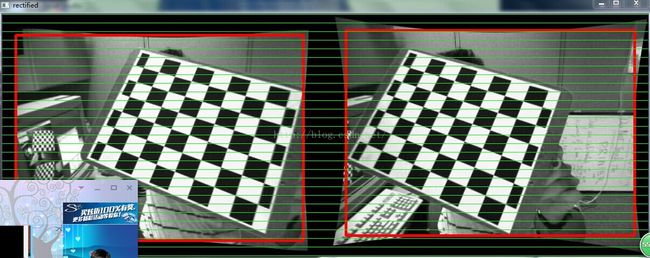

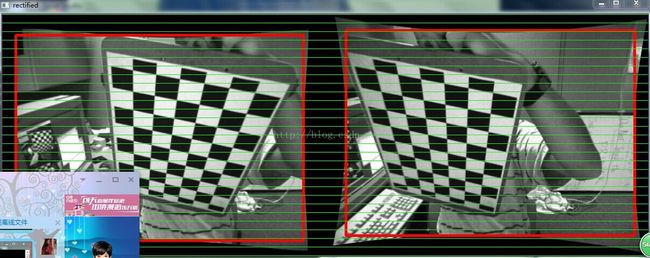

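This program first calibrates the cameras from the three viewpoints. That gives the rotation and translation matrices as well as the intrinsic and extrinsic parameters. I had assumed it would then correct the chessboard images, but it doesn't. As Wang Zhizhou pointed out, it uses these parameters to rectify the views so that the tilted chessboard is straightened. That is different from fisheye distortion correction.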
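For calibration, each detected corner is paired with its known 3D position on the board. Assume the board lies in the Z = 0 plane with square size squareSize. The object points then form a regular grid. Its dimensions are the -w and -h values, which presumably count inner corners per row and per column, as in the other calibration samples.

The sketch below builds that grid row by row, left to right. That is the order findChessboardCorners reports corners in. Point3 and chessboardObjectPoints are hypothetical names.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

struct Point3 {
    float x;
    float y;
    float z;
};

// Object points for a board with boardWidth x boardHeight inner corners,
// squares squareSize units wide, lying in the Z = 0 plane.
// Corners are listed row by row, left to right within each row.
std::vector<Point3> chessboardObjectPoints(int boardWidth, int boardHeight, float squareSize) {
    assert(boardWidth > 0 && boardHeight > 0 && squareSize > 0.f);
    std::vector<Point3> corners;
    corners.reserve(static_cast<std::size_t>(boardWidth) * boardHeight);
    for (int i = 0; i < boardHeight; ++i)
        for (int j = 0; j < boardWidth; ++j)
            corners.push_back({j * squareSize, i * squareSize, 0.f});
    return corners;
}
```

The same grid is reused for every view. Only the detected 2D corners change from image to image.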

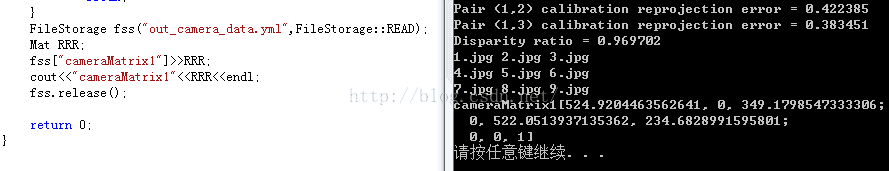

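Later I added a little code to print the camera matrix cameraMatrix1 obtained from calibration, which is written to the output file out_camera_data.yml. The result:

Of course, you can print the other two camera matrices and the rotation and translation matrices the same way; just add the code at the end of the program. After you press Enter through to the last image, they are displayed like this.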


Overall, though, the program's output still isn't good: in the images above only one corner of the chessboard is visible, not the whole board.
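A camera matrix such as cameraMatrix1 has the form [fx 0 cx; 0 fy cy; 0 0 1]. Here fx and fy are focal lengths in pixels and (cx, cy) is the principal point. Ignoring lens distortion, a point (X, Y, Z) in camera coordinates lands at pixel u = fx*X/Z + cx, v = fy*Y/Z + cy.

The sketch below uses hypothetical names. Real calibration also estimates distortion coefficients, which this sketch deliberately leaves out.

```cpp
#include <cassert>

// Intrinsic parameters read off a camera matrix
// K = [fx 0 cx; 0 fy cy; 0 0 1] (zero skew assumed).
struct Intrinsics {
    double fx;
    double fy;
    double cx;
    double cy;
};

struct Pixel {
    double u;
    double v;
};

// Ideal pinhole projection of a point given in camera coordinates.
// Lens distortion (the distortion coefficients from calibration) is ignored.
Pixel projectPinhole(const Intrinsics& K, double X, double Y, double Z) {
    assert(Z > 0.0 && "point must be in front of the camera");
    return {K.fx * X / Z + K.cx, K.fy * Y / Z + K.cy};
}
```

A point on the optical axis (X = Y = 0) always projects to (cx, cy), which is a quick sanity check for a printed camera matrix.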

Some other pages worth reading:

http://docs.opencv.org/modules/calib3d/doc/camera_calibration_and_3d_reconstruction.html

http://blog.csdn.net/u011867581/article/details/43083287

http://blog.csdn.net/yf0811240333/article/details/46399331

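I plan to work through the cpp files below before reading the first page.

After some searching, here are the cpp files I need to focus on, since I work on fisheye correction, stitching and panoramic roaming: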

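1: brief_match_test.cpp: matches 2D image feature points using the BRIEF descriptor.

2: calibration.cpp, 3calibration.cpp: camera calibration. Corners are extracted with OpenCV's built-in functions and then used for calibration.

3: calibration_artificial.cpp: automatic camera calibration from corners. After initialization it finds the corners, then calibrates with calibrateCamera.

4: chamfer.cpp: image matching. It binarizes the images and searches the target image for the template image, mainly by calling chamerMatching (that is how the function is spelled in OpenCV 2.4's contrib module).

5: descriptor_extractor_matcher.cpp: SIFT matching.

6: detector_descriptor_evaluation.cpp: evaluates feature detectors on various datasets. detector_descriptor_matcher_evaluation.cpp does the same for descriptor matching.

7: freak_demo.cpp: image matching with feature points, using the descriptor from A. Alahi, R. Ortiz, and P. Vandergheynst, "FREAK: Fast Retina Keypoint".

8: matcher_simple.cpp: SURF image matching. It takes few parameters and gives results similar to generic_descriptor_match.cpp. matching_to_many_images.cpp matches one image against many, also with the powerful SURF algorithm.

9: stereo_match.cpp: stereo matching.

10: stitching.cpp, stitching_detailed.cpp: image stitching, covering feature extraction, feature matching, image blending and so on. stitching.cpp wraps all of this in the Stitcher class.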

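If your research direction is the same as mine, these are roughly the files you need to read and understand, down to every function and algorithm they use.

Once again: OpenCV is such a hassle, and there is still so much I don't understand. I'm used to MATLAB and really not used to OpenCV yet.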

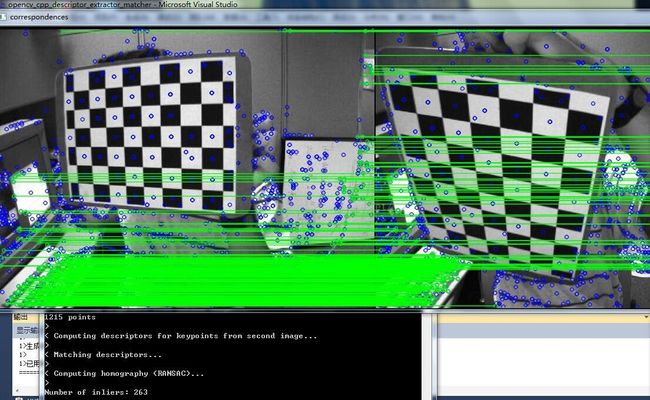

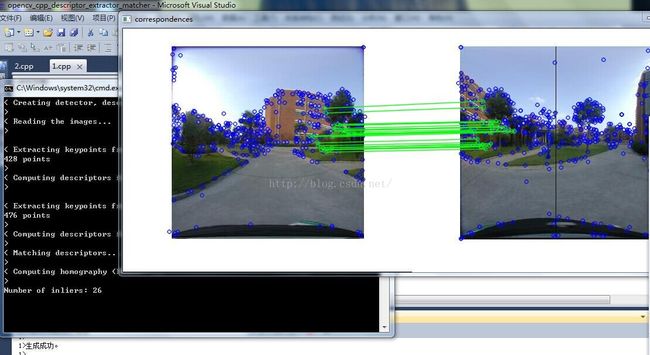

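(3) It's already 2015.9.23. Time flies, especially now that the professional master's students are all job hunting... time is running out... so I've started on stitching, beginning with descriptor_extractor_matcher.cpp.

Result with the chessboard images from OpenCV 2.4.9:

Result with fisheye-corrected images:

In this official sample, main() takes 6 (or optionally 7) arguments:

- argv[1] and argv[2] can be "SIFT" or "SURF".
- argv[3] selects brute-force matching ("BruteForce") or FLANN-based matching ("FlannBased").
- argv[4] selects the match filter, here "CrossCheckFilter".
- argv[5] and argv[6] are the two images to match.
- argv[7] is the RANSAC threshold used to refine the matched pairs; I set it to 3.

So the whole command line follows this pattern, and you can change the values: [detector] [descriptor] [matcher] [filter] [image1] [image2] [RANSAC threshold]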

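(4) matcher_simple.cpp

This is essentially the SIFT matching example from Qianmo's book that I studied when learning OpenCV; or rather, his example was adapted from this cpp.

There are many mismatched pairs. I also noticed something about main's signature, int main(int argc, char** argv). The book says argc is the number of arguments passed and argv holds the actual arguments. In this cpp I pass only two arguments, the names of the two images. So why does main start with if (argc != 3) {}? I figured it out: I just reached page 103 of Thinking in C++ (《C++编程思想》), which explains that argv[0] is the path and name of the program itself.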


So main always gets an implicit argument argv[0], and the arguments I actually pass start at argv[1]!
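This is easy to check without any images. The hypothetical helper below returns only the user-supplied arguments. It shows why a program expecting two file names tests argc != 3.

```cpp
#include <cassert>
#include <string>
#include <vector>

// argv[0] holds the program's own path/name; user arguments start at argv[1].
std::vector<std::string> userArguments(int argc, char** argv) {
    assert(argv != nullptr);
    if (argc <= 1) return {};  // only the program name (or nothing) was passed
    return std::vector<std::string>(argv + 1, argv + argc);
}

// A program that needs exactly two image names must therefore see argc == 3.
bool hasTwoImageArgs(int argc) {
    return argc == 3;  // program name + image1 + image2
}
```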


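(5) generic_descriptor_match.cpp

This cpp takes 4 arguments. The first two are the two images; I didn't know what to pass for the last two. In principle, these three statements tell you what they should be: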

std::string alg_name = std::string(argv[3]);

std::string params_filename = std::string(argv[4]);

Ptr<GenericDescriptorMatcher> descriptorMatcher = GenericDescriptorMatcher::create(alg_name, params_filename);

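I can't view the source of GenericDescriptorMatcher::create(), so I don't know what to pass as the third and fourth arguments.

Never mind. I've spent several days on this and still don't know, so I'll set it aside and come back to it after running the other cpp files.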

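(6) stitching.cpp

This one does image stitching. At first I passed two fisheye-corrected images to main, but it kept failing with "Can't stitch images, error code = 1". After downloading new images from the web, it worked. Status code 1 of cv::Stitcher is ERR_NEED_MORE_IMGS, returned when the stitcher cannot find enough images that overlap with sufficient confidence.

It turns out that my earlier fisheye-corrected images don't work. Images like the one below, with blank edges, fail, while images like the three above work. Don't use images like the one below for stitching, or you'll hit the same error I did: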


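(7) stitching_detailed.cpp

I passed only two images, "2.jpg" and "3.jpg" from above, and they were indeed stitched. The sample saves the result with imwrite, so you can find it as result.jpg in the project directory:

But I wanted to see the result directly when the program finishes, so I added imshow. The result doesn't show up, though, as below. Why??

Once I've run all the cpp files related to correction and stitching, I'll study their source code; I should understand it then.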


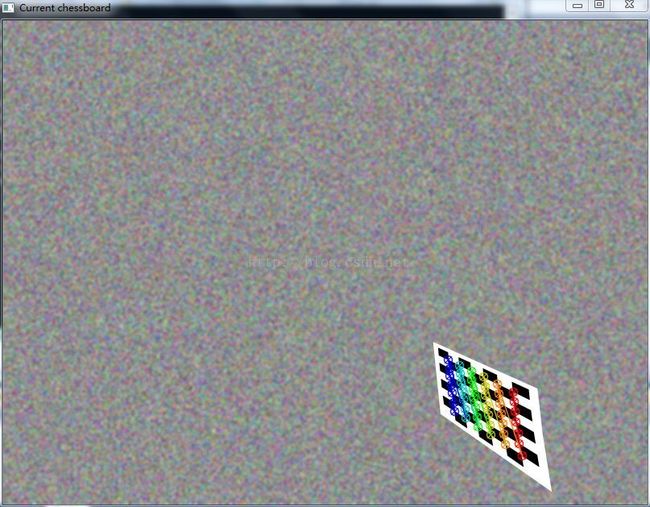

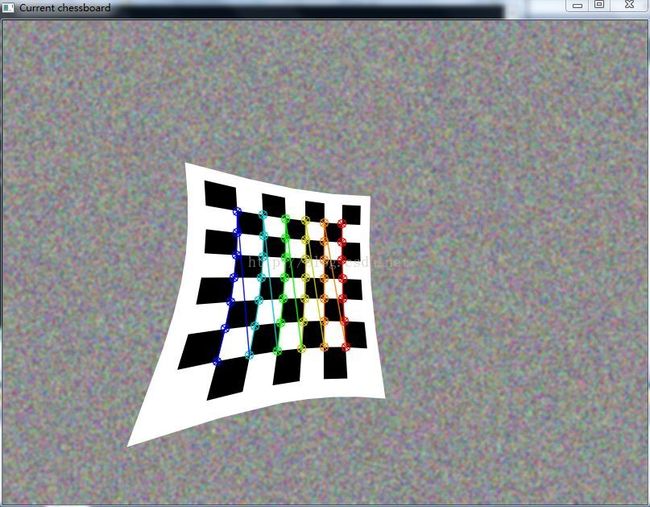

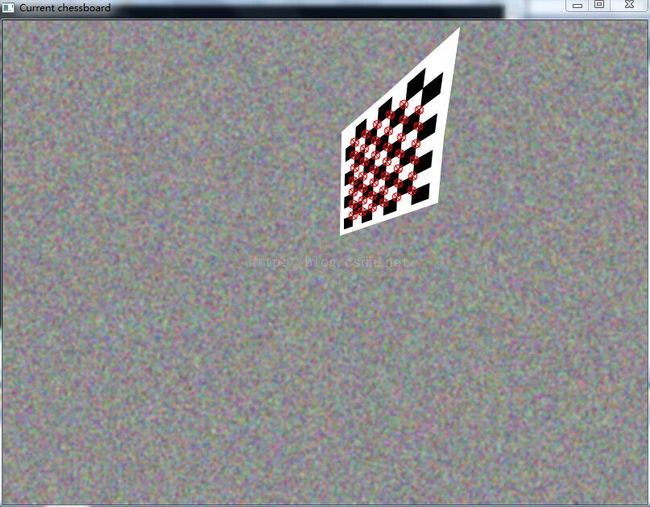

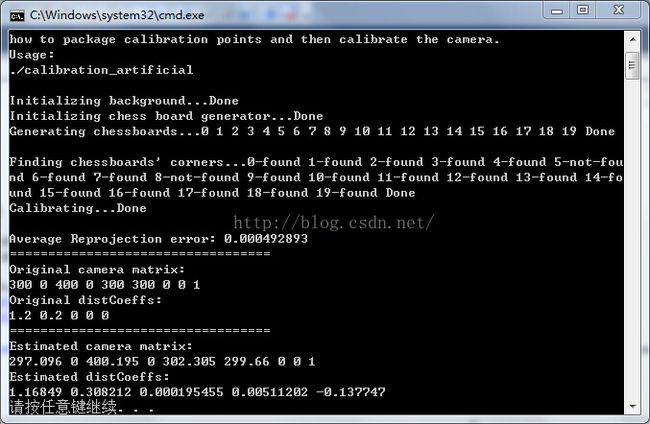

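(8) calibration_artificial.cpp

This one finds chessboard corners automatically for calibration. It runs as an animation: chessboards keep appearing in different orientations, and the program searches them for corners. Here are just a few frames: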

(9) stereo_calib.cpp

This is stereo camera calibration. The arguments I passed were:

The result is below; pressing Enter advances to the next image:

(10) calibration.cpp

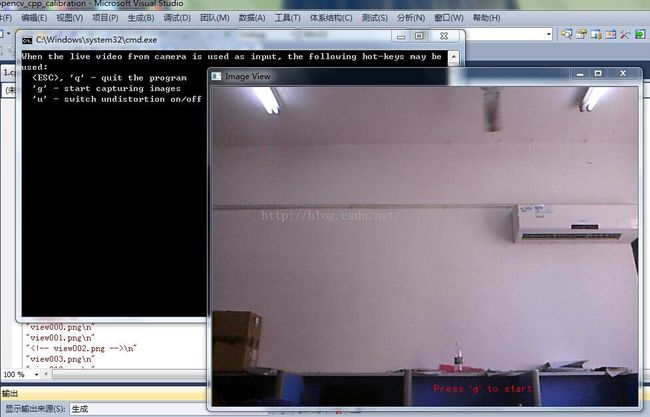

This is camera calibration. When I pass the following arguments:

what it captures is the scene across from my computer right now. Press

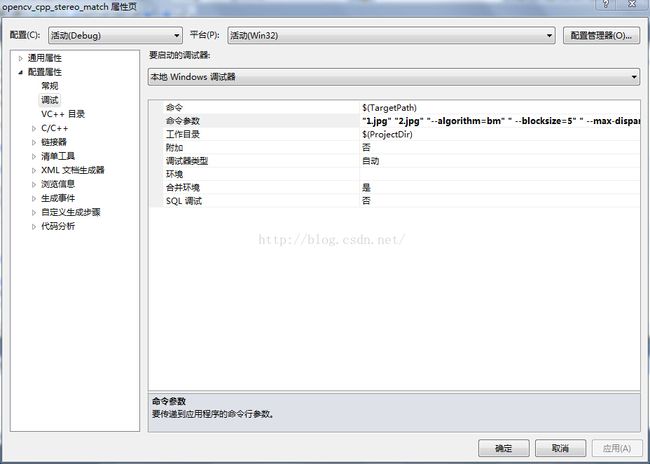

(11) stereo_match.cpp

This is stereo matching, but running it gives an error: Command_line parameter error:unknown option -o disparity.bmp

Arguments passed: l.jpg 2.jpg --algorithm=bm --blocksize=5 --max-disparity=256 --scale=1.0 --no-display -o disparity.bmp

I don't really understand this one; it computes a disparity map.

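So far, calibration.cpp and stitching_detailed.cpp run but still have small problems. generic_descriptor_match.cpp, matching_to_many_images.cpp and stereo_match.cpp don't even run successfully. The other seven run fine, but I haven't analyzed their source code yet.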

(12) matching_to_many_images.cpp

#include "opencv2/highgui/highgui.hpp"

#include "opencv2/features2d/features2d.hpp"

#include "opencv2/contrib/contrib.hpp"

#include <iostream>

#include <fstream>

using namespace std;

using namespace cv;

const string defaultDetectorType = "SURF";

const string defaultDescriptorType = "SURF";

const string defaultMatcherType = "FlannBased";

const string defaultQueryImageName = "D:/myopencv/opencv_code/matching_to_many_images/query.png";

const string defaultFileWithTrainImages = "D:/myopencv/opencv_code/matching_to_many_images/train/trainImages.txt";

const string defaultDirToSaveResImages = "D:/myopencv/opencv_code/matching_to_many_images/results";

static void printPrompt( const string& applName )

{

cout << "/*\n"

<< " * This is a sample on matching descriptors detected on one image to descriptors detected in image set.\n"

<< " * So we have one query image and several train images. For each keypoint descriptor of query image\n"

<< " * the one nearest train descriptor is found in the entire collection of train images. To visualize the result\n"

<< " * of matching we save images, each of which combines query and train image with matches between them (if they exist).\n"

<< " * Match is drawn as line between corresponding points. Count of all matches is equal to count of\n"

<< " * query keypoints, so we have the same count of lines in all set of result images (but not for each result\n"

<< " * (train) image).\n"

<< " */\n" << endl;

cout << endl << "Format:\n" << endl;

cout << "./" << applName << " [detectorType] [descriptorType] [matcherType] [queryImage] [fileWithTrainImages] [dirToSaveResImages]" << endl;

cout << endl;

cout << "\nExample:" << endl

<< "./" << applName << " " << defaultDetectorType << " " << defaultDescriptorType << " " << defaultMatcherType << " "

<< defaultQueryImageName << " " << defaultFileWithTrainImages << " " << defaultDirToSaveResImages << endl;

}

static void maskMatchesByTrainImgIdx( const vector<DMatch>& matches, int trainImgIdx, vector<char>& mask )

{

mask.resize( matches.size() );

fill( mask.begin(), mask.end(), 0 );

for( size_t i = 0; i < matches.size(); i++ )

{

if( matches[i].imgIdx == trainImgIdx )

mask[i] = 1;

}

}

static void readTrainFilenames( const string& filename, string& dirName, vector<string>& trainFilenames )

{

trainFilenames.clear();

ifstream file( filename.c_str() );

if ( !file.is_open() )

return;

size_t pos = filename.rfind('\\');

char dlmtr = '\\';

if (pos == String::npos)

{

pos = filename.rfind('/');

dlmtr = '/';

}

dirName = pos == string::npos ? "" : filename.substr(0, pos) + dlmtr;

while( !file.eof() )

{

string str; getline( file, str );

if( str.empty() ) break;

trainFilenames.push_back(str);

}

file.close();

}

static bool createDetectorDescriptorMatcher( const string& detectorType, const string& descriptorType, const string& matcherType,

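Ptr<FeatureDetector>& featureDetector,

Ptr<DescriptorExtractor>& descriptorExtractor,

Ptr<DescriptorMatcher>& descriptorMatcher )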

{

cout << "< Creating feature detector, descriptor extractor and descriptor matcher ..." << endl;

featureDetector = FeatureDetector::create( detectorType );

descriptorExtractor = DescriptorExtractor::create( descriptorType );

descriptorMatcher = DescriptorMatcher::create( matcherType );

cout << ">" << endl;

bool isCreated = !( featureDetector.empty() || descriptorExtractor.empty() || descriptorMatcher.empty() );

if( !isCreated )

cout << "Can not create feature detector or descriptor extractor or descriptor matcher of given types." << endl << ">" << endl; //in my run, execution reached this line and printed this error!

return isCreated;

}

static bool readImages( const string& queryImageName, const string& trainFilename,

Mat& queryImage, vector<Mat>& trainImages, vector<string>& trainImageNames )

{

cout << "< Reading the images..." << endl;

queryImage = imread( queryImageName, CV_LOAD_IMAGE_GRAYSCALE);

if( queryImage.empty() )

{

cout << "Query image can not be read." << endl << ">" << endl;

return false;

}

string trainDirName;

readTrainFilenames( trainFilename, trainDirName, trainImageNames );

if( trainImageNames.empty() )

{

cout << "Train image filenames can not be read." << endl << ">" << endl;

return false;

}

int readImageCount = 0;

for( size_t i = 0; i < trainImageNames.size(); i++ )

{

string filename = trainDirName + trainImageNames[i];

Mat img = imread( filename, CV_LOAD_IMAGE_GRAYSCALE );

if( img.empty() )

cout << "Train image " << filename << " can not be read." << endl;

else

readImageCount++;

trainImages.push_back( img );

}

if( !readImageCount )

{

cout << "All train images can not be read." << endl << ">" << endl;

return false;

}

else

cout << readImageCount << " train images were read." << endl;

cout << ">" << endl;

return true;

}

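static void detectKeypoints( const Mat& queryImage, vector<KeyPoint>& queryKeypoints,

const vector<Mat>& trainImages, vector<vector<KeyPoint> >& trainKeypoints,

Ptr<FeatureDetector>& featureDetector )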

{

cout << endl << "< Extracting keypoints from images..." << endl;

featureDetector->detect( queryImage, queryKeypoints );

featureDetector->detect( trainImages, trainKeypoints );

cout << ">" << endl;

}

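static void computeDescriptors( const Mat& queryImage, vector<KeyPoint>& queryKeypoints, Mat& queryDescriptors,

const vector<Mat>& trainImages, vector<vector<KeyPoint> >& trainKeypoints, vector<Mat>& trainDescriptors,

Ptr<DescriptorExtractor>& descriptorExtractor )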

{

cout << "< Computing descriptors for keypoints..." << endl;

descriptorExtractor->compute( queryImage, queryKeypoints, queryDescriptors );

descriptorExtractor->compute( trainImages, trainKeypoints, trainDescriptors );

int totalTrainDesc = 0;

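for( vector<Mat>::const_iterator tdIter = trainDescriptors.begin(); tdIter != trainDescriptors.end(); tdIter++ )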

totalTrainDesc += tdIter->rows;

cout << "Query descriptors count: " << queryDescriptors.rows << "; Total train descriptors count: " << totalTrainDesc << endl;

cout << ">" << endl;

}

static void matchDescriptors( const Mat& queryDescriptors, const vector<Mat>& trainDescriptors,

vector<DMatch>& matches, Ptr<DescriptorMatcher>& descriptorMatcher )

{

cout << "< Set train descriptors collection in the matcher and match query descriptors to them..." << endl;

TickMeter tm;

tm.start();

descriptorMatcher->add( trainDescriptors );

descriptorMatcher->train();

tm.stop();

double buildTime = tm.getTimeMilli();

tm.start();

descriptorMatcher->match( queryDescriptors, matches );

tm.stop();

double matchTime = tm.getTimeMilli();

CV_Assert( queryDescriptors.rows == (int)matches.size() || matches.empty() );

cout << "Number of matches: " << matches.size() << endl;

cout << "Build time: " << buildTime << " ms; Match time: " << matchTime << " ms" << endl;

cout << ">" << endl;

}

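static void saveResultImages( const Mat& queryImage, const vector<KeyPoint>& queryKeypoints,

const vector<Mat>& trainImages, const vector<vector<KeyPoint> >& trainKeypoints,

const vector<DMatch>& matches, const vector<string>& trainImagesNames, const string& resultDir )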

{

cout << "< Save results..." << endl;

Mat drawImg;

vector<char> mask;

for( size_t i = 0; i < trainImages.size(); i++ )

{

if( !trainImages[i].empty() )

{

maskMatchesByTrainImgIdx( matches, (int)i, mask );

drawMatches( queryImage, queryKeypoints, trainImages[i], trainKeypoints[i],

matches, drawImg, Scalar(255, 0, 0), Scalar(0, 255, 255), mask );

string filename = resultDir + "/res_" + trainImagesNames[i];

if( !imwrite( filename, drawImg ) )

cout << "Image " << filename << " can not be saved (may be because directory " << resultDir << " does not exist)." << endl;

}

}

cout << ">" << endl;

}

int main(int argc, char** argv)

{

string detectorType = defaultDetectorType;

string descriptorType = defaultDescriptorType;

string matcherType = defaultMatcherType;

string queryImageName = defaultQueryImageName;

string fileWithTrainImages = defaultFileWithTrainImages;

string dirToSaveResImages = defaultDirToSaveResImages;

if( argc != 7 && argc != 1 )

{

printPrompt( argv[0] );

return -1;

}

if( argc != 1 )

{

detectorType = argv[1]; descriptorType = argv[2]; matcherType = argv[3];

queryImageName = argv[4]; fileWithTrainImages = argv[5];

dirToSaveResImages = argv[6];

}

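Ptr<FeatureDetector> featureDetector;

Ptr<DescriptorExtractor> descriptorExtractor;

Ptr<DescriptorMatcher> descriptorMatcher;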

if( !createDetectorDescriptorMatcher( detectorType, descriptorType, matcherType, featureDetector, descriptorExtractor, descriptorMatcher ) )

{

printPrompt( argv[0] );

return -1;

}

Mat queryImage;

vector<Mat> trainImages;

vector<string> trainImagesNames;

if( !readImages( queryImageName, fileWithTrainImages, queryImage, trainImages, trainImagesNames ) )

{

printPrompt( argv[0] );

return -1;

}

vector<KeyPoint> queryKeypoints;

vector<vector<KeyPoint> > trainKeypoints;

detectKeypoints( queryImage, queryKeypoints, trainImages, trainKeypoints, featureDetector );

Mat queryDescriptors;

vector<Mat> trainDescriptors;

computeDescriptors( queryImage, queryKeypoints, queryDescriptors,

trainImages, trainKeypoints, trainDescriptors,

descriptorExtractor );

vector<DMatch> matches;

matchDescriptors( queryDescriptors, trainDescriptors, matches, descriptorMatcher );

saveResultImages( queryImage, queryKeypoints, trainImages, trainKeypoints,

matches, trainImagesNames, dirToSaveResImages );

return 0;

}

if( !createDetectorDescriptorMatcher( detectorType, descriptorType, matcherType, featureDetector, descriptorExtractor, descriptorMatcher ) )

{

printPrompt( argv[0] );

return -1;

}

Errors start at this statement: featureDetector, descriptorExtractor and descriptorMatcher are empty. But they were created when that function was called, so why are they still empty?
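Here is a likely reason, though I have not confirmed it for this exact setup. In OpenCV 2.4.x, SIFT and SURF live in the separate nonfree module. FeatureDetector::create("SURF") and DescriptorExtractor::create("SURF") look the name up in a table of registered algorithms. They return an empty Ptr when nothing is registered under that name. DescriptorMatcher::create("FlannBased") does not depend on nonfree.

The usual fix has three parts:

- Add #include "opencv2/nonfree/nonfree.hpp".
- Link the opencv_nonfree library.
- Call cv::initModule_nonfree() at the start of main(), before createDetectorDescriptorMatcher().

The toy registry below is entirely hypothetical, not OpenCV code. It only models why create() can hand back an empty pointer until a module has registered its algorithms.

```cpp
#include <cassert>
#include <functional>
#include <map>
#include <memory>
#include <string>

// Toy model of a name -> factory registry (hypothetical, for illustration only).
struct Detector {
    virtual ~Detector() = default;
    virtual std::string name() const = 0;
};

struct SurfLike : Detector {
    std::string name() const override { return "SURF"; }
};

using Factory = std::function<std::unique_ptr<Detector>()>;

std::map<std::string, Factory>& registry() {
    static std::map<std::string, Factory> r;  // starts empty
    return r;
}

// Plays the role of a module initializer such as initModule_nonfree():
// it registers the algorithms that module provides.
void initToyNonfreeModule() {
    registry()["SURF"] = [] { return std::unique_ptr<Detector>(new SurfLike()); };
}

// Plays the role of FeatureDetector::create(name): returns an empty
// pointer when nothing is registered under that name.
std::unique_ptr<Detector> createDetector(const std::string& name) {
    auto it = registry().find(name);
    if (it == registry().end()) return nullptr;
    return it->second();
}
```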