Oracle logmnr挖掘DDL语句实现误删除表的恢复

案例场景:

当时一个同事在公司业务统计库上误删除了一个表,下午做业务统计,突然这个表查不到,领导说需要恢复,给我一天时间,让我将这个表恢复,数据库可以申请停机时间。

当时存在的备份:

昨天的手工备份,归档日志都在,当前日志也正常。

模拟恢复场景:

数据库正在运行,Drop purge操作。

恢复分析

无法执行闪回drop,因为使用purge,直接跳过回收站。

由于没有使用RMAN备份,所以不能使用基于表空间的不完全恢复。

恢复计划:

需要挖掘出drop表的时间点/scn 这个是最主要的,然后使用昨天的备份进行不完全恢复,然后将表的数据使用数据泵将其导出,再使用申请停机时的全备进行一次完全恢复,使用数据泵工具将表的数据导入。

模拟环境:

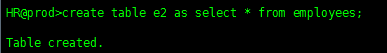

测试表:

昨日的备份

将该表purge掉:

![]()

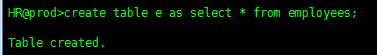

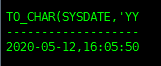

做一些操作,用于测试,最终恢复成功后,数据无丢失。

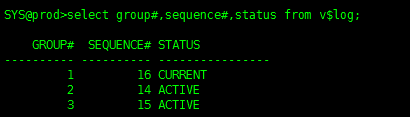

查看当前时间

select to_char(sysdate,‘yyyy-mm-dd,hh24:mm:ss’) from dual

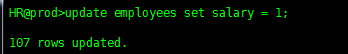

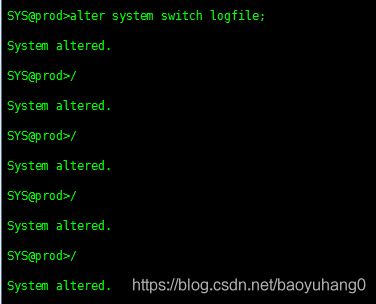

切换日志 模式数据库正在运行不断自动日志切换:

开始进行日志挖掘:

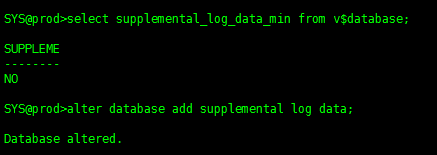

开启附加日志:

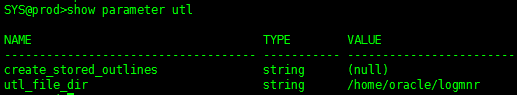

启动一个参数:utl

需要一个目录用于存放挖掘信息:

如果该参数为空,可以自己创建一个目录用于存放挖掘信息:

[oracle@service3 ~]$ mkdir /home/oracle/logmnr

SYS@prod>alter system set utl_file_dir=’/home/oracle/logmnr’ scope=spfile;

找出需要进行挖掘的日志:

时间为预计数据未丢失的时间

select name from v$ archived_log where first_time >=

(select max(first_time) from v$ archived_log

where first_time <= to_date(‘2020-05-12 16:00:00’,‘yyyy-mm-dd hh24:mi:ss’))

union all

select member from v$ logfile where group#=

(select group# from v$ log where archived=‘NO’) order by name

/

根据上面的sql语句进一步构建挖掘队列脚本:

log.sql:

select q’[exec dbms_logmnr.add_logfile(’]’ || name || q’[’,dbms_logmnr.addfile);]’ from v$ archived_log

where first_time >=

(select max(first_time) from v $archived_log where first_time <= to_date(‘2020-05-12 16:00:00’,‘yyyy-mm-dd hh24:mi:ss’))

union all

select q’[exec dbms_logmnr.add_logfile(’]’ ||member ||q’[’,dbms_logmnr.addfile);]’ from v $logfile

where group#=

(select group# from v $log where archived=‘NO’) order by 1

SYS@prod> set trim on

SYS@prod> set trims on

SYS@prod>set term off

SYS@prod>set heading off

SYS@prod>set feedback off

SYS@prod> set echo off

SYS@prod> set linesize 500

SYS@prod> set pagesize 0

SYS@prod> spool /home/oracle/logmnr.sql

SYS@prod> @log.sql

SYS@prod> spool off

SYS@prod>quit

logmnr.sql:

exec dbms_logmnr.add_logfile(’/u01/app/oracle/fast_recovery_area/PROD/archivelog/2020_05_11/o1_mf_1_28_hclqm925_.arc’,dbms_logmnr.new);

exec dbms_logmnr.add_logfile(’/u01/app/oracle/fast_recovery_area/PROD/archivelog/2020_05_12/o1_mf_1_10_hcnrltwy_.arc’,dbms_logmnr.addfile);

exec dbms_logmnr.add_logfile(’/u01/app/oracle/fast_recovery_area/PROD/archivelog/2020_05_12/o1_mf_1_11_hcnrq41v_.arc’,dbms_logmnr.addfile);

exec dbms_logmnr.add_logfile(’/u01/app/oracle/fast_recovery_area/PROD/archivelog/2020_05_12/o1_mf_1_12_hcnrq9xq_.arc’,dbms_logmnr.addfile);

exec dbms_logmnr.add_logfile(’/u01/app/oracle/fast_recovery_area/PROD/archivelog/2020_05_12/o1_mf_1_13_hcnrqg6h_.arc’,dbms_logmnr.addfile);

exec dbms_logmnr.add_logfile(’/u01/app/oracle/fast_recovery_area/PROD/archivelog/2020_05_12/o1_mf_1_14_hcns0g1y_.arc’,dbms_logmnr.addfile);

exec dbms_logmnr.add_logfile(’/u01/app/oracle/fast_recovery_area/PROD/archivelog/2020_05_12/o1_mf_1_15_hcns0k2x_.arc’,dbms_logmnr.addfile);

exec dbms_logmnr.add_logfile(’/u01/app/oracle/fast_recovery_area/PROD/archivelog/2020_05_12/o1_mf_1_16_hcns5w93_.arc’,dbms_logmnr.addfile);

exec dbms_logmnr.add_logfile(’/u01/app/oracle/fast_recovery_area/PROD/archivelog/2020_05_12/o1_mf_1_17_hcns627p_.arc’,dbms_logmnr.addfile);

exec dbms_logmnr.add_logfile(’/u01/app/oracle/fast_recovery_area/PROD/archivelog/2020_05_12/o1_mf_1_18_hcns656r_.arc’,dbms_logmnr.addfile);

exec dbms_logmnr.add_logfile(’/u01/app/oracle/fast_recovery_area/PROD/archivelog/2020_05_12/o1_mf_1_3_hcnrdtb1_.arc’,dbms_logmnr.addfile);

exec dbms_logmnr.add_logfile(’/u01/app/oracle/fast_recovery_area/PROD/archivelog/2020_05_12/o1_mf_1_4_hcnrdtv7_.arc’,dbms_logmnr.addfile);

exec dbms_logmnr.add_logfile(’/u01/app/oracle/fast_recovery_area/PROD/archivelog/2020_05_12/o1_mf_1_5_hcnrdxrh_.arc’,dbms_logmnr.addfile);

exec dbms_logmnr.add_logfile(’/u01/app/oracle/fast_recovery_area/PROD/archivelog/2020_05_12/o1_mf_1_6_hcnrdych_.arc’,dbms_logmnr.addfile);

exec dbms_logmnr.add_logfile(’/u01/app/oracle/fast_recovery_area/PROD/archivelog/2020_05_12/o1_mf_1_7_hcnrdywx_.arc’,dbms_logmnr.addfile);

exec dbms_logmnr.add_logfile(’/u01/app/oracle/fast_recovery_area/PROD/archivelog/2020_05_12/o1_mf_1_8_hcnrdzjp_.arc’,dbms_logmnr.addfile);

exec dbms_logmnr.add_logfile(’/u01/app/oracle/fast_recovery_area/PROD/archivelog/2020_05_12/o1_mf_1_9_hcnrf16k_.arc’,dbms_logmnr.addfile);

exec dbms_logmnr.add_logfile(’/u01/app/oracle/oradata/prod/redo01.log’,dbms_logmnr.addfile);

开始进行日志挖掘:

1.execute dbms_logmnr_d.build(‘dict.ora’,’/home/oracle/logmnr’,dbms_logmnr_d.store_in_flat_file);

2.SYS@prod>@logmnr.sql

3.execute dbms_logmnr.start_logmnr(dictfilename=>’/home/oracle/logmnr/dict.ora’,options=>dbms_logmnr.ddl_dict_tracking);

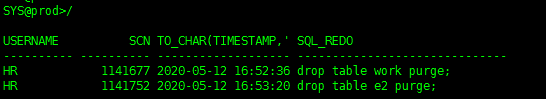

4.Select username,scn,to_char(timestamp,’yyyy-mm-dd hh24:mi:ss’),sql_redo from v$logmnr_contents where lower(sql_redo) like ‘drop table%’

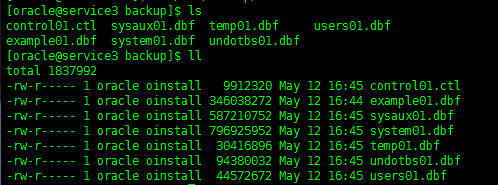

一致性关库进行一次数据库全备,包括控制文件:

SYS@prod>shutdown immediate;

Database closed.

Database dismounted.

ORACLE instance shut down.

[oracle@service3 new_backup]$ ls

control01.ctl example01.dbf redo01.log redo02.log redo03.log sysaux01.dbf system01.dbf temp01.dbf undotbs01.dbf users01.dbf

使用昨天备份进行不完全恢复到指定SCN:

[oracle@service3 prod]$ cp /u01/backup/* .

SYS@prod>startup;

ORACLE instance started.

Total System Global Area 835104768 bytes

Fixed Size 2257840 bytes

Variable Size 490736720 bytes

Database Buffers 335544320 bytes

Redo Buffers 6565888 bytes

Database mounted.

ORA-01113: file 1 needs media recovery

ORA-01110: data file 1: ‘/u01/app/oracle/oradata/prod/system01.dbf’

SYS@prod>select checkpoint_change# from v$datafile;

CHECKPOINT_CHANGE#

------------------

1154677

1154677

1154677

1154677

1154677

SYS@prod>select checkpoint_change# from v$datafile_header;

CHECKPOINT_CHANGE#

------------------

1140670

1140670

1140670

1140670

1140670

开始执行不完全恢复到指定SCN

SYS@prod>recover database until change 1141677;

ORA-00279: change 1140670 generated at 05/12/2020 16:43:22 needed for thread 1

ORA-00289: suggestion :

/u01/app/oracle/fast_recovery_area/PROD/archivelog/2020_05_12/o1_mf_1_10_hcnrltw

y_.arc

ORA-00280: change 1140670 for thread 1 is in sequence #10

Specify log: {=suggested | filename | AUTO | CANCEL}

auto

ORA-00279: change 1140958 generated at 05/12/2020 16:46:18 needed for thread 1

ORA-00289: suggestion :

/u01/app/oracle/fast_recovery_area/PROD/archivelog/2020_05_12/o1_mf_1_11_hcnrq41

v_.arc

ORA-00280: change 1140958 for thread 1 is in sequence #11

ORA-00279: change 1141209 generated at 05/12/2020 16:48:36 needed for thread 1

ORA-00289: suggestion :

/u01/app/oracle/fast_recovery_area/PROD/archivelog/2020_05_12/o1_mf_1_12_hcnrq9x

q_.arc

ORA-00280: change 1141209 for thread 1 is in sequence #12

ORA-00279: change 1141415 generated at 05/12/2020 16:48:41 needed for thread 1

ORA-00289: suggestion :

/u01/app/oracle/fast_recovery_area/PROD/archivelog/2020_05_12/o1_mf_1_13_hcnrqg6

h_.arc

ORA-00280: change 1141415 for thread 1 is in sequence #13

ORA-00279: change 1141548 generated at 05/12/2020 16:48:46 needed for thread 1

ORA-00289: suggestion :

/u01/app/oracle/fast_recovery_area/PROD/archivelog/2020_05_12/o1_mf_1_14_hcns0g1

y_.arc

ORA-00280: change 1141548 for thread 1 is in sequence #14

Log applied.

Media recovery complete.

SYS@prod>select checkpoint_change# from v$datafile;

CHECKPOINT_CHANGE#

------------------

1154677

1154677

1154677

1154677

1154677

SYS@prod>select checkpoint_change# from v$datafile_header;

CHECKPOINT_CHANGE#

------------------

1141677

1141677

1141677

1141677

1141677

SYS@prod>alter database open resetlogs;

Database altered.

SYS@prod>conn hr/hr

Connected.

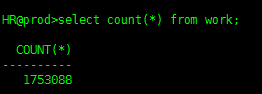

HR@prod>select count(*) from work;

COUNT(*)

----------

1753088

准备进行数据泵导出:

[oracle@service3 ~]$ mkdir pumpdir

SYS@prod>create directory pump_dir as ‘/home/oracle/pumpdir’;

Directory created.

SYS@prod>grant read,write on directory pump_dir to hr;

[oracle@service3 ~]$ expdp hr/hr directory=pump_dir dumpfile=work.dmp tables=work;

Dump file set for HR.SYS_EXPORT_TABLE_01 is:

/home/oracle/pumpdir/work.dmp

Job “HR”.“SYS_EXPORT_TABLE_01” successfully completed at Tue May 12 17:35:44 2020 elapsed 0 00:00:07

[oracle@service3 ~]$ cd pumpdir/

[oracle@service3 pumpdir]$ ls

export.log work.dmp

一致性关库:

SYS@prod>shutdown immediate;

Database closed.

Database dismounted.

ORACLE instance shut down.

进行完全恢复:

将数据文件,控制文件,日志文件拷贝回原位置。

数据文件

[oracle@service3 prod]$ cp /u01/new_backup/*.dbf .

控制文件

[oracle@service3 prod]$ cp /u01/new_backup/control01.ctl .

[oracle@service3prod]$cp/u01/new_backup/control01.ctl /u01/app/oracle/fast_recovery_area/prod/control02.ctl

日志文件

[oracle@service3 prod]$ cp /u01/new_backup/*.log .

SYS@prod>startup mount;

SYS@prod>select checkpoint_change# from v$datafile;

CHECKPOINT_CHANGE#

------------------

1154677

1154677

1154677

1154677

1154677

SYS@prod>select checkpoint_change# from v$datafile_header;

CHECKPOINT_CHANGE#

------------------

1154677

1154677

1154677

1154677

1154677

SYS@prod>alter database open;

Database altered.

如果不备份日志文件的话,也可以选择不恢复日志文件

执行该命令:

Alter database clear logfile group 1

Alter database clear logfile group 2

Alter database clear logfile group 3

校验数据是否丢失:

HR@prod>select count(*) from e2;

COUNT(*)

----------

107

HR@prod>select count(*) from e;

COUNT(*)

----------

107

HR@prod>select salary from employees rownum < 2;

SALARY

----------

1

创建数据泵目录对象:

SYS@prod>create directory pump_dir as ‘/home/oracle/pumpdir’;

SYS@prod>grant read,write on directory pump_dir to hr;

[oracle@service3 prod]$ impdp hr/hr directory=pump_dir dumpfile=work.dmp;

Import: Release 11.2.0.4.0 - Production on Tue May 12 17:53:25 2020

Copyright © 1982, 2011, Oracle and/or its affiliates. All rights reserved.

Connected to: Oracle Database 11g Enterprise Edition Release 11.2.0.4.0 - 64bit Production

With the Partitioning, OLAP, Data Mining and Real Application Testing options

Master table “HR”.“SYS_IMPORT_FULL_01” successfully loaded/unloaded

Starting “HR”.“SYS_IMPORT_FULL_01”: hr/******** directory=pump_dir dumpfile=work.dmp

Processing object type TABLE_EXPORT/TABLE/TABLE

Processing object type TABLE_EXPORT/TABLE/TABLE_DATA

. . imported “HR”.“WORK” 120.5 MB 1753088 rows

Job “HR”.“SYS_IMPORT_FULL_01” successfully completed at Tue May 12 17:53:44 2020 elapsed 0 00:00:16

SYS@prod>select count(*) from hr.work;

COUNT(*)

----------

1753088

恢复完毕,进行一次全备。