OpenTSDB: HTTP API and Java Development

HTTP API

OpenTSDB does not ship a Java SDK, so a Java application has to call it over HTTP. This section first introduces some features of the HTTP API:

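Writing data

Write characteristics

- To save bandwidth and improve throughput, a single request can write a batch of points that belong to different time series;

- Unrelated points are processed independently, so a point that fails to store does not prevent the others from being saved;

- The more points a request carries, the slower the response. When the HTTP request body exceeds a certain size, it can be sent with chunked transfer. Setting tsd.http.request.enable_chunked to true (the default is false) makes OpenTSDB accept HTTP Chunked Transfer Encoding.

- The tsd.mode setting affects writes: ro means read-only and rw means read-write. If writes keep failing, check this setting.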

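Optional parameters for write requests

- /api/put: URL parameters control how much detail the response contains.

- Data is inserted with a POST request whose body is JSON, for example:

    {
      "metric": "self.test",
      "timestamp": 1456123787,
      "value": 20,
      "tags": { "host": "web1" }
    }

- /api/put?summary: returns a summary of the write (the number of failed and successful points):

    { "failed": 0, "success": 1 }

- /api/put?details: returns detailed information about the write:

    { "errors": [], "failed": 0, "success": 1 }

- /api/put?sync: the write is synchronous, that is, the response is sent only after every point has been written (successfully or not). Writes are asynchronous by default.

- /api/put?sync_timeout: the timeout for a synchronous write, in milliseconds.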
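As a rough illustration of the request described above, the following sketch assembles the URL flags and the JSON body for one data point. `PutRequestSketch` and its method names are hypothetical, and the code assumes that metric names, tag keys and tag values contain no characters that need JSON escaping.

```java
import java.util.Map;
import java.util.StringJoiner;

/** Hypothetical helper: builds the URL and JSON body for one /api/put request. */
final class PutRequestSketch {

    /** Appends the optional flags to the /api/put path. */
    static String putUrl(String baseUrl, boolean details, boolean sync, long syncTimeoutMs) {
        StringJoiner flags = new StringJoiner("&");
        flags.add(details ? "details" : "summary");
        if (sync) {
            // sync_timeout only makes sense together with sync
            flags.add("sync");
            flags.add("sync_timeout=" + syncTimeoutMs);
        }
        return baseUrl + "/api/put?" + flags;
    }

    /** Serializes one data point; assumes no value needs JSON escaping. */
    static String dataPoint(String metric, long timestamp, long value, Map<String, String> tags) {
        StringJoiner tagJson = new StringJoiner(",", "{", "}");
        for (Map.Entry<String, String> e : tags.entrySet()) {
            tagJson.add("\"" + e.getKey() + "\":\"" + e.getValue() + "\"");
        }
        return "{\"metric\":\"" + metric + "\",\"timestamp\":" + timestamp
                + ",\"value\":" + value + ",\"tags\":" + tagJson + "}";
    }
}
```

The resulting string would be sent as the body of a POST request; several points can be wrapped in a JSON array to write them in one batch.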

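Querying data

- /api/query: accepts both GET and POST; POST is recommended.

- Request body (JSON):

    {
      "start": 1456123705,         // start time of the query
      "end": 1456124985,           // end time of the query
      "globalAnnotations": false,  // whether to return global annotations
      "noAnnotations": false,      // whether to omit annotations from the result
      "msResolution": false,       // whether points are returned with millisecond precision; if false,
                                   // points within the same second are aggregated with the aggregator
                                   // into the final value for that second
      "showTSUIDs": true,          // whether the result carries the tsuids
      "showQuery": true,           // whether the result echoes the matching sub-query
      "showSummary": false,        // whether the result carries summary timing information for the query
      "showStats": false,          // whether the result carries detailed timing information for the query
      "delete": false,             // caution: if true, every point that matches this query is deleted
      "queries": [                 // sub-queries: an array of mutually independent sub-queries
      ]
    }

- Metric Query sub-query: specifies the full metric, tags and aggregation information.

- metric and tags are the filter conditions of the query.

    {
      "metric": "JVM_Heap_Memory_Usage_MB",  // metric to query
      "aggregator": "sum",                   // aggregation function
      "downsample": "30s-avg",               // downsampling interval and function
      "tags": {                              // tag combination; deprecated since OpenTSDB 2.2,
                                             // use the filters field below instead
        "host": "server01"
      },
      "filters": [],                         // tag filters, covered in the Filtering section
      "explicitTags": false,                 // whether to return only series whose tag keys are exactly those named in the filters
      "rate": false,                         // whether to convert the result to a rate
      "rateOptions": {}                      // rate parameters, covered in the Rate Conversion section
    }

- TSUIDS Query sub-query (can be seen as an optimization of the Metric Query): specifies one or more tsuids instead of a metric and tags.

    {
      "aggregator": "sum",  // aggregation function
      "tsuids": [           // the tsuids to query; a tsuid can be thought of as the id of a time series
        "123",
        "456"
      ]
    }

- The response is also JSON:

    [
      {
        "metric": "self.test",
        "tags": {},
        "aggregateTags": [ "host" ],
        "dps": { "1456123785": 10, "1456123786": 10 }
      },
      {
        "metric": "self.test",
        "tags": { "host": "web1" },
        "aggregateTags": [],
        "dps": { "1456123784": 10, "1456123786": 15 }
      }
    ]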

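Timestamp

OpenTSDB queries accept two kinds of time:

- Absolute time: specifies the exact start and end of the query, in the format yyyy/MM/dd-HH:mm:ss

- Relative time: specifies the query range relative to now, in the format (amount)(time unit)-ago, for example 3h-ago (the query ends at the current time and starts 3 hours earlier).

  - amount: the length of the time span

  - time unit: the unit of the time span, one of ms (milliseconds), s (seconds), m (minutes), h (hours), d (days, 24 hours), w (weeks, 7 days), n (months, 30 days), y (years, 365 days)

  - -ago: a fixed suffix

Time series are stored with at most millisecond precision. The number of bytes used by the timestamp differs between millisecond and second precision:

- Millisecond precision: the timestamp in the HBase RowKey takes 6 bytes

- Second precision: the timestamp in the RowKey takes 4 bytes

Note: even when data is stored with millisecond precision, a query returns second-level data by default (the points within each second are aggregated with the function specified in the query to form the final result). Set the msResolution parameter to get millisecond-level data back.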
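To make the relative time format concrete, here is a minimal sketch that parses a string such as 3h-ago into a duration, using the unit table above. `RelativeTimeSketch` is a hypothetical helper written for this article, not part of OpenTSDB.

```java
import java.time.Duration;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

/** Hypothetical helper that turns a relative time such as "3h-ago" into a Duration. */
final class RelativeTimeSketch {

    private static final Pattern RELATIVE = Pattern.compile("(\\d+)(ms|s|m|h|d|w|n|y)-ago");

    static Duration parse(String text) {
        Matcher m = RELATIVE.matcher(text);
        if (!m.matches()) {
            throw new IllegalArgumentException("not a relative time: " + text);
        }
        long amount = Long.parseLong(m.group(1));
        switch (m.group(2)) {
            case "ms": return Duration.ofMillis(amount);
            case "s":  return Duration.ofSeconds(amount);
            case "m":  return Duration.ofMinutes(amount);
            case "h":  return Duration.ofHours(amount);
            case "d":  return Duration.ofDays(amount);
            case "w":  return Duration.ofDays(7 * amount);
            case "n":  return Duration.ofDays(30 * amount);   // a month counts as 30 days
            default:   return Duration.ofDays(365 * amount);  // "y": a year counts as 365 days
        }
    }

    /** Start of the query window in epoch seconds, given the current time in epoch seconds. */
    static long startEpochSeconds(String text, long nowEpochSeconds) {
        return nowEpochSeconds - parse(text).getSeconds();
    }
}
```

For example, with the current time at 1456124985, `startEpochSeconds("3h-ago", 1456124985L)` yields a start that lies 10800 seconds earlier.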

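Filtering

Filters in detail:

- A filter is similar to the WHERE clause of a SQL statement and is mainly used to filter on tagv;

- When a sub-query contains several filters, they are combined with AND;

- When several filters apply to the same tag, the results are grouped by that tag as soon as one of the filters enables grouping.

The format of a filter:

    {
      "type": "wildcard",  // filter type: one of OpenTSDB's built-in filters, or a custom
                           // filter type added through a plugin
      "tagk": "host",      // the tag key being filtered
      "filter": "*",       // filter expression applied to the tag value; each filter type supports its own expression syntax
      "groupBy": true      // whether to group the filtered results (group by); defaults to false,
                           // in which case the results are aggregated into a single time series
    }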

Some useful built-in filter types:

- literal_or and iliteral_or:

  - accept a single string, or several strings joined with "|";

  - equivalent to the SQL clause "WHERE host IN ('server01', 'server02', 'server03')";

  - iliteral_or is the case-insensitive version of literal_or and is used the same way;

  - usage:

    { "type": "literal_or", "tagk": "host", "filter": "server01|server02|server03", "groupBy": false }

- not_literal_or and not_iliteral_or:

  - the opposite of literal_or, used the same way;

  - equivalent to the SQL clause "WHERE host NOT IN ('server01', 'server02')";

  - not_iliteral_or is the case-insensitive version of not_literal_or and is used the same way;

  - usage:

    { "type": "not_literal_or", "tagk": "host", "filter": "server01|server02", "groupBy": false }

- wildcard and iwildcard:

  - a wildcard filter matches on a prefix, infix or suffix;

  - the "*" wildcard matches any characters; an expression may contain several of them, but must contain at least one;

  - iwildcard is the case-insensitive version of wildcard and is used the same way;

  - usage:

    { "type": "wildcard", "tagk": "host", "filter": "server*", "groupBy": false }

- regexp:

  - a regexp filter matches tag values against a regular expression;

  - equivalent to the SQL clause "WHERE host REGEXP '.*'".

    { "type": "regexp", "tagk": "host", "filter": ".*", "groupBy": false }

- not_key:

  - a not_key filter excludes series by tag key;

  - the example below skips every series that has the tag key host. Note that the filter expression must be empty.

    { "type": "not_key", "tagk": "host", "filter": "", "groupBy": false }
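The matching rules of the literal and wildcard filter types can be mimicked in a few lines of Java. This is only an illustration of the semantics described above under a hypothetical class name `TagFilterSketch`; it is not OpenTSDB's own filter code.

```java
import java.util.Arrays;
import java.util.Locale;
import java.util.regex.Pattern;

/** Illustrates the matching rules of a few built-in filter types; not OpenTSDB's own code. */
final class TagFilterSketch {

    static boolean matches(String type, String filter, String tagValue) {
        switch (type) {
            case "literal_or":
                // "a|b|c" behaves like SQL IN ('a', 'b', 'c')
                return Arrays.asList(filter.split("\\|")).contains(tagValue);
            case "iliteral_or":
                return matches("literal_or", filter.toLowerCase(Locale.ROOT),
                        tagValue.toLowerCase(Locale.ROOT));
            case "not_literal_or":
                return !matches("literal_or", filter, tagValue);
            case "wildcard":
                return wildcardToRegex(filter).matcher(tagValue).matches();
            default:
                throw new IllegalArgumentException("unsupported filter type: " + type);
        }
    }

    /** Quotes every literal part and lets each "*" match any run of characters. */
    private static Pattern wildcardToRegex(String filter) {
        String[] parts = filter.split("\\*", -1);
        StringBuilder regex = new StringBuilder();
        for (int i = 0; i < parts.length; i++) {
            if (i > 0) {
                regex.append(".*");
            }
            regex.append(Pattern.quote(parts[i]));
        }
        return Pattern.compile(regex.toString());
    }
}
```

With this sketch, `matches("wildcard", "server*", "server01")` is true while `matches("literal_or", "server01|server02", "Server01")` is false, because only the i-prefixed variants ignore case.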

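Aggregation

The aggregator combines the query results: values that share the same Unix timestamp are aggregated into one returned value. For example, if no tags are given and timestamp 1456123705 has two points, one with host=web1 and one with host=web2, both with value 10, the value returned for that timestamp is their sum, 20.

- Conditional filtering

Filters can be applied to tags. The following query returns the data whose host tag value starts with web:

    "queries": [
      {
        "aggregator": "sum",
        "metric": "self.test",
        "filters": [
          { "type": "wildcard", "tagk": "host", "filter": "web*" }
        ]
      }
    ]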

OpenTSDB 中提供了 interpolation 来解决聚合时数据缺失的补全问题,目前支持如下四种类型:

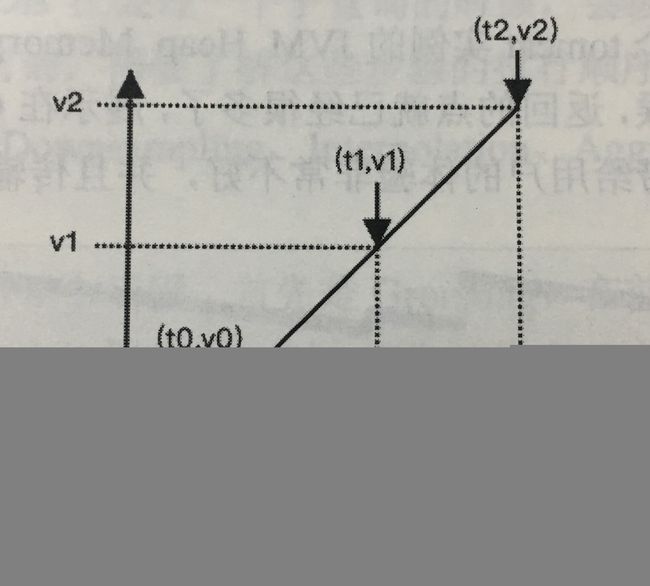

- LERP(Linear Interpolation):根据丢失点的前后两个点估计该点的值。

- 例如,时间戳 t1 处的点丢失,则使用 t0 和 t2 两个点的值(其前后两个点)估计 t1 的值,公式是: v 1 = v 0 + ( v 2 − v 0 ) ∗ ( ( t 1 − t 0 ) / ( t 2 − t 0 ) ) v1=v0+(v2-v0)*((t1-t0)/(t2-t0)) v1=v0+(v2−v0)∗((t1−t0)/(t2−t0));这里假设 t0、t1、t2 的时间间隔为 5s,v0 和 v2 分别是 10 和 20,则 v1 的估计值为 15。

- ZIM(Zero if missing):如果存在丢失点,则使用 0 进行替换。

- MAX:如果存在丢失点,则使用其类型的最大值替换。

- MIN:如果存在丢失点,则使用其类型的最小值替换。

- 例如,时间戳 t1 处的点丢失,则使用 t0 和 t2 两个点的值(其前后两个点)估计 t1 的值,公式是: v 1 = v 0 + ( v 2 − v 0 ) ∗ ( ( t 1 − t 0 ) / ( t 2 − t 0 ) ) v1=v0+(v2-v0)*((t1-t0)/(t2-t0)) v1=v0+(v2−v0)∗((t1−t0)/(t2−t0));这里假设 t0、t1、t2 的时间间隔为 5s,v0 和 v2 分别是 10 和 20,则 v1 的估计值为 15。
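The LERP formula translates directly into code. The sketch below uses a hypothetical class `LerpSketch` and reproduces the worked example with points 5 s apart.

```java
/** Linear interpolation of a missing point, following the LERP formula. */
final class LerpSketch {

    /** Estimates the value at t1 from the neighbouring points (t0, v0) and (t2, v2). */
    static double lerp(long t0, double v0, long t2, double v2, long t1) {
        if (t2 == t0) {
            throw new IllegalArgumentException("t0 and t2 must differ");
        }
        return v0 + (v2 - v0) * ((double) (t1 - t0) / (t2 - t0));
    }
}
```

Calling `LerpSketch.lerp(0, 10, 10, 20, 5)` gives 15.0, matching the example in the text.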

Some commonly used aggregator functions and the interpolation type each one uses:

| Aggregator | Description | Interpolation |
|---|---|---|
| avg | Average of the values | Linear Interpolation |
| count | Number of points | ZIM |
| dev | Standard deviation | Linear Interpolation |
| min | Minimum value | Linear Interpolation |
| max | Maximum value | Linear Interpolation |
| sum | Sum of the values | Linear Interpolation |
| zimsum | Sum of the values | ZIM |
| p99 | 99th percentile | Linear Interpolation |
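The difference between sum and zimsum lies only in how a missing point is treated. A zimsum-style aggregation is the simpler of the two, because a series without a point at some timestamp just contributes zero. The sketch below shows that behaviour with a hypothetical class `ZimSumSketch`; it is an illustration rather than OpenTSDB's implementation.

```java
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

/** Sketch of a zimsum-style aggregation: sum per timestamp, missing points count as 0. */
final class ZimSumSketch {

    /** Each input map is one time series: timestamp (seconds) -> value. */
    static TreeMap<Long, Double> zimsum(List<Map<Long, Double>> seriesList) {
        TreeMap<Long, Double> result = new TreeMap<>();
        for (Map<Long, Double> series : seriesList) {
            for (Map.Entry<Long, Double> point : series.entrySet()) {
                // A series without a point at this timestamp contributes nothing, i.e. zero.
                result.merge(point.getKey(), point.getValue(), Double::sum);
            }
        }
        return result;
    }
}
```

Two series for host=web1 and host=web2 that both hold the value 10 at timestamp 1456123705 aggregate to 20 at that timestamp; a timestamp present in only one of them keeps that series' value.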

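downsample (downsampling)

Put simply, downsampling aggregates the data within each fixed time interval before returning it. For example, to return one averaged value for every 5 minutes, downsample with 5m-avg. The 5m-avg parameter has two parts:

- the first part is the sampling interval, here one sample every 5 minutes;

- the second part is the aggregation function applied within the interval, here avg (average).

Together they mean that every 5 minutes forms one sampling interval and the average of each interval is returned as one point, so the points in the result are 5 minutes apart.

    "queries": [
      {
        "aggregator": "sum",
        "metric": "self.test",
        "downsample": "5m-avg",
        "tags": {
          "host": "web1"
        }
      }
    ]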
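What a 5m-avg downsample does to a single series can be sketched as follows. The class `DownsampleSketch` is hypothetical, and the code assumes that intervals are aligned by rounding each timestamp down to a multiple of the interval length.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

/** Sketch of avg downsampling: groups points into fixed intervals and averages each one. */
final class DownsampleSketch {

    /**
     * @param series          timestamp (seconds) -> value
     * @param intervalSeconds length of one sampling interval, e.g. 300 for "5m"
     * @return interval start (seconds) -> average of the points inside that interval
     */
    static TreeMap<Long, Double> downsampleAvg(Map<Long, Double> series, long intervalSeconds) {
        TreeMap<Long, List<Double>> buckets = new TreeMap<>();
        for (Map.Entry<Long, Double> point : series.entrySet()) {
            // Assumption: align the timestamp down to the start of its interval.
            long bucketStart = point.getKey() - (point.getKey() % intervalSeconds);
            buckets.computeIfAbsent(bucketStart, k -> new ArrayList<>()).add(point.getValue());
        }
        TreeMap<Long, Double> result = new TreeMap<>();
        for (Map.Entry<Long, List<Double>> bucket : buckets.entrySet()) {
            double sum = 0;
            for (double v : bucket.getValue()) {
                sum += v;
            }
            result.put(bucket.getKey(), sum / bucket.getValue().size());
        }
        return result;
    }
}
```

An interval that contains no points produces no entry at all, which corresponds to the default "none" fill policy.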

Missing data also affects the result of downsampling, so OpenTSDB provides fill policies for it:

- None (none): the default policy. A missing interval in the downsampling result is left alone and is filled later, during aggregation, by the aggregator's interpolation.

- NaN (nan): a missing interval is filled with NaN, and that point is skipped during aggregation.

- Null (null): similar to NaN.

- Zero (zero): a missing interval is filled with 0.

Order of execution

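Rate Conversion (rate of change)

Two fields of a sub-query relate to rate conversion:

- rate: whether to perform rate conversion; true or false.

- rateOptions: parameters of the rate conversion. The field is a map with the following entries:

  - counter: whether the series is a monotonically increasing value that may roll over.

  - counterMax: the maximum value of the counter.

  - resetValue: when the computed rate exceeds this value, 0 is returned instead; this mainly guards against abnormal spikes.

  - dropResets: whether to drop points that exceed resetValue or at which a rollover occurred.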
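How counter, counterMax and resetValue interact is easiest to see in code. The following is a sketch of the idea under a hypothetical class name `RateSketch`, not OpenTSDB's implementation, and it leaves out dropResets.

```java
/** Sketch of how the rateOptions fields interact; an illustration, not OpenTSDB's code. */
final class RateSketch {

    /**
     * Rate of change per second between two consecutive points (t0, v0) and (t1, v1).
     *
     * @param counter    true if the series is a monotonically increasing counter
     * @param counterMax value at which the counter rolls over
     * @param resetValue rates above this value are reported as 0; 0 disables the check
     */
    static double rate(long t0, long v0, long t1, long v1,
                       boolean counter, long counterMax, long resetValue) {
        if (t1 <= t0) {
            throw new IllegalArgumentException("t1 must be later than t0");
        }
        long delta = v1 - v0;
        if (counter && delta < 0) {
            // The counter rolled over: count up to counterMax, then from 0 up to v1.
            delta = counterMax - v0 + v1;
        }
        double rate = (double) delta / (t1 - t0);
        if (counter && resetValue > 0 && rate > resetValue) {
            // An implausibly large rate is treated as a reset and reported as 0.
            return 0.0;
        }
        return rate;
    }
}
```

For a 16-bit counter (counterMax 65535) that goes from 65500 to 65 within 10 seconds, the sketch computes a delta of 100 and therefore a rate of 10 per second instead of a large negative value.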

Other endpoints

- /api/suggest: returns the metrics, tagk or tagv that start with a given prefix; it is mainly used to drive auto-completion in a UI. The request looks like this:

    {
      "type": "metrics",  // type of string to look up: metrics, tagk or tagv
      "q": "sys",         // string prefix
      "max": 10           // maximum number of strings to return
    }

- /api/query/exp: supports expression queries.

- /api/query/gexp: mainly exists to ease migration from Graphite to OpenTSDB.

- /api/query/last: for scenarios that only need the most recent point of a time series.

- /api/uid/assign: assigns UIDs to metric, tagk and tagv names.

- /api/uid/tsmeta: supports querying, editing and deleting TSMeta metadata.

- /api/uid/uidmeta: supports editing and deleting UIDMeta metadata.

- /api/annotation: supports adding, editing and deleting annotations.

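Calling from Java

OpenTSDB can be read and written over telnet and over HTTP (GET and POST), but there is no official Java API. The opentsdb-client project on GitHub made it possible for me to wrap OpenTSDB's read and write operations, which I share here: https://github.com/shifeng258/opentsdb-client

You can either build a wrapper on top of that project or package it as an SDK. I took the first approach.

A unified entry class, OpentsdbClient, is added: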

import com.alibaba.fastjson.JSONArray;

import com.alibaba.fastjson.JSONObject;

import com.ygsoft.opentsdb.client.ExpectResponse;

import com.ygsoft.opentsdb.client.HttpClient;

import com.ygsoft.opentsdb.client.HttpClientImpl;

import com.ygsoft.opentsdb.client.builder.MetricBuilder;

import com.ygsoft.opentsdb.client.request.Query;

import com.ygsoft.opentsdb.client.request.QueryBuilder;

import com.ygsoft.opentsdb.client.request.SubQueries;

import com.ygsoft.opentsdb.client.response.Response;

import com.ygsoft.opentsdb.client.response.SimpleHttpResponse;

import org.slf4j.Logger;

import org.slf4j.LoggerFactory;

import java.io.IOException;

import java.util.*;

/**
 * Utility class for reading from and writing to OpenTSDB.
 */
public class OpentsdbClient {

    private static final Logger log = LoggerFactory.getLogger(OpentsdbClient.class);

    /** Aggregator that takes the average. */
    public static final String AGGREGATOR_AVG = "avg";

    /** Aggregator that takes the sum. */
    public static final String AGGREGATOR_SUM = "sum";

    private final HttpClient httpClient;

    public OpentsdbClient(String opentsdbUrl) {
        this.httpClient = new HttpClientImpl(opentsdbUrl);
    }

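    /**
     * Writes one data point.
     *
     * @param metric    metric name
     * @param timestamp time of the data point
     * @param value     value of the data point
     * @param tagMap    tags of the data point
     * @return true if the write succeeded
     * @throws Exception if the request fails
     */
    public boolean putData(String metric, Date timestamp, long value, Map<String, String> tagMap) throws Exception {
        // OpenTSDB expects seconds here, Date holds milliseconds.
        long timeSecs = timestamp.getTime() / 1000;
        return this.putData(metric, timeSecs, value, tagMap);
    }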

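    /**
     * Writes one data point with a floating-point value.
     *
     * @param metric    metric name
     * @param timestamp time of the data point
     * @param value     value of the data point
     * @param tagMap    tags of the data point
     * @return true if the write succeeded
     * @throws Exception if the request fails
     */
    public boolean putData(String metric, Date timestamp, double value, Map<String, String> tagMap) throws Exception {
        // OpenTSDB expects seconds here, Date holds milliseconds.
        long timeSecs = timestamp.getTime() / 1000;
        return this.putData(metric, timeSecs, value, tagMap);
    }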

    /**
     * Writes one data point.
     *
     * @param metric    metric name
     * @param timestamp time of the data point in epoch seconds
     * @param value     value of the data point
     * @param tagMap    tags of the data point
     * @return true if the write succeeded
     * @throws Exception if the request fails
     */
    public boolean putData(String metric, long timestamp, long value, Map<String, String> tagMap) throws Exception {
        MetricBuilder builder = MetricBuilder.getInstance();
        builder.addMetric(metric).setDataPoint(timestamp, value).addTags(tagMap);
        try {
            log.debug("write request: {}", builder.build());
            Response response = httpClient.pushMetrics(builder, ExpectResponse.SUMMARY);
            log.debug("response.statusCode: {}", response.getStatusCode());
            return response.isSuccess();
        } catch (Exception e) {
            log.error("put data to opentsdb error: ", e);
            throw e;
        }
    }

    /**
     * Writes one data point with a floating-point value.
     *
     * @param metric    metric name
     * @param timestamp time of the data point in epoch seconds
     * @param value     value of the data point
     * @param tagMap    tags of the data point
     * @return true if the write succeeded
     * @throws Exception if the request fails
     */
    public boolean putData(String metric, long timestamp, double value, Map<String, String> tagMap) throws Exception {
        MetricBuilder builder = MetricBuilder.getInstance();
        builder.addMetric(metric).setDataPoint(timestamp, value).addTags(tagMap);
        try {
            log.debug("write request: {}", builder.build());
            Response response = httpClient.pushMetrics(builder, ExpectResponse.SUMMARY);
            log.debug("response.statusCode: {}", response.getStatusCode());
            return response.isSuccess();
        } catch (Exception e) {
            log.error("put data to opentsdb error: ", e);
            throw e;
        }
    }

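    /**
     * Writes a batch of data points.
     *
     * @param jsonArr array of data points; each element has the fields metric, timestamp
     *                (formatted as yyyy/MM/dd HH:mm:ss), value and tags
     * @return true if the write succeeded
     * @throws Exception if the request fails
     */
    public boolean putData(JSONArray jsonArr) throws Exception {
        return this.putDataBatch(jsonArr);
    }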

    /**
     * Writes a batch of data points in a single request.
     *
     * @param jsonArr array of data points; each element has the fields metric, timestamp
     *                (formatted as yyyy/MM/dd HH:mm:ss), value and tags
     * @return true if the write succeeded
     * @throws Exception if the request fails
     */
    public boolean putDataBatch(JSONArray jsonArr) throws Exception {
        MetricBuilder builder = MetricBuilder.getInstance();
        try {
            for (int i = 0; i < jsonArr.size(); i++) {
                JSONObject point = jsonArr.getJSONObject(i);
                JSONObject tags = point.getJSONObject("tags");
                Map<String, String> tagMap = new HashMap<>();
                for (String key : tags.keySet()) {
                    tagMap.put(key, tags.getString(key));
                }
                String metric = point.getString("metric");
                // The timestamp arrives as a formatted string and is converted to epoch seconds.
                long timestamp = DateTimeUtil.parse(point.getString("timestamp"), "yyyy/MM/dd HH:mm:ss").getTime() / 1000;
                double value = Double.parseDouble(point.getString("value"));
                builder.addMetric(metric).setDataPoint(timestamp, value).addTags(tagMap);
            }
            log.debug("write request: {}", builder.build());
            Response response = httpClient.pushMetrics(builder, ExpectResponse.SUMMARY);
            log.debug("response.statusCode: {}", response.getStatusCode());
            return response.isSuccess();
        } catch (Exception e) {
            log.error("put data to opentsdb error: ", e);
            throw e;
        }
    }

    /**
     * Queries data and returns the raw JSON response, which is structured like this:
     * <pre>
     * [
     *   {
     *     "metric": "mysql.innodb.row_lock_time",
     *     "tags": { "host": "web01", "dc": "beijing" },
     *     "aggregateTags": [],
     *     "dps": { "1435716527": 1234, "1435716529": 2345 }
     *   },
     *   {
     *     "metric": "mysql.innodb.row_lock_time",
     *     "tags": { "host": "web02", "dc": "beijing" },
     *     "aggregateTags": [],
     *     "dps": { "1435716627": 3456 }
     *   }
     * ]
     * </pre>
     *
     * @param metric     metric to query
     * @param tagMap     tags to filter on
     * @param aggregator aggregation type, e.g. OpentsdbClient.AGGREGATOR_AVG or OpentsdbClient.AGGREGATOR_SUM
     * @param downsample downsampling interval, e.g. 1s, 2m, 1h, 1d, 2d
     * @param startTime  start of the query, formatted as yyyy/MM/dd HH:mm:ss
     * @param endTime    end of the query, formatted as yyyy/MM/dd HH:mm:ss
     * @return the response body, or null if the request was not successful
     * @throws IOException if the request fails
     */
    public String getData(String metric, Map<String, String> tagMap, String aggregator, String downsample, String startTime, String endTime) throws IOException {
        QueryBuilder queryBuilder = QueryBuilder.getInstance();
        Query query = queryBuilder.getQuery();
        query.setStart(DateTimeUtil.parse(startTime, "yyyy/MM/dd HH:mm:ss").getTime() / 1000);
        query.setEnd(DateTimeUtil.parse(endTime, "yyyy/MM/dd HH:mm:ss").getTime() / 1000);
        List<SubQueries> sqList = new ArrayList<>();
        SubQueries sq = new SubQueries();
        sq.addMetric(metric);
        sq.addTag(tagMap);
        sq.addAggregator(aggregator);
        // The downsample spec is "<interval>-<function>", for example "5m-avg".
        sq.setDownsample(downsample + "-" + aggregator);
        sqList.add(sq);
        query.setQueries(sqList);
        try {
            log.debug("query request: {}", queryBuilder.build()); // build() also validates the query
            SimpleHttpResponse spHttpResponse = httpClient.pushQueries(queryBuilder, ExpectResponse.DETAIL);
            log.debug("response.content: {}", spHttpResponse.getContent());
            if (spHttpResponse.isSuccess()) {
                return spHttpResponse.getContent();
            }
            return null;
        } catch (IOException e) {
            log.error("get data from opentsdb error: ", e);
            throw e;
        }
    }

    /**
     * Queries data and returns a mapping from tags to time series values:
     * {@code Map<tags, Map<time, value>>}.
     *
     * @param metric     metric to query
     * @param tagMap     tags to filter on
     * @param aggregator aggregation type, e.g. OpentsdbClient.AGGREGATOR_AVG or OpentsdbClient.AGGREGATOR_SUM
     * @param downsample downsampling interval, e.g. 1s, 2m, 1h, 1d, 2d
     * @param startTime  start of the query, formatted as yyyy/MM/dd HH:mm:ss
     * @param endTime    end of the query, formatted as yyyy/MM/dd HH:mm:ss
     * @param retTimeFmt format of the time keys in the result, e.g. yyyy/MM/dd HH:mm:ss or yyyyMMddHH
     * @return {@code Map<tags, Map<time, value>>}
     * @throws IOException if the request fails
     */
    public Map<String, Map<String, Object>> getData(String metric, Map<String, String> tagMap, String aggregator, String downsample, String startTime, String endTime, String retTimeFmt) throws IOException {
        String resContent = this.getData(metric, tagMap, aggregator, downsample, startTime, endTime);
        return this.convertContentToMap(resContent, retTimeFmt);
    }

    /** Converts the raw JSON response into a map from tags to (formatted time -> value). */
    public Map<String, Map<String, Object>> convertContentToMap(String resContent, String retTimeFmt) {
        Map<String, Map<String, Object>> tagsValuesMap = new HashMap<>();
        if (resContent == null || "".equals(resContent.trim())) {
            return tagsValuesMap;
        }
        JSONArray array = (JSONArray) JSONObject.parse(resContent);
        if (array != null) {
            for (int i = 0; i < array.size(); i++) {
                JSONObject obj = (JSONObject) array.get(i);
                JSONObject tags = (JSONObject) obj.get("tags");
                JSONObject dps = (JSONObject) obj.get("dps");
                Map<String, Object> timeValueMap = new HashMap<>();
                for (String timestamp : dps.keySet()) {
                    // The keys of dps are epoch seconds; Date expects milliseconds.
                    Date datetime = new Date(Long.parseLong(timestamp) * 1000);
                    timeValueMap.put(DateTimeUtil.format(datetime, retTimeFmt), dps.get(timestamp));
                }
                tagsValuesMap.put(tags.toString(), timeValueMap);
            }
        }
        return tagsValuesMap;
    }

}

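A date/time helper class is added:

import java.text.ParseException;
import java.text.SimpleDateFormat;
import java.util.Date;

public class DateTimeUtil {

    /** Parses a date string with the given pattern. */
    public static Date parse(String date, String fm) {
        try {
            // SimpleDateFormat is not thread-safe, so a new instance is created per call.
            return new SimpleDateFormat(fm).parse(date);
        } catch (ParseException e) {
            // Fail fast: callers invoke getTime() on the result, so returning null
            // would only postpone the failure to a NullPointerException.
            throw new IllegalArgumentException("Cannot parse '" + date + "' with pattern " + fm, e);
        }
    }

    /** Formats a date with the given pattern; used by OpentsdbClient.convertContentToMap. */
    public static String format(Date date, String fm) {
        return new SimpleDateFormat(fm).format(date);
    }
}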
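As an alternative to SimpleDateFormat, the same conversions can be written with java.time, whose formatters are immutable and can be shared between threads. This is a minimal sketch with a hypothetical class name `EpochSecondsSketch`; it takes the time zone as an explicit parameter, whereas the helper class uses the JVM default zone.

```java
import java.time.Instant;
import java.time.LocalDateTime;
import java.time.ZoneId;
import java.time.format.DateTimeFormatter;

/** Hypothetical java.time variant of the date helper. */
final class EpochSecondsSketch {

    // DateTimeFormatter is immutable and thread-safe, so one shared instance is enough.
    private static final DateTimeFormatter FORMAT =
            DateTimeFormatter.ofPattern("yyyy/MM/dd HH:mm:ss");

    /** Converts a formatted local time in the given zone to epoch seconds. */
    static long toEpochSeconds(String text, ZoneId zone) {
        return LocalDateTime.parse(text, FORMAT).atZone(zone).toEpochSecond();
    }

    /** Converts epoch seconds back to a formatted local time in the given zone. */
    static String fromEpochSeconds(long epochSeconds, ZoneId zone) {
        return Instant.ofEpochSecond(epochSeconds).atZone(zone).format(FORMAT);
    }
}
```

Passing `ZoneId.systemDefault()` reproduces the behaviour of the SimpleDateFormat version; a fixed zone such as UTC makes the result independent of the machine the code runs on.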

To keep HTTP connections alive, PoolingHttpClient is turned into a singleton, so that callers do not pay the cost of instantiating it over and over:

public class PoolingHttpClient {

...

/* singleton changes: start */

// volatile is required for double-checked locking to be safe
private static volatile PoolingHttpClient poolingHttpClient;

private PoolingHttpClient() {

// Increase max total connection

connManager.setMaxTotal(maxTotalConnections);

// Increase default max connection per route

connManager.setDefaultMaxPerRoute(maxConnectionsPerRoute);

// config timeout

RequestConfig config = RequestConfig.custom()

.setConnectTimeout(connectTimeout)

.setConnectionRequestTimeout(waitTimeout)

.setSocketTimeout(readTimeout).build();

httpClient = HttpClients.custom()

.setKeepAliveStrategy(keepAliveStrategy)

.setConnectionManager(connManager)

.setDefaultRequestConfig(config).build();

// detect idle and expired connections and close them

IdleConnectionMonitorThread staleMonitor = new IdleConnectionMonitorThread(

connManager);

staleMonitor.start();

}

public static PoolingHttpClient getInstance() {

if (null == poolingHttpClient) {

// lock so that the instance is created only once across threads

synchronized (PoolingHttpClient.class) {

if (null == poolingHttpClient) {

poolingHttpClient = new PoolingHttpClient();

}

}

}

return poolingHttpClient;

}

/* singleton changes: end */

public SimpleHttpResponse doPost(String url, String data)

throws IOException {

StringEntity requestEntity = new StringEntity(data);

HttpPost postMethod = new HttpPost(url);

postMethod.setEntity(requestEntity);

HttpResponse response = execute(postMethod);

int statusCode = response.getStatusLine().getStatusCode();

SimpleHttpResponse simpleResponse = new SimpleHttpResponse();

simpleResponse.setStatusCode(statusCode);

HttpEntity entity = response.getEntity();

if (entity != null) {

// should return: application/json; charset=UTF-8

String ctype = entity.getContentType().getValue();

String charset = getResponseCharset(ctype);

String content = EntityUtils.toString(entity, charset);

simpleResponse.setContent(content);

}

/* added: release the connection back to the pool - start */

EntityUtils.consume(entity);

postMethod.releaseConnection();

/* added: release the connection back to the pool - end */

return simpleResponse;

}

...

}
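Double-checked locking is one way to build a lazy singleton. Another common option, shown here only as a sketch with a hypothetical class name, is the initialization-on-demand holder idiom, which gets its thread safety from JVM class initialization and needs neither volatile nor synchronized.

```java
/** Sketch of the initialization-on-demand holder idiom with a hypothetical class name. */
final class LazySingletonSketch {

    private LazySingletonSketch() {
        // expensive setup (for example a connection pool) would go here
    }

    // The JVM initializes Holder, and with it INSTANCE, only on the first getInstance() call,
    // and class initialization is guaranteed to be thread-safe.
    private static final class Holder {
        static final LazySingletonSketch INSTANCE = new LazySingletonSketch();
    }

    static LazySingletonSketch getInstance() {
        return Holder.INSTANCE;
    }
}
```

Either approach gives every caller the same instance; the holder idiom is simply harder to get wrong.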

HttpClientImpl is changed to use the singleton PoolingHttpClient:

public class HttpClientImpl implements HttpClient {

...

//private PoolingHttpClient httpClient = new PoolingHttpClient();

private PoolingHttpClient httpClient = PoolingHttpClient.getInstance();

...

}