Web Crawler Study Notes 16: The scrapy_splash Component

1. Understanding the scrapy_splash component

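- It is somewhat similar to Selenium: it simulates a browser loading JavaScript and returns the data as it looks after the JS has run.

- It is used as a helper component for pages that must be loaded and rendered before their content appears. The response you get through scrapy-splash is equivalent to the page source after the browser has fully rendered it.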

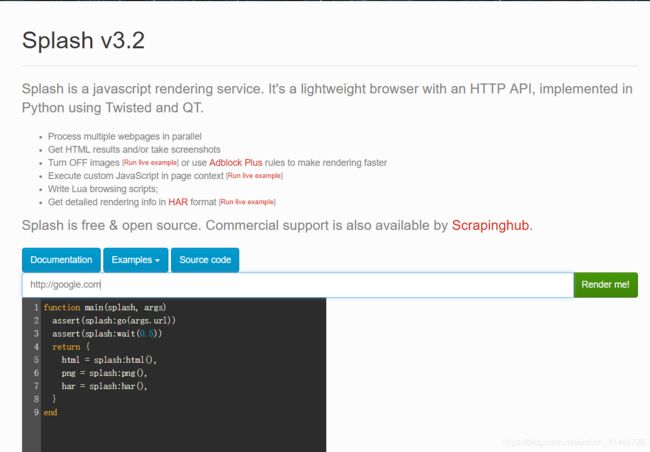

- Splash official documentation
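Splash itself is an HTTP rendering service. Its `render.html` endpoint takes the target page as a `url` parameter and returns the HTML after JavaScript has run. Its `wait` parameter sets how many seconds to wait after the page loads. Below is a minimal stdlib sketch of calling it directly, without Scrapy. It assumes Splash is listening on `http://127.0.0.1:8050`, the address used in the settings later in these notes. `build_render_url` and `fetch_rendered_html` are hypothetical helper names, and the `wait` value of 0.5 is only an example:

```python
from urllib.parse import urlencode
from urllib.request import urlopen


def build_render_url(target_url, splash_url='http://127.0.0.1:8050', wait=0.5):
    """Build a GET URL for Splash's render.html endpoint (hypothetical helper)."""
    # urlencode percent-escapes the target URL so its own '?' and '&' survive
    query = urlencode({'url': target_url, 'wait': wait})
    return f"{splash_url.rstrip('/')}/render.html?{query}"


def fetch_rendered_html(target_url, splash_url='http://127.0.0.1:8050', wait=0.5, timeout=60):
    """Ask a running Splash instance to render the page and return the HTML text."""
    with urlopen(build_render_url(target_url, splash_url, wait), timeout=timeout) as resp:
        return resp.read().decode('utf-8', errors='replace')
```

For example, `build_render_url('https://www.baidu.com/s?wd=scrapy')` can be pasted into a browser once the Splash container is running. `fetch_rendered_html` needs a live Splash instance.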

2. Setting up the scrapy_splash environment

(1) Install the scrapy-splash package in your Python virtual environment: pip install scrapy-splash
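You can confirm that the package landed in the right virtual environment by querying its installed version with the standard library. The sketch below must be run with the virtual environment's own interpreter, and `installed_version` is a hypothetical helper:

```python
from importlib import metadata


def installed_version(dist_name):
    """Return the installed version of a distribution, or None if it is not installed."""
    try:
        return metadata.version(dist_name)
    except metadata.PackageNotFoundError:
        return None
```

Calling `installed_version('scrapy-splash')` should return a version string after `pip install scrapy-splash`. A `None` result suggests pip installed into a different interpreter.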

(2) Use the Splash Docker image

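- Splash's Dockerfile

- Install and start the Docker service: installation reference

- Pull the Splash image: sudo docker pull scrapinghub/splash

- Verify the installation: run the Splash Docker service, then open port 8050 in a browser (e.g. http://localhost:8050) to check that it works

① Run in the foreground: sudo docker run -p 8050:8050 scrapinghub/splash

② Run in the background: sudo docker run -d -p 8050:8050 scrapinghub/splash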
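The browser check can also be scripted, for example before launching a crawl. The sketch below assumes the `/_ping` endpoint described in the Splash HTTP API docs, which returns JSON with a `"status"` field. `splash_is_up` is a hypothetical helper:

```python
import json
from http.client import HTTPException
from urllib.request import urlopen


def splash_is_up(splash_url='http://127.0.0.1:8050', timeout=3.0):
    """Return True if Splash's /_ping endpoint reports status "ok", else False."""
    try:
        with urlopen(f"{splash_url.rstrip('/')}/_ping", timeout=timeout) as resp:
            data = json.loads(resp.read().decode('utf-8'))
    except (OSError, ValueError, HTTPException):
        # OSError covers URLError, connection refused and timeouts;
        # ValueError covers a reply that is not valid UTF-8 JSON
        return False
    return isinstance(data, dict) and data.get('status') == 'ok'
```

If it returns `False` right after `docker run`, give the container a few seconds to start. Then check with `sudo docker ps` that it is actually running.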

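Fixing timeouts when pulling the image: switch Docker to a registry mirror.

- Create and edit Docker's configuration file:

sudo vi /etc/docker/daemon.json

- Add the configuration for the docker-cn.com mirror (hosted in mainland China), then save and exit:

{ "registry-mirrors": ["https://registry.docker-cn.com"] }

- Restart the computer or the Docker service, then pull the Splash image again

- If it is still slow, try switching to a mobile hotspot (at the cost of your data plan, orz)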

- Shutting down the Splash service: stop the container first, then remove it

sudo docker ps -a

sudo docker stop CONTAINER_ID

sudo docker rm CONTAINER_ID
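Pasting the mirror JSON into /etc/docker/daemon.json with vi works when the file is new. If the file already holds other settings, the `registry-mirrors` key has to be merged in rather than overwriting the whole file. Below is a minimal stdlib sketch of that merge. `add_registry_mirror` is a hypothetical helper, and writing the real file requires root (e.g. running the script with `sudo python3`):

```python
import json
from pathlib import Path


def add_registry_mirror(config_path, mirror):
    """Add `mirror` to "registry-mirrors" in a daemon.json file, keeping all other keys."""
    path = Path(config_path)
    text = path.read_text(encoding='utf-8') if path.exists() else ''
    config = json.loads(text) if text.strip() else {}
    mirrors = config.setdefault('registry-mirrors', [])
    if mirror not in mirrors:  # avoid duplicate entries on repeated runs
        mirrors.append(mirror)
    path.write_text(json.dumps(config, indent=2) + '\n', encoding='utf-8')
    return config
```

After it runs, restart Docker (for example `sudo systemctl restart docker` on systemd-based Linux) so the daemon picks up the new mirror.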

3. Using Splash in Scrapy: how it differs from a regular spider

(1) Add the Splash configuration to settings.py and stop obeying robots.txt

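```python
# URL of the Splash rendering service
SPLASH_URL = 'http://127.0.0.1:8050'

# Downloader middlewares
DOWNLOADER_MIDDLEWARES = {
    'scrapy_splash.SplashCookiesMiddleware': 723,
    'scrapy_splash.SplashMiddleware': 725,
    'scrapy.downloadermiddlewares.httpcompression.HttpCompressionMiddleware': 810,
}

# Spider middleware recommended by the scrapy-splash README:
# avoids storing the same Splash arguments repeatedly in disk request queues
SPIDER_MIDDLEWARES = {
    'scrapy_splash.SplashDeduplicateArgsMiddleware': 100,
}

# Splash-aware duplicate request filter
DUPEFILTER_CLASS = 'scrapy_splash.SplashAwareDupeFilter'

# Splash-aware HTTP cache storage
HTTPCACHE_STORAGE = 'scrapy_splash.SplashAwareFSCacheStorage'

# Do not obey robots.txt rules (new Scrapy projects set this to True)
ROBOTSTXT_OBEY = False
```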
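The numbers in `DOWNLOADER_MIDDLEWARES` are ordering priorities. Scrapy calls `process_request` from the lowest number up, and calls `process_response` in the reverse order. The sketch below only sorts the dictionary to show the resulting order. The two built-in entries, `CookiesMiddleware` at 700 and `HttpProxyMiddleware` at 750, are assumed to be Scrapy's default priorities and are included only for context:

```python
# Priorities from the settings above, plus two Scrapy built-ins whose
# default priorities (700 and 750) are assumed here for illustration.
MIDDLEWARES = {
    'scrapy.downloadermiddlewares.cookies.CookiesMiddleware': 700,
    'scrapy_splash.SplashCookiesMiddleware': 723,
    'scrapy_splash.SplashMiddleware': 725,
    'scrapy.downloadermiddlewares.httpproxy.HttpProxyMiddleware': 750,
    'scrapy.downloadermiddlewares.httpcompression.HttpCompressionMiddleware': 810,
}


def request_order(middlewares):
    """Order in which process_request runs: lowest priority number first."""
    ranked = sorted(middlewares.items(), key=lambda item: item[1])
    return [path.rsplit('.', 1)[-1] for path, _ in ranked]


def response_order(middlewares):
    """Order in which process_response runs: the reverse of request_order."""
    return list(reversed(request_order(middlewares)))
```

In the response order, `HttpCompressionMiddleware` now runs before `SplashMiddleware`. A compressed response is therefore already decompressed when the Splash middleware handles it. This is one plausible reading of why scrapy-splash asks for priority 810 instead of the default 590.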

(2) Differences in the spider file

① A regular spider file

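```python
import scrapy


class NoSplashSpider(scrapy.Spider):
    name = 'no_splash'
    allowed_domains = ['baidu.com']
    start_urls = ['https://www.baidu.com/s?wd=13161933309']

    def parse(self, response):
        # Save the raw HTML as returned by the server (no JavaScript executed).
        # response.text decodes the body with the encoding Scrapy detects.
        with open('no_splash.html', 'w', encoding='utf-8') as f:
            f.write(response.text)
```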

② A spider file that uses the scrapy_splash component

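```python
import scrapy
from scrapy_splash import SplashRequest  # Request class provided by the scrapy_splash package


class WithSplashSpider(scrapy.Spider):
    name = 'with_splash'
    allowed_domains = ['baidu.com']
    start_urls = ['https://www.baidu.com/s?wd=13161933309']

    def start_requests(self):
        yield SplashRequest(
            self.start_urls[0],
            callback=self.parse_splash,
            # 'wait': seconds Splash waits after the page loads before returning
            # the result, giving the page's JavaScript time to run (not a timeout)
            args={'wait': 10},
            # Splash HTTP API endpoint; render.html returns the rendered HTML
            endpoint='render.html',
        )

    def parse_splash(self, response):
        # Save the HTML as it looks after Splash has rendered the page
        with open('with_splash.html', 'w', encoding='utf-8') as f:
            f.write(response.text)
```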
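To see what Splash actually changed, run both spiders (`scrapy crawl no_splash` and `scrapy crawl with_splash`) and compare the two saved files. Below is a hedged stdlib sketch. `compare_pages` is a hypothetical helper, and counting the search term is just one simple heuristic:

```python
from pathlib import Path


def count_keyword(path, keyword):
    """Count occurrences of `keyword` in a saved HTML file."""
    return Path(path).read_text(encoding='utf-8', errors='replace').count(keyword)


def compare_pages(plain_path, rendered_path, keyword):
    """Return {label: (size in bytes, keyword count)} for both saved pages."""
    result = {}
    for label, path in (('no_splash', plain_path), ('with_splash', rendered_path)):
        result[label] = (Path(path).stat().st_size, count_keyword(path, keyword))
    return result
```

For example, call `compare_pages('no_splash.html', 'with_splash.html', '13161933309')`. If the result list is filled in by JavaScript, the rendered file should be larger and contain more matches. Identical numbers suggest that the page did not need rendering or that `wait` was too short.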