Automating keepalived deployment with SaltStack for highly available haproxy

Lab environment

Red Hat Enterprise Linux 6.5

iptables and SELinux disabled

| Hostname | Role | IP |
|---|---|---|
| server1 | master (server side) | 172.25.35.51 |
| server4 | master (server side) | 172.25.35.54 |
| server2 | minion (client side) | 172.25.35.52 |
| server3 | minion (client side) | 172.25.35.53 |
| VIP | virtual IP | 172.25.35.100 |

For the Salt configuration of the four hosts, see: https://blog.csdn.net/Ying_smile/article/details/81868956

1. Configure the yum repositories

server4:

[root@server4 ~]# cat /etc/yum.repos.d/rhel-source.repo

[rhel-source]
name=Red Hat Enterprise Linux $releasever - $basearch - Source
baseurl=http://172.25.35.250/rhel6.5
enabled=1
gpgcheck=1
gpgkey=file:///etc/pki/rpm-gpg/RPM-GPG-KEY-redhat-release

[salt]
name=saltstack
baseurl=http://172.25.35.250/rhel6
gpgcheck=0

[LoadBalancer]
name=LoadBalancer
baseurl=http://172.25.35.250/rhel6.5/LoadBalancer
gpgcheck=0
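The repository file is plain INI, so it can be sanity-checked before yum ever reads it. A minimal Python 3 sketch using the standard `configparser`, with the file content inlined (an assumption, so the example runs anywhere), lists each repo with its baseurl and whether GPG checking is on:

```python
import configparser

# Contents of /etc/yum.repos.d/rhel-source.repo on server4, inlined for the example.
REPO_TEXT = """
[rhel-source]
name=Red Hat Enterprise Linux $releasever - $basearch - Source
baseurl=http://172.25.35.250/rhel6.5
enabled=1
gpgcheck=1
gpgkey=file:///etc/pki/rpm-gpg/RPM-GPG-KEY-redhat-release

[salt]
name=saltstack
baseurl=http://172.25.35.250/rhel6
gpgcheck=0

[LoadBalancer]
name=LoadBalancer
baseurl=http://172.25.35.250/rhel6.5/LoadBalancer
gpgcheck=0
"""


def list_repos(text):
    """Return {repo_id: (baseurl, gpgcheck_enabled)} for every section."""
    # interpolation=None keeps values such as "$releasever" literal
    parser = configparser.ConfigParser(interpolation=None)
    parser.read_string(text)
    return {
        section: (parser[section]["baseurl"], parser[section].getboolean("gpgcheck"))
        for section in parser.sections()
    }


for repo_id, (url, gpg) in list_repos(REPO_TEXT).items():
    print(f"{repo_id:<12} gpgcheck={gpg!s:<5} {url}")
```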

2. Build keepalived from source

server1:

[root@server1 ~]# cd /srv/salt

[root@server1 salt]# mkdir keepalived

[root@server1 salt]# cd keepalived/

[root@server1 keepalived]# vim install.sls

include:
  - pkgs.make

kp-install:
  file.managed:
    - name: /mnt/keepalived-2.0.6.tar.gz
    - source: salt://keepalived/files/keepalived-2.0.6.tar.gz
  cmd.run:
    - name: cd /mnt && tar zxf keepalived-2.0.6.tar.gz && cd keepalived-2.0.6 && ./configure --prefix=/usr/local/keepalived --with-init=SYSV &> /dev/null && make &> /dev/null && make install &> /dev/null
    - creates: /usr/local/keepalived

[root@server1 keepalived]# cd files

[root@server1 files]# ls

keepalived-2.0.6.tar.gz

[root@server1 files]# salt server4 state.sls keepalived.install
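The `creates: /usr/local/keepalived` line is what keeps this state idempotent: once the install prefix exists, Salt skips the whole configure/make chain on later runs. The guard can be modelled in a few lines of Python 3; `run_unless_created` is a hypothetical helper, not Salt's code, and `mkdir` stands in for the real build so the sketch is safe to run:

```python
import os
import shlex
import subprocess
import tempfile


def run_unless_created(command, creates):
    """Run `command` through the shell unless the path `creates` already exists.

    Hypothetical helper modelling the `creates` guard of Salt's cmd.run.
    Returns "skipped" or the command's exit code.
    """
    if os.path.exists(creates):
        return "skipped"
    return subprocess.run(command, shell=True).returncode


with tempfile.TemporaryDirectory() as tmp:
    prefix = os.path.join(tmp, "keepalived")
    # `mkdir` stands in for the tar/configure/make/make install chain
    build = "mkdir " + shlex.quote(prefix)
    print(run_unless_created(build, prefix))  # 0: command ran
    print(run_unless_created(build, prefix))  # skipped: path already exists
```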

server4:

[root@server4 init.d]# scp /usr/local/keepalived/etc/rc.d/init.d/keepalived server1:/srv/salt/keepalived/files

[root@server4 init.d]# scp /usr/local/keepalived/etc/keepalived/keepalived.conf server1:/srv/salt/keepalived/files

3. Create the directory and symlinks

[root@server1 files]# cd ..

[root@server1 keepalived]# vim install.sls

include:
  - pkgs.make

kp-install:
  file.managed:
    - name: /mnt/keepalived-2.0.6.tar.gz
    - source: salt://keepalived/files/keepalived-2.0.6.tar.gz
  cmd.run:
    - name: cd /mnt && tar zxf keepalived-2.0.6.tar.gz && cd keepalived-2.0.6 && ./configure --prefix=/usr/local/keepalived --with-init=SYSV &> /dev/null && make &> /dev/null && make install &> /dev/null
    - creates: /usr/local/keepalived

/etc/keepalived:
  file.directory:
    - mode: 755

/etc/sysconfig/keepalived:
  file.symlink:
    - target: /usr/local/keepalived/etc/sysconfig/keepalived

/sbin/keepalived:
  file.symlink:
    - target: /usr/local/keepalived/sbin/keepalived

[root@server1 keepalived]# salt server4 state.sls keepalived.install
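Each `file.symlink` state makes the path in its ID a symbolic link to `target` and leaves it alone when it is already correct, which is why re-running the state reports no changes. A rough Python 3 model of that behaviour (simplified: no `force`/`backup` handling), using a temporary directory instead of `/sbin` and `/etc/sysconfig` so it can be tried safely:

```python
import os
import tempfile


def ensure_symlink(name, target):
    """Make `name` a symlink to `target`; return True if anything changed.

    Rough model of Salt's file.symlink without its force/backup options.
    """
    if os.path.islink(name):
        if os.readlink(name) == target:
            return False          # already correct: nothing to do
        os.remove(name)           # points elsewhere: replace the link
    elif os.path.exists(name):
        raise FileExistsError(name + " exists and is not a symlink")
    os.symlink(target, name)
    return True


with tempfile.TemporaryDirectory() as root:
    # temporary stand-ins for /usr/local/keepalived/sbin/keepalived and /sbin/keepalived
    target = os.path.join(root, "keepalived-real")
    open(target, "w").close()
    link = os.path.join(root, "keepalived")
    print(ensure_symlink(link, target))  # True: link created
    print(ensure_symlink(link, target))  # False: already in place
```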

4. Push to multiple nodes

[root@server1 keepalived]# vim service.sls

include:
  - keepalived.install

/etc/keepalived/keepalived.conf:
  file.managed:
    - source: salt://keepalived/files/keepalived.conf
    - template: jinja
    - context:
        STATE: {{ pillar['state'] }}
        VRID: {{ pillar['vrid'] }}
        PRIORITY: {{ pillar['priority'] }}

kp-service:
  file.managed:
    - name: /etc/init.d/keepalived
    - source: salt://keepalived/files/keepalived
    - mode: 755
  service.running:
    - name: keepalived
    - reload: True
    - watch:
      - file: /etc/keepalived/keepalived.conf
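The `watch` requisite is what makes repeated pushes cheap: `service.running` starts keepalived if it is stopped, and otherwise only acts when the watched `keepalived.conf` state reports changes; with `reload: True` that action is a reload rather than a restart. A miniature Python 3 model of that control flow (`manage_file`, `apply_state` and the `service` dict are hypothetical stand-ins for Salt's states):

```python
import os
import tempfile


def manage_file(path, content):
    """file.managed in miniature: write only if content differs; True if changed."""
    if os.path.exists(path):
        with open(path) as fh:
            if fh.read() == content:
                return False
    with open(path, "w") as fh:
        fh.write(content)
    return True


def apply_state(path, content, service):
    """file.managed + service.running(reload=True, watch=file); returns the action."""
    changed = manage_file(path, content)
    if not service["running"]:
        service["running"] = True
        return "start"            # service.running starts a stopped service
    if changed:
        return "reload"           # watch fired and reload is True
    return "no-op"                # nothing changed, nothing to do


with tempfile.TemporaryDirectory() as d:
    conf = os.path.join(d, "keepalived.conf")
    svc = {"running": False}
    actions = [apply_state(conf, "priority 100\n", svc),
               apply_state(conf, "priority 100\n", svc),
               apply_state(conf, "priority 101\n", svc)]
print(actions)  # ['start', 'no-op', 'reload']
```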

[root@server1 keepalived]# vim files/keepalived.conf

! Configuration File for keepalived

global_defs {
    notification_email {
        root@localhost
    }
    notification_email_from keepalived@localhost
    smtp_server 127.0.0.1
    smtp_connect_timeout 30
    router_id LVS_DEVEL
    vrrp_skip_check_adv_addr
    #vrrp_strict
    vrrp_garp_interval 0
    vrrp_gna_interval 0
}

vrrp_instance VI_1 {
    state {{ STATE }}
    interface eth0
    virtual_router_id {{ VRID }}
    priority {{ PRIORITY }}
    advert_int 1
    authentication {
        auth_type PASS
        auth_pass 1111
    }
    virtual_ipaddress {
        172.25.35.100
    }
}

[root@server1 keepalived]# cd ../../

[root@server1 srv]# cd pillar/

[root@server1 pillar]# mkdir keepalived

[root@server1 pillar]# cd keepalived/

[root@server1 keepalived]# vim install.sls

{% if grains['fqdn'] == 'server1' %}
state: MASTER
vrid: 69
priority: 100
{% elif grains['fqdn'] == 'server4' %}
state: BACKUP
vrid: 69
priority: 50
{% endif %}
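The pillar gives each load balancer its VRRP role: server1 is MASTER with priority 100, server4 is BACKUP with priority 50, and both use virtual router 69. The lookup and substitution can be sketched in Python 3; the `str.format` template below is a simplified stand-in for the Jinja rendering Salt actually performs, and `render_vrrp` is a hypothetical name:

```python
# Per-host values from /srv/pillar/keepalived/install.sls, keyed by the fqdn grain.
PILLAR = {
    "server1": {"state": "MASTER", "vrid": 69, "priority": 100},
    "server4": {"state": "BACKUP", "vrid": 69, "priority": 50},
}

# Simplified stand-in for the Jinja template salt://keepalived/files/keepalived.conf
TEMPLATE = """vrrp_instance VI_1 {{
    state {STATE}
    interface eth0
    virtual_router_id {VRID}
    priority {PRIORITY}
}}"""


def render_vrrp(fqdn):
    """Return the rendered vrrp_instance block for one minion."""
    pillar = PILLAR.get(fqdn)
    if pillar is None:
        # Same as the {% if %}/{% elif %} chain: other hosts get no keepalived pillar
        raise KeyError("no keepalived pillar for " + fqdn)
    return TEMPLATE.format(
        STATE=pillar["state"], VRID=pillar["vrid"], PRIORITY=pillar["priority"]
    )


print(render_vrrp("server4"))
```

A host outside the `if`/`elif` chain (server2, for example) gets no `state` key, so applying `keepalived.service` there would fail at render time; the top file only assigns it to server1 and server4.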

[root@server1 keepalived]# cd ../../salt

[root@server1 salt]# vim top.sls

base:
  'server1':
    - haproxy.install
    - keepalived.service
  'server4':
    - haproxy.install
    - keepalived.service
  'roles:apache':
    - match: grain
    - httpd.install
  'roles:nginx':
    - match: grain
    - nginx.service
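The top file maps targets to states: `'server1'` and `'server4'` are glob matches on the minion ID, while entries with `- match: grain` compare the `roles` grain with a value. A simplified Python 3 model of that matching (only the glob and grain matchers, no compound targeting); the grain values used below are assumptions based on the roles set up in the linked article:

```python
from fnmatch import fnmatch

# The base environment of /srv/salt/top.sls as (target, matcher, states).
TOP = [
    ("server1", "glob", ["haproxy.install", "keepalived.service"]),
    ("server4", "glob", ["haproxy.install", "keepalived.service"]),
    ("roles:apache", "grain", ["httpd.install"]),
    ("roles:nginx", "grain", ["nginx.service"]),
]


def matches(target, matcher, minion_id, grains):
    if matcher == "glob":
        return fnmatch(minion_id, target)
    # grain matcher "key:pattern"; a list-valued grain matches if any element does
    key, _, pattern = target.partition(":")
    value = grains.get(key)
    values = value if isinstance(value, list) else [value]
    return any(v is not None and fnmatch(str(v), pattern) for v in values)


def states_for(minion_id, grains):
    """States a highstate would apply to one minion (simplified)."""
    result = []
    for target, matcher, states in TOP:
        if matches(target, matcher, minion_id, grains):
            for s in states:
                if s not in result:
                    result.append(s)
    return result


# Assumed grains: roles were set on the web servers beforehand.
print(states_for("server2", {"roles": "apache"}))  # ['httpd.install']
```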

[root@server1 salt]# cd /srv/pillar/web/

[root@server1 web]# vim install.sls

{% if grains['fqdn'] == 'server2' %}
webserver: httpd
port: 80
{% elif grains['fqdn'] == 'server3' %}
webserver: nginx
{% endif %}

[root@server1 web]# vim ../../salt/httpd/files/httpd.conf

Listen {{ port }}

[root@server1 web]# salt '*' state.highstate

[root@server1 web]# ip addr

inet 172.25.35.100/32
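Checking for the VIP by eye works, but the check is easy to script. The Python 3 sketch below only parses text in the usual iproute2 `ip addr` format (lines like `inet 172.25.35.100/32 scope global eth0`); the sample text is illustrative, not captured from this lab:

```python
import ipaddress
import re

VIP = "172.25.35.100"

# "inet <address>/<prefix>" lines; "inet6" lines do not match because of \s+
INET_RE = re.compile(r"^\s*inet\s+(\d+\.\d+\.\d+\.\d+)/(\d+)", re.MULTILINE)


def has_vip(ip_addr_output, vip=VIP):
    """Return True if `vip` is among the IPv4 addresses in the output."""
    wanted = ipaddress.ip_address(vip)
    return any(
        ipaddress.ip_address(addr) == wanted
        for addr, _prefix in INET_RE.findall(ip_addr_output)
    )


sample = """2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500
    inet 172.25.35.51/24 brd 172.25.35.255 scope global eth0
    inet 172.25.35.100/32 scope global eth0
"""
print(has_vip(sample))  # True
```

On a load balancer the input can come from `subprocess.run(["ip", "addr"], stdout=subprocess.PIPE, universal_newlines=True).stdout`.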

Browser test:

High-availability test:

[root@server1 web]# /etc/init.d/keepalived stop    # stop keepalived on server1; the VIP moves to server4 automatically

[root@server4 init.d]# ip addr

inet 172.25.35.100/32

Browser: the site is still reachable.
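The failover follows VRRP's election rule: among the routers still advertising for virtual router 69, the highest priority holds the VIP, and on a tie the higher primary IP address wins. keepalived preempts by default (`nopreempt` is not set here), so server1 takes the VIP back when it returns. A deliberately simplified Python 3 model (no advertisement timers or preemption delay):

```python
import ipaddress

# Priorities from the keepalived pillar; addresses from the lab table.
NODES = {
    "server1": {"ip": "172.25.35.51", "priority": 100},
    "server4": {"ip": "172.25.35.54", "priority": 50},
}


def vip_holder(running):
    """Return the node owning the VIP, given the set of nodes running keepalived.

    Highest priority wins; on a tie VRRP prefers the higher primary IP.
    Returns None when no node is running (the VIP is down).
    """
    candidates = [name for name in NODES if name in running]
    if not candidates:
        return None
    return max(
        candidates,
        key=lambda n: (NODES[n]["priority"], ipaddress.ip_address(NODES[n]["ip"])),
    )


print(vip_holder({"server1", "server4"}))  # server1
print(vip_holder({"server4"}))             # server4 (keepalived stopped on server1)
```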

Haproxy health check:

[root@server1 ~]# cd /opt

[root@server1 opt]# vim check_haproxy.sh

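#!/bin/bash
# If haproxy is not running, try to restart it.
/etc/init.d/haproxy status &> /dev/null || /etc/init.d/haproxy restart &> /dev/null
# $? is 0 if haproxy was running or the restart succeeded.
# Otherwise stop keepalived so that the VIP fails over to the other load balancer.
if [ $? -ne 0 ];then
    /etc/init.d/keepalived stop &> /dev/null
fi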

[root@server1 opt]# chmod +x check_haproxy.sh

[root@server1 opt]# scp check_haproxy.sh server4:/opt/

[root@server1 opt]# cd /srv/salt/keepalived/files/

[root@server1 files]# vim keepalived.conf

! Configuration File for keepalived

vrrp_script check_haproxy {
    script "/opt/check_haproxy.sh"
    interval 2
    weight 2
}

global_defs {
    notification_email {
        root@localhost
    }
    notification_email_from keepalived@localhost
    smtp_server 127.0.0.1
    smtp_connect_timeout 30
    router_id LVS_DEVEL
    vrrp_skip_check_adv_addr
    #vrrp_strict
    vrrp_garp_interval 0
    vrrp_gna_interval 0
}

vrrp_instance VI_1 {
    state {{ STATE }}
    interface eth0
    virtual_router_id {{ VRID }}
    priority {{ PRIORITY }}
    advert_int 1
    authentication {
        auth_type PASS
        auth_pass 1111
    }
    virtual_ipaddress {
        172.25.35.100
    }
    track_script {
        check_haproxy
    }
}
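A note on `weight 2`: in keepalived, a positive `weight` is added to the instance priority while the tracked script succeeds, and a negative one is subtracted while it fails. With priorities 100 and 50, a failing script on server1 only drops it from 102 to 100, still above server4's 52, so the weight alone never causes failover; that is why `check_haproxy.sh` stops keepalived itself. The arithmetic as a small Python 3 check (the `-60` weight is a hypothetical alternative, not this lab's configuration):

```python
def effective_priority(base, weight, script_ok):
    """keepalived's priority adjustment for one tracked script."""
    if weight > 0 and script_ok:
        return base + weight      # positive weight: bonus while the script succeeds
    if weight < 0 and not script_ok:
        return base + weight      # negative weight: penalty while it fails
    return base


def master(server1_ok, weight, server4_ok=True):
    """Which node wins the election (ties go to server4, the higher IP)."""
    p1 = effective_priority(100, weight, server1_ok)
    p4 = effective_priority(50, weight, server4_ok)
    return "server1" if p1 > p4 else "server4"


print(master(server1_ok=False, weight=2))    # server1: 100 vs 52, no failover
print(master(server1_ok=False, weight=-60))  # server4: 40 vs 50 (hypothetical weight)
```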

[root@server1 files]# salt '*' state.highstate

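After haproxy is stopped, the script checks the haproxy process. If haproxy can be restarted, requests still go to this host and the VIP stays here. If haproxy cannot be restarted, the script stops keepalived, so requests go to the other load balancer and the VIP moves there.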
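The decision `check_haproxy.sh` makes on every 2-second run can be written as a small Python 3 function. The three callables are hypothetical stand-ins for `/etc/init.d/haproxy status`, `/etc/init.d/haproxy restart` and `/etc/init.d/keepalived stop`, so the logic can be exercised without touching real services:

```python
def check_haproxy(status, restart, stop_keepalived):
    """Mirror of check_haproxy.sh.

    status() and restart() return True when the init script exits with 0.
    If haproxy is down and cannot be restarted, keepalived is stopped so the
    VIP fails over to the peer. Returns a short description of the outcome.
    """
    if status():
        return "haproxy running"
    if restart():
        return "haproxy restarted, VIP stays"
    stop_keepalived()
    return "keepalived stopped, VIP moves to peer"


stopped = []
print(check_haproxy(lambda: False, lambda: False, lambda: stopped.append("keepalived")))
print(stopped)  # ['keepalived']
```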

Tests:

1. haproxy is stopped but can be restarted

[root@server1 files]# /etc/init.d/haproxy stop

[root@server1 files]# ip addr

inet 172.25.35.100/32

2. haproxy is stopped and cannot be restarted

server1:

[root@server1 files]# cd /etc/init.d/

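[root@server1 init.d]# /etc/init.d/haproxy stop    # stop haproxy

[root@server1 init.d]# chmod -x haproxy

Remove the execute permission from the haproxy init script before the next health check runs (every 2 seconds), so that haproxy cannot be restarted.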

server4:

[root@server4 ~]# ip addr    # the VIP has moved to server4

inet 172.25.35.100/32

server1:

[root@server1 init.d]# chmod +x haproxy    # restore the execute permission; once keepalived is started again, server1 (priority 100) takes the VIP back

[root@server1 init.d]# /etc/init.d/keepalived start

[root@server1 init.d]# ip addr

inet 172.25.35.100/32

Writing job returns to a database

[root@server1 ~]# yum install mysql-server -y

[root@server1 ~]# /etc/init.d/mysqld start

[root@server1 ~]# mysql

mysql> grant all on salt.* to salt@'172.25.35.%' identified by 'ZhanG@2424';

[root@server1 ~]# cat test.sql

CREATE DATABASE `salt`
  DEFAULT CHARACTER SET utf8
  DEFAULT COLLATE utf8_general_ci;

USE `salt`;

--
-- Table structure for table `jids`
--

DROP TABLE IF EXISTS `jids`;
CREATE TABLE `jids` (
  `jid` varchar(255) NOT NULL,
  `load` mediumtext NOT NULL,
  UNIQUE KEY `jid` (`jid`)
) ENGINE=InnoDB DEFAULT CHARSET=utf8;
#CREATE INDEX jid ON jids(jid) USING BTREE;

--
-- Table structure for table `salt_returns`
--

DROP TABLE IF EXISTS `salt_returns`;
CREATE TABLE `salt_returns` (
  `fun` varchar(50) NOT NULL,
  `jid` varchar(255) NOT NULL,
  `return` mediumtext NOT NULL,
  `id` varchar(255) NOT NULL,
  `success` varchar(10) NOT NULL,
  `full_ret` mediumtext NOT NULL,
  `alter_time` TIMESTAMP DEFAULT CURRENT_TIMESTAMP,
  KEY `id` (`id`),
  KEY `jid` (`jid`),
  KEY `fun` (`fun`)
) ENGINE=InnoDB DEFAULT CHARSET=utf8;

--
-- Table structure for table `salt_events`
--

DROP TABLE IF EXISTS `salt_events`;
CREATE TABLE `salt_events` (
  `id` BIGINT NOT NULL AUTO_INCREMENT,
  `tag` varchar(255) NOT NULL,
  `data` mediumtext NOT NULL,
  `alter_time` TIMESTAMP DEFAULT CURRENT_TIMESTAMP,
  `master_id` varchar(255) NOT NULL,
  PRIMARY KEY (`id`),
  KEY `tag` (`tag`)
) ENGINE=InnoDB DEFAULT CHARSET=utf8;

[root@server1 ~]# mysql < test.sql

[root@server1 ~]# mysql

mysql> show databases;

mysql> use salt;

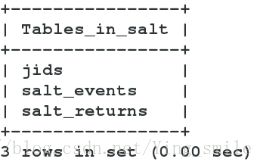

mysql> show tables;

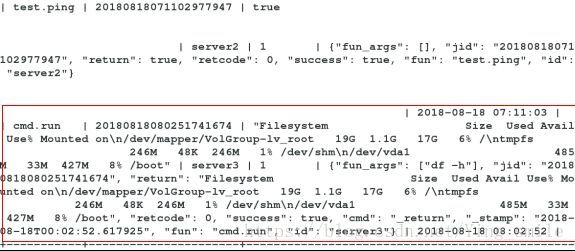

1. Writing from a minion on another host

server2:

[root@server2 ~]# cd /etc/salt/

[root@server2 salt]# yum install MySQL-python -y

[root@server2 salt]# vim minion

mysql.host: '172.25.35.51'
mysql.user: 'salt'
mysql.pass: 'ZhanG@2424'
mysql.db: 'salt'
mysql.port: 3306

[root@server2 salt]# /etc/init.d/salt-minion restart

server1:

[root@server1 ~]# salt 'server2' test.ping --return mysql

server2:

True

[root@server1 ~]# mysql

mysql> use salt;

mysql> select * from salt_returns;    # the return of the call has been written to the database
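Each `--return mysql` call adds one row to `salt_returns`. To see what such a row contains without a MySQL server, the sketch below recreates the table in an in-memory SQLite database (SQLite is swapped in purely so it runs standalone) and inserts a `test.ping` return shaped roughly like the mysql returner's insert: function, job ID, JSON return, minion ID, success flag and the full JSON return. The job ID is hypothetical, and the exact text stored in `success` depends on the real MySQL driver:

```python
import json
import sqlite3

# Columns of salt_returns from test.sql (alter_time is filled by the database).
SCHEMA = """
CREATE TABLE salt_returns (
    fun        varchar(50)  NOT NULL,
    jid        varchar(255) NOT NULL,
    "return"   mediumtext   NOT NULL,
    id         varchar(255) NOT NULL,
    success    varchar(10)  NOT NULL,
    full_ret   mediumtext   NOT NULL,
    alter_time TIMESTAMP DEFAULT CURRENT_TIMESTAMP
)
"""


def store_return(conn, ret):
    """Insert one job return, roughly as Salt's mysql returner does."""
    conn.execute(
        'INSERT INTO salt_returns (fun, jid, "return", id, success, full_ret) '
        "VALUES (?, ?, ?, ?, ?, ?)",
        (
            ret["fun"],
            ret["jid"],
            json.dumps(ret["return"]),
            ret["id"],
            str(ret.get("success", False)),  # text form depends on the real driver
            json.dumps(ret),
        ),
    )


conn = sqlite3.connect(":memory:")
conn.execute(SCHEMA)
store_return(conn, {"fun": "test.ping", "jid": "20180820000000000000",  # hypothetical jid
                    "return": True, "id": "server2", "success": True})
for row in conn.execute('SELECT fun, id, "return", success FROM salt_returns'):
    print(row)  # ('test.ping', 'server2', 'true', 'True')
```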

2. Writing from the local master

[root@server1 ~]# vim /etc/salt/master

master_job_cache: mysql
mysql.host: 'localhost'
mysql.user: 'salt'
mysql.pass: 'ZhanG@2424'
mysql.db: 'salt'
mysql.port: 3306

[root@server1 ~]# /etc/init.d/salt-master restart

[root@server1 ~]# mysql

mysql> grant all on salt.* to salt@localhost identified by 'ZhanG@2424';

mysql> flush privileges;

mysql> quit

[root@server1 salt]# yum install MySQL-python.x86_64 -y

[root@server1 ~]# mysql -h 172.25.35.51 -u salt -p

mysql> use salt;

mysql> select * from salt_returns;    # jobs run from the master itself now show up here

Syncing custom modules

[root@server1 salt]# mkdir /srv/salt/_modules;

[root@server1 salt]# cd /srv/salt/_modules/

[root@server1 _modules]# vim my_disk.py

#!/usr/bin/env python

def df():
    # __salt__ is injected by the Salt loader; cmd.run executes the command on the minion
    return __salt__['cmd.run']('df -h')

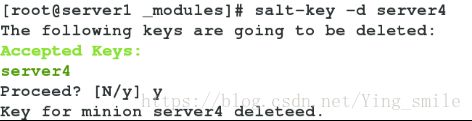
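Because the Salt loader injects `__salt__`, `my_disk.py` cannot simply be imported and called outside Salt. In Salt it is distributed with `salt '*' saltutil.sync_modules` and invoked as `salt '*' my_disk.df`. To exercise the function locally, a stand-in `__salt__` can be injected by hand, as in this Python 3 sketch; `fake_cmd_run` is a hypothetical helper that only approximates `cmd.run`:

```python
import subprocess
import types

# Source of /srv/salt/_modules/my_disk.py
MY_DISK_SRC = '''
def df():
    return __salt__['cmd.run']('df -h')
'''


def fake_cmd_run(cmd):
    """Rough stand-in for cmd.run: run in a shell, return stripped stdout."""
    out = subprocess.run(cmd, shell=True, stdout=subprocess.PIPE,
                         universal_newlines=True)
    return out.stdout.strip()


# Build the module and inject __salt__ the way the loader would (simplified).
my_disk = types.ModuleType("my_disk")
my_disk.__salt__ = {"cmd.run": fake_cmd_run}
exec(MY_DISK_SRC, my_disk.__dict__)

print(my_disk.df())
```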

Top-level master (syndic)

Remove server4 from server1's minions and configure server4 as the top-level master.

server4:

[root@server4 salt]# /etc/init.d/salt-minion stop

[root@server4 salt]# /etc/init.d/keepalived stop

[root@server4 salt]# /etc/init.d/haproxy stop

[root@server4 salt]# yum install -y salt-master

[root@server4 salt]# vim /etc/salt/master

order_masters: True

[root@server4 salt]# /etc/init.d/salt-master start

server1:

[root@server1 _modules]# yum install -y salt-syndic

[root@server1 _modules]# vim /etc/salt/master

syndic_master: 172.25.35.54

[root@server1 _modules]# /etc/init.d/salt-master stop

[root@server1 _modules]# /etc/init.d/salt-master start

[root@server1 _modules]# /etc/init.d/salt-syndic start
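With `order_masters: True` on server4 and `syndic_master` pointing at it from server1, a command published on server4 is relayed by server1's syndic to the minions of server1's master (the syndic's key also has to be accepted on server4 with `salt-key`, a step not shown above). A toy Python 3 model of that fan-out; the topology, including server1 running a minion of its own master, is an assumption about this lab:

```python
from fnmatch import fnmatch

# Assumed topology: syndics reporting to each top master, and the minions
# connected to each syndic's local master.
SYNDICS_OF = {"server4": ["server1"]}
MINIONS_OF = {"server1": ["server1", "server2", "server3"]}


def publish(top_master, target):
    """Minion IDs a `salt '<target>' ...` run on the top master would reach."""
    reached = []
    for syndic in SYNDICS_OF.get(top_master, []):
        # the syndic re-publishes the job on its local master ...
        for minion in MINIONS_OF.get(syndic, []):
            # ... and only minions whose ID matches the glob execute it
            if fnmatch(minion, target):
                reached.append(minion)
    return sorted(reached)


print(publish("server4", "*"))           # ['server1', 'server2', 'server3']
print(publish("server4", "server[23]"))  # ['server2', 'server3']
```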

Passwordless connection (salt-ssh)

[root@server1 _modules]# yum install salt-ssh -y

[root@server1 _modules]# vim /etc/salt/roster

server3:
  host: 172.25.35.53
  user: root
  passwd: westos

[root@server1 _modules]# cd /etc/salt

[root@server1 salt]# vim master

#master_job_cache: mysql
#mysql.host: 'localhost'
#mysql.user: 'salt'
#mysql.pass: 'ZhanG@2424'
#mysql.db: 'salt'
#mysql.port: 3306

salt-api authentication

[root@server1 ~]# yum install salt-api -y

[root@server1 ~]# cd /etc/pki/tls/private/

[root@server1 private]# openssl genrsa 1024 > localhost.key

Generating RSA private key, 1024 bit long modulus

...++++++

....++++++

e is 65537 (0x10001)

[root@server1 private]# cd ../certs/

[root@server1 certs]# make testcert

Country Name (2 letter code) [XX]:cn

State or Province Name (full name) []:shaanxi

Locality Name (eg, city) [Default City]:xi'an

Organization Name (eg, company) [Default Company Ltd]:westos

Organizational Unit Name (eg, section) []:linux

Common Name (eg, your name or your server's hostname) []:server1

Email Address []:root@localhost

[root@server1 certs]# cd /etc/salt/master.d/

[root@server1 master.d]# vim api.conf

rest_cherrypy:
  port: 8000
  ssl_crt: /etc/pki/tls/certs/localhost.crt
  ssl_key: /etc/pki/tls/private/localhost.key

[root@server1 master.d]# vim auth.conf

external_auth:
  pam:
    saltapi:
      - '.*'
      - '@wheel'
      - '@runner'
      - '@jobs'
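This `external_auth` block lets the PAM user `saltapi` run any execution function (`'.*'`) and use the wheel, runner and jobs interfaces (`'@wheel'`, `'@runner'`, `'@jobs'`). The Python 3 function below is a simplified model of that permission check, not Salt's implementation, which also supports per-target and per-argument restrictions:

```python
import re

# external_auth entry for the PAM user from /etc/salt/master.d/auth.conf
AUTH = {"saltapi": [".*", "@wheel", "@runner", "@jobs"]}


def allowed(user, kind, fun):
    """Return True if `user` may call `fun` (simplified model).

    kind is "local" for execution modules, or "wheel"/"runner"/"jobs"
    for the @-prefixed groups.
    """
    perms = AUTH.get(user, [])
    if kind == "local":
        # execution functions: each plain entry is a regex on the function name
        return any(re.match(p, fun) for p in perms if not p.startswith("@"))
    return "@" + kind in perms


print(allowed("saltapi", "local", "test.ping"))  # True
print(allowed("alice", "local", "test.ping"))    # False
```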

[root@server1 master.d]# useradd saltapi

[root@server1 master.d]# passwd saltapi

[root@server1 master.d]# /etc/init.d/salt-master stop

[root@server1 master.d]# /etc/init.d/salt-master start

[root@server1 master.d]# /etc/init.d/salt-api start

[root@server1 master.d]# netstat -antlp | grep 8000

tcp 0 0 0.0.0.0:8000 0.0.0.0:* LISTEN 11702/salt-api -d

tcp 0 0 127.0.0.1:47365 127.0.0.1:8000 TIME_WAIT -

[root@server1 master.d]# curl -sSk https://localhost:8000/login \
> -H 'Accept: application/x-yaml' \
> -d username=saltapi \
> -d password=westos \
> -d eauth=pam

The token returned by /login is then passed in the X-Auth-Token header:

[root@server1 master.d]# curl -sSk https://localhost:8000 \
> -H 'Accept: application/x-yaml' \
> -H 'X-Auth-Token: e374c256d073ce73f07da90d88748d9ac6ccdc34' \
> -d client=local \
> -d tgt='*' \
> -d fun=test.ping
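The two `curl` calls can also be made from Python 3 with only the standard library. This sketch sends form-encoded data like `curl -d`, but asks for JSON instead of YAML so the reply can be parsed without extra packages. Certificate verification is switched off to mirror `curl -k` with the self-signed test certificate, which is acceptable only in a lab. Reading the token from `return[0]["token"]` assumes rest_cherrypy's usual `/login` reply layout; verify it against your salt-api version:

```python
import json
import ssl
import urllib.parse
import urllib.request

API = "https://localhost:8000"  # rest_cherrypy port from api.conf


def build_request(path, fields, token=None):
    """Form-encoded POST like `curl -d ...`, asking for a JSON reply."""
    headers = {"Accept": "application/json"}
    if token:
        headers["X-Auth-Token"] = token
    data = urllib.parse.urlencode(fields).encode()
    return urllib.request.Request(API + path, data=data, headers=headers, method="POST")


def send(request):
    # Lab only: skip verification of the self-signed certificate, like `curl -k`.
    ctx = ssl.create_default_context()
    ctx.check_hostname = False
    ctx.verify_mode = ssl.CERT_NONE
    with urllib.request.urlopen(request, context=ctx) as resp:
        return json.loads(resp.read().decode())


def login(username, password):
    reply = send(build_request("/login", {"username": username,
                                          "password": password, "eauth": "pam"}))
    return reply["return"][0]["token"]


def run(token, tgt, fun):
    return send(build_request("/", {"client": "local", "tgt": tgt, "fun": fun}, token))
```

On server1, `token = login("saltapi", "westos")` followed by `run(token, "*", "test.ping")` corresponds to the two curl commands above.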