Principles of the MobileNet V1 Architecture and a TensorFlow 2.0 Implementation

Contents

- MobileNet network structure
- Implementation
  - Function-based implementation
  - Class-based implementation

MobileNet network structure

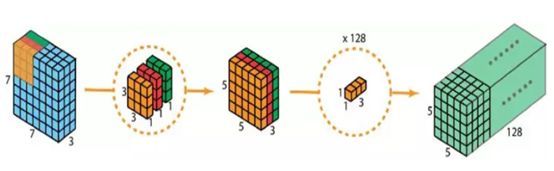

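Before introducing MobileNet, we need to understand its key building block: the depthwise separable convolution. It factorizes a convolution into two steps. The first is a depthwise convolution, which convolves each input channel with its own single-channel kernel. The second is a pointwise convolution, which uses 1x1 kernels to map the number of input channels to the number of output channels. The accompanying figure illustrates an example.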

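In the first step, the depthwise convolution differs from a standard convolution. In a standard convolution, every kernel spans all input channels. In a depthwise convolution, each input channel gets its own kernel, i.e. one kernel per input channel, which is why the operation is said to act at the depth level.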

In the second step, the pointwise convolution is simply a standard convolution whose kernel size is 1x1.
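To make the two steps concrete, here is a minimal pure-Python sketch on nested lists. It is only an illustration, not how TensorFlow implements these layers: the helper names `depthwise_conv` and `pointwise_conv` are made up for this example, and it assumes stride 1 with no padding.

```python
def depthwise_conv(x, kernels):
    """Depthwise step: channel c of x is convolved only with kernels[c].

    x:       nested list of shape [H][W][C]
    kernels: nested list of shape [C][k][k], one single-channel kernel per input channel
    Uses stride 1 and no padding, so the output shape is [H-k+1][W-k+1][C].
    """
    height, width, channels = len(x), len(x[0]), len(x[0][0])
    k = len(kernels[0])
    out_h, out_w = height - k + 1, width - k + 1
    out = [[[0.0] * channels for _ in range(out_w)] for _ in range(out_h)]
    for i in range(out_h):
        for j in range(out_w):
            for c in range(channels):
                out[i][j][c] = sum(
                    x[i + di][j + dj][c] * kernels[c][di][dj]
                    for di in range(k)
                    for dj in range(k)
                )
    return out


def pointwise_conv(x, weights):
    """Pointwise step: a 1x1 convolution that mixes channels at each position.

    weights: nested list of shape [C_in][C_out]
    """
    c_in, c_out = len(weights), len(weights[0])
    return [
        [
            [sum(pixel[c] * weights[c][o] for c in range(c_in)) for o in range(c_out)]
            for pixel in row
        ]
        for row in x
    ]


# Toy input: 4x4 spatial size, 2 channels, all ones.
x = [[[1.0, 1.0] for _ in range(4)] for _ in range(4)]
# One 3x3 kernel per input channel: all ones for channel 0, all twos for channel 1.
dw_kernels = [[[1.0] * 3 for _ in range(3)], [[2.0] * 3 for _ in range(3)]]
# 1x1 convolution mapping 2 input channels to 3 output channels.
pw_weights = [[1.0, 0.0, 1.0], [0.0, 1.0, 1.0]]

dw_out = depthwise_conv(x, dw_kernels)       # shape 2x2x2, channels stay separate
pw_out = pointwise_conv(dw_out, pw_weights)  # shape 2x2x3, channels are mixed
print(dw_out[0][0])  # [9.0, 18.0]
print(pw_out[0][0])  # [9.0, 18.0, 27.0]
```

Note that the depthwise step never changes the channel count; only the pointwise step does.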

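A depthwise separable convolution plays roughly the same role as a standard convolution, but it greatly reduces the amount of computation and the number of model parameters. For details, see the comparison of parameter counts and computational cost between standard and separable convolutions.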
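The saving can be checked with a little arithmetic. The sketch below counts weights and multiply-adds for one layer, assuming no bias terms (matching `use_bias=False` in the code later in this article) and ignoring batch normalization. The function names and the example layer sizes are chosen here purely for illustration.

```python
def standard_conv_cost(k, c_in, c_out, out_h, out_w):
    """Parameters and multiply-adds of a k x k standard convolution without bias."""
    params = k * k * c_in * c_out
    return params, params * out_h * out_w


def separable_conv_cost(k, c_in, c_out, out_h, out_w):
    """Parameters and multiply-adds of a k x k depthwise plus 1x1 pointwise pair, no bias."""
    params = k * k * c_in + c_in * c_out
    return params, params * out_h * out_w


# Example layer: 3x3 kernel, 64 -> 128 channels, 16x16 output feature map.
std_params, std_madds = standard_conv_cost(3, 64, 128, 16, 16)
sep_params, sep_madds = separable_conv_cost(3, 64, 128, 16, 16)
print(std_params, sep_params)             # 73728 8768
print(round(sep_params / std_params, 4))  # 0.1189
```

In general the ratio works out to 1/c_out + 1/k^2, so with 3x3 kernels the separable version needs roughly 8 to 9 times fewer parameters and multiply-adds.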

The MobileNet architecture uses 13 depthwise separable convolution blocks.
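As a quick sanity check, the 13 blocks can be written down as a list of (pointwise filters, stride) pairs, taken from the implementation in this article, and the feature-map shapes traced by hand. This is a rough sketch: `BLOCKS` and `trace_shapes` are names invented here, and the shape rule assumes 'SAME' padding.

```python
import math

# (pointwise filters, stride) for the 13 depthwise separable blocks,
# in the order used by the implementation in this article.
BLOCKS = [
    (64, 1), (128, 2), (128, 1), (256, 2), (256, 1), (512, 2),
    (512, 1), (512, 1), (512, 1), (512, 1), (512, 1),
    (1024, 2), (1024, 1),
]


def trace_shapes(height, width, stem_filters=32, stem_stride=2):
    """Return the (H, W, C) feature-map shape after the stem conv and after each block.

    Assumes 'SAME' padding, so each layer maps a spatial size n to ceil(n / stride).
    """
    h, w = math.ceil(height / stem_stride), math.ceil(width / stem_stride)
    shapes = [(h, w, stem_filters)]
    for filters, stride in BLOCKS:
        h, w = math.ceil(h / stride), math.ceil(w / stride)
        shapes.append((h, w, filters))
    return shapes


shapes = trace_shapes(32, 32)
print(len(BLOCKS))  # 13
print(shapes[0])    # (16, 16, 32)
print(shapes[-1])   # (1, 1, 1024)
```

For a 32x32 input the spatial size shrinks to 1x1 before global average pooling; a 224x224 input would end at 7x7 under the same rule.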

Implementation

Function-based implementation

import numpy as np
import tensorflow as tf

def conv_block(inputs, filters, kernel_size=(3, 3), strides=(1, 1)):
    # Standard convolution -> batch normalization -> ReLU
    x = tf.keras.layers.Conv2D(filters=filters, kernel_size=kernel_size, strides=strides,
                               padding='SAME', use_bias=False)(inputs)
    x = tf.keras.layers.BatchNormalization()(x)
    out = tf.keras.layers.Activation('relu')(x)
    return out

def depthwise_conv_block(inputs,
                         pointwise_conv_filters,
                         strides=(1, 1)):
    # Depthwise step: one 3x3 kernel per input channel
    x = tf.keras.layers.DepthwiseConv2D(kernel_size=(3, 3), strides=strides, padding='SAME',
                                        use_bias=False)(inputs)
    x = tf.keras.layers.BatchNormalization()(x)
    x = tf.keras.layers.Activation('relu')(x)
    # Pointwise step: 1x1 convolution that sets the number of output channels
    x = tf.keras.layers.Conv2D(filters=pointwise_conv_filters, kernel_size=(1, 1),
                               padding='SAME', use_bias=False)(x)
    x = tf.keras.layers.BatchNormalization()(x)
    out = tf.keras.layers.Activation('relu')(x)
    return out

def mobilenet_v1(inputs,
                 classes):
    # [32, 32, 3] => [16, 16, 32]
    x = conv_block(inputs, 32, strides=(2, 2))
    # [16, 16, 32] => [16, 16, 64]
    x = depthwise_conv_block(x, 64)
    # [16, 16, 64] => [8, 8, 128]
    x = depthwise_conv_block(x, 128, strides=(2, 2))
    # [8, 8, 128] => [8, 8, 128]
    x = depthwise_conv_block(x, 128)
    # [8, 8, 128] => [4, 4, 256]
    x = depthwise_conv_block(x, 256, strides=(2, 2))
    # [4, 4, 256] => [4, 4, 256]
    x = depthwise_conv_block(x, 256)
    # [4, 4, 256] => [2, 2, 512]
    x = depthwise_conv_block(x, 512, strides=(2, 2))
    # [2, 2, 512] => [2, 2, 512]
    x = depthwise_conv_block(x, 512)
    # [2, 2, 512] => [2, 2, 512]
    x = depthwise_conv_block(x, 512)
    # [2, 2, 512] => [2, 2, 512]
    x = depthwise_conv_block(x, 512)
    # [2, 2, 512] => [2, 2, 512]
    x = depthwise_conv_block(x, 512)
    # [2, 2, 512] => [2, 2, 512]
    x = depthwise_conv_block(x, 512)
    # [2, 2, 512] => [1, 1, 1024]
    x = depthwise_conv_block(x, 1024, strides=(2, 2))
    # [1, 1, 1024] => [1, 1, 1024]
    x = depthwise_conv_block(x, 1024)
    # [1, 1, 1024] => (1024,)
    x = tf.keras.layers.GlobalAveragePooling2D()(x)
    # (1024,) => (classes,)
    pred = tf.keras.layers.Dense(classes, activation='softmax')(x)
    return pred

# Dummy batch of 10 images of size 32x32x3
inputs = np.zeros((10, 32, 32, 3), dtype=np.float32)
classes = 10
output = mobilenet_v1(inputs, classes)
output.shape  # TensorShape([10, 10])

Class-based implementation

import numpy as np
import tensorflow as tf

class conv_block(tf.keras.Model):
    def __init__(self, filters, kernel_size=(3, 3), strides=(1, 1)):
        super().__init__()
        # Standard convolution -> batch normalization -> ReLU
        self.listLayers = []
        self.listLayers.append(tf.keras.layers.Conv2D(filters=filters, kernel_size=kernel_size, strides=strides,
                                                      padding='SAME', use_bias=False))
        self.listLayers.append(tf.keras.layers.BatchNormalization())
        self.listLayers.append(tf.keras.layers.Activation('relu'))

    def call(self, x):
        # Apply the stored layers in order
        for layer in self.listLayers:
            x = layer(x)
        return x

class depthwise_conv_block(tf.keras.Model):
    def __init__(self, pointwise_conv_filters, strides=(1, 1)):
        super().__init__()
        self.listLayers = []
        # Depthwise step: one 3x3 kernel per input channel
        self.listLayers.append(tf.keras.layers.DepthwiseConv2D(kernel_size=(3, 3), strides=strides, padding='SAME',
                                                               use_bias=False))
        self.listLayers.append(tf.keras.layers.BatchNormalization())
        self.listLayers.append(tf.keras.layers.Activation('relu'))
        # Pointwise step: 1x1 convolution that sets the number of output channels
        self.listLayers.append(tf.keras.layers.Conv2D(filters=pointwise_conv_filters, kernel_size=(1, 1),
                                                      padding='SAME', use_bias=False))
        self.listLayers.append(tf.keras.layers.BatchNormalization())
        self.listLayers.append(tf.keras.layers.Activation('relu'))

    def call(self, x):
        # Apply the stored layers in order
        for layer in self.listLayers:
            x = layer(x)
        return x

class mobilenet_v1(tf.keras.Model):
    def __init__(self, classes):
        super().__init__()
        self.listLayers = []
        # Stem: standard 3x3 convolution with stride 2
        self.listLayers.append(conv_block(32, strides=(2, 2)))
        # 13 depthwise separable blocks
        self.listLayers.append(depthwise_conv_block(64))
        self.listLayers.append(depthwise_conv_block(128, strides=(2, 2)))
        self.listLayers.append(depthwise_conv_block(128))
        self.listLayers.append(depthwise_conv_block(256, strides=(2, 2)))
        self.listLayers.append(depthwise_conv_block(256))
        self.listLayers.append(depthwise_conv_block(512, strides=(2, 2)))
        self.listLayers.append(depthwise_conv_block(512))
        self.listLayers.append(depthwise_conv_block(512))
        self.listLayers.append(depthwise_conv_block(512))
        self.listLayers.append(depthwise_conv_block(512))
        self.listLayers.append(depthwise_conv_block(512))
        self.listLayers.append(depthwise_conv_block(1024, strides=(2, 2)))
        self.listLayers.append(depthwise_conv_block(1024))
        # Classification head
        self.listLayers.append(tf.keras.layers.GlobalAveragePooling2D())
        self.listLayers.append(tf.keras.layers.Dense(classes, activation='softmax'))

    def call(self, x):
        # Apply the stored layers in order
        for layer in self.listLayers:
            x = layer(x)
        return x

# Dummy batch of 10 images of size 32x32x3
inputs = np.zeros((10, 32, 32, 3), dtype=np.float32)
model = mobilenet_v1(10)
model(inputs).shape  # TensorShape([10, 10])