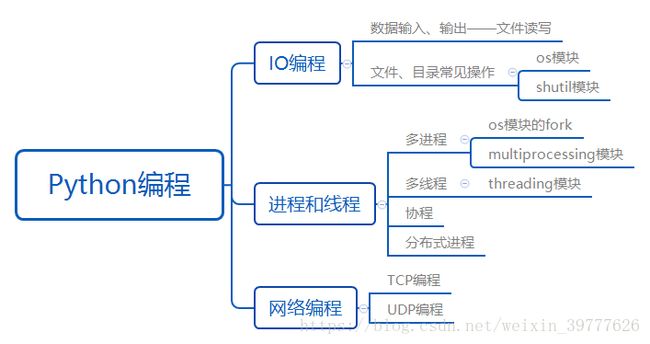

Python Programming

For more web-crawler examples, see https://blog.csdn.net/weixin_39777626/article/details/81564819

File reading and writing

The open function

    open(file, mode='r', buffering=-1, encoding=None, errors=None, newline=None, closefd=True, opener=None)

Main parameter: mode

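| Value | Description |
|---|---|
| 'r' | Read (default) |
| 'w' | Write, truncating the file first |
| 'x' | Exclusive creation: create a new file and write to it; fails if the file already exists |
| 'a' | Append to the end of the file |
| 'b' | Binary mode (combined with another mode, e.g. 'rb') |
| 't' | Text mode (default) |
| '+' | Open for updating (reading and writing) |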
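The mode flags combine, so a short experiment makes the table concrete. The sketch below works in a temporary directory (the file name `demo.txt` is made up for illustration) and checks three behaviours: 'x' refuses to overwrite an existing file, 'a' appends, and 'rb' returns bytes rather than str.

```python
import os
import tempfile

# Work in a throwaway directory so no real files are touched.
with tempfile.TemporaryDirectory() as tmp_dir:
    path = os.path.join(tmp_dir, "demo.txt")  # hypothetical file name

    # 'x' creates the file and fails if it already exists.
    with open(path, "x", encoding="utf-8") as f:
        f.write("first line\n")
    try:
        open(path, "x").close()
        second_create_failed = False
    except FileExistsError:
        second_create_failed = True

    # 'a' keeps the existing content and writes at the end.
    with open(path, "a", encoding="utf-8") as f:
        f.write("second line\n")

    # 'r' (text, the default) decodes to str; 'rb' returns raw bytes.
    with open(path, "r", encoding="utf-8") as f:
        text = f.read()
    with open(path, "rb") as f:
        raw = f.read()

print(second_create_failed)
print(text.splitlines())
print(type(raw).__name__)
```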

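Reading

    # Read the whole file into memory at once
    with open('/home/as/文档/statistic/data/GDP_world.csv', 'r') as reader:
        print(reader.read())

    # Large files: iterate over the file object so that only one line is held in memory at a time
    # (readlines() would load every line into a list first)
    with open('/home/as/文档/statistic/data/GDP_world.csv', 'r') as reader:
        for line in reader:
            print(line.strip())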
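When a file has no useful line structure (binary data, or one very long line), reading fixed-size chunks keeps memory use bounded in the same way. A minimal sketch; the helper name `read_in_chunks` and the chunk sizes are illustrative choices, not part of the original post.

```python
import os
import tempfile

def read_in_chunks(path, chunk_size=64 * 1024):
    """Yield the file's contents as successive byte chunks (hypothetical helper)."""
    with open(path, "rb") as f:
        while True:
            chunk = f.read(chunk_size)
            if not chunk:  # an empty bytes object means end of file
                break
            yield chunk

# Demo: a temporary 10-byte file read 4 bytes at a time.
fd, tmp_path = tempfile.mkstemp()
try:
    with os.fdopen(fd, "wb") as f:
        f.write(b"0123456789")
    chunks = list(read_in_chunks(tmp_path, chunk_size=4))
finally:
    os.remove(tmp_path)

print(chunks)  # [b'0123', b'4567', b'89']
```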

Writing

    # 'w' creates the file, or truncates it if it already exists
    with open('/home/as/文档/statistic/data/test.csv', 'w') as writer:
        writer.write('I love English')

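Common file and directory operations

The os module

    import os

    os.getcwd()  # get the current working directory
    os.listdir('/home/as/')  # names of all files and directories in the given directory
    os.path.isfile('/home/as/文档/statistic/data/test.csv')  # True if the path is an existing file
    os.path.isdir('/home/as/')  # True if the path is an existing directory
    os.stat('/home/as/文档/statistic/data/test.csv')  # get file attributes
    os.path.getsize('/home/as/文档/statistic/data/test.csv')  # get the file size in bytes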
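os.listdir only looks at one level of a directory. To visit a whole tree, os.walk can be combined with the os.path helpers. The sketch below assumes the goal is a file count and total size; `tree_size` is a made-up helper name, and the demo files live in a temporary directory.

```python
import os
import tempfile

def tree_size(root):
    """Return (file_count, total_bytes) for all regular files under root."""
    count = 0
    total = 0
    for dirpath, dirnames, filenames in os.walk(root):
        for name in filenames:
            full = os.path.join(dirpath, name)
            if os.path.isfile(full):
                count += 1
                total += os.path.getsize(full)
    return count, total

# Demo tree: root/a.csv (5 bytes) and root/sub/b.csv (3 bytes).
with tempfile.TemporaryDirectory() as root:
    os.makedirs(os.path.join(root, "sub"))
    with open(os.path.join(root, "a.csv"), "wb") as f:
        f.write(b"12345")
    with open(os.path.join(root, "sub", "b.csv"), "wb") as f:
        f.write(b"123")
    result = tree_size(root)

print(result)  # (2, 8)
```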

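The shutil module

    import shutil

    # Copy a directory tree; by default the destination must not already exist
    shutil.copytree('/home/as/文档/statistic/data', '/home/as/文档/statistic/test')
    # Copy the contents of a single file
    shutil.copyfile('/home/as/文档/statistic/data/test.csv', '/home/as/文档/statistic/test/test.csv')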
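One detail that is easy to trip over: copytree raises FileExistsError when the destination directory already exists (Python 3.8 and later accept `dirs_exist_ok=True` to relax this). The sketch below demonstrates that in a temporary directory; the directory and file names are made up for the demo.

```python
import os
import shutil
import tempfile

with tempfile.TemporaryDirectory() as base:
    src = os.path.join(base, "data")
    dst = os.path.join(base, "backup")
    os.makedirs(src)
    with open(os.path.join(src, "test.csv"), "w", encoding="utf-8") as f:
        f.write("a,b\n1,2\n")

    shutil.copytree(src, dst)        # dst is created by copytree
    try:
        shutil.copytree(src, dst)    # second call: dst already exists
        second_copy_failed = False
    except FileExistsError:
        second_copy_failed = True

    # copyfile copies file contents only; the target must be a file path.
    single = os.path.join(base, "single.csv")
    shutil.copyfile(os.path.join(src, "test.csv"), single)

    copied_names = sorted(os.listdir(dst))
    with open(single, encoding="utf-8") as f:
        single_text = f.read()

print(copied_names, second_copy_failed)
```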

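Processes and threads

Multiprocessing

fork in the os module

    import os

    # os.getpid() returns the ID of the current process, os.getppid() that of its parent.
    # os.fork() exists only on Unix-like systems.
    print('Current process (%s) start ...' % os.getpid())
    pid = os.fork()  # raises OSError on failure rather than returning a negative value
    if pid == 0:
        # fork() returns 0 in the child process
        print('I am child process (%s) and my parent process is (%s)' % (os.getpid(), os.getppid()))
    else:
        # ... and the child's process ID in the parent
        print('I (%s) created a child process (%s).' % (os.getpid(), pid))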
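The parent in the fork example does not wait for its child. On POSIX systems os.waitpid lets the parent block until the child exits and then read its exit status. A hedged sketch: the exit code 7 is arbitrary, and the `hasattr` guard simply skips the demo where os.fork is unavailable (for example on Windows).

```python
import os

child_exit_code = None  # stays None on platforms without os.fork

if hasattr(os, "fork"):
    pid = os.fork()
    if pid == 0:
        # Child: leave immediately with an arbitrary status, skipping cleanup handlers.
        os._exit(7)
    # Parent: block until that specific child terminates.
    _, status = os.waitpid(pid, 0)
    if os.WIFEXITED(status):
        child_exit_code = os.WEXITSTATUS(status)

print("child exit code:", child_exit_code)
```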

The multiprocessing module

| Method | Purpose |
|---|---|
| start() | Start the process |
| join() | Wait for the process to finish (synchronize with it) |

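    import os
    from multiprocessing import Process

    # Code executed by each child process
    def run_proc(name):
        print('Child process %s (%s) Running ...' % (name, os.getpid()))

    # The guard is required on platforms that start children by re-importing this module
    if __name__ == '__main__':
        print('Parent process %s.' % os.getpid())
        processes = []
        for i in range(5):
            p = Process(target=run_proc, args=(str(i),))
            print('Process will start.')
            p.start()
            processes.append(p)
        # Wait for every child, not just the last one started
        for p in processes:
            p.join()
        print('Process end.')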

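    # Pool provides a fixed number of worker processes; the default size is the number of CPU cores
    from multiprocessing import Pool
    import os, time, random

    def run_task(name):
        print('Task %s (pid=%s) is running...' % (name, os.getpid()))
        time.sleep(random.random() * 3)
        print('Task %s end.' % name)

    if __name__ == '__main__':
        print('Current process %s.' % os.getpid())
        p = Pool(processes=3)
        for i in range(5):
            p.apply_async(run_task, args=(i,))
        print('Waiting for all subprocesses done...')
        p.close()  # no more tasks can be submitted after this
        p.join()   # wait for the workers to finish; close() must be called first
        print('All subprocesses done.')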
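apply_async returns an AsyncResult object, which the Pool example discards. Calling get() on it retrieves the task's return value. The sketch below uses multiprocessing.pool.ThreadPool, a thread-backed class with the same interface as Pool, purely so that it runs as a single snippet; with the process-based Pool the same calls apply, placed under an `if __name__ == '__main__':` guard. The task `square` is an illustrative stand-in.

```python
from multiprocessing.pool import ThreadPool

def square(n):
    """Illustrative task: return n squared."""
    return n * n

# ThreadPool mirrors Pool's API (apply_async, map, close, join).
pool = ThreadPool(processes=3)
async_results = [pool.apply_async(square, args=(i,)) for i in range(5)]
pool.close()  # stop accepting new tasks
pool.join()   # wait for all submitted tasks to finish

# get() returns the task's result (or re-raises the exception it raised).
values = [r.get() for r in async_results]
print(values)  # [0, 1, 4, 9, 16]
```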

| Queue method | Purpose |
|---|---|
| put() | Insert an item into the queue |
| get() | Read an item and remove it from the queue |

    from multiprocessing import Process, Queue
    import os, time, random

    # Writer process: put each URL on the queue
    def proc_write(q, urls):
        print('Process(%s) is writing...' % os.getpid())
        for url in urls:
            q.put(url)
            print('Put %s to queue...' % url)
            time.sleep(random.random())

    # Reader process: block until an item is available, then print it
    def proc_read(q):
        print('Process(%s) is reading ...' % os.getpid())
        while True:
            url = q.get(True)
            print('Get %s from queue.' % url)

    if __name__ == '__main__':
        # The parent creates the queue and passes it to every child
        q = Queue()
        proc_writer1 = Process(target=proc_write, args=(q, ['url_1', 'url_2', 'url_3']))
        proc_writer2 = Process(target=proc_write, args=(q, ['url_4', 'url_5', 'url_6']))
        proc_reader = Process(target=proc_read, args=(q,))
        proc_writer1.start()
        proc_writer2.start()
        proc_reader.start()
        # Wait for both writers to finish
        proc_writer1.join()
        proc_writer2.join()
        # The reader loops forever, so it has to be terminated explicitly
        proc_reader.terminate()
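Terminating the reader works, but an item that has not been consumed yet is lost. A common alternative is a sentinel: once the writers are done, a special marker is put on the queue and the reader exits when it sees it. The sketch below demonstrates the pattern with threads and queue.Queue, which shares the put/get interface, so that it runs as one snippet; using None as the sentinel is a choice made here, not something from the original example.

```python
import queue
import threading

SENTINEL = None  # marker meaning "no more items" (an assumption of this sketch)

def writer(q, urls):
    for url in urls:
        q.put(url)

def reader(q, received):
    while True:
        item = q.get()       # blocks until an item is available
        if item is SENTINEL:
            break            # clean exit instead of terminate()
        received.append(item)

q = queue.Queue()
received = []
w1 = threading.Thread(target=writer, args=(q, ["url_1", "url_2", "url_3"]))
w2 = threading.Thread(target=writer, args=(q, ["url_4", "url_5", "url_6"]))
r = threading.Thread(target=reader, args=(q, received))

for t in (w1, w2, r):
    t.start()
w1.join()
w2.join()
q.put(SENTINEL)  # sent only after both writers are done
r.join()

print(sorted(received))
```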

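    # Pipe() returns a pair (conn1, conn2). By default (duplex=True) both ends can send and receive;
    # with duplex=False, conn1 can only receive and conn2 can only send.
    import multiprocessing
    import random
    import time, os

    def proc_send(pipe, urls):
        for url in urls:
            print('Process(%s) send: %s' % (os.getpid(), url))
            pipe.send(url)
            time.sleep(random.random())

    def proc_recv(pipe):
        while True:
            print('Process(%s) recv: %s' % (os.getpid(), pipe.recv()))
            time.sleep(random.random())

    if __name__ == '__main__':
        pipe = multiprocessing.Pipe()
        p1 = multiprocessing.Process(target=proc_send, args=(pipe[0], ['url_' + str(i) for i in range(10)]))
        p2 = multiprocessing.Process(target=proc_recv, args=(pipe[1],))
        p1.start()
        p2.start()
        p1.join()
        # The receiver loops forever, so join() on it would never return.
        # Terminating it may cut off messages it has not yet read.
        p2.terminate()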
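Pipe() takes a `duplex` argument. By default (duplex=True) both connection objects can send and receive; with duplex=False the first is receive-only and the second send-only. This can be checked without starting any process, because both connection objects are usable from the process that created them. A small sketch:

```python
import multiprocessing

# Default: duplex=True, both ends can send and receive.
a, b = multiprocessing.Pipe()
a.send("ping")
got_b = b.recv()
b.send("pong")
got_a = a.recv()

# duplex=False: the first end is receive-only, the second end is send-only.
recv_end, send_end = multiprocessing.Pipe(duplex=False)
send_end.send({"url": "url_0"})
one_way = recv_end.recv()

# Sending on the receive-only end is rejected with OSError.
try:
    recv_end.send("x")
    wrong_direction_failed = False
except OSError:
    wrong_direction_failed = True

for conn in (a, b, recv_end, send_end):
    conn.close()

print(got_b, got_a, one_way, wrong_direction_failed)
```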

Multithreading

The threading module

    import random
    import time, threading

    # Function executed by each new thread
    def thread_run(urls):
        print('Current %s is running...' % threading.current_thread().name)
        for url in urls:
            print('%s -------------->>> %s ' % (threading.current_thread().name, url))
            time.sleep(random.random())
        print('%s ended.' % threading.current_thread().name)

    print('%s is running...' % threading.current_thread().name)
    t1 = threading.Thread(target=thread_run, name='Thread_1', args=(['url_1', 'url_2', 'url_3'],))
    t2 = threading.Thread(target=thread_run, name='Thread_2', args=(['url_4', 'url_5', 'url_6'],))
    t1.start()
    t2.start()
    t1.join()
    t2.join()
    print('%s ended.' % threading.current_thread().name)

    # The same example, written by subclassing threading.Thread and overriding run()
    import random
    import threading
    import time

    class myThread(threading.Thread):
        def __init__(self, name, urls):
            threading.Thread.__init__(self, name=name)
            self.urls = urls

        def run(self):
            print('Current %s is running...' % threading.current_thread().name)
            for url in self.urls:
                print('%s ------->>> %s' % (threading.current_thread().name, url))
                time.sleep(random.random())
            print('%s ended.' % threading.current_thread().name)

    print('%s is running...' % threading.current_thread().name)
    t1 = myThread(name='Thread_1', urls=['url_1', 'url_2', 'url_3'])
    t2 = myThread(name='Thread_2', urls=['url_4', 'url_5', 'url_6'])
    t1.start()
    t2.start()
    t1.join()
    t2.join()
    print('%s ended.' % threading.current_thread().name)

    # Thread synchronization: an RLock protects the shared counter num
    import threading

    mylock = threading.RLock()
    num = 0

    class myThread(threading.Thread):
        def __init__(self, name):
            threading.Thread.__init__(self, name=name)

        def run(self):
            global num
            while True:
                mylock.acquire()
                print('%s locked, Number: %d' % (threading.current_thread().name, num))
                if num >= 4:
                    mylock.release()
                    print('%s released, Number: %d' % (threading.current_thread().name, num))
                    break
                num += 1
                print('%s released, Number: %d' % (threading.current_thread().name, num))
                mylock.release()

    thread1 = myThread('Thread_1')
    thread2 = myThread('Thread_2')
    thread1.start()
    thread2.start()
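Locks also work as context managers: a `with` block acquires the lock on entry and releases it on exit, even if the body raises, which removes the need to pair every acquire() with a release() by hand. A sketch of the same shared-counter idea; the thread count and iteration count are arbitrary.

```python
import threading

state = {"count": 0}            # shared data protected by the lock
counter_lock = threading.Lock()

def add_many(times):
    for _ in range(times):
        # 'with' acquires the lock and always releases it on exit.
        with counter_lock:
            state["count"] += 1

threads = [threading.Thread(target=add_many, args=(10000,)) for _ in range(4)]
for t in threads:
    t.start()
for t in threads:
    t.join()

print(state["count"])  # 40000
```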

Coroutines

    # Patch blocking standard-library calls so they cooperate with gevent; do this before other imports
    from gevent import monkey; monkey.patch_all()
    import gevent
    import urllib.request

    def run_task(url):
        print('Visit --> %s' % url)
        try:
            response = urllib.request.urlopen(url)
            data = response.read()
            print('%d bytes received from %s.' % (len(data), url))
        except Exception as e:
            print(e)

    urls = ['https://github.com/', 'https://www.python.org/', 'http://www.cnblogs.com/']
    # Spawn one greenlet per URL and wait for all of them
    greenlets = [gevent.spawn(run_task, url) for url in urls]
    gevent.joinall(greenlets)

    # gevent's Pool limits how many greenlets run concurrently
    from gevent import monkey
    monkey.patch_all()
    import urllib.request
    from gevent.pool import Pool

    def run_task(url):
        print('Visit --> %s' % url)
        try:
            response = urllib.request.urlopen(url)
            data = response.read()
            print('%d bytes received from %s.' % (len(data), url))
        except Exception as e:
            print(e)
        return 'url:%s --->finish' % url

    pool = Pool(2)
    urls = ['https://github.com/', 'https://www.python.org/', 'http://www.cnblogs.com/']
    results = pool.map(run_task, urls)
    print(results)

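Distributed processes

    # Server process
    import queue
    from multiprocessing.managers import BaseManager

    # The queues live inside the manager's server process, so queue.Queue is sufficient
    task_queue = queue.Queue()
    result_queue = queue.Queue()

    # Named functions are used instead of lambdas so the callables can be pickled
    def get_task_queue():
        return task_queue

    def get_result_queue():
        return result_queue

    class QueueManager(BaseManager):
        pass

    if __name__ == '__main__':
        # Expose the two queues on the network under these names
        QueueManager.register('get_task_queue', callable=get_task_queue)
        QueueManager.register('get_result_queue', callable=get_result_queue)
        # An empty host string listens on all interfaces; port 8001, auth key 'qiye'
        manager = QueueManager(address=('', 8001), authkey='qiye'.encode('utf-8'))
        manager.start()
        # Access the queues through the manager's proxies
        task = manager.get_task_queue()
        result = manager.get_result_queue()
        for url in ['ImageUrl_' + str(i) for i in range(10)]:
            print('put task %s ...' % url)
            task.put(url)
        print('try get result ...')
        for i in range(10):
            print('result is %s' % result.get(timeout=10))
        manager.shutdown()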

    # Worker (task) process
    import time
    from multiprocessing.managers import BaseManager

    class QueueManager(BaseManager):
        pass

    # Register only the names; the queues themselves live on the server
    QueueManager.register('get_task_queue')
    QueueManager.register('get_result_queue')

    server_addr = '127.0.0.1'
    print('Connect to server %s ...' % server_addr)
    # Port and auth key must match the server process
    m = QueueManager(address=(server_addr, 8001), authkey='qiye'.encode('utf-8'))
    m.connect()
    task = m.get_task_queue()
    result = m.get_result_queue()
    # Take tasks from the task queue and write outcomes to the result queue
    while not task.empty():
        image_url = task.get(True, timeout=5)
        print('run task download %s...' % image_url)
        time.sleep(1)
        result.put('%s---->success' % image_url)
    print('worker exit.')

Network programming

TCP programming

    # Server
    import socket
    import threading
    import time

    # Handle one client connection; runs in its own thread
    def dealClient(sock, addr):
        print('Accept new connection from %s:%s...' % addr)
        sock.send(b'Hello,I am Server!')
        while True:
            data = sock.recv(1024)
            time.sleep(1)
            if not data or data.decode('utf-8') == 'exit':
                break
            print('--->> %s !' % data.decode('utf-8'))
            sock.send(('Loop_Msg: %s ! ' % data.decode('utf-8')).encode('utf-8'))
        sock.close()
        print('Connection from %s:%s closed.' % addr)

    # Create an IPv4 TCP socket, bind it and start listening
    s = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
    s.bind(('127.0.0.1', 9999))
    s.listen(5)
    print('Waiting for connection...')
    while True:
        # Accept a connection and hand it to a new thread
        sock, addr = s.accept()
        t = threading.Thread(target=dealClient, args=(sock, addr))
        t.start()

    # Client
    import socket

    s = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
    s.connect(('127.0.0.1', 9999))
    # Receive the server's greeting
    print('--->>' + s.recv(1024).decode('utf-8'))
    s.send(b'Hello,I am a client')
    print('--->>' + s.recv(1024).decode('utf-8'))
    # Ask the server to close the connection
    s.send(b'exit')
    s.close()
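To try the TCP exchange without two terminals, server and client can share one script: the server runs in a background thread and binds to port 0 so that the operating system picks a free port. This is a sketch for experimentation only; `serve_once` is a made-up helper that handles a single connection, and the 5-second timeouts are arbitrary safety nets.

```python
import socket
import threading

def serve_once(server_sock):
    """Accept one client and echo each message back until 'exit' or EOF."""
    conn, addr = server_sock.accept()
    with conn:
        conn.sendall(b"Hello,I am Server!")
        while True:
            data = conn.recv(1024)
            if not data or data == b"exit":
                break
            conn.sendall(b"Loop_Msg: " + data)

server = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
server.settimeout(5)                 # do not wait forever for a client
server.bind(("127.0.0.1", 0))        # port 0: let the OS choose a free port
server.listen(1)
port = server.getsockname()[1]

t = threading.Thread(target=serve_once, args=(server,))
t.start()

client = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
client.settimeout(5)
client.connect(("127.0.0.1", port))
greeting = client.recv(1024)
client.sendall(b"Hello,I am a client")
reply = client.recv(1024)
client.sendall(b"exit")
client.close()

t.join()
server.close()
print(greeting, reply)
```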

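UDP programming

    # Server
    import socket

    # SOCK_DGRAM selects UDP; no listen() or accept() is needed
    s = socket.socket(socket.AF_INET, socket.SOCK_DGRAM)
    s.bind(('127.0.0.1', 9999))
    print('Bind UDP on 9999...')
    while True:
        # recvfrom returns the data together with the sender's address
        data, addr = s.recvfrom(1024)
        print('Received from %s:%s.' % addr)
        s.sendto(b'Hello, %s !' % data, addr)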

    # Client
    import socket

    s = socket.socket(socket.AF_INET, socket.SOCK_DGRAM)
    for data in [b'Hello', b'World']:
        # No connection is established; each datagram is sent directly to the server address
        s.sendto(data, ('127.0.0.1', 9999))
        print(s.recv(1024).decode('utf-8'))
    s.close()
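The UDP pair can be exercised in a single script in the same way: the server runs in a background thread on an OS-chosen port and answers a fixed number of datagrams. A sketch under those assumptions; `udp_echo` and the 5-second timeouts are illustrative, not part of the original code.

```python
import socket
import threading

def udp_echo(server_sock, count):
    """Answer `count` datagrams with a greeting, then return."""
    for _ in range(count):
        data, addr = server_sock.recvfrom(1024)
        server_sock.sendto(b"Hello, %s !" % data, addr)

server = socket.socket(socket.AF_INET, socket.SOCK_DGRAM)
server.settimeout(5)
server.bind(("127.0.0.1", 0))        # port 0: let the OS choose a free port
port = server.getsockname()[1]

messages = [b"Hello", b"World"]
t = threading.Thread(target=udp_echo, args=(server, len(messages)))
t.start()

client = socket.socket(socket.AF_INET, socket.SOCK_DGRAM)
client.settimeout(5)
replies = []
for data in messages:
    client.sendto(data, ("127.0.0.1", port))
    reply, _ = client.recvfrom(1024)
    replies.append(reply.decode("utf-8"))
client.close()

t.join()
server.close()
print(replies)  # ['Hello, Hello !', 'Hello, World !']
```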
