Data Mining Example: Credit Rating

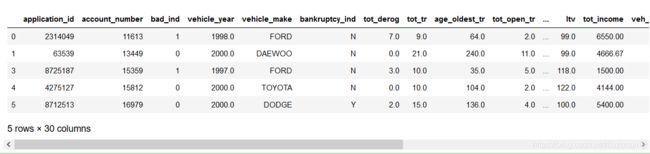

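The source data for this example is the auto loan default dataset accepts.csv.

The raw data and source code can be downloaded from GitHub:

GitHub link

If you are interested, git clone the repository and run the code yourself.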

Reading the source data

```python
import os

import numpy as np
from scipy import stats
import pandas as pd
import statsmodels.api as sm
import statsmodels.formula.api as smf
import matplotlib.pyplot as plt

# Load the accepted-loan data and drop rows with missing values
accepts = pd.read_csv('accepts.csv').dropna()
accepts
```

Derived variables

First, define a safe division function:

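```python
def divMy(x, y):
    """Divide x by y: NaN if either value is missing, -1 if y is 0."""
    # x == np.nan is always False (NaN never equals anything), so use pd.isna
    if pd.isna(x) or pd.isna(y):
        return np.nan
    elif y == 0:
        return -1
    else:
        return x / y

divMy(1, 2)
```

```
0.5
```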
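Why the missing-value check needs a dedicated function: NaN compares unequal to everything, including itself, so a test written as `x == np.nan` is never true. The stdlib sketch below illustrates this with a hypothetical `safe_div` that mirrors the intended `divMy` rules using `math.isnan` (on pandas data, `pd.isna` plays the same role).

```python
import math


def safe_div(x, y):
    """Hypothetical stdlib version of divMy: NaN in, NaN out; division by zero gives -1."""
    if math.isnan(x) or math.isnan(y):  # an == comparison could never detect NaN
        return math.nan
    if y == 0:
        return -1
    return x / y


nan = float("nan")
print(nan == nan)        # False: NaN is not equal even to itself
print(safe_div(1, 2))    # 0.5
print(safe_div(1, 0))    # -1
print(safe_div(nan, 2))  # nan
```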

Adding the new variables

```python
# Historical debt-to-income ratio: tot_rev_line / tot_income
accepts["dti_hist"] = accepts[["tot_rev_line", "tot_income"]].apply(lambda x: divMy(x.iloc[0], x.iloc[1]), axis=1)

# Debt-to-income ratio of this new loan: loan_amt / tot_income
accepts["dti_mew"] = accepts[["loan_amt", "tot_income"]].apply(lambda x: divMy(x.iloc[0], x.iloc[1]), axis=1)

# Down-payment ratio of this loan: down_pyt / loan_amt
accepts["fta"] = accepts[["down_pyt", "loan_amt"]].apply(lambda x: divMy(x.iloc[0], x.iloc[1]), axis=1)

# New debt relative to existing revolving debt: loan_amt / tot_rev_debt
accepts["nth"] = accepts[["loan_amt", "tot_rev_debt"]].apply(lambda x: divMy(x.iloc[0], x.iloc[1]), axis=1)

# New debt relative to the revolving credit line: loan_amt / tot_rev_line
accepts["nta"] = accepts[["loan_amt", "tot_rev_line"]].apply(lambda x: divMy(x.iloc[0], x.iloc[1]), axis=1)

accepts.head()
```
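Calling `divMy` through `apply(..., axis=1)` runs Python code once per row, which gets slow on large tables. A vectorized alternative is sketched below; the helper name `ratio` and the toy values are made up for illustration, and the function is written to follow the same rules as `divMy` (NaN stays NaN, a zero denominator gives -1).

```python
import numpy as np
import pandas as pd


def ratio(num, den):
    """Hypothetical vectorized counterpart of divMy."""
    num = num.astype(float)
    den = den.astype(float)
    out = num / den  # x / 0 gives inf and 0 / 0 gives NaN; both are fixed below
    # Match divMy: a zero denominator yields -1 unless the numerator is missing
    return out.mask((den == 0) & num.notna(), -1.0)


# Toy frame reusing column names from accepts.csv (values are invented)
df = pd.DataFrame({
    "loan_amt": [10000, 20000, 15000, np.nan],
    "tot_income": [5000, 0, np.nan, 0],
})
df["dti_mew"] = ratio(df["loan_amt"], df["tot_income"])
print(df["dti_mew"].tolist())  # [2.0, -1.0, nan, nan]
```

On the real data the call would read `accepts["dti_mew"] = ratio(accepts["loan_amt"], accepts["tot_income"])`.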

Random sampling: building the training and test sets

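```python
# 70% of the rows go to the training set; the remaining rows form the test set
train = accepts.sample(frac=0.7, random_state=1234).copy()
test = accepts[~accepts.index.isin(train.index)].copy()
print('Training set size: %i\nTest set size: %i' % (len(train), len(test)))
```

```
Training set size: 2874
Test set size: 1231
```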
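The split draws 70% of the row labels at random and takes the complement as the test set, so the two sets are disjoint and together cover every row. A stdlib sketch of the same idea, using row positions instead of DataFrame labels (`split_indices` is a hypothetical helper, and its seeded draw will not reproduce the rows pandas selects with `random_state=1234`):

```python
import random


def split_indices(n, frac=0.7, seed=1234):
    """Hypothetical helper: return (train, test) row positions for an n-row table."""
    rng = random.Random(seed)
    n_train = round(frac * n)  # frac * n rounded to a whole number of rows
    train = set(rng.sample(range(n), n_train))  # drawn without replacement
    test = [i for i in range(n) if i not in train]  # complement, like ~isin(train.index)
    return sorted(train), test


train_idx, test_idx = split_indices(100)
print(len(train_idx), len(test_idx))  # 70 30
```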

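Fit a logistic regression of bad_ind on the single variable age_oldest_tr:

```python
# Binomial family with its default logit link; passing a link class
# (sm.families.links.logit) instead of a link instance is deprecated in statsmodels
lg = smf.glm('bad_ind ~ age_oldest_tr', data=train,
             family=sm.families.Binomial()).fit()
lg.summary()
```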

```python
train['proba'] = lg.predict(train)
test['proba'] = lg.predict(test)
test['proba'].head(10)
```

```
4     0.238307
6     0.065840
10    0.148619
11    0.267025
13    0.283468
16    0.277072
20    0.051232
22    0.236012
35    0.147021
43    0.052479
Name: proba, dtype: float64
```
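`lg.predict` applies the logistic function to the linear predictor, p = 1 / (1 + exp(-(b0 + b1 * age_oldest_tr))). The sketch below spells this out in plain Python; the coefficients are hypothetical placeholders, not the values reported by `lg.summary()` (the fitted ones are available as `lg.params`).

```python
import math


def logistic_proba(intercept, coef, x):
    """P(bad_ind = 1) for a one-variable logit model: 1 / (1 + exp(-(b0 + b1 * x)))."""
    z = intercept + coef * x  # linear predictor
    return 1.0 / (1.0 + math.exp(-z))


# Hypothetical coefficients, NOT the fitted values from lg.summary()
b0, b1 = -0.5, -0.01
for x in (0, 100, 300):
    print(x, round(logistic_proba(b0, b1, x), 4))
```

With a negative slope, as assumed here, the predicted default probability falls as x grows.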

Computing accuracy

```python
# Classify a loan as bad (1) when its predicted default probability exceeds 0.3
test['prediction'] = (test['proba'] > 0.3).astype('int')
# Rows: actual bad_ind, columns: predicted label
pd.crosstab(test.bad_ind, test.prediction, margins=True)

acc = (test['prediction'] == test['bad_ind']).mean()  # np.float was removed from NumPy
print('The accuracy is %.2f' % acc)

for i in np.arange(0.02, 0.3, 0.02):
    prediction = (test['proba'] > i).astype('int')
    # Rows: predicted label, columns: actual bad_ind, plus 'All' margins
    cm = pd.crosstab(prediction, test.bad_ind, margins=True)
    # .ix was removed from pandas; .loc performs the same label-based lookup.
    # With bad_ind = 1 (a bad loan) as the positive class, these ratios are:
    specificity = cm.loc[0, 0] / cm.loc['All', 0]    # TN / actual good loans
    npv = cm.loc[0, 0] / cm.loc[0, 'All']            # TN / loans predicted good
    precision_bad = cm.loc[1, 1] / cm.loc[1, 'All']  # TP / loans predicted bad
    f1_good = 2 * (specificity * npv) / (specificity + npv)  # F1 of the good class
    print('threshold: %.2f, specificity: %.2f, npv: %.2f, precision(bad): %.2f, f1(good): %.2f'
          % (i, specificity, npv, precision_bad, f1_good))
```

```
The accuracy is 0.77
threshold: 0.02, specificity: 0.00, npv: 1.00, precision(bad): 0.19, f1(good): 0.01
threshold: 0.04, specificity: 0.02, npv: 0.94, precision(bad): 0.19, f1(good): 0.03
threshold: 0.06, specificity: 0.05, npv: 0.91, precision(bad): 0.19, f1(good): 0.10
threshold: 0.08, specificity: 0.09, npv: 0.89, precision(bad): 0.19, f1(good): 0.16
threshold: 0.10, specificity: 0.14, npv: 0.88, precision(bad): 0.20, f1(good): 0.25
threshold: 0.12, specificity: 0.22, npv: 0.89, precision(bad): 0.20, f1(good): 0.35
threshold: 0.14, specificity: 0.29, npv: 0.89, precision(bad): 0.21, f1(good): 0.43
threshold: 0.16, specificity: 0.38, npv: 0.88, precision(bad): 0.22, f1(good): 0.53
threshold: 0.18, specificity: 0.48, npv: 0.86, precision(bad): 0.23, f1(good): 0.62
threshold: 0.20, specificity: 0.58, npv: 0.86, precision(bad): 0.24, f1(good): 0.69
threshold: 0.22, specificity: 0.69, npv: 0.85, precision(bad): 0.26, f1(good): 0.76
threshold: 0.24, specificity: 0.76, npv: 0.84, precision(bad): 0.26, f1(good): 0.80
threshold: 0.26, specificity: 0.80, npv: 0.84, precision(bad): 0.28, f1(good): 0.82
threshold: 0.28, specificity: 0.85, npv: 0.83, precision(bad): 0.27, f1(good): 0.84
```
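For reference, the standard confusion-matrix metrics when a bad loan (bad_ind = 1) is the positive class are precision = TP / (TP + FP), recall = TP / (TP + FN), specificity = TN / (TN + FP) and F1 = 2 * precision * recall / (precision + recall). In a crosstab with predictions as rows and actual labels as columns, precision is `cm.loc[1, 1] / cm.loc[1, 'All']` and recall is `cm.loc[1, 1] / cm.loc['All', 1]`. A small stdlib sketch on made-up labels (`binary_metrics` is a hypothetical helper):

```python
def binary_metrics(y_true, y_pred):
    """Confusion-matrix metrics with label 1 (a bad loan) as the positive class."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)

    def div(a, b):
        return a / b if b else float("nan")  # undefined when the denominator is 0

    precision = div(tp, tp + fp)    # of loans flagged bad, share truly bad
    recall = div(tp, tp + fn)       # of truly bad loans, share flagged
    specificity = div(tn, tn + fp)  # of truly good loans, share passed as good
    f1 = div(2 * precision * recall, precision + recall)
    return {"tp": tp, "fp": fp, "tn": tn, "fn": fn,
            "precision": precision, "recall": recall,
            "specificity": specificity, "f1": f1}


# Toy labels and predictions at one threshold (invented data)
y_true = [1, 0, 0, 1, 0, 0, 1, 0]
y_pred = [1, 1, 0, 0, 0, 0, 1, 0]
print(binary_metrics(y_true, y_pred))
```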

Plotting the ROC curve

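```python
import sklearn.metrics as metrics

# ROC points (false positive rate, true positive rate) across all thresholds
fpr_test, tpr_test, th_test = metrics.roc_curve(test.bad_ind, test.proba)
fpr_train, tpr_train, th_train = metrics.roc_curve(train.bad_ind, train.proba)

plt.figure(figsize=[3, 3])
plt.plot(fpr_test, tpr_test, 'b--', label='test')
plt.plot(fpr_train, tpr_train, 'r-', label='train')
plt.xlabel('False positive rate')
plt.ylabel('True positive rate')
plt.title('ROC curve')
plt.legend()
plt.show()
```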
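Each point on an ROC curve is the (false positive rate, true positive rate) pair at one threshold, and the area under the curve (AUC) equals the probability that a randomly chosen bad loan receives a higher score than a randomly chosen good loan. The sketch below computes AUC from that pairwise definition on toy data (`roc_auc` is a hypothetical helper); it is quadratic in the sample size, so on the real scores `metrics.roc_auc_score(test.bad_ind, test.proba)` or `metrics.auc(fpr_test, tpr_test)` is the practical choice.

```python
def roc_auc(y_true, scores):
    """AUC via its pairwise definition: the share of (bad, good) pairs in which
    the bad loan (label 1) has the higher score; ties count as one half."""
    pos = [s for y, s in zip(y_true, scores) if y == 1]
    neg = [s for y, s in zip(y_true, scores) if y == 0]
    if not pos or not neg:
        raise ValueError("AUC needs at least one bad and one good example")
    wins = 0.0
    for p in pos:
        for n in neg:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(pos) * len(neg))


print(roc_auc([0, 0, 1, 1], [0.1, 0.4, 0.35, 0.8]))  # 0.75
```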

Multiple logistic regression

```python
def forward_select(data, response):
    """Forward stepwise selection for a logistic regression, scored by AIC.

    Starting from an empty model, add the candidate variable that lowers the
    AIC the most; stop when no remaining candidate lowers it any further.
    """
    remaining = set(data.columns)
    remaining.remove(response)
    selected = []
    current_score, best_new_score = float('inf'), float('inf')
    while remaining:
        aic_with_candidates = []
        for candidate in remaining:
            formula = "{} ~ {}".format(response, ' + '.join(selected + [candidate]))
            aic = smf.glm(
                formula=formula, data=data,
                family=sm.families.Binomial()  # logit link is the default
            ).fit().aic
            aic_with_candidates.append((aic, candidate))
        # Sort descending so that pop() returns the candidate with the lowest AIC
        aic_with_candidates.sort(reverse=True)
        best_new_score, best_candidate = aic_with_candidates.pop()
        if current_score > best_new_score:
            remaining.remove(best_candidate)
            selected.append(best_candidate)
            current_score = best_new_score
            print('aic is {},continuing!'.format(current_score))
        else:
            print('forward selection over!')
            break

    formula = "{} ~ {} ".format(response, ' + '.join(selected))
    print('final formula is {}'.format(formula))
    model = smf.glm(
        formula=formula, data=data,
        family=sm.families.Binomial()
    ).fit()
    return model
```
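Stripped of the statsmodels calls, forward selection is a greedy loop over a scoring function. The sketch below isolates that loop with a pluggable score; `forward_select_generic` and `toy_aic` are hypothetical, and `toy_aic` is an invented stand-in for the GLM AIC with made-up per-variable gains, so only the control flow, not the numbers, carries over to the real model.

```python
def forward_select_generic(candidates, score):
    """Greedy forward selection: at each step add the candidate whose inclusion
    gives the lowest score (e.g. AIC); stop when no candidate improves it."""
    remaining = list(candidates)
    selected = []
    current = float("inf")
    while remaining:
        # Score every one-variable extension of the current model
        best_score, best = min((score(selected + [c]), c) for c in remaining)
        if best_score >= current:
            break  # no candidate lowers the score any further
        selected.append(best)
        remaining.remove(best)
        current = best_score
    return selected, current


# Invented additive "AIC": fixed gain per variable, penalty of 2 per variable
gains = {"fico_score": 90.0, "ltv": 40.0, "loan_term": 1.0}


def toy_aic(variables):
    return 2600.0 - sum(gains[v] for v in variables) + 2.0 * len(variables)


print(forward_select_generic(gains, toy_aic))  # (['fico_score', 'ltv'], 2474.0)
```

Here loan_term is rejected because its gain of 1 does not cover the penalty of 2. With the real data, `score` would fit `smf.glm` on the chosen columns and return `.aic`, which is what the inner loop of `forward_select` does.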

Using forward_select for variable selection

```python
candidates = ['bad_ind', 'tot_derog', 'age_oldest_tr', 'tot_open_tr', 'rev_util', 'fico_score',
              'loan_term', 'ltv', 'veh_mileage', 'dti_hist', 'dti_mew', 'fta', 'nth', 'nta']
data_for_select = train[candidates]

lg_m1 = forward_select(data=data_for_select, response='bad_ind')
lg_m1.summary()
```

```
aic is 2539.65259738261,continuing!
aic is 2448.9722277457986,continuing!
aic is 2406.5983198124773,continuing!
aic is 2401.0559077596185,continuing!
aic is 2397.8249140811195,continuing!
aic is 2395.437268476122,continuing!
aic is 2394.181908138009,continuing!
aic is 2393.010378559502,continuing!
forward selection over!
final formula is bad_ind ~ fico_score + ltv + age_oldest_tr + tot_derog + nth + tot_open_tr + veh_mileage + rev_util
```

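Forward selection stopped after eight variables: adding any of the remaining candidates (loan_term, dti_hist, dti_mew, fta, nta) no longer lowered the AIC, so they were left out automatically. AIC-based selection is not a significance test, though, so the p-values of the retained variables in lg_m1.summary() are still worth checking.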
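The selection criterion is AIC = 2k - 2 ln L, where k is the number of estimated coefficients (intercept included) and ln L is the log-likelihood. Adding one variable raises k by 1, so it lowers the AIC only if it increases ln L by more than 1. The sketch computes the AIC of a logistic model from labels and predicted probabilities; `logistic_aic` is a hypothetical helper, and for a fitted statsmodels GLM the same quantity should be available directly as `.aic` (derived from `.llf`).

```python
import math


def logistic_aic(y_true, probs, n_params):
    """AIC = 2k - 2 ln L for a Bernoulli (logistic) model.

    n_params (k) counts every estimated coefficient, intercept included.
    """
    log_lik = sum(
        math.log(p) if y == 1 else math.log(1.0 - p)
        for y, p in zip(y_true, probs)
    )
    return 2 * n_params - 2 * log_lik


# Toy check: two observations, both predicted at 0.5 by an intercept-only model
print(logistic_aic([1, 0], [0.5, 0.5], n_params=1))  # 2 + 4 ln 2, about 4.7726
```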