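GPU Parallel Programming on NVIDIA Cards with C++ and CUDA: Impressed by the Unreal Engine 5 Demo? Let's Build a Ray-Tracing Renderer from Scratch in C++, Plus a Fancier "Minecraft"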

Unreal Engine 5 demo image

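Hello, everyone. On May 13, Epic Games released a demo video of Unreal Engine 5. It can reportedly display hundreds of millions of triangles on screen at once, rendering film-quality images in real time, and its dynamic lighting is extremely realistic. The demo highlights two technologies, Nanite and Lumen. Nanite is virtualized geometry: film-quality art made of hundreds of millions of polygons can be imported straight into the engine, and Nanite geometry is streamed and scaled in real time, so there is no need to budget polygon counts, polygon memory, or draw calls, and no need to bake detail into normal maps or author levels of detail by hand. To me this is a revolutionary leap for computer graphics. Lumen is a fully dynamic global illumination solution that reacts to scene and lighting changes in real time, rendering indirect specular reflections and diffuse light with unlimited bounces in huge, detailed scenes.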

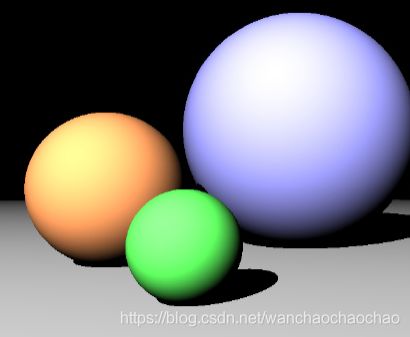

Image produced by the author's own renderer

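This technology is seriously impressive, and it arguably marks the film and game industries' step into the next generation. But it comes from a foreign company, and it would be great if we had something this cool of our own. So, with spare time at home during the pandemic, I tried building a ray-tracing 3D renderer from scratch. First, let me show what my homemade ray tracer produces.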

As the image above shows, my ray tracer handles mirror reflection and transparent refraction, supports many dynamic lights at once together with the dynamic shadows they cast, and lets every ray reflect and refract many times. In the image, the purple sphere shows a mirror reflection, while the transparent sphere hanging in the air shows a refracted, inverted image and casts a round shadow on the ground. The renderer can also place planes anywhere, so you can build whatever virtual world you like, and it can map images onto surfaces as textures: here, da Vinci's Mona Lisa and van Gogh's The Starry Night hang on the virtual walls.

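Finally, to make it easy to build 3D virtual worlds, I wrote a simple data import feature. As shown above, you only need to list each object's position and color in a text file; the program reads the file and renders the scene with ray tracing. For example, writing this line in the txt file,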

Sphere 3 -3 0 0.9 0 0 0 0.5

creates a sphere in the renderer centered at (3, -3, 0), with radius 0.9, no color of its own (0, 0, 0), and reflectivity 0.5.

A Brief Introduction to Ray Tracing

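First, imagine a virtual 3D space in which several light sources emit millions of rays, each toward a different position and angle. When a ray hits an object, the object shows its color. If the surface is reflective, we go on to compute the mirror-reflected ray; if the object is transparent and refracts light, we go on to compute the refracted ray passing through it. Rays can reflect and refract many times, and places that no light reaches end up in shadow. Finally, the reflected and refracted rays enter the camera and form the image on its film.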

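Computing it exactly that way would be far too expensive, since most emitted rays never reach the camera. In practice we trace the light paths in reverse: rays are shot out from the camera film, and when such a view ray hits an object we check whether anything blocks the path between the hit point and the light. If nothing does, the point shows its color; if the object is reflective, we compute what the reflected view ray sees. This approach is called ray tracing. The rough flow of the algorithm is: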

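color trace(ray origin, ray direction){

loop over the objects in the scene and find the nearest intersection

for(each light in the scene){

test whether the segment from the intersection to the light is blocked

if(not blocked)

color += the color this light produces on the object

}

if(n < maxN) // n is the number of bounces so far

color += trace(intersection, reflected or refracted direction)

return color

}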

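The pseudocode above is the rough ray-tracing flow. trace can recurse several times, which is what produces multiple bounces. Finally, the same computation runs for every pixel of the image: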

for pixel(x,y) in image(w,h){

ray origin = camera position

ray direction = direction vector from the camera position through pixel (x,y)

pixel color = trace(origin, direction)

}

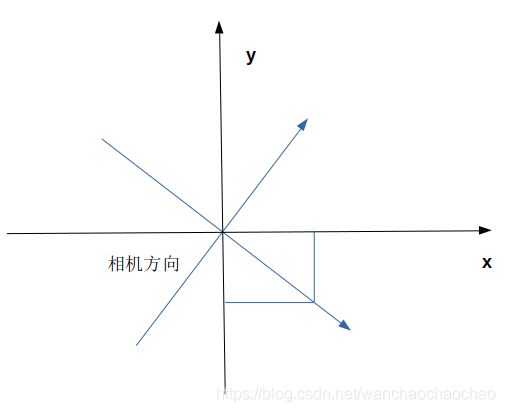

Direction of the Initial Camera Ray

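Suppose a ray leaves the camera and then hits an object. We need the ray's origin and its direction vector; the origin is simply the camera's position.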

The geometry is shown in the figure above. Given the camera's position and direction and the pixel's position (w, h) on the camera film, we want the direction of the ray through that pixel. The formulas are as follows

//position of the pixel on the film, relative to the film center

float w = (x - photo->width/2) * photo->focalScope / photo->width;

float h = (y - photo->height/2) * photo->focalScope / photo->width;

//ray origin

vec startPoint = vec(photo->center), rayDir, color = vec(0, 0, 0);

//compute the ray vector for each pixel; the ray starts at the camera position

vec temp = vec( photo->photoDir ) * photo->focalDistance;

float x1_y1 = sqrtf(temp.x * temp.x + temp.y * temp.y), x1_y1_z1 = temp.norm();

rayDir.z = temp.z + h * x1_y1 / x1_y1_z1;

rayDir.x = (x1_y1 - h * temp.z / x1_y1_z1) * temp.x / x1_y1 + w * temp.y / x1_y1;

rayDir.y = (x1_y1 - h * temp.z / x1_y1_z1) * temp.y / x1_y1 - w * temp.x / x1_y1;
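One way to read these formulas: they add `w` times a horizontal unit vector and `h` times a vertical unit vector to the scaled viewing direction. The CPU-side sketch below spells that out. `Vec3` and `pixelRayDir` are names made up for this illustration; expanding the sum gives the same expressions as above, and like them it assumes the camera is not looking straight up or down (otherwise `xy` is zero and the division fails).

```cpp
#include <cmath>

struct Vec3 { float x, y, z; };

// Direction of the ray through pixel (px, py), written as
// filmCenter + w * right + h * up.
Vec3 pixelRayDir(const Vec3 &photoDir, float focalDistance, float focalScope,
                 float width, float height, float px, float py) {
    // Offsets of the pixel from the film center, in world units.
    float w = (px - width / 2) * focalScope / width;
    float h = (py - height / 2) * focalScope / width;
    // Vector from the camera position to the film center.
    Vec3 t{photoDir.x * focalDistance, photoDir.y * focalDistance,
           photoDir.z * focalDistance};
    float xy = std::sqrt(t.x * t.x + t.y * t.y);
    float xyz = std::sqrt(t.x * t.x + t.y * t.y + t.z * t.z);
    // Unit vectors spanning the film plane: horizontal and vertical.
    Vec3 right{t.y / xy, -t.x / xy, 0.0f};
    Vec3 up{-t.z * t.x / (xy * xyz), -t.z * t.y / (xy * xyz), xy / xyz};
    return Vec3{t.x + w * right.x + h * up.x,
                t.y + w * right.y + h * up.y,
                t.z + w * right.z + h * up.z};
}
```

With the camera looking along +x, the center pixel maps to the viewing direction itself, pixels further right tilt the ray toward -y, and pixels with larger `py` tilt it toward +z.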

Moving the Camera and Turning the View

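In a virtual-reality-style game, the player needs to move forward, backward, left, and right, and to look up, down, left, and right. In PUBG (PlayerUnknown's Battlegrounds), for example, the WASD keys move the player and the mouse turns the view.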

The figure above shows the geometry of moving the camera; the computation is as follows

//move forward

photo->center.x += photoSpeed*photo->photoDir.x;

photo->center.y += photoSpeed*photo->photoDir.y;

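//move right (the viewing direction rotated a quarter turn in the horizontal plane, as in the D-key case of keyFunc())

photo->center.x += photoSpeed*photo->photoDir.y;

photo->center.y -= photoSpeed*photo->photoDir.x;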

Turning the camera works much like computing the ray direction through a pixel. The computation is as follows

//x, y are the mouse position on the screen

//compute how far to turn the camera from the mouse position

float dx = -(DIM_W/2 - (float)x)/4;//DIM_W, DIM_H are the window resolution

float dy = -((float)y - DIM_H/2)/4;

Photo *photo = ((DataBlock *)dataBlock)->cpu_photo;

float w = dx * photo->focalScope / photo->width;

float h = dy * photo->focalScope / photo->width;

float x1 = photo->photoDir.x * photo->focalDistance;

float y1 = photo->photoDir.y * photo->focalDistance;

float z1 = photo->photoDir.z * photo->focalDistance;

float x1_y1 = sqrtf(x1 * x1 + y1 * y1);

float x1_y1_z1 = sqrtf(x1 * x1 + y1 * y1 + z1 * z1);

float dir_z = z1 + h * x1_y1 / x1_y1_z1;

float dir_x = (x1_y1 - h * z1 / x1_y1_z1) * x1 / x1_y1 + w * y1 / x1_y1;

float dir_y = (x1_y1 - h * z1 / x1_y1_z1) * y1 / x1_y1 - w * x1 / x1_y1;

float norm = sqrtf(dir_x * dir_x + dir_y * dir_y + dir_z * dir_z);

photo->photoDir.x = dir_x / norm;

photo->photoDir.y = dir_y / norm;

photo->photoDir.z = dir_z / norm;

Ray Tracing a Sphere

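Let's start with the simplest case: the 3D space contains only a few spheres. How do we ray trace them? Going back to the trace function above: given a ray's origin and direction, we test whether the ray hits each sphere. If it hits several, we compute the hit distance for each one, that is, the distance from the ray origin to the hit point, take the sphere with the shortest distance, and add that sphere's surface color.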

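First implement a class Sphere whose members record the sphere's position and color, then define a member function hit() that tests whether a ray hits the sphere and returns the distance and the color value. Note that the color value depends not only on the sphere's own surface color but also on the light's angle of incidence: its intensity scales with the cosine of that angle.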

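To test whether a ray hits the sphere, first compute the distance from the sphere's center to the ray. If that distance is less than the radius, the ray strikes the sphere's surface.

The geometry of the ray and the sphere is shown in the figure above; the computation is as follows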

vec a = center - startPoint;

float norm_a = a.norm(), cos_a_b = rayDir.cos(a), sin_a_b = sqrtf(1-cos_a_b*cos_a_b);

float d = norm_a * sin_a_b;

if(cos_a_b >= 0 && d <= radius){

float Distance = norm_a*cos_a_b - sqrtf(radius*radius - d*d);//distance from the ray origin to the hit point (segment PM in the figure above)

}
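The same test can be written without the explicit sine and cosine by projecting the vector to the center onto the ray. The sketch below is a CPU-side illustration with hypothetical names (`Vec3`, `dot`, `hitSphere`), not the renderer's own hit(). Like the fragment above, it assumes the ray starts outside the sphere.

```cpp
#include <cmath>
#include <limits>

struct Vec3 { float x, y, z; };

static float dot(const Vec3 &a, const Vec3 &b) {
    return a.x * b.x + a.y * b.y + a.z * b.z;
}

// Distance from `start` to the first point where the ray hits the sphere,
// or +infinity if it misses. `dir` does not need to be normalized.
float hitSphere(const Vec3 &start, const Vec3 &dir, const Vec3 &center, float radius) {
    const float inf = std::numeric_limits<float>::infinity();
    Vec3 a{center.x - start.x, center.y - start.y, center.z - start.z};
    // Length of a projected onto the ray: |a| * cos(angle between a and dir).
    float proj = dot(a, dir) / std::sqrt(dot(dir, dir));
    // Squared distance from the sphere center to the ray (Pythagoras).
    float d2 = dot(a, a) - proj * proj;
    // Sphere is behind the origin, or the ray passes beside it.
    if (proj < 0.0f || d2 > radius * radius) return inf;
    // Step back half a chord from the point of closest approach.
    return proj - std::sqrt(radius * radius - d2);
}
```

For a ray from the origin along +x and a unit sphere centered at (5, 0, 0), the projection is 5 and the half chord is 1, so the hit distance is 4.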

With sphere ray tracing in place, the renderer can already produce a simple image like the one below

Ray Tracing a Rectangular Plane

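If a plane is infinite, a ray hits it whenever the two are not parallel. A bounded rectangle additionally needs a check that the hit point lies inside its boundary; if it does, the ray hits the rectangle. Take the simplest case, a rectangle parallel to the xOy plane: create a class Plane and implement its member function hit().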

distance = ((point1.z - startPoint.z) / rayDir.z) * norm_b;//hit distance

vec hitPoint = startPoint + rayDir * (distance / norm_b);//hit point

if(hitPoint.x>=point1.x-eps && hitPoint.x<=point2.x+eps && hitPoint.y>=point1.y-eps && hitPoint.y<=point2.y+eps && hitPoint.z>=point1.z-eps && hitPoint.z<=point2.z+eps){

return distance;//the hit point is inside the boundary, so return the hit distance

}
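Here is the same idea as a small CPU-side function, with the parallel and behind-the-origin cases made explicit. `Vec3` and `hitRectZ` are hypothetical names for this sketch, which only handles a rectangle parallel to the xOy plane whose corners satisfy p1.x <= p2.x and p1.y <= p2.y.

```cpp
#include <cmath>
#include <limits>

struct Vec3 { float x, y, z; };

// Hit test for a rectangle lying in the plane z = p1.z and spanning
// [p1.x, p2.x] x [p1.y, p2.y]. Returns the hit distance or +infinity.
float hitRectZ(const Vec3 &start, const Vec3 &dir, const Vec3 &p1, const Vec3 &p2) {
    const float inf = std::numeric_limits<float>::infinity();
    const float eps = 1e-5f;
    if (dir.z == 0.0f) return inf;        // ray is parallel to the plane
    float t = (p1.z - start.z) / dir.z;   // ray parameter: hit = start + t * dir
    if (t < 0.0f) return inf;             // plane is behind the ray origin
    float hx = start.x + t * dir.x;
    float hy = start.y + t * dir.y;
    bool inside = hx >= p1.x - eps && hx <= p2.x + eps &&
                  hy >= p1.y - eps && hy <= p2.y + eps;
    if (!inside) return inf;              // hits the plane but outside the rectangle
    float len = std::sqrt(dir.x * dir.x + dir.y * dir.y + dir.z * dir.z);
    return t * len;                       // convert the parameter to a distance
}
```

A ray fired straight down from (0, 0, 5) onto the square from (-1, -1, 0) to (1, 1, 0) hits at distance 5, while the same ray started at (3, 0, 5) misses because the hit point falls outside the boundary.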

Mirror Reflection

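When a ray hits a surface, we modify the ray in place: its origin becomes the hit point, and its new direction is the reflection of the old one about the surface normal at the hit point.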

The geometry of mirror reflection is shown in the figure above. If the ray direction is b and the surface normal is c, the reflected direction is $b - 2c\,\dfrac{b\cdot c}{|c|^2}$. The computation is as follows

vec c = startPoint - this->center;

float norm_c = c.norm();

*rayDir -= c * (2*rayDir->dot(c) / (norm_c * norm_c));

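In code, extending trace() to reflection only takes a recursive call to trace() from inside trace(). Three levels of recursion mean the light can bounce three times. With mirror reflection implemented, the renderer produces images like the one below, where the spheres and the ground both show two levels of mirror reflection.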

Transparent Refraction

When a ray hits a transparent object it is refracted, which bends its direction, so we need to compute the direction after refraction.

Let the ray direction be b and the surface normal at the hit point be c. First compute h, the component of b perpendicular to c, then use the refractive index to get the refracted direction. Assume the object is glass, with a refractive index of 1.5. For a unit-length b and an outward-pointing normal c, with α the angle of incidence and β the angle of refraction:

$$h = b - c\,\frac{b\cdot c}{|c|^2}$$

$$\sin\alpha = \sqrt{1-\left(\frac{b\cdot c}{|b|\,|c|}\right)^2}$$

$$\sin\beta = \frac{\sin\alpha}{1.5},\qquad b' = \frac{h}{1.5} - \frac{c}{|c|}\cos\beta$$

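The computation is shown below. To keep things simple, it computes both refractions at once and returns the ray after it has passed all the way through the transparent object.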

//first refraction (entering the sphere)

vec c = (*startPoint - center).normalize();// * (1 / radius);//axis of symmetry for the refracted ray (the surface normal)

float dot_b_c_with_norm_b = rayDir->dot(c);

vec h = *rayDir - c * dot_b_c_with_norm_b;

float cos_b_c = dot_b_c_with_norm_b / rayDir->norm();

float sin_b_c = sqrtf(abs(1 - cos_b_c * cos_b_c));

float cos_with_refraction = sqrtf(abs(1 - pow(sin_b_c/refractionRate,2)));

*rayDir = -c * cos_with_refraction + h * (1 / refractionRate);

//second hit, after the first refraction

float distance = 2 * radius * cos_with_refraction;//distance the ray travels inside the sphere before it hits the surface again

*startPoint += *rayDir * (distance / rayDir->norm());

//second refraction (leaving the sphere)

c = (*startPoint - center).normalize();// * (1 / radius);//axis of symmetry for the refracted ray (the surface normal)

dot_b_c_with_norm_b = rayDir->dot(c);

h = *rayDir - c * dot_b_c_with_norm_b;

cos_b_c = dot_b_c_with_norm_b / rayDir->norm();

sin_b_c = sqrtf(abs(1 - cos_b_c * cos_b_c));

cos_with_refraction = sqrtf(abs(1 - pow(sin_b_c*refractionRate,2)));

*rayDir = c * cos_with_refraction + h * (1 * refractionRate);
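For comparison, a single refraction step can be written in the vector form of Snell's law. This is a standalone sketch, not the renderer's refraction(): the names `Vec3`, `dot`, `normalized`, and `refract` are made up here, `eta` is the ratio of refractive indices (1/1.5 when entering glass from air, 1.5 when leaving), and `c` is assumed to be the normal on the side the ray arrives from. The sketch normalizes b and c first, because the split into a tangential part scaled by `eta` and a normal part of length cos β only holds for unit vectors.

```cpp
#include <cmath>

struct Vec3 { float x, y, z; };

static float dot(const Vec3 &a, const Vec3 &b) {
    return a.x * b.x + a.y * b.y + a.z * b.z;
}

static Vec3 normalized(const Vec3 &v) {
    float n = std::sqrt(dot(v, v));
    return Vec3{v.x / n, v.y / n, v.z / n};
}

// Refracts direction bIn at a surface with normal cIn (facing the incoming ray).
// eta = n_from / n_to. Writes a unit vector to `out`.
// Returns false on total internal reflection, leaving `out` untouched.
bool refract(const Vec3 &bIn, const Vec3 &cIn, float eta, Vec3 &out) {
    Vec3 b = normalized(bIn);
    Vec3 c = normalized(cIn);
    float cosI = -dot(b, c);                         // cosine of the angle of incidence
    float sin2T = eta * eta * (1.0f - cosI * cosI);  // Snell: sin(beta) = eta * sin(alpha)
    if (sin2T > 1.0f) return false;                  // no refracted ray exists
    float cosT = std::sqrt(1.0f - sin2T);
    float k = eta * cosI - cosT;                     // equals h * eta - c * cosT after expanding h
    out = Vec3{eta * b.x + k * c.x, eta * b.y + k * c.y, eta * b.z + k * c.z};
    return true;
}
```

At normal incidence the ray passes straight through. At 45 degrees into glass the tangential component shrinks from sin 45° to sin 45° / 1.5, and a ray leaving glass at 60 degrees from the normal is totally internally reflected.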

With refraction added, the renderer produces the effect shown by the transparent sphere in the image below,

Main Function

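Ray tracing is very computationally expensive. To move around in real time with keyboard and mouse, the renderer has to produce many frames per second, which demands even more compute. At 1920*1080 and 60 frames per second, the device has to shade about 124 million pixels per second. A typical low- or mid-range CPU would struggle with that, while a mid-range GPU can manage it. So I took a second-hand mining card that cost 400 yuan and wrote the program from scratch in C++, calling CUDA to do the computation on the GPU.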
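The pixel budget is a one-line calculation, sketched here with a hypothetical helper `pixelsPerSecond`. It counts only one primary ray per pixel; every reflection or refraction bounce adds more work on top.

```cpp
// Pixels that must be shaded per second at a given resolution and frame rate.
// The multiplication is done in 64-bit to stay clear of int overflow.
constexpr long long pixelsPerSecond(int width, int height, int fps) {
    return static_cast<long long>(width) * height * fps;
}

// 1920 * 1080 = 2,073,600 pixels per frame; times 60 frames = 124,416,000.
static_assert(pixelsPerSecond(1920, 1080, 60) == 124416000LL,
              "about 124 million primary rays per second");
```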

DataBlock data;

//copy the data to the GPU device

HANDLE_ERROR( cudaMalloc( (void**)&data.sphere, sizeof(Sphere)*sphereNum ) );

HANDLE_ERROR( cudaMemcpy( data.sphere, data.cpu_sphere, sizeof(Sphere)*sphereNum, cudaMemcpyHostToDevice ) );

HANDLE_ERROR( cudaMalloc( (void**)&data.ground, sizeof(Ground)*groundNum ) );

HANDLE_ERROR( cudaMemcpy( data.ground, data.cpu_ground, sizeof(Ground)*groundNum, cudaMemcpyHostToDevice ) );

HANDLE_ERROR( cudaMalloc( (void**)&data.plane, sizeof(Plane)*planeNum ) );

HANDLE_ERROR( cudaMemcpy( data.plane, data.cpu_plane, sizeof(Plane)*planeNum, cudaMemcpyHostToDevice ) );

HANDLE_ERROR( cudaMalloc( (void**)&data.light, sizeof(Light)*lightNum ) );

HANDLE_ERROR( cudaMemcpy( data.light, data.cpu_light, sizeof(Light)*lightNum, cudaMemcpyHostToDevice ) );

HANDLE_ERROR( cudaMalloc( (void**)&data.photo, sizeof(Photo) ) );

HANDLE_ERROR( cudaMemcpy( data.photo, data.cpu_photo, sizeof(Photo), cudaMemcpyHostToDevice ) );

HANDLE_ERROR( cudaMalloc( (void**)&data.image, sizeof(Image)*imageNum ) );

HANDLE_ERROR( cudaMemcpy( data.image, data.cpu_image,sizeof(Image)*imageNum, cudaMemcpyHostToDevice ) );

//set up the OpenGL window and the ray tracer

GPUAnimBitmap bitmap( DIM_W, DIM_H, &data );

bitmap.passive_motion( (void (*)(void*,int,int))point );

bitmap.key_func( (void (*)(unsigned char,int,int,void*))keyFunc );

bitmap.anim_and_exit(

(void (*)(uchar4*,void*,int))generate_frame, (void (*)(void *))exit_func );

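The main function fills in all the spheres, planes, and other data, stores them in DataBlock data, and then copies them to the GPU, where they live in device global memory. The last few lines register the OpenGL window callbacks.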

Mouse Lock Function

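The bitmap.passive_motion callback calls the point function, which handles mouse movement: whenever the mouse moves, it receives the new cursor position. I wanted more than plain cursor movement, though. As in PUBG, the mouse should have two states. In the default state the cursor arrow is visible and can leave the window, and the camera does not turn. Pressing a key (Tab, say; the code below uses key code 96, the backtick key) switches to the locked state: the arrow disappears, the cursor cannot leave the window, and the camera can keep turning in one direction indefinitely. The default state comes for free, so a boolean, isLockCursorInWindow, tracks which state is active. To implement the locked state, first hide the cursor, then keep forcing it back to the window center so it can never leave, and on every move event take the cursor position minus the window center as the displacement. The code is as follows,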

void point(void *dataBlock, int x, int y){

if(((DataBlock *)dataBlock)->isLockCursorInWindow){

if((x-DIM_W/2) != 0 || (y-DIM_H/2) != 0){

float dx = -(DIM_W/2 - (float)x)/4;

float dy = -((float)y - DIM_H/2)/4;

Photo *photo = ((DataBlock *)dataBlock)->cpu_photo;

float w = dx * photo->focalScope / photo->width;

float h = dy * photo->focalScope / photo->width;

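//rotate the camera direction using the same construction as the per-pixel ray, with the mouse offset in place of the pixel offset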

float x1 = photo->photoDir.x * photo->focalDistance;

float y1 = photo->photoDir.y * photo->focalDistance;

float z1 = photo->photoDir.z * photo->focalDistance;

float x1_y1 = sqrtf(x1 * x1 + y1 * y1);

float x1_y1_z1 = sqrtf(x1 * x1 + y1 * y1 + z1 * z1);

float dir_z = z1 + h * x1_y1 / x1_y1_z1;

float dir_x = (x1_y1 - h * z1 / x1_y1_z1) * x1 / x1_y1 + w * y1 / x1_y1;

float dir_y = (x1_y1 - h * z1 / x1_y1_z1) * y1 / x1_y1 - w * x1 / x1_y1;

float norm = sqrtf(dir_x * dir_x + dir_y * dir_y + dir_z * dir_z);

photo->photoDir.x = dir_x / norm;

photo->photoDir.y = dir_y / norm;

photo->photoDir.z = dir_z / norm;

glutWarpPointer(DIM_W/2,DIM_H/2);

HANDLE_ERROR( cudaMemcpy( ((DataBlock *)dataBlock)->photo, ((DataBlock *)dataBlock)->cpu_photo, sizeof(Photo), cudaMemcpyHostToDevice ) );

}

}

}

Keyboard Movement Function

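The bitmap.key_func() callback registered in the main function calls keyFunc(). This one is simple: whenever a key is pressed, the callback receives its key code, and a switch statement defines what each key does. The code below implements forward, backward, left, and right movement on the WASD keys.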

void keyFunc(unsigned char key, int x, int y, void *dataBlock){

//cout<<"key pressed: "<<key<<", key code: ";printf("%d\n",key);

float photoSpeed = 0.2;

switch (key){

case '`':{//key code 96: toggle the cursor lock

if (((DataBlock *)dataBlock)->isLockCursorInWindow == false){

glutSetCursor(GLUT_CURSOR_NONE);

}

else{

glutSetCursor(GLUT_CURSOR_LEFT_ARROW);

}

((DataBlock *)dataBlock)->isLockCursorInWindow = !(((DataBlock *)dataBlock)->isLockCursorInWindow);

break;

}

case 'w':{//key code 119: move forward

((DataBlock *)dataBlock)->cpu_photo->center.x += photoSpeed*((DataBlock *)dataBlock)->cpu_photo->photoDir.x;

((DataBlock *)dataBlock)->cpu_photo->center.y += photoSpeed*((DataBlock *)dataBlock)->cpu_photo->photoDir.y;

HANDLE_ERROR( cudaMemcpy( ((DataBlock *)dataBlock)->photo, ((DataBlock *)dataBlock)->cpu_photo, sizeof(Photo), cudaMemcpyHostToDevice ) );

break;

}

case 's':{//key code 115: move backward

((DataBlock *)dataBlock)->cpu_photo->center.x -= photoSpeed*((DataBlock *)dataBlock)->cpu_photo->photoDir.x;

((DataBlock *)dataBlock)->cpu_photo->center.y -= photoSpeed*((DataBlock *)dataBlock)->cpu_photo->photoDir.y;

HANDLE_ERROR( cudaMemcpy( ((DataBlock *)dataBlock)->photo, ((DataBlock *)dataBlock)->cpu_photo, sizeof(Photo), cudaMemcpyHostToDevice ) );

break;

}

case 'a':{//key code 97: move left

((DataBlock *)dataBlock)->cpu_photo->center.x -= photoSpeed*((DataBlock *)dataBlock)->cpu_photo->photoDir.y;

((DataBlock *)dataBlock)->cpu_photo->center.y -= -photoSpeed*((DataBlock *)dataBlock)->cpu_photo->photoDir.x;

HANDLE_ERROR( cudaMemcpy( ((DataBlock *)dataBlock)->photo, ((DataBlock *)dataBlock)->cpu_photo, sizeof(Photo), cudaMemcpyHostToDevice ) );

break;

}

case 'd':{//key code 100: move right

((DataBlock *)dataBlock)->cpu_photo->center.x += photoSpeed*((DataBlock *)dataBlock)->cpu_photo->photoDir.y;

((DataBlock *)dataBlock)->cpu_photo->center.y += -photoSpeed*((DataBlock *)dataBlock)->cpu_photo->photoDir.x;

HANDLE_ERROR( cudaMemcpy( ((DataBlock *)dataBlock)->photo, ((DataBlock *)dataBlock)->cpu_photo, sizeof(Photo), cudaMemcpyHostToDevice ) );

break;

}

}

}

GPU Launch Function

The bitmap.anim_and_exit() callback registered in the main function calls generate_frame(), which is the core routine that has the GPU do the ray tracing. The code is as follows,

void generate_frame( uchar4 *pixels, void *dataBlock, int ticks ) {

dim3 grids(DIM_W/16,DIM_H/16);

dim3 threads(16,16);

kernel_3D<<<grids,threads>>>( pixels, ((DataBlock *)dataBlock)->sphere, ((DataBlock *)dataBlock)->ground, ((DataBlock *)dataBlock)->plane, ((DataBlock *)dataBlock)->photo, ((DataBlock *)dataBlock)->light, ((DataBlock *)dataBlock)->image );

}

generate_frame() uses grids and threads to define the grid and block dimensions, then launches the kernel_3D() ray-tracing kernel, which runs on the GPU.
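The launch above divides the resolution by 16 with integer division, which covers the whole image only when both dimensions are multiples of 16. The values of DIM_W and DIM_H are not shown in this article, so as a hedge, here is the usual round-up form. `blocksFor` is a hypothetical helper name.

```cpp
// Number of blocks needed to cover `pixels` with `threadsPerBlock` threads each,
// rounding up so that a partial block at the edge is still launched.
constexpr int blocksFor(int pixels, int threadsPerBlock) {
    return (pixels + threadsPerBlock - 1) / threadsPerBlock;
}

// Exact multiple: same result as plain division.
static_assert(blocksFor(960, 16) == 60, "960 / 16 = 60 blocks");
// Not a multiple: 1080 / 16 truncates to 67, which would leave 8 rows unrendered.
static_assert(blocksFor(1080, 16) == 68, "68 blocks cover all 1080 rows");
```

If the grid is rounded up like this, the kernel also needs a guard such as `if (x >= width || y >= height) return;` for the threads that fall outside the image, and the pixel offset should be computed from the image width rather than from `blockDim.x * gridDim.x`.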

GPU Kernel

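The kernel_3D() kernel defines what each GPU thread computes. It first reads the thread's block ID and thread ID, maps them to a pixel coordinate in the image, computes the direction of the ray through that pixel, and finally calls the ray-tracing function. The code is as follows,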

__global__ void kernel_3D( uchar4 *ptr, Sphere *sphere, Ground * ground, Plane *plane, Photo *photo, Light *light, Image *image ){

//map threadIdx/blockIdx to a pixel position

float x = threadIdx.x + blockIdx.x * blockDim.x;

float y= threadIdx.y + blockIdx.y * blockDim.y;

int offset = x + y * blockDim.x * gridDim.x;

//position of the pixel on the film, relative to the film center

float w = (x - photo->width/2) * photo->focalScope / photo->width;

float h = (y - photo->height/2) * photo->focalScope / photo->width;

//ray origin

vec startPoint = vec(photo->center), rayDir, color = vec(0, 0, 0);

//compute the ray vector for each pixel; the ray starts at the camera position

vec temp = vec( photo->photoDir ) * photo->focalDistance;

float x1_y1 = sqrtf(temp.x * temp.x + temp.y * temp.y), x1_y1_z1 = temp.norm();

rayDir.z = temp.z + h * x1_y1 / x1_y1_z1;

rayDir.x = (x1_y1 - h * temp.z / x1_y1_z1) * temp.x / x1_y1 + w * temp.y / x1_y1;

rayDir.y = (x1_y1 - h * temp.z / x1_y1_z1) * temp.y / x1_y1 - w * temp.x / x1_y1;

int depth = 0;//current recursion depth; the recursion stops at MAXDEPTH

hitAllObject( light, sphere, ground, plane, &startPoint, &rayDir, &color, image, depth );

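ptr[offset].x = (unsigned char)(fminf(color.x, 1.0f) * 255);//pixel value, clamped because contributions from several lights and bounces can add up to more than 1

ptr[offset].y = (unsigned char)(fminf(color.y, 1.0f) * 255);

ptr[offset].z = (unsigned char)(fminf(color.z, 1.0f) * 255);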

ptr[offset].w = 255;

}

Ray-Tracing Function

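The kernel above calls the ray-tracing function hitAllObject(). Given a ray's origin and direction, it computes the color produced where the ray lands in the scene, and it is recursive so that it can support reflection and refraction. It loops over all objects to find the hit point, checks whether each light can reach that point and, if so, adds the diffuse color. It then checks whether the object reflects or refracts; if it does, it updates the ray's origin and direction to the reflected or refracted ray and calls itself again. The code is as follows,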

__device__ void hitAllObject(Light *light, Sphere *sphere, Ground *ground, Plane *plane, vec *startPoint, vec *rayDir, vec *color, Image *image, int depth, float reflRate=1 ){

float distance, minDistance = INF, colorScale = 0;

int indexHitSphere = -1, indexHitGround = -1, indexHitPlane = -1;

bool isShadow = false;

//spheres

for(int i = 0; i < sphere->num; i++){

distance = sphere[i].hit( *startPoint, *rayDir, &colorScale );

if(distance < minDistance){

minDistance = distance;

indexHitSphere = i;

}

}

//ground

for(int i = 0; i < ground->num; i++){

distance = ground[i].hit( *startPoint, *rayDir, &colorScale );

if(distance < minDistance){

minDistance = distance*(1 - 1e-5f);

indexHitGround = i;

}

}

//rectangular planes

for(int i = 0; i < plane->num; i++){

distance = plane[i].hit( *startPoint, *rayDir, &colorScale );

if(distance < minDistance){

minDistance = distance*(1 - 1e-5f);

indexHitPlane = i;

}

}

//the ray hit something

if(minDistance < INF){

float dir_norm_minDistance = minDistance / rayDir->norm();

*startPoint += (*rayDir) * dir_norm_minDistance;

//diffuse lighting from the light sources

vec hitLightDir;

//printf("%d ",light->num);

for(int i = 0; i < light->num; i++){

//loop over all light sources

isShadow = false;

hitLightDir = light[i].center - (*startPoint);

float norm_hitLightDir = hitLightDir.norm();

for(int j = 0; j < sphere->num; j++){

distance = sphere[j].hit( *startPoint, hitLightDir, &colorScale );

if(distance < norm_hitLightDir){

isShadow = true;}

}

for(int j = 0; j < plane->num; j++){

distance = plane[j].hit( *startPoint, hitLightDir, &colorScale );

if(distance < norm_hitLightDir){

isShadow = true;}

}

//for(int j = 0; j < 1; j++){

// distance = ground[j].hit( xHit, yHit, zHit, dir_xHit, dir_yHit, dir_zHit, &n );

//}

if(!isShadow){

vec color0 = vec(0.8,0,0);

colorScale = 0;//reset to 0; otherwise a leftover value shows up as a thin white outline

if(indexHitPlane != -1){

distance = plane[indexHitPlane].hit( light[i].center, -hitLightDir, &colorScale );

color0 = plane[indexHitPlane].color * reflRate;

plane[indexHitPlane].colorImage(*rayDir, *startPoint, &color0, image);

}

else if(indexHitGround != -1){

distance = ground[indexHitGround].hit( light[i].center, -hitLightDir, &colorScale );

color0 = ground[indexHitGround].color * reflRate;}

else if(indexHitSphere != -1){

distance = sphere[indexHitSphere].hit( light[i].center, -hitLightDir, &colorScale );

color0 = sphere[indexHitSphere].color * reflRate;}

*color += ((color0 * colorScale) ^ light[i].color);

}

}

//compute the ray direction after reflection

if(indexHitPlane != -1){

plane[indexHitPlane].refl(rayDir);

reflRate *= plane[indexHitPlane].reflRate;//update the reflectivity carried by the recursive ray

}

else if(indexHitGround != -1){

ground[indexHitGround].refl(rayDir);

reflRate *= ground[indexHitGround].reflRate;//update the reflectivity carried by the recursive ray

}

else if(indexHitSphere != -1){

if(indexHitSphere == 3 || indexHitSphere == 1){//spheres 1 and 3 are hard-coded as the transparent (refracting) ones

sphere[indexHitSphere].refraction(startPoint, rayDir);

}

else{

sphere[indexHitSphere].refl(*startPoint, rayDir);

}

reflRate *= sphere[indexHitSphere].reflRate;//update the reflectivity carried by the recursive ray

}

//after a hit, recurse into the next bounce until the maximum depth is reached

if(depth < MAXDEPTH){

depth += 1;

hitAllObject( light, sphere, ground, plane, startPoint, rayDir, color, image, depth, reflRate );

}

}

}
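The reflRate parameter is what makes deeper bounces fainter: the color picked up at each hit is scaled by the product of the reflectivities of all surfaces the ray bounced off before it. The sketch below isolates just that bookkeeping on the CPU. `bounceWeights` is a hypothetical name, and the input is simply the list of reflectivities along one ray path.

```cpp
#include <vector>

// reflRates[k] is the reflectivity of the surface hit at bounce k.
// Returns the weight applied to the color gathered at each hit,
// mirroring how hitAllObject() scales color0 by the running reflRate.
std::vector<float> bounceWeights(const std::vector<float> &reflRates) {
    std::vector<float> weights;
    float w = 1.0f;              // the first hit is seen directly
    for (float r : reflRates) {
        weights.push_back(w);    // weight of the color picked up at this hit
        w *= r;                  // later hits are seen through this surface
    }
    return weights;
}
```

For three surfaces with reflectivity 0.5 each, the hits contribute with weights 1, 0.5, and 0.25, so the third bounce adds only a quarter of its color to the pixel.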

Sphere Class

Different objects can reflect and refract differently, so reflection and refraction are defined directly in each object's class. The code is as follows,

struct Sphere{

vec center, color;int num;

float radius, reflRate = 1, refractionRate = 1.5;//reflectivity and refractive index

Sphere(){

}

void setSphere(const vec &m_center, const float &m_radius, const vec &m_color){

center=m_center, radius=m_radius, color=m_color;}

//ray hit test

__device__ float hit( const vec &startPoint, const vec &rayDir, float *n ){

vec a = center - startPoint;

float norm_a = a.norm(), cos_a_b = rayDir.cos(a), sin_a_b = sqrtf(1-cos_a_b*cos_a_b);

float d = norm_a * sin_a_b;

if(cos_a_b >= 0 && d <= radius){

*n = sqrtf(radius*radius-d*d) / radius;

float Distance = norm_a*cos_a_b - sqrtf(radius*radius - d*d);

return Distance;

}

return INF;

}

//ray reflection

__device__ void refl(const vec &startPoint, vec *rayDir){

vec c = startPoint - this->center;

float norm_c = c.norm(), dot_b_c_devide_norm_c = rayDir->dot(c) / (norm_c * norm_c);

*rayDir -= c * (2*dot_b_c_devide_norm_c);

}

//ray refraction; computes the ray after both refractions at once

__device__ void refraction(vec *startPoint, vec *rayDir){

//first refraction (entering the sphere), same steps as in the refraction section above

vec c = (*startPoint - center).normalize();//outward surface normal at the hit point

float dot_b_c_with_norm_b = rayDir->dot(c);

vec h = *rayDir - c * dot_b_c_with_norm_b;

float cos_b_c = dot_b_c_with_norm_b / rayDir->norm();

float sin_b_c = sqrtf(abs(1 - cos_b_c * cos_b_c));

float cos_with_refraction = sqrtf(abs(1 - pow(sin_b_c/refractionRate,2)));

*rayDir = -c * cos_with_refraction + h * (1 / refractionRate);

//second hit, after the first refraction

float distance = 2 * radius * cos_with_refraction;//distance the ray travels inside the sphere before it hits the surface again

*startPoint += *rayDir * (distance / rayDir->norm());

//second refraction (leaving the sphere)

c = (*startPoint - center).normalize();//outward surface normal at the exit point

dot_b_c_with_norm_b = rayDir->dot(c);

h = *rayDir - c * dot_b_c_with_norm_b;

cos_b_c = dot_b_c_with_norm_b / rayDir->norm();

sin_b_c = sqrtf(abs(1 - cos_b_c * cos_b_c));

cos_with_refraction = sqrtf(abs(1 - pow(sin_b_c*refractionRate,2)));

*rayDir = c * cos_with_refraction + h * (1 * refractionRate);

}

};

Rectangular Plane Class

struct Plane{

//axis-aligned rectangular plane

vec point1, point2, color;//the rectangle is defined by two opposite corners

float reflRate = 1;int indexImage = -1;int num;

Plane(){

}

void setPlane(const vec &m_point1, const vec &m_point2, const vec &m_color, float m_reflRate=1, int m_indexImage=-1){

point1 = m_point1, point2 = m_point2, color = m_color, reflRate = m_reflRate, indexImage = m_indexImage;}

__device__ float hit(const vec &startPoint, const vec &rayDir, float *n){

float norm_b = rayDir.norm(), distance = -1.0f, eps = 1e-5f;//distance stays negative (a miss) if the plane is not axis-aligned

if(point1.z == point2.z){

*n = abs(rayDir.z / norm_b);distance = ((point1.z - startPoint.z) / rayDir.z) * norm_b;}

else if(point1.y == point2.y){

*n = abs(rayDir.y / norm_b);distance = ((point1.y - startPoint.y) / rayDir.y) * norm_b;}

else if(point1.x == point2.x){

*n = abs(rayDir.x / norm_b);distance = ((point1.x - startPoint.x) / rayDir.x) * norm_b;}

if(distance >= 0){

vec hitPoint = startPoint + rayDir * (distance / norm_b);

if(hitPoint.x>=point1.x-eps && hitPoint.x<=point2.x+eps && hitPoint.y>=point1.y-eps && hitPoint.y<=point2.y+eps && hitPoint.z>=point1.z-eps && hitPoint.z<=point2.z+eps){

return distance;}}

return INF;

}

//ray reflection

__device__ void refl(vec *rayDir){

if(point1.z == point2.z){

rayDir->z = -rayDir->z;}

if(point1.y == point2.y){

rayDir->y = -rayDir->y;}

if(point1.x == point2.x){

rayDir->x = -rayDir->x;}

}

};

Ground, Light, and Camera Classes

struct Ground{

float z = 0, reflRate = 1;//reflectivity

int num;

vec color;

Ground(){

}

void setGround(const float &m_z, const vec &m_color, float m_reflRate = 1){

z = m_z, color = m_color, reflRate = m_reflRate;}

//ray hit test

__device__ float hit(const vec &startPoint, const vec &rayDir, float *n){

float norm_b = rayDir.norm();

float distance = ((z - startPoint.z) / rayDir.z) * norm_b;

if(distance >= 0){

*n = abs(rayDir.z / norm_b);

return distance;

}

return INF;

}

//ray reflection

__device__ void refl(vec *rayDir){

rayDir->z = -rayDir->z;

}

};

struct Light{

//point light

vec center, color;int num;

Light(){

}

void setLight(const vec &m_center, const vec &m_color){

center = m_center, color = m_color;}

};

struct Photo{

float x = 0, y = 0, z = 0;int num;

vec center, photoDir;

float focalDistance = 0.2;//focal length

float focalScope = 0.3;//horizontal extent of the film

float width = 960, height = 960;

Photo(){

}

void setPhoto(const vec &m_center, const float &m_focalDistance, const float &m_focalScope, const float &m_width, const float &m_height, const vec &m_photoDir ){

center=m_center, focalDistance=m_focalDistance, focalScope=m_focalScope, width=m_width, height=m_height, photoDir=m_photoDir;}

};

struct DataBlock{

Sphere *sphere, *cpu_sphere;

Ground *ground, *cpu_ground;

Photo *photo, *cpu_photo;

Light *light, *cpu_light;

Plane *plane, *cpu_plane;

Image *image, *cpu_image;

bool isLockCursorInWindow = false;

};

Vector Class

struct vec{

float x, y, z;

__host__ __device__ vec(){

}

__host__ __device__ vec(const float &m_x, const float &m_y, const float &m_z):x(m_x), y(m_y), z(m_z){

}

__host__ __device__ vec operator +(const vec &other)const{

vec result = vec(this->x + other.x, this->y + other.y, this->z + other.z);return result;}

__host__ __device__ vec operator -(const vec &other)const{

vec result = vec(this->x - other.x, this->y - other.y, this->z - other.z);return result;}

__host__ __device__ vec operator -()const{

return vec(-x, -y, -z);}//returns a negated copy instead of modifying the operand

__host__ __device__ vec operator *(const float &timeVal)const{

vec result = vec(this->x * timeVal, this->y * timeVal, this->z * timeVal); return result;}

__host__ __device__ vec operator ^(const vec &other)const{

return vec(x * other.x, y * other.y, z * other.z);}//component-wise product, leaves the operand unchanged

__host__ __device__ void operator +=(const vec &other){

x += other.x, y += other.y, z += other.z;}

__host__ __device__ void operator -=(const vec &other){

x -= other.x, y -= other.y, z -= other.z;}

__host__ __device__ float norm()const{

return sqrtf(x*x + y*y + z*z);}

__host__ __device__ vec normalize()const{

float normVal=this->norm();

vec result = vec(x/normVal,y/normVal,z/normVal);return result;}

__host__ __device__ float dot(const vec &other)const{

return (x*other.x + y*other.y + z*other.z);}

__host__ __device__ float cos(const vec &other)const{

return this->dot(other) / (this->norm() * other.norm());}

};

Finally, when the program exits, it has to release the memory held by the various data. All of this happens in exit_func(). The code is as follows,

void exit_func(void *dataBlock){

//release the memory held by the user data

delete[] ((DataBlock *)dataBlock)->cpu_sphere;

delete[] ((DataBlock *)dataBlock)->cpu_ground;

delete[] ((DataBlock *)dataBlock)->cpu_plane;

delete[] ((DataBlock *)dataBlock)->cpu_photo;

delete[] ((DataBlock *)dataBlock)->cpu_light;

HANDLE_ERROR( cudaFree( ((DataBlock *)dataBlock)->sphere ) );

HANDLE_ERROR( cudaFree( ((DataBlock *)dataBlock)->ground ) );

HANDLE_ERROR( cudaFree( ((DataBlock *)dataBlock)->plane ) );

HANDLE_ERROR( cudaFree( ((DataBlock *)dataBlock)->photo ) );

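HANDLE_ERROR( cudaFree( ((DataBlock *)dataBlock)->light ) );

HANDLE_ERROR( cudaFree( ((DataBlock *)dataBlock)->image ) );//image is allocated with cudaMalloc in the main function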

}