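Asynchronous batch import of a 100,000-row Excel file with POI + Spring Boot, with a real-time front-end progress bar while the rows are written to the database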

Overview

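1. With easypoi, parsing is fully encapsulated: you get back a finished `List` holding every row. With large files this runs out of memory (`java.lang.OutOfMemoryError: Java heap space`). In my tests that happened at around 10,000 rows. So for large files I use my own POI-based parser that parses and inserts at the same time, and the inserts run in batches on multiple threads. This approach follows the article below, which helped a lot: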

"Springboot+poi上传并处理百万级数据EXCEL" (Spring Boot + POI: uploading and processing Excel files with millions of rows)
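
"Parse while inserting" comes down to a buffer that hands rows to the database every N rows instead of collecting the whole sheet. Below is a minimal sketch of that buffer, assuming a batch size of 1,000 as in the article. `BatchBuffer` and its flush callback are hypothetical names. In the article the callback would be the mapper's batch insert.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.function.Consumer;

/**
 * Hypothetical helper (not from the original project): collects rows and
 * passes them to a flush callback every batchSize rows, so the full sheet
 * is never held in memory at once.
 */
final class BatchBuffer<T> {
    private final int batchSize;
    private final Consumer<List<T>> flusher;
    private List<T> buffer;

    BatchBuffer(int batchSize, Consumer<List<T>> flusher) {
        if (batchSize <= 0) {
            throw new IllegalArgumentException("batchSize must be > 0");
        }
        this.batchSize = batchSize;
        this.flusher = flusher;
        this.buffer = new ArrayList<>(batchSize);
    }

    /** Adds one row and flushes as soon as the buffer is full. */
    void add(T row) {
        buffer.add(row);
        if (buffer.size() == batchSize) {
            flush();
        }
    }

    /** Passes any remaining rows to the flusher; call once after parsing ends. */
    void flush() {
        if (buffer.isEmpty()) {
            return;
        }
        List<T> full = buffer;
        // Start a fresh list so the flushed one can safely be handed to another thread
        buffer = new ArrayList<>(batchSize);
        flusher.accept(full);
    }
}
```

A plain size check like this keeps every batch exactly `batchSize` rows long, and the final `flush()` picks up the remainder.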

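2. While the rows are being written to the database, the front end has to show a progress bar in real time. Most blog posts I found track the progress of the file upload itself. I need to track the rows inserted into the database instead.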
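
Tracking inserted rows means several insert threads update one counter while the polling request reads it. A plain `int` updated with `+=` can lose updates under that concurrency, so the sketch below uses an `AtomicInteger`. `ImportProgress` is a hypothetical name. The sketch assumes the total row count is known before inserting starts; how it is obtained is left open.

```java
import java.util.concurrent.atomic.AtomicInteger;

/**
 * Hypothetical progress holder: insert threads report rows written,
 * the polling endpoint reads an integer percentage.
 */
final class ImportProgress {
    private final AtomicInteger inserted = new AtomicInteger();
    private volatile int total; // expected number of data rows

    /** Resets the counter for a new import. */
    void start(int totalRows) {
        inserted.set(0);
        total = totalRows;
    }

    /** Called by each insert thread with the row count of its batch. */
    void addInserted(int rows) {
        inserted.addAndGet(rows);
    }

    /** Percentage in [0, 100]; 0 while the total is unknown. */
    int percent() {
        int t = total;
        if (t <= 0) {
            return 0;
        }
        long p = inserted.get() * 100L / t; // long math avoids overflow, no double needed
        return (int) Math.min(100, p);
    }
}
```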

Steps

1. Front-end page

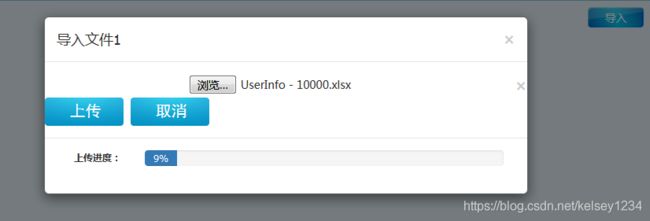

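Import-file dialog: it needs a file input with id `file_id` and a progress-bar element with id `myModal_add_progressBar`. Both ids are used by the script below.

2. JavaScript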

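```javascript
var timer_is_on = 1;
var timer;

// Stop polling (e.g. when the dialog is closed)
function toCancel() {
    timer_is_on = 0;
    clearInterval(timer);
}

function toProgress() {
    timer_is_on = 1;      // re-enable polling, in case an earlier import was cancelled
    clearInterval(timer); // drop any interval left over from a previous import

    var formData = new FormData();
    var file = $("#file_id");
    formData.append('file', file[0].files[0]);

    // Request 1: upload the file; the server parses it and writes the rows to the database
    $.ajax({
        url: "/user/user_toImport",
        type: 'POST',
        cache: false,
        data: formData,
        processData: false, // send the FormData as-is
        contentType: false, // let the browser set the multipart boundary
        success: function (msg) {
        }
    });

    /*** Request 2: poll the insert progress and update the progress bar */
    window.setTimeout(function () {
        if (timer_is_on == 1) {
            timer = window.setInterval(function () {
                $.ajax({
                    type: 'post',
                    dataType: 'json',
                    url: "/user/user_toProress",
                    success: function (data) {
                        $("#myModal_add_progressBar").css("width", data.percent + "%").text(data.percent + "%");
                        // >= rather than ==, so polling also stops if the value never lands exactly on 100
                        if (data.percent >= 100) {
                            window.clearInterval(timer);
                        }
                    }
                });
            }, 800);
        }
    }, 800);
}
```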

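Two Ajax requests are sent. The first uploads the file so the server can parse it and save the rows to the database. The second runs on a timer and keeps asking the back end for the current progress.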
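
The polling half of this protocol is "read the progress at a fixed rate, stop once it reaches 100". Below is a Java sketch of that same loop using a `ScheduledExecutorService`, for example to exercise the progress logic without a browser. `ProgressPoller` is a hypothetical name. `scheduleAtFixedRate` plays the role of `setInterval`, and cancelling the returned `ScheduledFuture` plays the role of `clearInterval`.

```java
import java.util.concurrent.CompletableFuture;
import java.util.concurrent.ScheduledExecutorService;
import java.util.concurrent.ScheduledFuture;
import java.util.concurrent.TimeUnit;
import java.util.function.IntConsumer;
import java.util.function.IntSupplier;

/** Hypothetical helper mirroring the front end's setInterval / clearInterval loop. */
final class ProgressPoller {
    private ProgressPoller() {}

    /**
     * Reads progress every periodMillis, passes each value to onUpdate and
     * stops once it reaches 100. The returned future completes when polling stops.
     */
    static CompletableFuture<Void> poll(IntSupplier progress, IntConsumer onUpdate,
                                        long periodMillis, ScheduledExecutorService scheduler) {
        CompletableFuture<Void> done = new CompletableFuture<>();
        ScheduledFuture<?> task = scheduler.scheduleAtFixedRate(() -> {
            if (done.isDone()) {
                return; // a run may still fire before cancel() takes effect
            }
            int percent = progress.getAsInt();
            onUpdate.accept(percent);
            if (percent >= 100) { // ">=" so an overshoot cannot keep the loop alive
                done.complete(null);
            }
        }, 0, periodMillis, TimeUnit.MILLISECONDS);
        done.thenRun(() -> task.cancel(false)); // the clearInterval step
        return done;
    }
}
```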

3. Controller

```java
@RequestMapping(value = "/user/user_toImport")
@ResponseBody
public ReturnResult toUploadExcel(@RequestParam(value = "file") MultipartFile multipartFile) {
    try {
        // Parses the sheet and writes it to the database in batches
        userService.addBlackLists(multipartFile.getInputStream());
    } catch (Exception e) {
        // Failures are only logged here; the client still receives success
        e.printStackTrace();
    }
    return ReturnResult.success();
}

@RequestMapping(value = "/user/user_toProress")
@ResponseBody
public Map<String, Object> progress() {
    // A new map per request: a shared field would be written by concurrent polling requests
    Map<String, Object> resultMap = new HashMap<>();
    resultMap.put("percent", userService.getPercent());
    return resultMap;
}
```

4. ServiceImpl

```java
import java.io.InputStream;
import java.util.*;
import java.util.concurrent.*;
import java.util.concurrent.atomic.AtomicInteger;
import org.springframework.beans.factory.annotation.Autowired;
import org.springframework.stereotype.Service;

@Service
public class UserServiceImpl implements UserService {

    private static final int BATCH_SIZE = 1000;
    // Hard-coded expected row count, as in the original: the percentage is only exact for 100,000-row files
    private static final int EXPECTED_ROWS = 100000;
    // Sheet columns in order (37 columns): indices 0-3, address1-16 at 4-19, telephone1-16 at 20-35, email at 36
    private static final String[] COLUMNS = buildColumns();

    @Autowired
    private UserMapper userMapper;

    // Updated by several insert threads at once, so it must be atomic
    private final AtomicInteger addingCount = new AtomicInteger();

    private static String[] buildColumns() {
        List<String> cols = new ArrayList<>(Arrays.asList("user_name", "gender", "account", "password"));
        for (int i = 1; i <= 16; i++) cols.add("address" + i);
        for (int i = 1; i <= 16; i++) cols.add("telephone" + i);
        cols.add("email");
        return cols.toArray(new String[0]);
    }

    @Override
    public int addBlackLists(InputStream is) throws ExecutionException, InterruptedException {
        addingCount.set(0); // reset progress for this import
        // Bounded pool (size 4 is an arbitrary choice); newCachedThreadPool may start one thread per batch
        ExecutorService executorService = Executors.newFixedThreadPool(4);
        List<Future<Integer>> resultList = new ArrayList<>();

        XxlsAbstract xxlsAbstract = new XxlsAbstract() {
            /*
             * processOneSheet() locates the sheet; the SAX parser then calls optRows()
             * once per row. Rows are buffered in dataList and written in batches of
             * BATCH_SIZE while parsing continues, which avoids the "Java heap space" OOM.
             */
            @Override
            public void optRows(int sheetIndex, int curRow, List<String> rowlist) {
                if (curRow == getTitleRow()) {
                    return; // skip the header row instead of trimming it out of every batch
                }
                // Rows stay as maps rather than objects: mapping to a class would have to
                // happen cell by cell while parsing
                Map<String, Object> map = new HashMap<>();
                for (int i = 0; i < COLUMNS.length; i++) {
                    map.put(COLUMNS[i], i < rowlist.size() ? rowlist.get(i) : "");
                }
                this.dataList.add(map);
                this.willSaveAmount++;
                this.totalSavedAmount++;

                if (this.willSaveAmount == BATCH_SIZE) {
                    resultList.add(executorService.submit(insertTask(this.dataList)));
                    this.dataList = new LinkedList<>(); // the submitted list now belongs to the task
                    this.willSaveAmount = 0;
                }
            }
        };

        try {
            xxlsAbstract.processOneSheet(is, 1);
        } catch (Exception e) {
            e.printStackTrace();
        }

        // Insert the rows left over after the last full batch
        if (xxlsAbstract.willSaveAmount != 0) {
            resultList.add(executorService.submit(insertTask(xxlsAbstract.dataList)));
        }

        executorService.shutdown();
        int inserted = 0;
        for (Future<Integer> future : resultList) {
            inserted += future.get(); // waits for each batch and rethrows insert failures
        }
        return inserted;
    }

    // One batch insert; the returned count feeds the progress read by getPercent()
    private Callable<Integer> insertTask(List<Map<String, Object>> list) {
        return () -> {
            int count = userMapper.addBlackLists(list);
            addingCount.addAndGet(count);
            return count;
        };
    }

    @Override
    public int getPercent() {
        // Integer percentage of EXPECTED_ROWS, capped at 100
        return (int) Math.min(100, addingCount.get() * 100L / EXPECTED_ROWS);
    }
}
```

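To reduce database I/O and improve performance, rows are inserted in batches of 1,000, and Java's Future pattern is used to run the inserts on multiple threads.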
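
Below is a stripped-down sketch of the Future pattern: submit each batch as a `Callable` to a bounded pool, keep the `Future`s, and sum what they return. Calling `get()` on every future is what tells the caller that all rows are written, and it rethrows any insert failure. The original code assigns each future to an unused local and never does this. `ParallelBatchInserter` and the `insert` function are hypothetical stand-ins for a mapper call such as `userMapper.addBlackLists(list)`. For brevity the batches arrive as a ready-made list, whereas the service submits each batch as soon as it fills.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.Callable;
import java.util.concurrent.ExecutionException;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.Future;
import java.util.function.ToIntFunction;

final class ParallelBatchInserter {
    private ParallelBatchInserter() {}

    /** Inserts all batches on `threads` worker threads and returns the total row count. */
    static <T> int insertAll(List<List<T>> batches, ToIntFunction<List<T>> insert, int threads)
            throws InterruptedException, ExecutionException {
        ExecutorService pool = Executors.newFixedThreadPool(threads);
        try {
            List<Future<Integer>> futures = new ArrayList<>();
            for (List<T> batch : batches) {
                Callable<Integer> task = () -> insert.applyAsInt(batch);
                futures.add(pool.submit(task));
            }
            int total = 0;
            for (Future<Integer> f : futures) {
                total += f.get(); // blocks until that batch is done; rethrows its exception
            }
            return total;
        } finally {
            pool.shutdown();
        }
    }
}
```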

5. The Excel parsing class used here

```java
/**
 * @Author wpzhang
 * @Date 2019/12/17
 * @Description Streaming (SAX) reader for one .xlsx sheet; subclasses handle each row in optRows()
 */
package com.yaspeed.core;

import org.apache.poi.openxml4j.opc.OPCPackage;
import org.apache.poi.xssf.eventusermodel.XSSFReader;
import org.apache.poi.xssf.model.SharedStringsTable;
import org.apache.poi.xssf.usermodel.XSSFRichTextString;
import org.xml.sax.Attributes;
import org.xml.sax.InputSource;
import org.xml.sax.SAXException;
import org.xml.sax.XMLReader;
import org.xml.sax.helpers.DefaultHandler;

import javax.xml.parsers.ParserConfigurationException;
import javax.xml.parsers.SAXParserFactory;
import java.io.InputStream;
import java.sql.SQLException;
import java.util.*;

/**
 * XSSF and SAX (Event API)
 */
public abstract class XxlsAbstract extends DefaultHandler {

    private SharedStringsTable sst;
    private String lastContents;
    private boolean nextIsString;
    private int sheetIndex = -1;
    private List<String> rowlist = new ArrayList<>();

    public List<Map<String, Object>> dataList = new LinkedList<>(); // rows waiting for the next batch insert
    public int willSaveAmount;   // number of rows currently buffered
    public int totalSavedAmount; // total number of rows buffered so far
    private int curRow = 0;      // current row (0-based)
    private int curCol = 0;      // current column index (1-based, from the cell reference)
    private int preCol = 0;      // column index of the previous cell
    private int titleRow = 0;    // header row, normally 0
    private int rowsize = 0;     // number of columns (taken from the header row)

    // Row callback: sheet index, row index and the row's cell values as Strings
    public abstract void optRows(int sheetIndex, int curRow, List<String> rowlist) throws SQLException;

    /**
     * Streams a single sheet.
     *
     * @param is      the .xlsx input stream
     * @param sheetId sheet number, starting at 1 (looked up by relationship id "rId" + sheetId)
     * @throws Exception if the package or the sheet XML cannot be read
     */
    public void processOneSheet(InputStream is, int sheetId) throws Exception {
        OPCPackage pkg = OPCPackage.open(is);
        XSSFReader r = new XSSFReader(pkg);
        SharedStringsTable sst = r.getSharedStringsTable();
        XMLReader parser = fetchSheetParser(sst);
        // Look the sheet up by its relationship id (rId#)
        try (InputStream sheet = r.getSheet("rId" + sheetId)) {
            sheetIndex++;
            parser.parse(new InputSource(sheet)); // fires startElement/characters/endElement
        }
    }

    public XMLReader fetchSheetParser(SharedStringsTable sst) throws SAXException, ParserConfigurationException {
        // SAXParserFactory instead of the deprecated XMLReaderFactory
        SAXParserFactory factory = SAXParserFactory.newInstance();
        factory.setNamespaceAware(true);
        XMLReader parser = factory.newSAXParser().getXMLReader();
        this.sst = sst;
        parser.setContentHandler(this);
        return parser;
    }

    @Override
    public void startElement(String uri, String localName, String name, Attributes attributes) throws SAXException {
        // c => cell
        if (name.equals("c")) {
            // t="s": the <v> value is an index into the shared strings table (SST)
            String cellType = attributes.getValue("t");
            curCol = this.getRowIndex(attributes.getValue("r"));
            nextIsString = "s".equals(cellType);
        }
        // reset the text buffer
        lastContents = "";
    }

    @Override
    public void endElement(String uri, String localName, String name) throws SAXException {
        // v => cell value
        if (name.equals("v")) {
            if (nextIsString) {
                try {
                    // Resolve the SST index to the real string. getEntryAt matches the POI version used here;
                    // newer POI releases provide sst.getItemAt(idx).getString() instead
                    int idx = Integer.parseInt(lastContents);
                    lastContents = new XSSFRichTextString(sst.getEntryAt(idx)).toString();
                } catch (Exception e) {
                    // not a valid index: keep the raw text
                }
            }
            // Trim the value, pad skipped (empty) cells, then store it at its column position
            String value = lastContents.trim();
            value = value.equals("") ? " " : value;
            int cols = curCol - preCol;
            if (cols > 1) {
                for (int i = 0; i < cols - 1; i++) {
                    rowlist.add(preCol, "");
                }
            }
            preCol = curCol;
            rowlist.add(curCol - 1, value);
        } else if (name.equals("row")) {
            // End of a row: pad missing trailing cells, then hand the row to optRows()
            int tmpCols = rowlist.size();
            if (curRow > this.titleRow && tmpCols < this.rowsize) {
                for (int i = 0; i < this.rowsize - tmpCols; i++) {
                    rowlist.add("");
                }
            }
            try {
                optRows(sheetIndex, curRow, rowlist);
            } catch (SQLException e) {
                e.printStackTrace();
            }
            if (curRow == this.titleRow) {
                this.rowsize = rowlist.size();
            }
            rowlist.clear();
            curRow++;
            curCol = 0;
            preCol = 0;
        }
    }

    @Override
    public void characters(char[] ch, int start, int length) throws SAXException {
        // Cell text may arrive in several chunks
        lastContents += new String(ch, start, length);
    }

    // Despite its name, returns the 1-based COLUMN index of a cell reference such as "AB45":
    // the letters form a base-26 number with A=1 ... Z=26, so AB = 1*26 + 2 = column 28 (row 45)
    public int getRowIndex(String rowStr) {
        int num = 0;
        for (char c : rowStr.replaceAll("[^A-Z]", "").toCharArray()) {
            num = num * 26 + (c - 'A' + 1);
        }
        return num;
    }

    public int getTitleRow() {
        return titleRow;
    }

    public void setTitleRow(int titleRow) {
        this.titleRow = titleRow;
    }
}
```
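
The SAX event flow of `XxlsAbstract` can be tried without POI or an .xlsx file. The sketch below uses only the JDK's `SAXParserFactory` on a hand-written fragment of sheet XML. It collects each `<row>` into a list, pads skipped cells from the column letters of the `r` attribute, and includes the base-26 column arithmetic. `SheetRowCollector` is a hypothetical name. The sketch assumes every value is plain text inside `<v>`, so there is no shared-strings lookup and no inline strings.

```java
import java.io.StringReader;
import java.util.ArrayList;
import java.util.List;
import javax.xml.parsers.SAXParserFactory;
import org.xml.sax.Attributes;
import org.xml.sax.InputSource;
import org.xml.sax.helpers.DefaultHandler;

/** Hypothetical JDK-only demo of row-by-row SAX parsing of sheet XML. */
final class SheetRowCollector extends DefaultHandler {
    final List<List<String>> rows = new ArrayList<>();
    private final StringBuilder text = new StringBuilder();
    private List<String> current;
    private int col;         // 1-based column of the current <c>
    private boolean inValue; // true between <v> and </v>

    /** "AB45" -> 28: the letters are a base-26 number with A=1 ... Z=26. */
    static int columnIndex(String cellRef) {
        int n = 0;
        for (char ch : cellRef.toCharArray()) {
            if (ch < 'A' || ch > 'Z') {
                break; // the row digits start here
            }
            n = n * 26 + (ch - 'A' + 1);
        }
        return n;
    }

    @Override
    public void startElement(String uri, String localName, String qName, Attributes atts) {
        switch (qName) {
            case "row":
                current = new ArrayList<>();
                break;
            case "c":
                String ref = atts.getValue("r");
                col = ref != null ? columnIndex(ref) : current.size() + 1;
                break;
            case "v":
                inValue = true;
                text.setLength(0);
                break;
            default:
                break;
        }
    }

    @Override
    public void characters(char[] ch, int start, int length) {
        if (inValue) {
            text.append(ch, start, length); // may be called several times per value
        }
    }

    @Override
    public void endElement(String uri, String localName, String qName) {
        if (qName.equals("v")) {
            inValue = false;
            while (current.size() < col - 1) {
                current.add(""); // pad cells the XML skipped because they were empty
            }
            current.add(text.toString().trim());
        } else if (qName.equals("row")) {
            rows.add(current); // the real class calls optRows() at this point
        }
    }

    static List<List<String>> parse(String sheetXml) throws Exception {
        SheetRowCollector handler = new SheetRowCollector();
        SAXParserFactory.newInstance().newSAXParser()
                .parse(new InputSource(new StringReader(sheetXml)), handler);
        return handler.rows;
    }
}
```

For a row containing only `A1` and `C1`, this yields `["1", "", "3"]`: the missing `B1` becomes an empty string, as in `XxlsAbstract`.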

6. For reference, the mapper SQL that batch-inserts the list

```xml
<insert id="addBlackLists">
    INSERT INTO user_import
    (
        user_name, gender, account, password,
        address1, address2, address3, address4, address5, address6, address7, address8, address9, address10, address11, address12, address13, address14, address15, address16,
        telephone1, telephone2, telephone3, telephone4, telephone5, telephone6, telephone7, telephone8, telephone9, telephone10, telephone11, telephone12, telephone13, telephone14, telephone15, telephone16,
        email
    )
    VALUES
    <!-- one "( ... )" tuple per map in the list, separated by commas -->
    <foreach collection="list" item="item" separator=",">
    (
        #{item.user_name, jdbcType=VARCHAR}, #{item.gender, jdbcType=VARCHAR}, #{item.account, jdbcType=VARCHAR}, #{item.password, jdbcType=VARCHAR},
        #{item.address1, jdbcType=VARCHAR}, #{item.address2, jdbcType=VARCHAR}, #{item.address3, jdbcType=VARCHAR}, #{item.address4, jdbcType=VARCHAR}, #{item.address5, jdbcType=VARCHAR}, #{item.address6, jdbcType=VARCHAR}, #{item.address7, jdbcType=VARCHAR}, #{item.address8, jdbcType=VARCHAR}, #{item.address9, jdbcType=VARCHAR}, #{item.address10, jdbcType=VARCHAR}, #{item.address11, jdbcType=VARCHAR}, #{item.address12, jdbcType=VARCHAR}, #{item.address13, jdbcType=VARCHAR}, #{item.address14, jdbcType=VARCHAR}, #{item.address15, jdbcType=VARCHAR}, #{item.address16, jdbcType=VARCHAR},
        #{item.telephone1, jdbcType=VARCHAR}, #{item.telephone2, jdbcType=VARCHAR}, #{item.telephone3, jdbcType=VARCHAR}, #{item.telephone4, jdbcType=VARCHAR}, #{item.telephone5, jdbcType=VARCHAR}, #{item.telephone6, jdbcType=VARCHAR}, #{item.telephone7, jdbcType=VARCHAR}, #{item.telephone8, jdbcType=VARCHAR}, #{item.telephone9, jdbcType=VARCHAR}, #{item.telephone10, jdbcType=VARCHAR}, #{item.telephone11, jdbcType=VARCHAR}, #{item.telephone12, jdbcType=VARCHAR}, #{item.telephone13, jdbcType=VARCHAR}, #{item.telephone14, jdbcType=VARCHAR}, #{item.telephone15, jdbcType=VARCHAR}, #{item.telephone16, jdbcType=VARCHAR},
        #{item.email, jdbcType=VARCHAR}
    )
    </foreach>
</insert>
```
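
A `<foreach>` over the list like the one above produces a single multi-row statement, `INSERT ... VALUES (...), (...), ...`, so a batch of 1,000 rows costs one round trip instead of 1,000. The hypothetical helper below builds the same shape with JDBC `?` placeholders to show what gets sent. With 37 columns, a 1,000-row batch binds 37,000 parameters. If the database is MySQL, the statement must also fit within its `max_allowed_packet` setting, which is one reason to keep batches moderate.

```java
import java.util.Collections;
import java.util.List;
import java.util.StringJoiner;

/** Hypothetical helper: the statement shape a foreach over the list expands to. */
final class MultiRowInsert {
    private MultiRowInsert() {}

    /** Builds "INSERT INTO t (a, b) VALUES (?, ?), (?, ?)" for rowCount rows. */
    static String sql(String table, List<String> columns, int rowCount) {
        if (rowCount <= 0) {
            throw new IllegalArgumentException("rowCount must be > 0");
        }
        // One "(?, ?, ...)" tuple with a placeholder per column
        String tuple = "(" + String.join(", ", Collections.nCopies(columns.size(), "?")) + ")";
        StringJoiner values = new StringJoiner(", ");
        for (int i = 0; i < rowCount; i++) {
            values.add(tuple);
        }
        return "INSERT INTO " + table + " (" + String.join(", ", columns) + ") VALUES " + values;
    }
}
```

For example, `MultiRowInsert.sql("user_import", Arrays.asList("user_name", "gender"), 2)` returns `INSERT INTO user_import (user_name, gender) VALUES (?, ?), (?, ?)`.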

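While the rows are being inserted, the front end keeps sending requests, so the Controller's `progress()` method runs repeatedly. Each call uses `getPercent()` to read the current progress from the ServiceImpl, puts it into a map, and returns it to the front end.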

Result

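In my test, inserting 100,000 rows into the database took just under 2 minutes on my rather slow machine. A typical machine should manage it in about a minute and a half.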
