Getting Started with CUDA Development on Ubuntu 16.04 (Part 1)

- Environment: Ubuntu 16.04 + NVIDIA driver 378.13 (the version reported by nvidia-smi) + cmake 3.5.1 + CUDA 8.0 + KDevelop 4.7.3

Environment setup

- For the NVIDIA driver, cmake, and CUDA setup, see the post "Configuring and Running Kintinuous on Ubuntu 16.04".

- KDevelop setup: run the following on the command line:

sudo apt-get install kdevelop

References

- [1] Liu Jinshuo et al. 基于CUDA的并行程序设计 (CUDA-Based Parallel Programming). Science Press, 2014.

- [2] Compiling CUDA with cmake on Linux: http://blog.csdn.net/u012839187/article/details/45887737

- [3] CUDA sample: /home/luhaiyan/NVIDIA_CUDA-8.0_Samples/0_Simple/vectorAdd/vectorAdd.cu

Array addition: program code

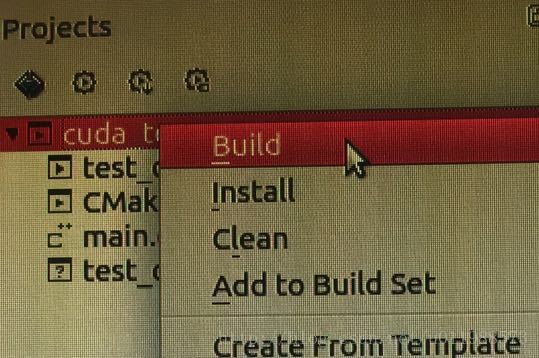

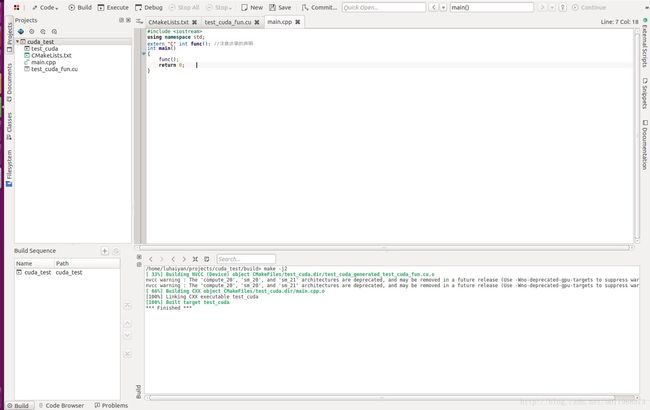

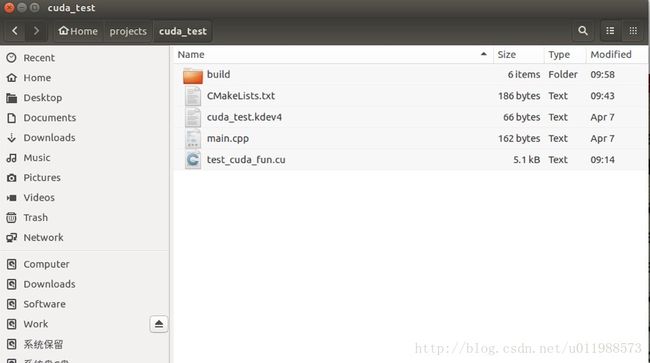

- Open KDevelop and create a new project: "New From Template…" > "Standard" > "Terminal". Set "Application Name:" to "cuda_test" and leave "Location:" at the default, "/home/luhaiyan/projects".

- In the cuda_test project, create a new file named "test_cuda_fun.cu" with the following contents [2][3]:

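// The header names were lost from the original listing; the ones below are

// reconstructed from what the code uses: printf, malloc/rand/exit, fabs,

// and the CUDA runtime API.

#include <stdio.h>

#include <stdlib.h>

#include <math.h>

#include <cuda_runtime.h>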

__global__ void vectorAdd(const float *A, const float *B, float *C, int numElements)

{

int i = blockDim.x * blockIdx.x + threadIdx.x;

if (i < numElements)

{

C[i] = A[i] + B[i];

}

}

extern "C" int func()

{

cudaError_t err = cudaSuccess;

int numElements = 3;

size_t size = numElements * sizeof(float);

printf("[Vector addition of %d elements]\n", numElements);

float *h_A = (float *)malloc(size);

float *h_B = (float *)malloc(size);

float *h_C = (float *)malloc(size);

if (h_A == NULL || h_B == NULL || h_C == NULL)

{

fprintf(stderr, "Failed to allocate host vectors!\n");

exit(EXIT_FAILURE);

}

printf("Index h_A h_B\n");

for (int i = 0; i < numElements; ++i)

{

h_A[i] = rand()/(float)RAND_MAX;

h_B[i] = rand()/(float)RAND_MAX;

printf("Index %d: %f %f\n",i,h_A[i],h_B[i]);

}

printf("\n");

float *d_A = NULL;

err = cudaMalloc((void **)&d_A, size);

if (err != cudaSuccess)

{

fprintf(stderr, "Failed to allocate device vector A (error code %s)!\n", cudaGetErrorString(err));

exit(EXIT_FAILURE);

}

float *d_B = NULL;

err = cudaMalloc((void **)&d_B, size);

if (err != cudaSuccess)

{

fprintf(stderr, "Failed to allocate device vector B (error code %s)!\n", cudaGetErrorString(err));

exit(EXIT_FAILURE);

}

float *d_C = NULL;

err = cudaMalloc((void **)&d_C, size);

if (err != cudaSuccess)

{

fprintf(stderr, "Failed to allocate device vector C (error code %s)!\n", cudaGetErrorString(err));

exit(EXIT_FAILURE);

}

printf("Copy input data from the host memory to the CUDA device\n");

err = cudaMemcpy(d_A, h_A, size, cudaMemcpyHostToDevice);

if (err != cudaSuccess)

{

fprintf(stderr, "Failed to copy vector A from host to device (error code %s)!\n", cudaGetErrorString(err));

exit(EXIT_FAILURE);

}

err = cudaMemcpy(d_B, h_B, size, cudaMemcpyHostToDevice);

if (err != cudaSuccess)

{

fprintf(stderr, "Failed to copy vector B from host to device (error code %s)!\n", cudaGetErrorString(err));

exit(EXIT_FAILURE);

}

int threadsPerBlock = 256;

int blocksPerGrid =(numElements + threadsPerBlock - 1) / threadsPerBlock;

printf("CUDA kernel launch with %d blocks of %d threads\n", blocksPerGrid, threadsPerBlock);

vectorAdd<<<blocksPerGrid, threadsPerBlock>>>(d_A, d_B, d_C, numElements);

err = cudaGetLastError();

if (err != cudaSuccess)

{

fprintf(stderr, "Failed to launch vectorAdd kernel (error code %s)!\n", cudaGetErrorString(err));

exit(EXIT_FAILURE);

}

printf("Copy output data from the CUDA device to the host memory\n");

err = cudaMemcpy(h_C, d_C, size, cudaMemcpyDeviceToHost);

if (err != cudaSuccess)

{

fprintf(stderr, "Failed to copy vector C from device to host (error code %s)!\n", cudaGetErrorString(err));

exit(EXIT_FAILURE);

}

for (int i = 0; i < numElements; ++i)

{

if (fabs(h_A[i] + h_B[i] - h_C[i]) > 1e-5)

{

fprintf(stderr, "Result verification failed at element %d!\n", i);

exit(EXIT_FAILURE);

}

}

printf("Test PASSED\n\n");

printf("vectorAdd_Result:\n");

for (int i = 0; i < numElements; i++)

printf("Index %d: %f\n", i, h_C[i]);

printf("\n");

err = cudaFree(d_A);

if (err != cudaSuccess)

{

fprintf(stderr, "Failed to free device vector A (error code %s)!\n", cudaGetErrorString(err));

exit(EXIT_FAILURE);

}

err = cudaFree(d_B);

if (err != cudaSuccess)

{

fprintf(stderr, "Failed to free device vector B (error code %s)!\n", cudaGetErrorString(err));

exit(EXIT_FAILURE);

}

err = cudaFree(d_C);

if (err != cudaSuccess)

{

fprintf(stderr, "Failed to free device vector C (error code %s)!\n", cudaGetErrorString(err));

exit(EXIT_FAILURE);

}

free(h_A);

free(h_B);

free(h_C);

printf("Done\n");

return 0;

}

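- Contents of main.cpp (the file name is taken from the CUDA_ADD_EXECUTABLE call in the cmake listing; the header name was lost from the original listing and is assumed to be iostream):

#include <iostream>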

using namespace std;

extern "C" int func();

int main()

{

func();

return 0;

}

- Contents of CMakeLists.txt:

cmake_minimum_required(VERSION 2.6)

project(cuda_test)

find_package(CUDA REQUIRED)

include_directories(${CUDA_INCLUDE_DIRS})

CUDA_ADD_EXECUTABLE(test_cuda main.cpp test_cuda_fun.cu)

- After building, run the program from the build directory:

cd '/home/luhaiyan/projects/cuda_test/build'

./test_cuda

- Output: