Installing a Kubernetes 1.8.4 Cluster with kubeadm 1.8.4

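kubeadm is the cluster deployment tool provided by Kubernetes. At the time of writing it is still in beta and should not be used for production deployments. It is well suited to quickly setting up a Kubernetes learning environment.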

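This article is for beginners and walks through building a 3-node Kubernetes cluster with kubeadm. If anything is unclear during the installation, feel free to leave a comment.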

Software environment

docker 17.06.0-ce

kubeadm 1.8.4

kubelet 1.8.4

kubectl 1.8.4

CentOS Linux release 7.4.1708 (Core)

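All 3 machines need unrestricted internet access, including access to Google's servers (setting that up is not covered here). Keep the clocks of the 3 machines in sync: if they differ, nodes can fail to join the cluster.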

Preparation

(Run on all 3 machines)

Configure hosts

vi /etc/hosts

127.0.0.1   localhost localhost.localdomain localhost4 localhost4.localdomain4
192.168.123.81 vmnode1   #master
192.168.123.82 vmnode2
192.168.123.83 vmnode3

Disable the firewall

systemctl stop firewalld
systemctl disable firewalld

Create the /etc/sysctl.d/k8s.conf file:

cat << EOF > /etc/sysctl.d/k8s.conf
net.bridge.bridge-nf-call-ip6tables = 1
net.bridge.bridge-nf-call-iptables = 1
vm.swappiness=0
EOF
sysctl -p /etc/sysctl.d/k8s.conf

If you get the following errors:

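sysctl: cannot stat /proc/sys/net/bridge/bridge-nf-call-ip6tables: No such file or directory
sysctl: cannot stat /proc/sys/net/bridge/bridge-nf-call-iptables: No such file or directory

load the br_netfilter kernel module with modprobe and add it to rc.local so it is also loaded at boot, then run sysctl -p /etc/sysctl.d/k8s.conf again: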

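modprobe br_netfilter
echo "modprobe br_netfilter" >> /etc/rc.local
chmod +x /etc/rc.d/rc.local

(On CentOS 7, /etc/rc.d/rc.local is not executable by default, and the commands added to it only run at boot once it is.)

Disable SELinux: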

setenforce 0

Then edit /etc/selinux/config so SELinux stays disabled after a reboot:

SELINUX=disabled

Disable swap

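Starting with Kubernetes 1.8, swap must be turned off. With the default configuration kubelet will not start otherwise. The kubelet flag --fail-swap-on=false removes this restriction, but here swap is simply turned off:

swapoff -a

Then edit /etc/fstab and comment out the swap mount entry so swap is not re-enabled at boot.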

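Use free -m to confirm that swap is off (the Swap row should show 0). Also tune the swappiness parameter: /etc/sysctl.d/k8s.conf should contain vm.swappiness=0 (the file created above already does).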

Run sysctl -p /etc/sysctl.d/k8s.conf to apply the change.
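The two settings above that must survive a reboot, SELINUX=disabled in /etc/selinux/config and the commented-out swap entry in /etc/fstab, can also be scripted. This is only a minimal sketch. The function names disable_selinux_config and disable_swap_in_fstab are hypothetical, GNU sed and awk are assumed (as on CentOS 7), and the file path is a parameter so you can try the functions on copies first.

```shell
# Hypothetical helpers for the persistent parts of the preparation stage.
# Each takes the file to edit as its argument (default: the real system file).

# Set SELINUX=disabled. The SELINUXTYPE= line does not match ^SELINUX= and is kept.
disable_selinux_config() {
    local conf=${1:-/etc/selinux/config}
    sed -i 's/^SELINUX=.*/SELINUX=disabled/' "$conf"
}

# Comment out every active fstab entry whose third field (fs type) is swap,
# so swap is not turned back on at boot (swapoff -a only lasts until a reboot).
# Entries that are already commented out are left alone, so re-running is safe.
disable_swap_in_fstab() {
    local fstab=${1:-/etc/fstab} tmp rc
    tmp=$(mktemp) || return 1
    awk '$1 !~ /^#/ && $3 == "swap" { print "#" $0; next } { print }' "$fstab" > "$tmp" &&
        cat "$tmp" > "$fstab"   # write back in place, keeping the file's owner and mode
    rc=$?
    rm -f "$tmp"
    return $rc
}

# On a real node, as root:
# setenforce 0; disable_selinux_config
# swapoff -a && disable_swap_in_fstab
```

Writing through cat instead of mv keeps the original file's ownership and permissions.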

Adjust iptables rules

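Run iptables -P FORWARD ACCEPT, then add it to rc.local so it is applied again after every boot:

iptables -P FORWARD ACCEPT
echo "sleep 30 && /sbin/iptables -P FORWARD ACCEPT" >> /etc/rc.local

(Docker 1.13 and later set the default policy of the FORWARD chain to DROP when the daemon starts. The 30-second delay makes the reset run after Docker has started.)

Install dependencies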

yum install -y yum-utils device-mapper-persistent-data lvm2 net-tools

Prevent yum timeouts

On a slow connection, raise yum's timeout so it does not keep aborting on timeouts.

Add the following to /etc/yum.conf:

timeout=120
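If you prefer to script this step, here is a minimal sketch that sets timeout= idempotently: it replaces an existing timeout line instead of adding a duplicate. The function name set_yum_timeout is hypothetical. Appending at the end of the file assumes that [main] is the only section, as in the stock CentOS 7 /etc/yum.conf.

```shell
# Hypothetical helper: make a yum.conf contain exactly one timeout=<seconds> line.
set_yum_timeout() {
    local conf=${1:-/etc/yum.conf} secs=${2:-120}
    if grep -q '^timeout=' "$conf"; then
        # Replace the existing value instead of adding a second line
        sed -i "s/^timeout=.*/timeout=$secs/" "$conf"
    else
        # Appending assumes [main] is the only section in the file
        echo "timeout=$secs" >> "$conf"
    fi
}

# On a real machine, as root:
# set_yum_timeout /etc/yum.conf 120
```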

Install Docker

(Run on all 3 machines)

Add the Docker repo and install Docker:

yum-config-manager --add-repo https://download.docker.com/linux/centos/docker-ce.repo
yum install docker-ce-17.06.0.ce-1.el7.centos

Start Docker:

systemctl start docker
systemctl enable docker

Install kubeadm and kubelet

(Run on all 3 machines)

Add kubernetes.repo, then install kubeadm, kubelet and kubectl:

cat <<EOF > /etc/yum.repos.d/kubernetes.repo
[kubernetes]
name=Kubernetes
baseurl=https://packages.cloud.google.com/yum/repos/kubernetes-el7-x86_64
enabled=1
gpgcheck=1
repo_gpgcheck=1
gpgkey=https://packages.cloud.google.com/yum/doc/yum-key.gpg https://packages.cloud.google.com/yum/doc/rpm-package-key.gpg
EOF
yum install -y kubelet-1.8.4-0 kubeadm-1.8.4-0 kubectl-1.8.4-0

Switch kubelet's cgroup driver from systemd to cgroupfs

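Edit /etc/systemd/system/kubelet.service.d/10-kubeadm.conf and replace systemd with cgroupfs. kubelet must use the same cgroup driver as Docker, and docker-ce uses cgroupfs by default. If the two drivers differ, kubelet fails to start: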

Environment="KUBELET_CGROUP_ARGS=--cgroup-driver=cgroupfs"

Restart kubelet:

systemctl daemon-reload
systemctl restart kubelet
systemctl enable kubelet.service
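You can also make the 10-kubeadm.conf edit with sed, using Docker's actual cgroup driver as reported by docker info instead of assuming it. This is a minimal sketch. The function name set_kubelet_cgroup_driver is hypothetical, and the default path is the drop-in file named above.

```shell
# Hypothetical helper: rewrite the --cgroup-driver value in the kubeadm
# drop-in so that kubelet uses the same cgroup driver as Docker.
set_kubelet_cgroup_driver() {
    local dropin=${1:-/etc/systemd/system/kubelet.service.d/10-kubeadm.conf}
    local driver=${2:-cgroupfs}
    sed -i "s/--cgroup-driver=[a-z]*/--cgroup-driver=$driver/" "$dropin"
}

# On a real node, read Docker's driver first, then restart kubelet:
# driver=$(docker info 2>/dev/null | awk -F': ' '/Cgroup Driver/ {print $2}')
# set_kubelet_cgroup_driver /etc/systemd/system/kubelet.service.d/10-kubeadm.conf "$driver"
# systemctl daemon-reload && systemctl restart kubelet
```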

Initialize the cluster

(Run on the master)

Initialize

kubeadm init --pod-network-cidr=192.168.0.0/16 --kubernetes-version=v1.8.4 --apiserver-advertise-address=192.168.123.81

--apiserver-advertise-address is the master node's IP.

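--pod-network-cidr is the pod IP range that Calico uses. It should match the IP pool in calico.yaml (192.168.0.0/16 by default), and you can choose your own range (see the last part of this article). After kubeadm init finishes, run the following commands. Do not prefix mkdir with sudo, and type $HOME exactly as shown, because the shell expands it.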

mkdir -p $HOME/.kube
sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config
sudo chown $(id -u):$(id -g) $HOME/.kube/config
export KUBECONFIG=/etc/kubernetes/admin.conf

Check the cluster status:

[root@vmnode1 ~]# kubectl get cs
NAME                 STATUS    MESSAGE              ERROR
controller-manager   Healthy   ok
scheduler            Healthy   ok
etcd-0               Healthy   {"health": "true"}

Install the pod network

(Run on the master)

This setup uses the Calico network. I first tried flannel, but kube-dns would not start. After switching to Calico, everything worked.

Install Calico

yum install ebtables ethtool
wget https://docs.projectcalico.org/v2.6/getting-started/kubernetes/installation/hosted/kubeadm/1.6/calico.yaml
kubectl apply -f ./calico.yaml

Allow the master node to run workloads (this removes its master taint):

kubectl taint nodes --all node-role.kubernetes.io/master-

Check the status of all pods. Running means healthy:

[root@vmnode1 ~]# kubectl get pod --all-namespaces -o wide
NAMESPACE     NAME                                       READY   STATUS    RESTARTS   AGE   IP               NODE
default       curl-6896d87888-fpm8h                      1/1     Running   1          8h    192.168.87.66    vmnode1
kube-system   calico-etcd-fpggh                          1/1     Running   0          8h    192.168.123.81   vmnode1
kube-system   calico-kube-controllers-685f8bc7fb-4sx72   1/1     Running   0          8h    192.168.123.81   vmnode1
kube-system   calico-node-58qx7                          2/2     Running   1          8h    192.168.123.82   vmnode2
kube-system   calico-node-72q56                          2/2     Running   0          8h    192.168.123.81   vmnode1
kube-system   calico-node-fm5tt                          2/2     Running   0          8h    192.168.123.83   vmnode3
kube-system   etcd-vmnode1                               1/1     Running   0          8h    192.168.123.81   vmnode1
kube-system   heapster-5d67855584-dllb7                  1/1     Running   0          4h    192.168.183.66   vmnode3
kube-system   kube-apiserver-vmnode1                     1/1     Running   2          8h    192.168.123.81   vmnode1
kube-system   kube-controller-manager-vmnode1            1/1     Running   0          8h    192.168.123.81   vmnode1
kube-system   kube-dns-545bc4bfd4-jt47k                  3/3     Running   0          8h    192.168.87.65    vmnode1
kube-system   kube-proxy-8psvr                           1/1     Running   0          8h    192.168.123.81   vmnode1
kube-system   kube-proxy-hxgrg                           1/1     Running   0          8h    192.168.123.83   vmnode3
kube-system   kube-proxy-lbttb                           1/1     Running   0          8h    192.168.123.82   vmnode2
kube-system   kube-scheduler-vmnode1                     1/1     Running   0          8h    192.168.123.81   vmnode1
kube-system   kubernetes-dashboard-7486b894c6-68xcp      1/1     Running   1          8h    192.168.87.67    vmnode1
kube-system   monitoring-grafana-5bccc9f786-m7l97        1/1     Running   0          4h    192.168.183.65   vmnode3
kube-system   monitoring-influxdb-85cb4985d4-x68s9       1/1     Running   0          4h    192.168.215.65   vmnode2

Some pods take a while to move from Pending to Running. If something goes wrong, inspect it with kubectl describe or kubectl logs. Their usage is covered in the maintenance commands at the end of this article.
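While you wait, a small filter makes it easier to spot the pods that are not yet Running. This is a sketch that assumes the column layout shown above, where STATUS is the fourth column when --all-namespaces is used. The name not_running_pods is hypothetical. Pods of finished Jobs show Completed and will also be listed.

```shell
# Hypothetical helper: read `kubectl get pod --all-namespaces [-o wide]` output
# on stdin and print "namespace/name status" for every pod that is not Running.
not_running_pods() {
    # NR > 1 skips the header line; column 4 is STATUS in this layout
    awk 'NR > 1 && $4 != "Running" { print $1 "/" $2 " " $4 }'
}

# Usage on the master:
# kubectl get pod --all-namespaces -o wide | not_running_pods
```

Empty output means every listed pod is Running.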

Test DNS

(Run on the master)

kubectl run curl --image=radial/busyboxplus:curl -i --tty

If you don't see a command prompt, try pressing enter.

[ root@curl-2716574283-xr8zd:/ ]# nslookup kubernetes.default

Server: 10.96.0.10

Address 1: 10.96.0.10 kube-dns.kube-system.svc.cluster.local

Name: kubernetes.default

Address 1: 10.96.0.1 kubernetes.default.svc.cluster.local

Add nodes to the Kubernetes cluster

View the token (run on the master):

[root@vmnode1 ~]# sudo kubeadm token list
TOKEN                     TTL   EXPIRES                     USAGES                   DESCRIPTION                                                EXTRA GROUPS
2543a3.bde66e6ab36f306a   10h   2017-12-02T17:52:01+08:00   authentication,signing   The default bootstrap token generated by 'kubeadm init'.   system:bootstrappers:kubeadm:default-node-token

Add the nodes (run on each of the other two nodes):

kubeadm join --token 2543a3.bde66e6ab36f306a 192.168.123.81:6443

192.168.123.81 is the master IP.

6443 is the API server port.

List the nodes that have joined the cluster (run on the master):

[root@vmnode1 ~]# kubectl get nodes
NAME      STATUS   ROLES    AGE   VERSION
vmnode1   Ready    master   9h    v1.8.4
vmnode2   Ready    <none>   9h    v1.8.4
vmnode3   Ready    <none>   9h    v1.8.4
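You can script the join and verification steps above with two small text filters. This is a minimal sketch. The names print_join_command and all_nodes_ready are hypothetical. Both filters assume the column layouts of kubeadm token list and kubectl get nodes shown above, and 192.168.123.81:6443 is this article's master address.

```shell
# Hypothetical helper: read `kubeadm token list` output on stdin and print a
# `kubeadm join` command for the first token (line 2, right after the header).
print_join_command() {
    local apiserver=${1:-192.168.123.81:6443}
    awk -v api="$apiserver" 'NR == 2 { print "kubeadm join --token " $1 " " api; exit }'
}

# Hypothetical helper: read `kubectl get nodes` output on stdin, print a summary,
# and succeed only if at least one node is listed and every node is Ready.
all_nodes_ready() {
    awk 'NR > 1 { total++; if ($2 == "Ready") ready++ }
         END {
             printf "%d/%d nodes Ready\n", ready, total
             status = (total > 0 && ready == total) ? 0 : 1
             exit status
         }'
}

# On the master:
# kubeadm token list | print_join_command 192.168.123.81:6443
# kubectl get nodes | all_nodes_ready
```

By default the bootstrap token created by kubeadm init expires after 24 hours. If kubeadm token list no longer shows a valid token, kubeadm token create issues a new one.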

Test the cluster

(Run on the master)

Deploy an nginx application:

[root@vmnode1 ~]# kubectl create -f https://raw.githubusercontent.com/kubernetes/kubernetes.github.io/master/docs/concepts/workloads/controllers/nginx-deployment.yaml

deployment "nginx-deployment" created

[root@vmnode1 ~]# kubectl get po -o wide
NAME                                READY   STATUS    RESTARTS   AGE   IP               NODE
curl-6896d87888-fpm8h               1/1     Running   1          9h    192.168.87.66    vmnode1
nginx-deployment-569477d6d8-kms9c   1/1     Running   0          5m    192.168.215.66   vmnode2
nginx-deployment-569477d6d8-lts2r   1/1     Running   0          5m    192.168.183.67   vmnode3
nginx-deployment-569477d6d8-tvzk5   1/1     Running   0          5m    192.168.87.68    vmnode1

[root@vmnode1 ~]# curl http://192.168.215.66

<html>
<head>
<title>Welcome to nginx!</title>
<style>
    body {
        width: 35em;
        margin: 0 auto;
        font-family: Tahoma, Verdana, Arial, sans-serif;
    }
</style>
</head>
<body>
<h1>Welcome to nginx!</h1>
...
<a href="http://nginx.com/">nginx.com</a>.</p>
<p><em>Thank you for using nginx.</em></p>
</body>
</html>

Install the Dashboard

(Run on the master)

The cluster is now fully usable. It runs fine without the Dashboard, but the Dashboard makes maintenance easier, so its installation is described below.

Download kubernetes-dashboard.yaml

wget https://raw.githubusercontent.com/kubernetes/dashboard/master/src/deploy/recommended/kubernetes-dashboard.yaml

Edit kubernetes-dashboard.yaml:

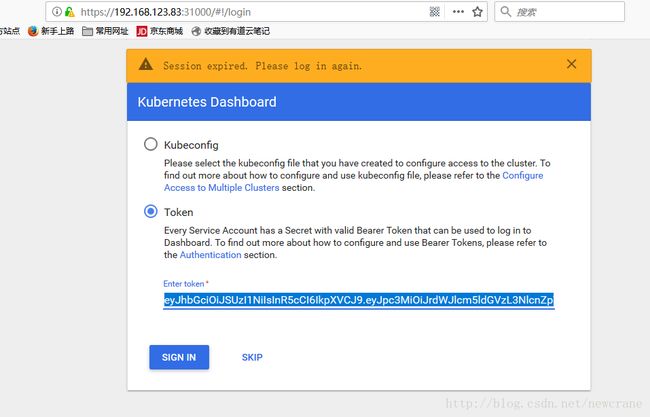

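At the end of the file, in the kind: Service section, make the Service a NodePort and expose it on port 31000. NodePorts must fall within the default range 30000-32767.

kind: Service
apiVersion: v1
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard
  namespace: kube-system
spec:
  type: NodePort          ## added: expose the Service as a NodePort
  ports:
    - port: 443
      targetPort: 8443
      nodePort: 31000     ## port exposed outside the cluster
  selector:
    k8s-app: kubernetes-dashboard

kubectl create -f kubernetes-dashboard.yaml

Log in to the Dashboard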

Login URL

https://192.168.123.81:31000

Look up the token and log in with it:

[root@vmnode1 ~]# kubectl -n kube-system get secret | grep kubernetes-dashboard-token

kubernetes-dashboard-token-bffq7 kubernetes.io/service-account-token 3 6h

[root@vmnode1 ~]# kubectl describe -n kube-system secret/kubernetes-dashboard-token-bffq7

Name: kubernetes-dashboard-token-bffq7

Namespace: kube-system

Labels:

Annotations: kubernetes.io/service-account.name=kubernetes-dashboard

kubernetes.io/service-account.uid=d7e534b2-d67e-11e7-9a7a-0050563c50ac

Type: kubernetes.io/service-account-token

Data

====

namespace: 11 bytes

token: eyJhbGciOiJSUzI1NiIsInR5cCI6IkpXVCJ9.eyJpc3MiOiJrdWJlcm5ldGVzL3NlcnZpY2VhY2NvdW50Iiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9uYW1lc3BhY2UiOiJrdWJlLXN5c3RlbSIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VjcmV0Lm5hbWUiOiJrdWJlcm5ldGVzLWRhc2hib2FyZC10b2tlbi1iZmZxNyIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VydmljZS1hY2NvdW50Lm5hbWUiOiJrdWJlcm5ldGVzLWRhc2hib2FyZCIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VydmljZS1hY2NvdW50LnVpZCI6ImQ3ZTUzNGIyLWQ2N2UtMTFlNy05YTdhLTAwNTA1NjNjNTBhYyIsInN1YiI6InN5c3RlbTpzZXJ2aWNlYWNjb3VudDprdWJlLXN5c3RlbTprdWJlcm5ldGVzLWRhc2hib2FyZCJ9.zfb0ByhfAEdpgQWaYuSzKKlsi7v9F11L_c86zxzts7ZJ1ckQgRkXpBJH2GxOBvQTADKDpVc3P5306zjgRb-0Clv46IDJjsM2JuSKyxVp0xm99BiLGxyiObUf6EDpTAdGze0tka7_mlQL70sGeNOiQJxuwbNsLWLjZmnx8OpDDJcvHQ0nlOvHp8MvDUepih2wOyzwIQ1YEHummXiwZ4tb0vSJ12zzFr8DMNT0GtcJETdDuQ2i4qUZt4ZqooGOe6qo8xZM9bCwD49p62o7EA0eWWHW_xpMC7miUkiTOnDCaqXrbmbLsno2enT1VrZzaIL9MoHxSXeZxvmCmM8S_oHknQ

ca.crt: 1025 bytes
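You do not have to copy the token out of the describe output by hand: awk can extract it. This is a sketch. The name extract_token is hypothetical, and the secret-name lookup in the usage comment assumes the kubernetes-dashboard-token- prefix seen above.

```shell
# Hypothetical helper: read `kubectl describe secret ...` output on stdin
# and print only the value on the "token:" line.
extract_token() {
    awk '$1 == "token:" { print $2 }'
}

# On the master:
# secret=$(kubectl -n kube-system get secret | awk '/^kubernetes-dashboard-token/ {print $1}')
# kubectl -n kube-system describe secret "$secret" | extract_token
```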

The login page then appears. Sign in with the token.

If the browser shows the error

User "system:serviceaccount:kube-system:kubernetes-dashboard" cannot list statefulsets.apps in the namespace "default"

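the fix is to create a kubernetes-dashboard-admin.yaml file that grants the ServiceAccount permissions by binding it to the cluster-admin ClusterRole (full access to the cluster):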

cat <<EOF > ./kubernetes-dashboard-admin.yaml
apiVersion: rbac.authorization.k8s.io/v1beta1
kind: ClusterRoleBinding
metadata:
  name: kubernetes-dashboard
  labels:
    k8s-app: kubernetes-dashboard
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: cluster-admin
subjects:
- kind: ServiceAccount
  name: kubernetes-dashboard
  namespace: kube-system
EOF
kubectl create -f kubernetes-dashboard-admin.yaml

Log in again and the Dashboard works normally.

Install Heapster for the Dashboard

mkdir -p ~/heapster
cd ~/heapster
wget https://raw.githubusercontent.com/kubernetes/heapster/master/deploy/kube-config/influxdb/grafana.yaml
wget https://raw.githubusercontent.com/kubernetes/heapster/master/deploy/kube-config/rbac/heapster-rbac.yaml
wget https://raw.githubusercontent.com/kubernetes/heapster/master/deploy/kube-config/influxdb/heapster.yaml
wget https://raw.githubusercontent.com/kubernetes/heapster/master/deploy/kube-config/influxdb/influxdb.yaml
kubectl create -f ./

Heapster did not run properly: it could not reach the API server through kubernetes.default.

kubectl logs heapster-85b879c5f8-tqw9z -n kube-system
E1211 09:36:32.417400       1 reflector.go:190] k8s.io/heapster/metrics/util/util.go:51: Failed to list *v1.Node: Get https://kubernetes.default/api/v1/nodes?resourceVersion=0: dial tcp 10.96.0.1:443: i/o timeout
E1211 09:36:32.418520       1 reflector.go:190] k8s.io/heapster/metrics/heapster.go:322: Failed to list *v1.Pod: Get https://kubernetes.default/api/v1/pods?resourceVersion=0: dial tcp 10.96.0.1:443: i/o timeout
E1211 09:36:32.421626       1 reflector.go:190] k8s.io/heapster/metrics/processors/namespace_based_enricher.go:84: Failed to list *v1.Namespace: Get https://kubernetes.default/api/v1/namespaces?resourceVersion=0: dial tcp 10.96.0.1:443: i/o timeout

I tried the change below, but the problem persisted. If you know a fix, please leave a comment.

--source=kubernetes:https://kubernetes.default.svc.cluster.local


Other

Change Calico's default IP range

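Calico's default IP range is 192.168.0.0/16. If your local network also uses 192.168.x.x, the two ranges can easily conflict, so it is best to choose a different range for Calico.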
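You can check for such a conflict before running kubeadm init. Two IPv4 CIDR blocks overlap exactly when their addresses agree on the leading bits of the shorter prefix. The sketch below applies that rule with POSIX shell arithmetic. The names ip_to_int and cidr_overlap are hypothetical, the inputs are assumed to be well-formed IPv4 CIDRs, and 192.168.123.0/24 stands for this article's host network.

```shell
# Hypothetical helper: convert a dotted IPv4 address to a 32-bit integer.
ip_to_int() {
    local IFS=.
    set -- $1          # split "a.b.c.d" into $1..$4 on the dots
    echo $(( ($1 << 24) + ($2 << 16) + ($3 << 8) + $4 ))
}

# Hypothetical helper: succeed if CIDR blocks $1 and $2 share any address.
cidr_overlap() {
    local a_len=${1#*/} b_len=${2#*/} len mask a b
    # Compare only the bits covered by the shorter (less specific) prefix
    len=$(( a_len < b_len ? a_len : b_len ))
    mask=$(( (0xFFFFFFFF << (32 - len)) & 0xFFFFFFFF ))
    a=$(ip_to_int "${1%/*}")
    b=$(ip_to_int "${2%/*}")
    [ $(( a & mask )) -eq $(( b & mask )) ]
}

# Usage:
# cidr_overlap 192.168.0.0/16 192.168.123.0/24 && echo "conflict: pick another pod CIDR"
```

With the default pool, 192.168.0.0/16 contains 192.168.123.0/24, so the check reports a conflict. The 192.168.111.0/24 range used below does not overlap with it.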

- Step 1: change the IP range when running kubeadm init, for example:

  kubeadm init --pod-network-cidr=192.168.111.0/24

- Step 2: edit calico.yaml to use the same range:

  # Configure the IP Pool from which Pod IPs will be chosen.
  - name: CALICO_IPV4POOL_CIDR
    value: "192.168.111.0/24"
  - name: CALICO_IPV4POOL_IPIP
    value: "always"
  # Disable IPv6 on Kubernetes.
  - name: FELIX_IPV6SUPPORT
    value: "false"
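You can script the calico.yaml edit from step 2 with sed, rewriting only the value line that follows CALICO_IPV4POOL_CIDR. This is a minimal sketch. It assumes GNU sed and assumes that, as in the excerpt above, the value line comes directly after the name line. The name set_calico_pool_cidr is hypothetical.

```shell
# Hypothetical helper: set the quoted value on the line that follows
# "- name: CALICO_IPV4POOL_CIDR" in a Calico manifest.
set_calico_pool_cidr() {
    local manifest=$1 cidr=$2
    # On the matching line, n moves to the next line (the value: line), and the
    # substitution replaces only its quoted value; "|" avoids clashing with "/".
    sed -i "/name: CALICO_IPV4POOL_CIDR/{n;s|value: \".*\"|value: \"$cidr\"|;}" "$manifest"
}

# Usage, keeping the pool in step with kubeadm init --pod-network-cidr:
# set_calico_pool_cidr ./calico.yaml 192.168.111.0/24
```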

Cluster maintenance commands

View the status of all pods in the cluster:

kubectl get pod --all-namespaces -o wide

View the details of the pod kubernetes-dashboard-1607234690-3bnk2:

kubectl describe pods kubernetes-dashboard-1607234690-3bnk2 --namespace=kube-system

View the logs of kubernetes-dashboard-7486b894c6-x2dtv:

kubectl logs kubernetes-dashboard-7486b894c6-x2dtv --namespace=kube-system

View kubelet error logs:

journalctl -xeu kubelet

Delete the Dashboard:

kubectl delete -f kubernetes-dashboard-admin.yaml

kubectl delete -f kubernetes-dashboard.yaml

Remove a node:

kubectl drain node2 --delete-local-data --force --ignore-daemonsets

kubectl delete node node2

Reset a node when kubeadm init or kubeadm join fails:

kubeadm reset

ifconfig tunl0 down

ip link delete tunl0