Linux Processes, Threads, and Scheduling ---- 02

1. fork, vfork, clone

Copy-on-write

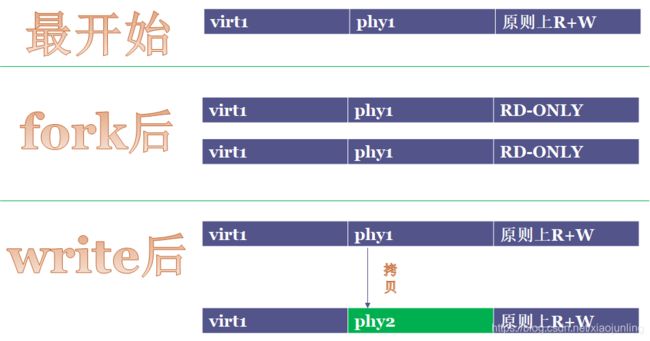

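As the figure shows, at the very beginning there is a single running process, and its virtual addresses are readable and writable.

After fork, a child process exists. The child copies the parent's information and shares the parent's memory, and the shared virtual addresses are marked read-only in both processes.

When either process writes, the write faults. The writing process is given a new physical page, its mapping to the shared physical page is dropped, and the new mapping is marked R+W again. This is the Copy On Write (COW) mechanism.

At this point P1 and P2 still use the same virtual address, but it maps to different physical addresses.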

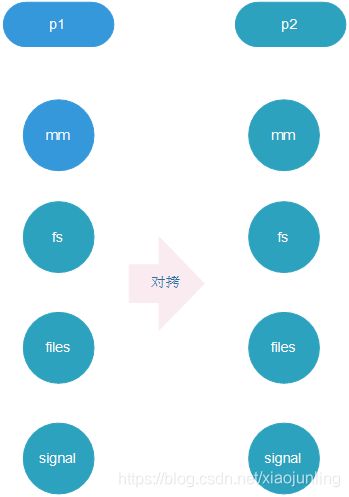

Resources after fork:

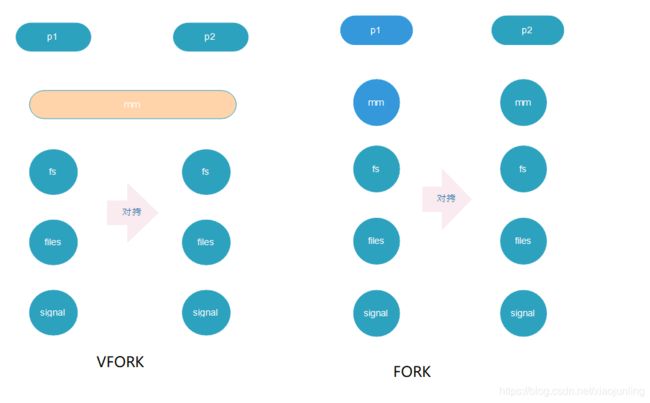

fork():

1. SIGCHLD

The hardest resource to split is memory: giving parent and child separate copies through copy-on-write requires an MMU.

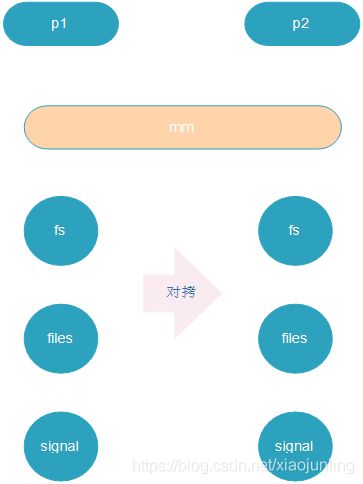

vfork

On a Linux system without an MMU there is no fork; only vfork is available.

No Copy On Write means no fork.

With vfork, the parent blocks until the child calls exit or exec.

vfork():

1. CLONE_VM

2. CLONE_VFORK

3. SIGCHLD

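Differences between fork and vfork: after fork the child has its own copy-on-write view of memory and runs concurrently with the parent; after vfork the child shares the parent's address space (CLONE_VM) and the parent stays blocked until the child calls exit or exec (CLONE_VFORK).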

clone

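pthread_create -> clone: the call passes clone_flags that select which resources the new task shares with its creator; the new task is still scheduled independently.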

1. CLONE_VM

2. CLONE_FS

3. CLONE_FILES

4. CLONE_SIGHAND

5. CLONE_THREAD

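A thread is also an LWP (lightweight process).

clone simply creates a new task_struct.

Thread PIDs: POSIX requires all threads created by the same process to report the same PID, so the kernel added a tgid (thread group ID) to task_struct.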

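    // Build with: gcc thread.c -pthread, then trace the clone() calls with: strace ./a.out
    // ls /proc/$pid/task/ lists the thread IDs
    // gettid obtains the thread ID through a system call
    // pthread_self only returns a user-space identifier
    #include <stdio.h>
    #include <pthread.h>
    #include <unistd.h>
    #include <sys/types.h>
    #include <sys/syscall.h>

    /*
     * glibc 2.30+ declares gettid() itself when _GNU_SOURCE is defined;
     * rename this wrapper if you build with that macro.
     */
    static pid_t gettid(void)
    {
        return syscall(__NR_gettid);
    }

    static void *thread_fun(void *param)
    {
        printf("thread pid:%d, tid:%d pthread_self:%lu\n",
               getpid(), gettid(), (unsigned long)pthread_self());
        while (1)
            ;   /* spin so the thread stays visible under /proc/$pid/task/ */
        return NULL;
    }

    int main(void)
    {
        pthread_t tid1, tid2;
        int ret;

        printf("thread pid:%d, tid:%d pthread_self:%lu\n",
               getpid(), gettid(), (unsigned long)pthread_self());

        /* pthread functions return an error number; they do not return -1 or set errno */
        ret = pthread_create(&tid1, NULL, thread_fun, NULL);
        if (ret != 0) {
            fprintf(stderr, "cannot create new thread: error %d\n", ret);
            return -1;
        }

        ret = pthread_create(&tid2, NULL, thread_fun, NULL);
        if (ret != 0) {
            fprintf(stderr, "cannot create new thread: error %d\n", ret);
            return -1;
        }

        ret = pthread_join(tid1, NULL);
        if (ret != 0) {
            fprintf(stderr, "call pthread_join function fail: error %d\n", ret);
            return -1;
        }

        ret = pthread_join(tid2, NULL);
        if (ret != 0) {
            fprintf(stderr, "call pthread_join function fail: error %d\n", ret);
            return -1;
        }

        return 0;
    }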

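2. Reparenting orphan processes, SUBREAPER

On newer kernels an orphan can be reparented not only to init but also to a subreaper process.

A subreaper process has to set itself up first: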

    /* Become reaper of our children */
    if (prctl(PR_SET_CHILD_SUBREAPER, 1) < 0) {
        log_warning("Failed to make us a subreaper: %m");
        if (errno == EINVAL)
            log_info("Perhaps the kernel version is too old (<3.4?)");
    }

PR_SET_CHILD_SUBREAPER was added in Linux 3.4. Setting it to a non-zero value turns the calling process into a subreaper, which then adopts orphaned descendants the way process 1 does.

life-period example: an experiment to get a feel for reparenting

// Build and run life_period.c, kill the parent, then use pstree to watch the child being reparented

3. Process sleep and wait queues

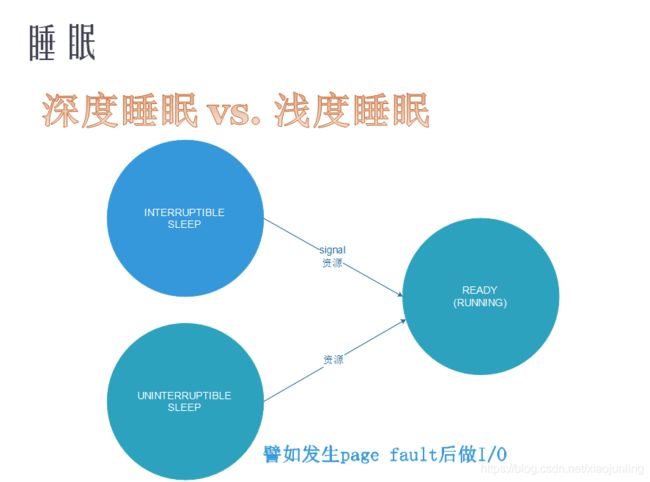

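Sleep: deep sleep and light sleep

Deep sleep (TASK_UNINTERRUPTIBLE): signals cannot interrupt it; only the awaited resource can wake the process.

Light sleep (TASK_INTERRUPTIBLE): either a signal or the awaited resource can wake the process.

When kernel code has to wait for a resource, it usually hangs the task_struct on a wait queue; when the resource arrives, waking the wait queue is all that is needed.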
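As a rough kernel-side illustration of the two sleep modes, the fragment below uses the standard wait queue helpers. It is a sketch that only builds inside a kernel module, and it is not the globalfifo driver: `struct demo_dev`, its fields, and the `demo_*` functions are hypothetical names introduced here.

```c
#include <linux/types.h>
#include <linux/wait.h>
#include <linux/sched.h>

/* Hypothetical device state; not the globalfifo structure. */
struct demo_dev {
	wait_queue_head_t r_wait;	/* waiters are queued here */
	bool has_data;			/* the "resource" being waited for */
};

static void demo_init(struct demo_dev *dev)
{
	init_waitqueue_head(&dev->r_wait);
	dev->has_data = false;
}

/*
 * Light sleep: returns 0 once has_data is true,
 * or -ERESTARTSYS if a signal interrupted the wait.
 */
static int demo_wait_light(struct demo_dev *dev)
{
	return wait_event_interruptible(dev->r_wait, dev->has_data);
}

/* Deep sleep: signals are ignored, only the wake-up ends the wait. */
static void demo_wait_deep(struct demo_dev *dev)
{
	wait_event(dev->r_wait, dev->has_data);
}

/* Producer side: publish the resource, then wake everyone on the queue. */
static void demo_data_arrived(struct demo_dev *dev)
{
	dev->has_data = true;
	wake_up(&dev->r_wait);	/* wakes both interruptible and uninterruptible sleepers */
}
```

Both wait macros re-check the condition after every wake-up, so a waiter only proceeds once the resource is really there.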

The following shows how a process goes to sleep and how it is woken up:

    static ssize_t globalfifo_read(struct file *filp, char __user *buf,
                                   size_t count, loff_t *ppos)

    static ssize_t globalfifo_write(struct file *filp, const char __user *buf,
                                    size_t count, loff_t *ppos)

A case study for fully understanding wait queues

    /*
     * a simple char device driver: globalfifo
     *
     * Copyright (C) 2014 Barry Song ([email protected])
     *
     * Licensed under GPLv2 or later.
     */

    /* ... */

    MODULE_LICENSE("GPL v2");

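4. Process 0 and process 1

Process 0 is the IDLE process. It runs only when no other process is runnable, it has the lowest priority, and while it runs the CPU enters a low-power state.

The parent of process 1 is process 0.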