Implementing CAPTCHA Recognition with TensorFlow (Part 3)

Stacking Building Blocks

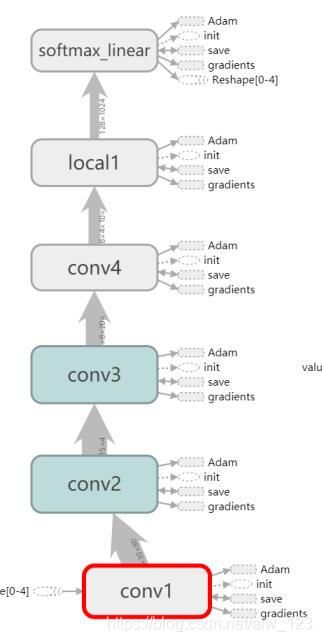

数据都准备好了之后,就剩下定义网络结构了,定义网络结构说白了就是搭积木。我这边的积木是酱紫:

可以看出来我搭了4层卷积+1层FC+1层softmax的辣鸡积木,搭积木的代码是酱紫:

```python
import tensorflow as tf  # TensorFlow 1.x API

import utility  # the project's config module (IMG_HEIGHT, IMG_WIDTH, ...)


# Inference (forward pass). This is a method of the model class; the helpers
# __weight_variable, __bias_variable, __conv2d and __max_pool_2x2 live in the same class.
def __inference(self, images, keep_prob):
    images = tf.reshape(images, (-1, utility.IMG_HEIGHT, utility.IMG_WIDTH, 1))
    # Show a few source images in TensorBoard
    tf.summary.image('src_img', images, 5)

    with tf.variable_scope('conv1') as scope:
        kernel = self.__weight_variable('weights_1', shape=[5, 5, 1, 64])
        biases = self.__bias_variable('biases_1', [64])
        pre_activation = tf.nn.bias_add(self.__conv2d(images, kernel), biases)
        conv1 = tf.nn.relu(pre_activation, name=scope.name)
        tf.summary.histogram('conv1/weights_1', kernel)
        tf.summary.histogram('conv1/biases_1', biases)

        kernel_2 = self.__weight_variable('weights_2', shape=[5, 5, 64, 64])
        biases_2 = self.__bias_variable('biases_2', [64])
        pre_activation = tf.nn.bias_add(self.__conv2d(conv1, kernel_2), biases_2)
        # Bug fix: this was assigned to conv2, so pool1 skipped the second convolution
        conv1 = tf.nn.relu(pre_activation, name=scope.name)
        tf.summary.histogram('conv1/weights_2', kernel_2)
        tf.summary.histogram('conv1/biases_2', biases_2)

        # Visualize feature maps 1-3 after the first block (60x160); slicing with
        # i:i + 1 keeps the channel axis, so no hard-coded reshape is needed
        for i in range(1, 4):
            tf.summary.image('conv1_for_show%d' % i, conv1[:, :, :, i:i + 1], 5)

    # max pooling
    pool1 = self.__max_pool_2x2(conv1, name='pool1')

    with tf.variable_scope('conv2') as scope:
        kernel = self.__weight_variable('weights_1', shape=[5, 5, 64, 64])
        biases = self.__bias_variable('biases_1', [64])
        pre_activation = tf.nn.bias_add(self.__conv2d(pool1, kernel), biases)
        conv2 = tf.nn.relu(pre_activation, name=scope.name)
        tf.summary.histogram('conv2/weights_1', kernel)
        tf.summary.histogram('conv2/biases_1', biases)

        kernel_2 = self.__weight_variable('weights_2', shape=[5, 5, 64, 64])
        biases_2 = self.__bias_variable('biases_2', [64])
        pre_activation = tf.nn.bias_add(self.__conv2d(conv2, kernel_2), biases_2)
        conv2 = tf.nn.relu(pre_activation, name=scope.name)
        tf.summary.histogram('conv2/weights_2', kernel_2)
        tf.summary.histogram('conv2/biases_2', biases_2)

        # Visualize feature maps after the second block (30x80)
        for i in range(1, 4):
            tf.summary.image('conv2_for_show%d' % i, conv2[:, :, :, i:i + 1], 5)

    # max pooling
    pool2 = self.__max_pool_2x2(conv2, name='pool2')

    with tf.variable_scope('conv3') as scope:
        kernel = self.__weight_variable('weights', shape=[3, 3, 64, 64])
        biases = self.__bias_variable('biases', [64])
        pre_activation = tf.nn.bias_add(self.__conv2d(pool2, kernel), biases)
        conv3 = tf.nn.relu(pre_activation, name=scope.name)
        tf.summary.histogram('conv3/weights', kernel)
        tf.summary.histogram('conv3/biases', biases)

        kernel_2 = self.__weight_variable('weights_2', shape=[3, 3, 64, 64])
        biases_2 = self.__bias_variable('biases_2', [64])
        pre_activation = tf.nn.bias_add(self.__conv2d(conv3, kernel_2), biases_2)
        conv3 = tf.nn.relu(pre_activation, name=scope.name)
        tf.summary.histogram('conv3/weights_2', kernel_2)
        tf.summary.histogram('conv3/biases_2', biases_2)

        # Visualize feature maps after the third block (15x40)
        for i in range(1, 4):
            tf.summary.image('conv3_for_show%d' % i, conv3[:, :, :, i:i + 1], 5)

    pool3 = self.__max_pool_2x2(conv3, name='pool3')

    with tf.variable_scope('conv4') as scope:
        kernel = self.__weight_variable('weights', shape=[3, 3, 64, 64])
        biases = self.__bias_variable('biases', [64])
        pre_activation = tf.nn.bias_add(self.__conv2d(pool3, kernel), biases)
        conv4 = tf.nn.relu(pre_activation, name=scope.name)
        tf.summary.histogram('conv4/weights', kernel)
        tf.summary.histogram('conv4/biases', biases)

        # Visualize feature maps after the fourth block (8x20)
        for i in range(1, 4):
            tf.summary.image('conv4_for_show%d' % i, conv4[:, :, :, i:i + 1], 5)

    pool4 = self.__max_pool_2x2(conv4, name='pool4')

    # Fully connected layer
    with tf.variable_scope('local1') as scope:
        # pool4 is 4x10x64 for a 60x160 input; -1 lets TF infer the batch size
        reshape = tf.reshape(pool4, [-1, 4 * 10 * 64])
        weights = self.__weight_variable('weights', shape=[4 * 10 * 64, 1024])
        biases = self.__bias_variable('biases', [1024])
        local1 = tf.nn.relu(tf.matmul(reshape, weights) + biases, name=scope.name)
        tf.summary.histogram('local1/weights', weights)  # fixed: was logging conv4's kernel
        tf.summary.histogram('local1/biases', biases)
        local1_drop = tf.nn.dropout(local1, keep_prob)
        tf.summary.tensor_summary('local1/dropout', local1_drop)

    # Output layer: one CLASSES_NUM-way score vector per character
    with tf.variable_scope('softmax_linear') as scope:
        weights = self.__weight_variable('weights', shape=[1024, self.__CHARS_NUM * self.__CLASSES_NUM])
        biases = self.__bias_variable('biases', [self.__CHARS_NUM * self.__CLASSES_NUM])
        result = tf.add(tf.matmul(local1_drop, weights), biases, name=scope.name)

    # Raw logits of shape [batch, CHARS_NUM, CLASSES_NUM]; no softmax is applied here
    reshaped_result = tf.reshape(result, [-1, self.__CHARS_NUM, self.__CLASSES_NUM])
    return reshaped_result
```
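The code above hard-codes several sizes: 60×160, 30×80, 15×40 and 8×20 in the visualization, plus the `4*10*64` flattened input to the FC layer. These sizes follow from the pooling steps.

`__conv2d` and `__max_pool_2x2` are not shown in this post, so the sketch below makes two assumptions:

- Convolutions use stride 1 and pooling uses stride 2, both with `SAME` padding. Under this assumption each pooling step maps a side of length n to ceil(n/2), which matches 15 turning into 8.
- The input is 60×160, as the first visualization reshape implies.

The helper names are illustrative only.

```python
import math


# Assumption: convolutions keep the spatial size (stride 1, SAME padding) and each
# 2x2 max pool uses stride 2 with SAME padding, so a side of length n becomes ceil(n / 2).
def pooled_shapes(height, width, num_pools):
    """Return the (height, width) of the feature map after each pooling step."""
    shapes = []
    for _ in range(num_pools):
        height = math.ceil(height / 2)
        width = math.ceil(width / 2)
        shapes.append((height, width))
    return shapes


def flat_size(height, width, channels, num_pools):
    """Length of the vector fed to the fully connected layer."""
    h, w = pooled_shapes(height, width, num_pools)[-1]
    return h * w * channels


print(pooled_shapes(60, 160, 4))  # [(30, 80), (15, 40), (8, 20), (4, 10)]
print(flat_size(60, 160, 64, 4))  # 2560, i.e. 4*10*64
```

With `VALID` padding the third pooling step would give 7 instead of 8, and the FC weight shape would no longer match. Keep this in mind if you change the helpers.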
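`__inference` returns raw scores of shape `[batch, CHARS_NUM, CLASSES_NUM]`. The training loop below prints `performance` as the number of correct predictions in a batch, but this post does not show how `accuracy` is computed.

One plausible approach is sketched here in plain Python. It is an assumption, not the repository's actual code:

1. Take the argmax over the class axis for each character.
2. Count a CAPTCHA as correct only if all of its characters match.

Labels are assumed to be class indices. No softmax is needed for this step, since softmax does not change which class scores highest.

```python
def decode(logits):
    """Per-character argmax: [batch][chars][classes] scores -> [batch][chars] class indices."""
    return [[max(range(len(scores)), key=scores.__getitem__) for scores in sample]
            for sample in logits]


def count_correct(logits, labels):
    """Number of samples in the batch whose characters are all predicted correctly."""
    return sum(pred == label for pred, label in zip(decode(logits), labels))


# Toy batch: 2 samples, CHARS_NUM = 2, CLASSES_NUM = 3
logits = [
    [[0.1, 2.0, -1.0], [3.0, 0.0, 0.5]],  # argmax per character: [1, 0]
    [[0.0, 0.2, 0.9], [0.3, 0.1, 0.0]],   # argmax per character: [2, 0]
]
labels = [[1, 0], [2, 1]]
print(decode(logits))                 # [[1, 0], [2, 0]]
print(count_correct(logits, labels))  # 1
```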

Training

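As covered in Part 2, the data is dumped into TFRecords files and read back through a queue. Training therefore has to keep that queue running so it continuously pulls data out of the TFRecords.

Fetching data on the same single thread that trains is not a great idea. Instead, the main thread should spawn child threads whose only job is to push data into the queue. The main thread then just takes a batch from the queue whenever it needs one for training.

TensorFlow already wraps this thread synchronization:

- `tf.train.Coordinator()` creates a thread coordinator.
- `tf.train.start_queue_runners()` starts every queue runner registered in the graph and returns the child threads that feed the queues.

So the code looks like this: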

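```python
import time

import tensorflow as tf  # TensorFlow 1.x API

import utility  # the project's config module (LOG_DIR, MODEL_DIR, ...)

# train_op, loss, accuracy, summary_op and saver are built earlier in the graph (not shown)
with tf.Session() as sess:
    tf.global_variables_initializer().run()
    # Local variables are needed, e.g., for the epoch counter of an input queue with num_epochs
    tf.local_variables_initializer().run()
    writer = tf.summary.FileWriter(utility.LOG_DIR, sess.graph)

    # The coordinator lets the main thread and the queue-runner threads signal each other to stop
    coord = tf.train.Coordinator()
    # Start every QueueRunner registered in the graph; returns the feeding threads
    threads = tf.train.start_queue_runners(sess=sess, coord=coord)

    try:
        step = 0
        while not coord.should_stop():
            start_time = time.time()
            _, loss_value, performance, summaries = sess.run([train_op, loss, accuracy, summary_op])
            duration = time.time() - start_time

            if step % 10 == 0:
                print('>> Trained %d batches: loss = %.2f (%.3f sec), correct in this batch = %d'
                      % (step, loss_value, duration, performance))

            if step % 100 == 0:
                writer.add_summary(summaries, step)
                saver.save(sess, utility.MODEL_DIR, global_step=step)

            step += 1
    except tf.errors.OutOfRangeError:
        # The input queue is exhausted: save a final checkpoint
        print('Training finished')
        saver.save(sess, utility.MODEL_DIR, global_step=step)
    finally:
        # Ask the feeding threads to stop
        coord.request_stop()

    # Wait for the feeding threads to exit
    coord.join(threads)
    writer.close()
```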
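The queue/coordinator machinery in this loop is a classic producer/consumer pattern. To see the moving parts without TensorFlow, here is a plain-Python sketch using `threading` and `queue.Queue`. The stand-ins are:

- A `threading.Event` stands in for `tf.train.Coordinator`.
- One feeder thread stands in for the queue runners.
- An exception raised on an end-of-data sentinel plays the role of `tf.errors.OutOfRangeError`.

All names here (`feeder`, `dequeue_batch`, `train`, `END`, `OutOfRange`) are illustrative and are not part of TensorFlow or the project.

```python
import queue
import threading

END = object()  # end-of-data sentinel pushed by the feeder


class OutOfRange(Exception):
    """Stand-in for tf.errors.OutOfRangeError."""


def feeder(samples, q, stop_event):
    """Producer thread: enqueue every sample, then END, unless asked to stop."""
    for item in list(samples) + [END]:
        while True:
            if stop_event.is_set():  # like checking coord.should_stop() in a queue runner
                return
            try:
                q.put(item, timeout=0.1)  # waits while the queue is full
                break
            except queue.Full:
                pass


def dequeue_batch(q, batch_size):
    """Consumer side: take batch_size items, raising OutOfRange once the data is exhausted."""
    batch = []
    while len(batch) < batch_size:
        item = q.get()
        if item is END:
            raise OutOfRange()
        batch.append(item)
    return batch


def train(samples, batch_size=4, capacity=8):
    q = queue.Queue(maxsize=capacity)
    stop_event = threading.Event()  # stands in for tf.train.Coordinator()
    worker = threading.Thread(target=feeder, args=(samples, q, stop_event))
    worker.start()  # stands in for tf.train.start_queue_runners()
    batches = []
    try:
        while not stop_event.is_set():  # like coord.should_stop()
            batches.append(dequeue_batch(q, batch_size))  # a real loop would run train_op here
    except OutOfRange:
        pass  # data exhausted: the real code saves a final checkpoint here
    finally:
        stop_event.set()  # like coord.request_stop()
    worker.join()  # like coord.join(threads)
    return batches


print(train(range(10)))  # [[0, 1, 2, 3], [4, 5, 6, 7]]
```

The last two samples are dropped because they do not fill a batch. This mirrors `tf.train.batch` and `tf.train.shuffle_batch`, which raise `OutOfRangeError` instead of returning a partial batch under their default `allow_smaller_final_batch=False`.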

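The complete demo code is at: https://github.com/aolingwen/fuck_verifycode

The code is fairly rough, so corrections from more experienced folks are very welcome. If this helped you, a fork or a star would be much appreciated. Thanks!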