Machine Learning 02: Feature Engineering and Decision-Tree Regression

Feature engineering plays a very important role in machine learning work.

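What feature engineering covers

- Data and feature processing

  - Data selection, cleaning, and sampling

  - Handling numeric, categorical, date, statistical, and text features

  - Handling combined features

- Feature selection

  - Filter methods

  - Wrapper methods

  - Embedded methods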

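What machine learning work looks like at internet companies

Most of the work of refining complex models is done by data scientists. What most practitioners spend their time on is:

- Running all kinds of data jobs: MapReduce, Hive SQL, Spark SQL, the data warehouse, and so on

- Cleaning data, analyzing the business, and looking for features

So the day-to-day work of a data mining engineer turns out to be different from what we imagined:

- Researching algorithms and designing sophisticated models? No.

- Applying deep learning and N-layer neural networks? No.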

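Data and feature processing

Numeric features

- Scaling: rescale values to a common range, for example to [0, 1] with MinMaxScaler, or to zero mean and unit variance with StandardScaler

- Domain transforms such as log

- Summary statistics (max, min, mean, std), all available as numpy functions

- Discretization: equal-width binning (fixed-size intervals) or equal-frequency binning (split at percentiles of the data)

- Hash bucketing

- Per-category statistics of the variable, such as a histogram (its distribution)

- Converting numeric features to categorical ones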
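The numeric transforms listed above can be sketched as follows. This is a minimal illustration on a made-up `price` column (the data and variable names are assumptions, not part of the bike dataset), using scikit-learn scalers and pandas binning.

```python
import numpy as np
import pandas as pd
from sklearn.preprocessing import MinMaxScaler, StandardScaler

# Hypothetical toy column standing in for a real numeric feature.
prices = pd.DataFrame({"price": [1.0, 2.0, 4.0, 8.0, 100.0]})

# Range scaling: MinMaxScaler maps the values into [0, 1].
minmax = MinMaxScaler().fit_transform(prices).ravel()

# Standardization: StandardScaler gives zero mean and unit variance (not [0, 1]).
standard = StandardScaler().fit_transform(prices).ravel()

# Log transform to compress a long right tail.
log_price = np.log1p(prices["price"])

# Equal-width bins (same interval size) vs. equal-frequency bins (same number of rows).
equal_width = pd.cut(prices["price"], bins=4, labels=False)
equal_freq = pd.qcut(prices["price"], q=5, labels=False)

print(minmax)
print(equal_width.tolist(), equal_freq.tolist())
```

With a skewed column like this one, equal-width binning puts almost every row into the first bin, while equal-frequency binning spreads the rows evenly, which is usually the reason to prefer quantile bins for long-tailed features.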

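Categorical features

- One-hot encoding (dummy variables): pandas provides the pd.get_dummies function

- Hashing and clustering: use a rule or an algorithm to compress the values into a small, fixed number of categories

- Target statistics: compute statistics of the target for each category and use them as numeric features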
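As a small sketch of the first and third options, the snippet below one-hot encodes a made-up `weather` column and then replaces each category with the mean of the target observed for it. The toy data and the column name `weather_mean_count` are assumptions for illustration.

```python
import pandas as pd

# Hypothetical toy data: a categorical feature and a numeric target.
df = pd.DataFrame({
    "weather": ["clear", "mist", "clear", "rain", "mist", "clear"],
    "count": [120, 80, 100, 20, 60, 140],
})

# One-hot encoding: one indicator column per category.
one_hot = pd.get_dummies(df["weather"], prefix="weather")

# Target statistics: replace each category with the mean target seen for it.
weather_mean = df.groupby("weather")["count"].mean()
df["weather_mean_count"] = df["weather"].map(weather_mean)

print(one_hot.columns.tolist())
print(df)
```

In practice the per-category means should be computed on the training folds only, otherwise the target leaks into the feature.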

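Time features

A time value can be treated as either continuous or discrete.

- Continuous

  - Duration (for example, time spent on a single page)

  - Interval (for example, time since the last purchase or click)

- Discrete

  - Hour of the day (hour_0-23)

  - Day of the week (week_monday…)

  - Week of the year

  - Quarter of the year

  - Weekday or weekend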
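The discrete and continuous views of a timestamp can both be derived with the pandas `.dt` accessor. The event log below is made up for illustration, and `isocalendar()` assumes pandas 1.1 or later.

```python
import pandas as pd

# Hypothetical event log with a timestamp column.
events = pd.DataFrame({
    "ts": pd.to_datetime([
        "2011-01-01 00:00:00",  # a Saturday
        "2011-01-03 08:30:00",  # a Monday
        "2011-07-04 17:45:00",  # a Monday
    ])
})

# Discrete views of the timestamp.
events["hour"] = events["ts"].dt.hour
events["dayofweek"] = events["ts"].dt.dayofweek  # Monday=0 ... Sunday=6
events["quarter"] = events["ts"].dt.quarter
events["week_of_year"] = events["ts"].dt.isocalendar().week  # ISO week number
events["is_weekend"] = events["dayofweek"] >= 5

# Continuous view: hours elapsed since the previous event.
events["hours_since_prev"] = events["ts"].diff().dt.total_seconds() / 3600

print(events)
```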

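Text features

- Bag of words

  Put every word into a "bag" (the vocabulary), then mark each vocabulary word with 1 if it occurs in the sample and 0 otherwise.

  This is available as CountVectorizer in sklearn.feature_extraction.text.

  The idea: count word frequencies over the samples -> sort by frequency -> build a vocabulary from the words that qualify -> represent each sample by 0/1 flags for whether each vocabulary word is present.

  Characteristics: the vocabulary is large, the data is very sparse, and word order is ignored.

- Bag of words extended to n-grams

  Keeps some word-order information, at the cost of an even larger vocabulary.

- tf-idf feature representation

  tf-idf is a statistical weighting method. It has the same problems: a huge vocabulary has to be built, and the resulting representation is sparse.

- Bag of words extended to word2vec representations

  Combined with deep learning models, this approach tends to work better.

  There are many libraries to choose from: Google word2vec, gensim, Facebook fastText.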
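A minimal sketch of the first three representations with scikit-learn follows. The three-sentence corpus is invented for illustration; word2vec is left out because it needs a separate library and a trained model.

```python
from sklearn.feature_extraction.text import CountVectorizer, TfidfVectorizer

# Hypothetical toy corpus.
docs = ["bike rental is cheap", "bike rental is popular", "rain is bad"]

# Binary bag of words: 1 if the word occurs in the document, else 0.
bow = CountVectorizer(binary=True)
X_bow = bow.fit_transform(docs)

# Unigrams plus bigrams: some word order is kept, and the vocabulary grows.
ngram = CountVectorizer(ngram_range=(1, 2), binary=True)
X_ngram = ngram.fit_transform(docs)

# tf-idf weighting over the same unigram vocabulary.
tfidf = TfidfVectorizer()
X_tfidf = tfidf.fit_transform(docs)

print(sorted(bow.vocabulary_))
print(X_bow.toarray())
print(len(bow.vocabulary_), len(ngram.vocabulary_))
```

All three results are sparse matrices, which is how scikit-learn copes with the large, mostly empty vocabularies mentioned above.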

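Statistical features

For example, features used by models in recommendation and ranking:

- Price quantiles of products on an e-commerce site

- Review ratios of a product (share of good, neutral, and bad reviews)

- Cart-to-purchase conversion rate => user-level statistical feature

- Product popularity => item-level statistical feature

- Total clicks/favorites/cart adds/purchases per item => item-level statistical feature

- Average clicks/favorites/cart adds/purchases per user across items => user-level statistical feature

- Brands or products that are trending up => item-level statistical feature (difference type)

- Rank of a product within its category => item-level statistical feature (rank type)

- Total number of users who interacted with a product => item-level statistical feature (sum type)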
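Several of these statistics reduce to group-by aggregations. The sketch below uses an invented interaction log (the column names `user`, `item`, `action` are assumptions) to derive one user-level and two item-level features.

```python
import pandas as pd

# Hypothetical interaction log: one row per (user, item, action).
log = pd.DataFrame({
    "user": ["u1", "u1", "u1", "u2", "u2", "u3"],
    "item": ["a", "a", "b", "a", "b", "a"],
    "action": ["cart", "buy", "cart", "cart", "click", "click"],
})

# User level: cart-to-purchase conversion rate.
per_user = pd.crosstab(log["user"], log["action"])
per_user["cart_to_buy"] = (per_user["buy"] / per_user["cart"]).fillna(0.0)

# Item level: number of distinct users who interacted (sum type)
# and the item's position by that number (rank type).
per_item = log.groupby("item")["user"].nunique().rename("n_users").to_frame()
per_item["rank"] = per_item["n_users"].rank(ascending=False, method="min")

print(per_user)
print(per_item)
```

The resulting tables can be joined back onto the training rows by `user` or `item` to serve as model features.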

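Combined features

Features can be combined by hand. In industry, it is more common to let a model rank the features by importance, build combinations from them, and then train the final model. For example:

- A GBDT trained on the samples yields feature importances (feature_importance), and the paths through its decision trees yield useful feature combinations

- Combined features + original features -> LR model training. Facebook was an early user of this approach, and it is now widely used at internet companies.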
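One way to read the GBDT + LR recipe is sketched below with scikit-learn: the leaf each sample falls into in every tree acts as a learned feature combination, which is one-hot encoded and concatenated with the original features before fitting a logistic regression. The synthetic data, the 50/50 split, and all hyperparameters are assumptions for illustration, not the setup any particular company uses.

```python
from scipy.sparse import csr_matrix, hstack
from sklearn.datasets import make_classification
from sklearn.ensemble import GradientBoostingClassifier
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import train_test_split
from sklearn.preprocessing import OneHotEncoder

# Synthetic binary-classification data standing in for click logs.
X, y = make_classification(n_samples=2000, n_features=20, n_informative=8,
                           random_state=0)

# Train the GBDT and the LR on separate halves.
X_gbdt, X_lr, y_gbdt, y_lr = train_test_split(X, y, test_size=0.5, random_state=0)

gbdt = GradientBoostingClassifier(n_estimators=30, max_depth=3, random_state=0)
gbdt.fit(X_gbdt, y_gbdt)

# apply() returns, for each sample, the index of the leaf reached in every tree.
leaves = gbdt.apply(X_lr)[:, :, 0]

# One-hot encode the leaf indices: each leaf is one combined feature.
leaf_features = OneHotEncoder(handle_unknown="ignore").fit_transform(leaves)

# Combined features + original features -> LR.
combined = hstack([csr_matrix(X_lr), leaf_features]).tocsr()
lr = LogisticRegression(max_iter=1000).fit(combined, y_lr)

print(leaves.shape, combined.shape)
print("LR training accuracy: {:.3f}".format(lr.score(combined, y_lr)))
```

Fitting the two models on different halves is a design choice that keeps the LR from simply memorizing leaves the GBDT already fit to the same rows.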

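Feature selection

- Why

  - Redundancy: some features are highly correlated with each other, which hurts performance

  - Noise: some features have a negative effect on the predictions

- Feature selection vs. dimensionality reduction

  - Feature selection: drop features that have little to do with the prediction target

  - Dimensionality reduction: compute combinations of features to form new ones

- Feature selection methods

  - Filter: measure how strongly each individual feature relates to the target and keep the top k. Drawback: relationships between features are not considered

  - Wrapper: train models on subsets of features and search for the best subset, for example with sklearn.feature_selection.RFE

  - Embedded: derive feature importance from model training itself, for example through regularization

As an example, early e-commerce systems used LR for CTR prediction on the order of hundreds of millions of features, most of them sparse. An LR model with L1 regularization reduced these to the order of tens of millions, and the model was then retrained on what remained. In sklearn this corresponds to sklearn.feature_selection.SelectFromModel.

Feature selection is straightforward with the algorithms sklearn provides. A few APIs are worth knowing:

- Filter: sklearn.feature_selection.SelectKBest

- Wrapper: sklearn.feature_selection.RFE

- Embedded: sklearn.feature_selection.SelectFromModel with a linear model using L1 regularization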
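The three APIs can be compared side by side on synthetic data. This is a sketch under assumed settings (10 features of which 3 are informative, `k=3`, `alpha=1.0`); real data will need its own choices.

```python
from sklearn.datasets import make_regression
from sklearn.feature_selection import RFE, SelectFromModel, SelectKBest, f_regression
from sklearn.linear_model import Lasso, LinearRegression

# Synthetic regression data: 10 features, only 3 of them informative.
X, y = make_regression(n_samples=300, n_features=10, n_informative=3,
                       noise=1.0, random_state=0)

# Filter: score each feature against the target on its own and keep the top k.
X_filter = SelectKBest(score_func=f_regression, k=3).fit_transform(X, y)

# Wrapper: repeatedly fit a model and drop the weakest feature.
rfe = RFE(estimator=LinearRegression(), n_features_to_select=3).fit(X, y)

# Embedded: L1 regularization drives unhelpful coefficients to zero.
embedded = SelectFromModel(Lasso(alpha=1.0)).fit(X, y)

print(X_filter.shape)
print(rfe.support_)
print(embedded.get_support())
```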

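Kaggle bike rental prediction competition

The Kaggle bike rental prediction competition is based on a city bike-sharing system. The data covers two years of hourly rental records from Washington. The training set consists of the first 19 days of each month, and the test set consists of the days from the 20th onward, which is what we have to predict. The target is a continuous value, so in machine learning terms this is a regression problem.

About the dataset

Overview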

Bike sharing systems are a means of renting bicycles where the process of obtaining membership, rental, and bike return is automated via a network of kiosk locations throughout a city. Using these systems, people are able to rent a bike from one location and return it to a different place on an as-needed basis. Currently, there are over 500 bike-sharing programs around the world.

Data fields

- datetime - hourly date + timestamp

- season - 1 = spring, 2 = summer, 3 = fall, 4 = winter

- holiday - whether the day is considered a holiday

- workingday - whether the day is neither a weekend nor holiday

- weather -

  - 1: Clear, Few clouds, Partly cloudy

  - 2: Mist + Cloudy, Mist + Broken clouds, Mist + Few clouds, Mist

  - 3: Light Snow, Light Rain + Thunderstorm + Scattered clouds, Light Rain + Scattered clouds

  - 4: Heavy Rain + Ice Pellets + Thunderstorm + Mist, Snow + Fog

- temp - temperature in Celsius

- atemp - “feels like” temperature in Celsius

- humidity - relative humidity

- windspeed - wind speed

- casual - number of non-registered user rentals initiated

- registered - number of registered user rentals initiated

- count - number of total rentals (Dependent Variable)

Reading the data and a first look

We will use pandas, the dominant data analysis package in Python, as usual.

numpy, the scientific computing package, is needed as well.

import numpy as np

import pandas as pd

import matplotlib.pyplot as plt

import seaborn as sns

%matplotlib inline

df_train = pd.read_csv('kaggle_bike_competition_train.csv',header = 0)

The data is now in memory. Let's look at the first 5 rows.

df_train.head(5)

| | datetime | season | holiday | workingday | weather | temp | atemp | humidity | windspeed | casual | registered | count |

|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 0 | 2011-01-01 00:00:00 | 1 | 0 | 0 | 1 | 9.84 | 14.395 | 81 | 0.0 | 3 | 13 | 16 |

| 1 | 2011-01-01 01:00:00 | 1 | 0 | 0 | 1 | 9.02 | 13.635 | 80 | 0.0 | 8 | 32 | 40 |

| 2 | 2011-01-01 02:00:00 | 1 | 0 | 0 | 1 | 9.02 | 13.635 | 80 | 0.0 | 5 | 27 | 32 |

| 3 | 2011-01-01 03:00:00 | 1 | 0 | 0 | 1 | 9.84 | 14.395 | 75 | 0.0 | 3 | 10 | 13 |

| 4 | 2011-01-01 04:00:00 | 1 | 0 | 0 | 1 | 9.84 | 14.395 | 75 | 0.0 | 0 | 1 | 1 |

This spreadsheet-like layout is easy to read. Next, let pandas tell us more about the data.

First, the field names and types:

df_train.dtypes

datetime object

season int64

holiday int64

workingday int64

weather int64

temp float64

atemp float64

humidity int64

windspeed float64

casual int64

registered int64

count int64

dtype: object

Next, how big is the task (how much data is there)?

# Ask for the shape

df_train.shape

(10886, 12)

In total there are 10886 rows, each with 12 columns.

Remember the dirty-data problem mentioned earlier? Let's check whether any fields have missing values.

df_train.count()

datetime 10886

season 10886

holiday 10886

workingday 10886

weather 10886

temp 10886

atemp 10886

humidity 10886

windspeed 10886

casual 10886

registered 10886

count 10886

dtype: int64

Evidently the recording system was kept carefully: every column has 10886 non-null entries, so there are no missing values at all.
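Comparing `count()` against the row count works, but pandas can also report the number of missing entries directly. A minimal sketch on a made-up frame (the values are illustrative only):

```python
import numpy as np
import pandas as pd

# Hypothetical toy frame with one missing value.
toy = pd.DataFrame({"temp": [9.84, np.nan, 9.02], "humidity": [81, 80, 80]})

# Number of missing entries per column.
missing_per_column = toy.isnull().sum()

print(missing_per_column)
```

Running the same `isnull().sum()` call on `df_train` would be expected to show zero for every column, given the counts above.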

type(df_train.datetime)

pandas.core.series.Series

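# Parse the strings once to confirm they are valid timestamps; the datetime column itself stays as strings

parsed_datetime = pd.to_datetime(df_train.datetime)

We handle time first, because it carries a lot of information; after all, things change over time.

# Extract month, day of week, and hour into three separate columns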

df_train['month'] = pd.DatetimeIndex(df_train.datetime).month

df_train['day'] = pd.DatetimeIndex(df_train.datetime).dayofweek

df_train['hour'] = pd.DatetimeIndex(df_train.datetime).hour

# Take another look

df_train.head(10)

| | datetime | season | holiday | workingday | weather | temp | atemp | humidity | windspeed | casual | registered | count | month | day | hour |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 0 | 2011-01-01 00:00:00 | 1 | 0 | 0 | 1 | 9.84 | 14.395 | 81 | 0.0000 | 3 | 13 | 16 | 1 | 5 | 0 |

| 1 | 2011-01-01 01:00:00 | 1 | 0 | 0 | 1 | 9.02 | 13.635 | 80 | 0.0000 | 8 | 32 | 40 | 1 | 5 | 1 |

| 2 | 2011-01-01 02:00:00 | 1 | 0 | 0 | 1 | 9.02 | 13.635 | 80 | 0.0000 | 5 | 27 | 32 | 1 | 5 | 2 |

| 3 | 2011-01-01 03:00:00 | 1 | 0 | 0 | 1 | 9.84 | 14.395 | 75 | 0.0000 | 3 | 10 | 13 | 1 | 5 | 3 |

| 4 | 2011-01-01 04:00:00 | 1 | 0 | 0 | 1 | 9.84 | 14.395 | 75 | 0.0000 | 0 | 1 | 1 | 1 | 5 | 4 |

| 5 | 2011-01-01 05:00:00 | 1 | 0 | 0 | 2 | 9.84 | 12.880 | 75 | 6.0032 | 0 | 1 | 1 | 1 | 5 | 5 |

| 6 | 2011-01-01 06:00:00 | 1 | 0 | 0 | 1 | 9.02 | 13.635 | 80 | 0.0000 | 2 | 0 | 2 | 1 | 5 | 6 |

| 7 | 2011-01-01 07:00:00 | 1 | 0 | 0 | 1 | 8.20 | 12.880 | 86 | 0.0000 | 1 | 2 | 3 | 1 | 5 | 7 |

| 8 | 2011-01-01 08:00:00 | 1 | 0 | 0 | 1 | 9.84 | 14.395 | 75 | 0.0000 | 1 | 7 | 8 | 1 | 5 | 8 |

| 9 | 2011-01-01 09:00:00 | 1 | 0 | 0 | 1 | 13.12 | 17.425 | 76 | 0.0000 | 8 | 6 | 14 | 1 | 5 | 9 |

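Now that the timestamp string has been broken out, the original field just takes up space, so we drop it.

As a first, crude version we also drop the registered and casual rental counts; we will come back to another way of handling them later.

# To be safe, keep a copy of the full frame first

df_train_origin = df_train.copy()

# Drop the fields we don't need; axis=1 means columns

df_train = df_train.drop(['datetime','casual','registered'], axis = 1)

# Take a look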

df_train.head(5)

| | season | holiday | workingday | weather | temp | atemp | humidity | windspeed | count | month | day | hour |

|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 0 | 1 | 0 | 0 | 1 | 9.84 | 14.395 | 81 | 0.0 | 16 | 1 | 5 | 0 |

| 1 | 1 | 0 | 0 | 1 | 9.02 | 13.635 | 80 | 0.0 | 40 | 1 | 5 | 1 |

| 2 | 1 | 0 | 0 | 1 | 9.02 | 13.635 | 80 | 0.0 | 32 | 1 | 5 | 2 |

| 3 | 1 | 0 | 0 | 1 | 9.84 | 14.395 | 75 | 0.0 | 13 | 1 | 5 | 3 |

| 4 | 1 | 0 | 0 | 1 | 9.84 | 14.395 | 75 | 0.0 | 1 | 1 | 5 | 4 |

Nice, much cleaner.

df_train.shape

(10886, 12)

Split the data into two parts:

1. df_train_target: the target, i.e. the count field.

2. df_train_data: the data used to produce features.

df_train_target = df_train['count'].values

df_train_data = df_train.drop(['count'],axis = 1).values

print( 'df_train_data shape is ', df_train_data.shape )

print( 'df_train_target shape is ', df_train_target.shape )

df_train_data shape is (10886, 11)

df_train_target shape is (10886,)

Data visualization

Data types and summary statistics

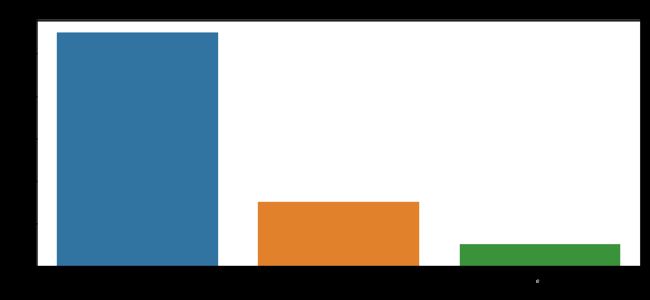

dataTypeDf = pd.DataFrame(df_train_origin.dtypes.value_counts()).reset_index().rename(columns={

"index":"variableType",0:"count"})

dataTypeDf.head()

| | variableType | count |

|---|---|---|

| 0 | int64 | 11 |

| 1 | float64 | 3 |

| 2 | object | 1 |

fig,ax = plt.subplots()

fig.set_size_inches(12,5)

sns.barplot(data=dataTypeDf,x="variableType",y="count",ax=ax)

g=ax.set(xlabel='Variable Type', ylabel='Count',title="Variables DataType Count")

Analysis of the target field

At first look, the “count” variable contains a lot of outlier data points, which skews the distribution to the right (there are many data points beyond the outer quartile limit). In addition, the following inferences can be made from the simple boxplots below.

- Spring has a relatively lower count. The dip in the median value in the boxplot gives evidence for it.

- The boxplot by “Hour Of The Day” is quite interesting. The median values are relatively higher at 7AM - 8AM and 5PM - 6PM. This can be attributed to regular school and office users at those times.

- Most of the outlier points come from “Working Day” rather than “Non Working Day”. This is visible in the fourth boxplot.

df_train_origin.head()

| | datetime | season | holiday | workingday | weather | temp | atemp | humidity | windspeed | casual | registered | count | month | day | hour |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 0 | 2011-01-01 00:00:00 | 1 | 0 | 0 | 1 | 9.84 | 14.395 | 81 | 0.0 | 3 | 13 | 16 | 1 | 5 | 0 |

| 1 | 2011-01-01 01:00:00 | 1 | 0 | 0 | 1 | 9.02 | 13.635 | 80 | 0.0 | 8 | 32 | 40 | 1 | 5 | 1 |

| 2 | 2011-01-01 02:00:00 | 1 | 0 | 0 | 1 | 9.02 | 13.635 | 80 | 0.0 | 5 | 27 | 32 | 1 | 5 | 2 |

| 3 | 2011-01-01 03:00:00 | 1 | 0 | 0 | 1 | 9.84 | 14.395 | 75 | 0.0 | 3 | 10 | 13 | 1 | 5 | 3 |

| 4 | 2011-01-01 04:00:00 | 1 | 0 | 0 | 1 | 9.84 | 14.395 | 75 | 0.0 | 0 | 1 | 1 | 1 | 5 | 4 |

fig, axes = plt.subplots(nrows=2,ncols=2)

fig.set_size_inches(12, 10)

sns.boxplot(data=df_train_origin,y="count",orient="v",ax=axes[0][0])

sns.boxplot(data=df_train_origin,y="count",x="season",orient="v",ax=axes[0][1])

sns.boxplot(data=df_train_origin,y="count",x="hour",orient="v",ax=axes[1][0])

sns.boxplot(data=df_train_origin,y="count",x="workingday",orient="v",ax=axes[1][1])

axes[0][1].set(xlabel='Season', ylabel='Count',title="Box Plot On Count Across Season")

axes[1][0].set(xlabel='Hour Of The Day', ylabel='Count',title="Box Plot On Count Across Hour Of The Day")

axes[1][1].set(xlabel='Working Day', ylabel='Count',title="Box Plot On Count Across Working Day")

[Text(0,0.5,'Count'),

Text(0.5,0,'Working Day'),

Text(0.5,1,'Box Plot On Count Across Working Day')]

Correlation analysis

One common way to understand how a dependent variable is influenced by numerical features is to compute a correlation matrix between them. Let's plot the correlation between “count” and [“temp”, “atemp”, “humidity”, “windspeed”].

- temp and humidity have a positive and a negative correlation with count, respectively. Although neither correlation is very prominent, count still shows some dependency on “temp” and “humidity”.

- windspeed is not going to be a really useful numerical feature, as its correlation value with “count” shows.

- “atemp” is not taken into account, since “atemp” and “temp” are strongly correlated with each other. During model building one of the two has to be dropped, because together they introduce multicollinearity.

- “casual” and “registered” are also not taken into account, since they are leakage variables by nature and need to be dropped during model building.

A regression plot in seaborn is one useful way to depict the relationship between two features, for example “count” vs. “temp”, “humidity”, and “windspeed”.

corrMatt = df_train_origin[["temp","atemp","casual","registered","humidity","windspeed","count"]].corr()

# Hide the cells above the diagonal so that each pair of variables is shown once

mask = np.triu(np.ones_like(corrMatt, dtype=bool), k=1)

fig,ax= plt.subplots()

fig.set_size_inches(20,10)

sns.heatmap(corrMatt, mask=mask,vmax=.8, square=True,annot=True)

Visualizing count against the key fields

- It is quite obvious that people tend to rent bikes during the summer season, since it is really conducive to riding. Therefore June, July and August have relatively higher demand for bicycles.

- On weekdays more people tend to rent bicycles around 7AM-8AM and 5PM-6PM. As mentioned earlier, this can be attributed to regular school and office commuters.

- The above pattern is not observed on “Saturday” and “Sunday”, when more people tend to rent bicycles between 10AM and 4PM.

- The peak user count around 7AM-8AM and 5PM-6PM is purely contributed by registered users.

import calendar

from datetime import datetime

fig,(ax1,ax2,ax3,ax4)= plt.subplots(nrows=4)

fig.set_size_inches(12,20)

sortOrder = ["January","February","March","April","May","June","July","August","September","October","November","December"]

hueOrder = ["Sunday","Monday","Tuesday","Wednesday","Thursday","Friday","Saturday"]

df_train_origin["date"] = df_train_origin.datetime.apply(lambda x : x.split()[0])

df_train_origin["month"] = df_train_origin.date.apply(lambda dateString : calendar.month_name[datetime.strptime(dateString,"%Y-%m-%d").month])

df_train_origin["weekday"] = df_train_origin.date.apply(lambda dateString : calendar.day_name[datetime.strptime(dateString,"%Y-%m-%d").weekday()])

monthAggregated = pd.DataFrame(df_train_origin.groupby("month")["count"].mean()).reset_index()

monthSorted = monthAggregated.sort_values(by="count",ascending=False)

sns.barplot(data=monthSorted,x="month",y="count",ax=ax1,order=sortOrder)

ax1.set(xlabel='Month', ylabel='Average Count',title="Average Count By Month")

hourAggregated = pd.DataFrame(df_train_origin.groupby(["hour","season"],sort=True)["count"].mean()).reset_index()

sns.pointplot(x=hourAggregated["hour"], y=hourAggregated["count"],hue=hourAggregated["season"], data=hourAggregated, join=True,ax=ax2)

ax2.set(xlabel='Hour Of The Day', ylabel='Users Count',title="Average Users Count By Hour Of The Day Across Season",label='big')

hourAggregated = pd.DataFrame(df_train_origin.groupby(["hour","weekday"],sort=True)["count"].mean()).reset_index()

sns.pointplot(x=hourAggregated["hour"], y=hourAggregated["count"],hue=hourAggregated["weekday"],hue_order=hueOrder, data=hourAggregated, join=True,ax=ax3)

ax3.set(xlabel='Hour Of The Day', ylabel='Users Count',title="Average Users Count By Hour Of The Day Across Weekdays",label='big')

hourTransformed = pd.melt(df_train_origin[["hour","casual","registered"]], id_vars=['hour'], value_vars=['casual', 'registered'])

hourAggregated = pd.DataFrame(hourTransformed.groupby(["hour","variable"],sort=True)["value"].mean()).reset_index()

sns.pointplot(x=hourAggregated["hour"], y=hourAggregated["value"],hue=hourAggregated["variable"],hue_order=["casual","registered"], data=hourAggregated, join=True,ax=ax4)

ax4.set(xlabel='Hour Of The Day', ylabel='Users Count',title="Average Users Count By Hour Of The Day Across User Type",label='big')

[Text(0,0.5,'Users Count'),

Text(0.5,0,'Hour Of The Day'),

Text(0.5,1,'Average Users Count By Hour Of The Day Across User Type'),

None]

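Machine learning algorithms

As the steps below show, applying machine learning algorithms is to a large extent parameter tuning: different parameters give different results (for example the regularization coefficient, the depth and number of trees in tree-based algorithms, the distance metric, and so on).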

import sklearn

print('sklearn.__version__ = ',sklearn.__version__)

# Linear model wx + b, usable for regression

# https://scikit-learn.org/stable/modules/generated/sklearn.linear_model.Ridge.html#sklearn.linear_model.Ridge

## Ridge regression; loss function: ||y - Xw||^2_2 + alpha * ||w||^2_2

from sklearn import linear_model

from sklearn import svm

# https://scikit-learn.org/stable/modules/classes.html#sklearn.cross_validation

# Evaluate the score of each run

from sklearn.model_selection import cross_validate

# https://scikit-learn.org/stable/modules/generated/sklearn.model_selection.learning_curve.html#sklearn.model_selection.learning_curve

# Learning curve

from sklearn.model_selection import learning_curve

# Grid search over an estimator's parameters to find the best combination

from sklearn.model_selection import GridSearchCV

# https://scikit-learn.org/stable/modules/generated/sklearn.model_selection.train_test_split.html#sklearn.model_selection.train_test_split

from sklearn.model_selection import train_test_split

# Cross-validation splits of the dataset; the scores can be averaged

##https://scikit-learn.org/stable/modules/generated/sklearn.model_selection.ShuffleSplit.html#sklearn.model_selection.ShuffleSplit

from sklearn.model_selection import ShuffleSplit

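# Many trees decide together; the regression outputs are averaged

## Tree models overfit easily: limit the number and depth of trees, and adjust the regularization settings (which control the number of leaf nodes)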

from sklearn.ensemble import RandomForestRegressor

# https://scikit-learn.org/stable/modules/classes.html#module-sklearn.metrics

# Evaluation metrics for regression models

from sklearn.metrics import explained_variance_score

sklearn.__version__ = 0.23.2

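The dataset is not large, so there is room to try several models.

We again use cross-validation (the validation split is about 20% of the data) to see how the models do. We will try Support Vector Regression, Ridge Regression, and Random Forest Regressor. Each model is run 5 times so the results can be averaged.

These models have not been covered yet? That's fine: treat this as practice, and get used to reading the documentation.

- Support Vector Regression

- Ridge Regression

- Random Forest Regressor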
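Averaging over the splits does not have to be done by hand: `cross_validate` runs the fit/score loop and returns the per-split scores. The sketch below uses synthetic regression data in place of the bike features, so the numbers it prints say nothing about the competition data.

```python
from sklearn.datasets import make_regression
from sklearn.linear_model import Ridge
from sklearn.model_selection import ShuffleSplit, cross_validate

# Synthetic data standing in for the bike features and counts.
X, y = make_regression(n_samples=500, n_features=11, noise=10.0, random_state=0)

# Five random 80/20 splits.
cv = ShuffleSplit(n_splits=5, test_size=0.2, random_state=0)

# Fit on each split and collect R^2 on both the training and the held-out part.
results = cross_validate(Ridge(), X, y, cv=cv, scoring="r2",
                         return_train_score=True)

print("mean train R^2: {:.3f}".format(results["train_score"].mean()))
print("mean test R^2: {:.3f}".format(results["test_score"].mean()))
```

Swapping `Ridge()` for `svm.SVR(...)` or `RandomForestRegressor(...)` gives the same comparison for the other two models.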

# Split the data into training and test parts

cv = ShuffleSplit(n_splits=5, random_state=0, test_size=0.2, train_size=None)

# Try each model in turn

## For how the score is computed, see

## https://scikit-learn.org/stable/modules/generated/sklearn.ensemble.RandomForestRegressor.html#sklearn.ensemble.RandomForestRegressor.score

print("Ridge Regression")

for train, test in cv.split(df_train_data):
    model = linear_model.Ridge().fit(df_train_data[train], df_train_target[train])
    print("train score: {0:.3f}, test score: {1:.3f}".format(
        model.score(df_train_data[train], df_train_target[train]), model.score(df_train_data[test], df_train_target[test])))

print("Support Vector Regression/SVR(kernel='rbf',C=10,gamma=.001)")

for train, test in cv.split(df_train_data):
    model = svm.SVR(kernel='rbf', C=10, gamma=.001).fit(df_train_data[train], df_train_target[train])
    print("train score: {0:.3f}, test score: {1:.3f}".format(
        model.score(df_train_data[train], df_train_target[train]), model.score(df_train_data[test], df_train_target[test])))

print("Random Forest(n_estimators = 100)")

for train, test in cv.split(df_train_data):
    model = RandomForestRegressor(n_estimators=100).fit(df_train_data[train], df_train_target[train])
    print("train score: {0:.3f}, test score: {1:.3f}".format(
        model.score(df_train_data[train], df_train_target[train]), model.score(df_train_data[test], df_train_target[test])))

Ridge Regression

train score: 0.339, test score: 0.332

train score: 0.330, test score: 0.370

train score: 0.342, test score: 0.320

train score: 0.342, test score: 0.320

train score: 0.336, test score: 0.342

Support Vector Regression/SVR(kernel='rbf',C=10,gamma=.001)

train score: 0.417, test score: 0.408

train score: 0.406, test score: 0.452

train score: 0.419, test score: 0.390

train score: 0.421, test score: 0.399

train score: 0.415, test score: 0.416

Random Forest(n_estimators = 100)

train score: 0.982, test score: 0.865

train score: 0.981, test score: 0.879

train score: 0.982, test score: 0.870

train score: 0.981, test score: 0.872

train score: 0.981, test score: 0.866

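As expected, random forest regression gives the best result.

That said, the other models may simply have poorly chosen parameters. Trying other settings is left as an exercise.

How should parameters be tuned? There is a tool for that, grid search (GridSearchCV), which searches over the parameters for you while you have a coffee.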

X = df_train_data

y = df_train_target

X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.2, random_state=0)

tuned_parameters = [{'n_estimators': [50, 100, 200]}]

## For how the score is computed, see

## https://scikit-learn.org/stable/modules/generated/sklearn.ensemble.RandomForestRegressor.html#sklearn.ensemble.RandomForestRegressor.score

scores = ['r2']

for score in scores:
    print('*' * 60)
    print('Regression scoring metric:', score)
    # scoring: the metric used to evaluate each candidate model
    ## More scoring options: https://scikit-learn.org/stable/modules/model_evaluation.html#scoring
    ## sklearn provides many metrics, and you can also implement your own
    clf = GridSearchCV(RandomForestRegressor(), tuned_parameters, cv=5, scoring=score)
    clf.fit(X_train, y_train)
    # Attributes available on the fitted search object:
    ## https://scikit-learn.org/stable/modules/generated/sklearn.model_selection.GridSearchCV.html#sklearn.model_selection.GridSearchCV
    print("Best parameters found:")
    # best_estimator_ returns the best estimator chosen by the search
    print(clf.best_estimator_)
    print("Scores:")
    for params, mean_test_score, std_test_score in zip(clf.cv_results_['params'], clf.cv_results_['mean_test_score'], clf.cv_results_['std_test_score']):
        # Report the mean score and twice the standard deviation across the folds
        print("%0.3f (+/-%0.03f) for %r" % (mean_test_score, std_test_score * 2, params))

Output of the recorded run (in this run the spread was computed from a single number, so it prints as 0.000):

************************************************************

Regression scoring metric: r2

Best parameters found:

RandomForestRegressor(n_estimators=200)

Scores:

0.860 (+/-0.000) for {'n_estimators': 50}

0.862 (+/-0.000) for {'n_estimators': 100}

0.862 (+/-0.000) for {'n_estimators': 200}

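As you can see, grid search makes picking parameters quite convenient, and you can confidently try it on the other models above as well.

We should also check what state the model is in: is it overfitting or underfitting?

Once again, the learning curve is the tool for that.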

def plot_learning_curve(estimator, title, X, y, ylim=None, cv=None,
                        n_jobs=1, train_sizes=np.linspace(.1, 1.0, 5)):
    # Plot mean training and cross-validation scores against training set size,
    # with a shaded band of one standard deviation around each curve
    plt.figure()
    plt.title(title)
    if ylim is not None:
        plt.ylim(*ylim)
    plt.xlabel("Training examples")
    plt.ylabel("Score")
    train_sizes, train_scores, test_scores = learning_curve(
        estimator, X, y, cv=cv, n_jobs=n_jobs, train_sizes=train_sizes)
    train_scores_mean = np.mean(train_scores, axis=1)
    train_scores_std = np.std(train_scores, axis=1)
    test_scores_mean = np.mean(test_scores, axis=1)
    test_scores_std = np.std(test_scores, axis=1)
    plt.grid()
    plt.fill_between(train_sizes, train_scores_mean - train_scores_std,
                     train_scores_mean + train_scores_std, alpha=0.1,
                     color="r")
    plt.fill_between(train_sizes, test_scores_mean - test_scores_std,
                     test_scores_mean + test_scores_std, alpha=0.1, color="g")
    plt.plot(train_sizes, train_scores_mean, 'o-', color="r",
             label="Training score")
    plt.plot(train_sizes, test_scores_mean, 'o-', color="g",
             label="Cross-validation score")
    plt.legend(loc="best")
    return plt

title = "Learning Curves (Random Forest, n_estimators = 100)"

cv = ShuffleSplit(n_splits=5, random_state=0, test_size=0.2, train_size=None)

# n_estimators could also be 200, the best value reported by GridSearchCV; train the model and check for overfitting

estimator = RandomForestRegressor(n_estimators = 100)

plot_learning_curve(estimator, title, X, y, (0.0, 1.01), cv=cv, n_jobs=4)

plt.show()

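The gap between the training and test curves is large, which points to overfitting.

Random forest is a very high-capacity learner. The model comparison above shows the same thing: the training and test scores are quite far apart, so the overfitting is fairly evident.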

# Try to reduce the overfitting; this may or may not work

print("Random Forest(n_estimators=100, max_features=0.6, max_depth=15)")

cv = ShuffleSplit(n_splits=5, random_state=0, test_size=0.2, train_size=None)

for train, test in cv.split(df_train_data):
    model = RandomForestRegressor(n_estimators=100, max_features=0.6, max_depth=15).fit(df_train_data[train], df_train_target[train])
    print("train score: {0:.3f}, test score: {1:.3f}".format(
        model.score(df_train_data[train], df_train_target[train]), model.score(df_train_data[test], df_train_target[test])))

Random Forest(n_estimators=100, max_features=0.6, max_depth=15)

train score: 0.965, test score: 0.868

train score: 0.965, test score: 0.883

train score: 0.965, test score: 0.872

train score: 0.965, test score: 0.875

train score: 0.966, test score: 0.869

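This is only a small case study of simple feature engineering and tree-based regression. To improve the model further, features need to be extracted based on an understanding of the business.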