Baidu PaddlePaddle Seven-Day Deep Learning Check-In Camp: Reflections and Technical Notes

- 七日打卡心得

- 七日打卡技术收获

-

- Day1-Python基础练习

-

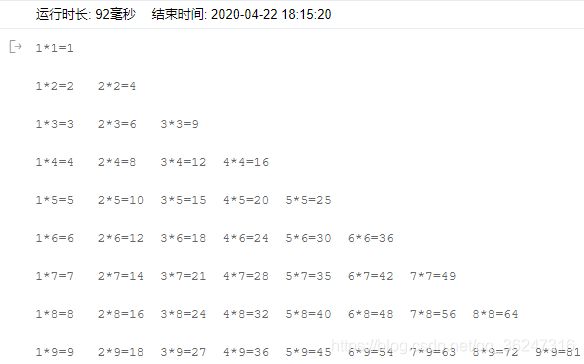

- 输出 9*9 乘法口诀表

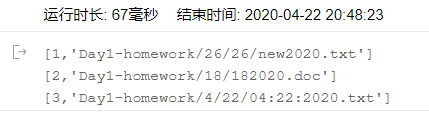

- 查找特定名称文件

- Day2-《青春有你2》选手信息爬取

- Day3-《青春有你2》选手数据分析

- Day4-《青春有你2》选手识别

- Day5-综合大作业

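Reflections on the Seven-Day Check-In

I gained a great deal from this round of the seven-day check-in camp.

The course starts from Python basics, so it suits students who have some background in another programming language, or who have touched Python but lack a solid foundation. The material progresses from easy to hard and covers a lot of ground; the assignments are moderately difficult and complement the lectures well. The daily check-in assignments and the atmosphere of everyone studying together kept me motivated and effectively countered my procrastination and inertia.

Over these seven days the course combined Bilibili live streams, after-class assignments, and a competition. I had used Python before, but rarely, and had forgotten some of the basics that never stuck. The first two days let me review the Python fundamentals, and the later lessons built on each other in a tightly connected way. Scraping the Youth With You contestant information and analyzing it put the Python basics to good use and showed me how to crawl the information I want. Matching pictures of the show's top five contestants to their names introduced me to the basic concepts of deep learning and to using PaddleHub. Working with PaddleHub's pretrained classification models, tuning parameters to raise the recognition probability for the contestant pictures, and trying image segmentation, image recognition, and sentiment analysis gave me a more intuitive and deeper understanding of how deep learning is applied in practice.

AI Studio, the one-stop deep learning development platform, is a big help: it provides free compute for people who want to learn and practice deep learning but lack capable hardware. Because time was limited, however, I have not yet fully understood all of the code or built a project of my own. In the days ahead I need to make good use of these resources and keep summarizing, learning, and practicing in order to truly master the technology.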

Technical Takeaways from the Seven-Day Check-In

Day 1 - Python Basics Exercises

Print the 9*9 multiplication table

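def table():
    # Row i lists the products j*i for j = 1..i
    for i in range(1, 10):
        for j in range(1, i + 1):
            print("{}*{}={}\t".format(j, i, i * j), end='')
        # End the row; print("\n") would add an extra blank line
        print()

if __name__ == '__main__':
    table()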

Find files with a specific name

import os

path = "Day1-homework"
# Substring that the target file names must contain
filename = "2020"
# List that collects the matching paths
result = []

def findfiles():
    # Walk the directory tree and keep files whose name contains the substring
    for parent, dirnames, filenames in os.walk(path):
        for filen in filenames:
            if filen.find(filename) != -1:
                f_path = os.path.join(parent, filen)
                result.append(f_path)
    # Print each match with a 1-based index
    for i in range(1, len(result) + 1):
        print("[{},'{}']".format(i, result[i - 1]))

if __name__ == '__main__':
    findfiles()
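As a variation on the search above, the sketch below takes the root directory and the substring as arguments and returns the matches instead of relying on module-level variables. The name find_files and the temporary directory tree are made up for this illustration; they are not part of the course homework.

```python
import os
import tempfile

def find_files(root, keyword):
    """Return the paths under root whose file name contains keyword."""
    matches = []
    for parent, _dirnames, filenames in os.walk(root):
        for name in filenames:
            if keyword in name:
                matches.append(os.path.join(parent, name))
    return sorted(matches)

# Build a small throwaway tree to exercise the function.
with tempfile.TemporaryDirectory() as root:
    os.makedirs(os.path.join(root, "sub"))
    for rel in ("a2020.txt", "b.txt", os.path.join("sub", "2020c.md")):
        open(os.path.join(root, rel), "w").close()
    found = [os.path.relpath(p, root) for p in find_files(root, "2020")]

# Same output format as the homework version: 1-based index plus path.
for index, path in enumerate(found, start=1):
    print("[{},'{}']".format(index, path))
```

Returning a list makes the function easy to reuse and to check, while the printing stays in the calling code.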

Day 2 - Scraping Youth With You 2 Contestant Information

import json

import requests
from bs4 import BeautifulSoup

# today (the date string used in the JSON file name) and down_pic (the image
# download helper) are assumed to be defined elsewhere in the notebook.

def crawl_pic_urls():
    '''
    Scrape each contestant's Baidu Baike pictures and save them
    '''
    with open('work/' + today + '.json', 'r', encoding='UTF-8') as file:
        json_array = json.loads(file.read())

    headers = {
        'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/67.0.3396.99 Safari/537.36'
    }

    for star in json_array:
        name = star['name']
        link = star['link']

        # Collect every picture URL for this contestant in pic_urls
        pic_urls = []
        response = requests.get(link, headers=headers)
        print(response.status_code)

        # The summary picture on the entry page links to the picture list page
        pus = BeautifulSoup(response.text, 'lxml')
        pic_list_url = pus.select('.summary-pic a')[0].get('href')
        pic_list_urls = 'https://baike.baidu.com' + pic_list_url

        # Read the src of every image on the picture list page
        pic_list_response = requests.get(pic_list_urls, headers=headers)
        pus = BeautifulSoup(pic_list_response.text, 'lxml')
        pic_list_html = pus.select('.pic-list img')
        for pic_html in pic_list_html:
            pic_url = pic_html.get('src')
            pic_urls.append(pic_url)

        # Download all pictures into a folder named after the contestant
        down_pic(name, pic_urls)

Day 3 - Analyzing Youth With You 2 Contestant Data

%matplotlib inline
import json

import matplotlib.pyplot as plt
import pandas as pd

with open('data/data31557/20200422.json', 'r', encoding='UTF-8') as file:
    json_array = json.loads(file.read())

# Pie chart of the contestants' weight distribution
weights = []
for star in json_array:
    weight = star['weight']
    weights.append(weight)
print(len(weights))

# Strip the 'kg' suffix and convert each weight to a number
weight_list = []
for weight in weights:
    if 'kg' in weight:
        weight = weight.replace('kg', '')
    weight_list.append(float(weight))

df = pd.read_json('data/data31557/20200422.json')
listBins = [0, 45, 50, 55, 60]
listLabels = ['<45kg', '45~50kg', '50~55kg', '>55kg']
df['group'] = pd.cut(weight_list, listBins, labels=listLabels, include_lowest=True)

# Count contestants per group; taking the labels from the same index keeps
# every label aligned with its count
count_list = df.groupby('group')['name'].count()
label_list = list(count_list.index)
print(label_list)

plt.pie(count_list, labels=label_list, autopct='%1.1f%%')
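To make the binning step easier to follow, here is a plain-Python sketch that imitates how pd.cut assigns values with its default right-closed intervals and include_lowest=True. It is an illustration only, not the pandas implementation; the helper weight_group and the sample weights are made up for this example.

```python
from bisect import bisect_left
from collections import Counter

BINS = [0, 45, 50, 55, 60]
LABELS = ['<45kg', '45~50kg', '50~55kg', '>55kg']

def weight_group(weight):
    """Imitate pd.cut(..., right=True, include_lowest=True) for one value."""
    if weight < BINS[0] or weight > BINS[-1]:
        return None  # pd.cut would produce NaN for such a value
    # bisect_left finds the first edge >= weight, so an edge value lands in
    # the interval it closes, e.g. 45 -> (0, 45].
    index = max(bisect_left(BINS, weight) - 1, 0)
    return LABELS[index]

sample = ['44kg', '45kg', '48kg', '50.5kg', '58kg', '62kg']  # made-up values
numbers = [float(w.replace('kg', '')) for w in sample]
groups = [weight_group(n) for n in numbers]
counts = Counter(g for g in groups if g is not None)

print(groups)
print(counts)
```

Two things stand out in this sketch: a weight of exactly 45 kg lands in the group labelled '<45kg' because the intervals are closed on the right, and a weight above 60 kg falls outside the last bin, so it would not appear in the pie chart.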

Day 4 - Recognizing Youth With You 2 Contestants

- Rename all files in a directory

import os

path = "/home/aistudio/dataset/data"

for parent, dirnames, filenames in os.walk(path):
    i = 1  # numbering restarts in every directory
    for filename in filenames:
        new_path = os.path.join(parent, filename)
        new_path_list = new_path.split('/')
        # New name = name of the containing folder + running index
        new_name = new_path_list[-2] + str(i) + '.jpg'
        i = i + 1
        os.rename(os.path.join(parent, filename), os.path.join(parent, new_name))

print("Renamed successfully!")

- Write each file path (including the file name) and its class label to train_list.txt

# Folder name -> class label
labels = {'yushuxin': '0', 'xujiaqi': '1', 'zhaoxiaotang': '2', 'anqi': '3', 'wangchengxuan': '4'}

with open(r'/home/aistudio/dataset/train_list.txt', 'w+', encoding='utf-8') as f:
    for parent, dirnames, filenames in os.walk(path):
        for filename in filenames:
            file_path = os.path.join(parent, filename)
            folder = file_path.split('/')[-2]
            # Files in folders that are not listed get no entry
            if folder in labels:
                f.write(file_path + ' ' + labels[folder] + '\n')

print('File written successfully!')

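Day 5 - Final Project

- What requests.Session() does:

Once a Session has been used to log in to a site, later requests made through the same session object to other pages of that site reuse the cookies and other parameters the session already holds. This is similar to the following use of the urllib library:

import http.cookiejar
import urllib.request

cookie = http.cookiejar.CookieJar()
handler = urllib.request.HTTPCookieProcessor(cookie)
opener = urllib.request.build_opener(handler)

The opener keeps the cookie, so whichever page of the site is visited afterwards, the same cookie is sent automatically. It is the cookie received when you first logged in to the site.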
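The cookie behavior described above can be observed locally with a small sketch that uses only the standard library. The demo server, its /login and /profile paths, and the sessionid value are all hypothetical stand-ins for a real site; only the CookieJar, HTTPCookieProcessor, and build_opener calls come from the notes.

```python
import http.cookiejar
import threading
import urllib.request
from http.server import BaseHTTPRequestHandler, HTTPServer

class DemoHandler(BaseHTTPRequestHandler):
    """Hypothetical site: /login sets a cookie, every path echoes the one it got."""

    def do_GET(self):
        self.send_response(200)
        if self.path == "/login":
            self.send_header("Set-Cookie", "sessionid=abc123; Path=/")
        body = (self.headers.get("Cookie") or "no cookie").encode("utf-8")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)

    def log_message(self, *args):
        pass  # keep the demo output quiet

server = HTTPServer(("127.0.0.1", 0), DemoHandler)  # port 0: pick a free port
threading.Thread(target=server.serve_forever, daemon=True).start()
base = "http://127.0.0.1:{}".format(server.server_port)

cookie = http.cookiejar.CookieJar()
handler = urllib.request.HTTPCookieProcessor(cookie)
# The empty ProxyHandler only bypasses any system proxy for the local server.
opener = urllib.request.build_opener(handler, urllib.request.ProxyHandler({}))

first = opener.open(base + "/login").read().decode("utf-8")
second = opener.open(base + "/profile").read().decode("utf-8")
server.shutdown()
server.server_close()

print(first)   # the first request carries no cookie yet
print(second)  # the cookie stored by the opener is sent automatically
```

A requests.Session object plays the same role as the opener here: it stores the cookies it receives and attaches them to later requests to the same site.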

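- Using the jieba library:

jieba is an excellent third-party Chinese word segmentation library. It supports three segmentation modes: accurate mode, full mode, and search engine mode.

– Accurate mode: tries to cut the sentence as precisely as possible, with no redundant output; suited to text analysis.

– Full mode: cuts out every string in the sentence that could be a word; very fast, but the output contains redundant data.

– Search engine mode: builds on accurate mode and cuts long words again.

Example:

import jieba

seg_str = "好好学习,天天向上。"
print("/".join(jieba.lcut(seg_str)))                # accurate mode
print("/".join(jieba.lcut(seg_str, cut_all=True)))  # full mode
print("/".join(jieba.lcut_for_search(seg_str)))     # search engine mode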

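- The get and items methods of dict:

dict.get(key, default=None)

Returns the value for the given key; if the key is not in the dictionary, returns the default value.

dict.items()

Returns an iterable view of the dictionary's (key, value) pairs; wrap it in list() to get a sortable list of tuples.

items = list(d.items())  # d is the dictionary to sort
items.sort(key=lambda x: x[1], reverse=True)

The lambda has the form lambda name: name[index]. Here x[1] is the second element of each (key, value) tuple, so the items are sorted by value, and reverse=True puts the largest value first.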
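Putting get and items together, a word-frequency count could look like the sketch below. The token list is made up for illustration; in the final project the tokens would presumably come from jieba.

```python
words = ["paddle", "hub", "paddle", "ai", "hub", "paddle"]  # made-up tokens

counts = {}
for word in words:
    # get() returns 0 for a word not seen yet, so no KeyError is raised
    counts[word] = counts.get(word, 0) + 1

items = list(counts.items())                  # list of (key, value) tuples
items.sort(key=lambda x: x[1], reverse=True)  # sort by value, largest first

for word, count in items:
    print(word, count)
```

Using get with a default of 0 avoids a separate check for whether the word is already in the dictionary.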