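E-commerce Metrics Project: Template Method

1. Extracting a Common Class with the Template Method

Template Method

The template method pattern defines the skeleton of an algorithm in a parent class and defers the implementation of individual steps to subclasses, so subclasses can redefine certain steps without changing the algorithm's overall structure.

We have already written the analysis code for three business tasks. Each one follows the same five-part structure, so the code is highly repetitive. We can improve it by extracting a template class and having every task class execute its steps in the order the template defines.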

BaseTask.scala

package com.itheima.realprocess.task

import com.itheima.realprocess.bean.ClickLogWide

import org.apache.flink.streaming.api.scala._

import org.apache.flink.streaming.api.windowing.windows.TimeWindow

// Extract a common trait that every task implements

trait BaseTask[T] {

/**

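* Runs the pipeline on the raw log stream: map transformation, keying, time window, aggregation, sink to HBase

* @param clickLogWideDataStream the preprocessed (widened) click-log stream

* @return the value of the final sink step (typed as Any)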

*/

def process(clickLogWideDataStream: DataStream[ClickLogWide]):Any={

val mapDataStream:DataStream[T] = map(clickLogWideDataStream)

val keyedStream:KeyedStream[T, String] = keyBy(mapDataStream)

val windowedStream: WindowedStream[T, String, TimeWindow] = timeWindow(keyedStream)

val reduceDataStream: DataStream[T] = reduce(windowedStream)

sink2HBase(reduceDataStream)

}

// Map transformation of the stream

def map(source:DataStream[ClickLogWide]):DataStream[T]

// Keying (grouping)

def keyBy(mapDataStream: DataStream[T]):KeyedStream[T,String]

// Time window

def timeWindow(keyedStream: KeyedStream[T, String]):WindowedStream[T, String, TimeWindow]

// Aggregation

def reduce(windowedStream: WindowedStream[T, String, TimeWindow]): DataStream[T]

// Sink to HBase (explicit Unit result type instead of the deprecated procedure syntax)

def sink2HBase(reduceDataStream: DataStream[T]): Unit

}
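To see the pattern in isolation, here is a minimal Flink-free sketch. It is only an illustration under stated assumptions: `BatchTask`, `WordCount` and `WordCountTask` are hypothetical names that do not exist in the project, a plain `List` stands in for `DataStream`, and `groupBy` followed by `reduce` stands in for the keyBy, time window and reduce steps.

```scala
// Flink-free sketch of the template method pattern (all names are illustrative).
trait BatchTask[T] {
  // The template method: it fixes the order of the steps.
  // It is final so that concrete tasks cannot reorder or skip steps.
  final def process(source: List[String]): Map[String, T] = {
    val mapped  = map(source)
    val grouped = mapped.groupBy(keyOf)
    grouped.map { case (key, values) => key -> values.reduce((a, b) => merge(a, b)) }
  }

  // The steps each concrete task must supply.
  def map(source: List[String]): List[T]
  def keyOf(item: T): String
  def merge(a: T, b: T): T
}

case class WordCount(word: String, count: Long)

// A concrete task only fills in the steps; it never touches the skeleton.
object WordCountTask extends BatchTask[WordCount] {
  override def map(source: List[String]): List[WordCount] =
    source.flatMap(_.split(" ").toList).filter(_.nonEmpty).map(w => WordCount(w, 1L))

  override def keyOf(item: WordCount): String = item.word

  override def merge(a: WordCount, b: WordCount): WordCount =
    WordCount(a.word, a.count + b.count)
}
```

Calling `WordCountTask.process(List("a b a", "b c"))` runs the three steps in the order fixed by the trait. In the real project, a job would presumably call `process` on each task object with the preprocessed stream in the same way.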

The refactored code:

// Add a `ChannelFreshness` case class that wraps the four fields to be computed: channel ID (channelID), date (date), new users (newCount), returning users (oldCount)

case class ChannelFreshness(var channelID: String,

var date: String,

var newCount: Long,

var oldCount: Long)

object ChannelFreshnessTask extends BaseTask[ChannelFreshness] {

// 1. Use the flatMap operator to convert each `ClickLogWide` object into `ChannelFreshness` records

override def map(source: DataStream[ClickLogWide]): DataStream[ChannelFreshness] = {

source.flatMap {

clicklog =>

val isOld = (isNew: Int, isDateNew: Int) => if (isNew == 0 && isDateNew == 1) 1 else 0

List(

ChannelFreshness(clicklog.channelID, clicklog.yearMonthDayHour, clicklog.isNew, isOld(clicklog.isNew, clicklog.isHourNew)),

ChannelFreshness(clicklog.channelID, clicklog.yearMonthDay, clicklog.isNew, isOld(clicklog.isNew, clicklog.isDayNew)),

ChannelFreshness(clicklog.channelID, clicklog.yearMonth, clicklog.isNew, isOld(clicklog.isNew, clicklog.isMonthNew))

)

}

}

override def keyBy(mapDataStream: DataStream[ChannelFreshness]): KeyedStream[ChannelFreshness, String] = {

mapDataStream.keyBy {

freshness =>

freshness.channelID + freshness.date

}

}

override def timeWindow(keyedStream: KeyedStream[ChannelFreshness, String]): WindowedStream[ChannelFreshness, String, TimeWindow] = {

keyedStream.timeWindow(Time.seconds(3))

}

override def reduce(windowedStream: WindowedStream[ChannelFreshness, String, TimeWindow]): DataStream[ChannelFreshness] = {

windowedStream.reduce {

(freshness1, freshness2) =>

ChannelFreshness(freshness2.channelID, freshness2.date, freshness1.newCount + freshness2.newCount, freshness1.oldCount + freshness2.oldCount)

}

}

override def sink2HBase(reduceDataStream: DataStream[ChannelFreshness]): Unit = {

reduceDataStream.addSink {

value => {

val tableName = "channel_freshness"

val cfName = "info"

// Channel ID (channelID), date (date), new users (newCount), returning users (oldCount)

val channelIdColName = "channelID"

val dateColName = "date"

val newCountColName = "newCount"

val oldCountColName = "oldCount"

val rowkey = value.channelID + ":" + value.date

// Check whether HBase already holds a result record

val newCountInHBase = HBaseUtil.getData(tableName, rowkey, cfName, newCountColName)

val oldCountInHBase = HBaseUtil.getData(tableName, rowkey, cfName, oldCountColName)

var totalNewCount = 0L

var totalOldCount = 0L

// Check whether HBase holds historical metric data

if (StringUtils.isNotBlank(newCountInHBase)) {

totalNewCount = newCountInHBase.toLong + value.newCount

}

else {

totalNewCount = value.newCount

}

if (StringUtils.isNotBlank(oldCountInHBase)) {

totalOldCount = oldCountInHBase.toLong + value.oldCount

}

else {

totalOldCount = value.oldCount

}

// Write the merged, accumulated data to HBase

HBaseUtil.putMapData(tableName, rowkey, cfName, Map(

channelIdColName -> value.channelID,

dateColName -> value.date,

newCountColName -> totalNewCount,

oldCountColName -> totalOldCount

))

}

}

}

}
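The read, accumulate and write-back logic in `sink2HBase` can be sketched without HBase. This is a minimal sketch under assumptions: a mutable in-memory map stands in for the `channel_freshness` table, and `FreshnessSinkSketch`, `store`, `accumulate` and `sink` are hypothetical names, not part of `HBaseUtil`.

```scala
import scala.collection.mutable

object FreshnessSinkSketch {
  // In-memory stand-in for one HBase column family: rowkey -> (column -> value as String).
  val store: mutable.Map[String, Map[String, String]] = mutable.Map.empty

  // Adds `delta` to the stored counter, treating a missing or blank cell as 0.
  def accumulate(stored: Option[String], delta: Long): Long =
    stored.map(_.trim).filter(_.nonEmpty).map(_.toLong).getOrElse(0L) + delta

  // Mirrors the sink step: read the existing row, add the window's counts, write back.
  def sink(channelID: String, date: String, newCount: Long, oldCount: Long): Unit = {
    val rowkey = channelID + ":" + date
    val row    = store.getOrElse(rowkey, Map.empty[String, String])
    store(rowkey) = Map(
      "channelID" -> channelID,
      "date"      -> date,
      "newCount"  -> accumulate(row.get("newCount"), newCount).toString,
      "oldCount"  -> accumulate(row.get("oldCount"), oldCount).toString
    )
  }
}
```

Because the counters are read and written in two separate steps, this style of update is not atomic. That is acceptable for a teaching example, but concurrent writers to the same rowkey could lose updates.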

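2. Real-Time Channel Region Analysis

2.1. Business Overview

Region analysis shows PV/UV and user freshness broken down by region.

Metrics to compute

- PV

- UV

- New users

- Returning users

Dimensions to analyze

- Region (country, province, city). To save time, only the city-level dimension is analyzed here; the other levels are left as an exercise.

- Time (hour, day, month)

The results of the analysis look like this: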

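| Channel ID | Region (country/province/city) | Time | PV | UV | New users | Returning users |
|---|---|---|---|---|---|---|
| Channel 1 | Chaoyang District, Beijing, China | 201809 | 1000 | 300 | 123 | 171 |
| Channel 1 | Chaoyang District, Beijing, China | 20180910 | 512 | 123 | 23 | 100 |
| Channel 1 | Chaoyang District, Beijing, China | 2018091010 | 100 | 41 | 11 | 30 |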

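2.2. Development

Steps

- Create the channel-region case class (channel, region (country, province, city), time, PV, UV, new users, returning users)

- Use flatMap to convert the preprocessed data into the case class

- Key (partition) the stream by channel, time and region

- Define the time window (one window every 3 seconds)

- Merge and count

- Print for testing

- Sink the computed data to HBase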

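Implementation

- Create a ChannelAreaTask singleton object

- Add a ChannelArea case class that wraps the seven fields to be computed: channel ID (channelID), region (area), date (date), pv, uv, new users (newCount), returning users (oldCount)

- In ChannelAreaTask, write a process method that receives the preprocessed DataStream

- Use the flatMap operator to convert each ClickLogWide object into three ChannelArea records, one per time dimension

- Key the stream by channel ID, time and region

- Define the time window (one window every 3 seconds)

- Run reduce to merge the records

- Print for testing

- Sink the merged data to HBase

  - Prepare the HBase table name, column family name, rowkey and column names

  - Check whether HBase already holds a result record

  - If it does, read it and add the new values

  - If it does not, write the new values directly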
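The first few implementation steps can be sketched with plain Scala collections. This is an illustration under assumptions, not the project's ChannelAreaTask: `Click` is a hypothetical, trimmed-down stand-in for `ClickLogWide` (its `city` field name is assumed), the key uses `:` as a separator, and `groupBy` plus `reduce` replaces Flink's keyed 3-second window.

```scala
object ChannelAreaSketch {
  // Fields follow the list above; `area` is assumed to hold the city-level region.
  case class ChannelArea(channelID: String, area: String, date: String,
                         pv: Long, uv: Long, newCount: Long, oldCount: Long)

  // Hypothetical subset of the preprocessed click record.
  case class Click(channelID: String, city: String,
                   yearMonth: String, yearMonthDay: String, yearMonthDayHour: String,
                   count: Long, isNew: Int, isHourNew: Int, isDayNew: Int, isMonthNew: Int)

  // Same rule as the other tasks: a known user who is new within the period counts as returning.
  private def isOld(isNew: Int, isDateNew: Int): Long =
    if (isNew == 0 && isDateNew == 1) 1L else 0L

  // One record per time dimension: hour, day, month.
  def expand(c: Click): List[ChannelArea] = List(
    ChannelArea(c.channelID, c.city, c.yearMonthDayHour, c.count, c.isHourNew.toLong, c.isNew.toLong, isOld(c.isNew, c.isHourNew)),
    ChannelArea(c.channelID, c.city, c.yearMonthDay, c.count, c.isDayNew.toLong, c.isNew.toLong, isOld(c.isNew, c.isDayNew)),
    ChannelArea(c.channelID, c.city, c.yearMonth, c.count, c.isMonthNew.toLong, c.isNew.toLong, isOld(c.isNew, c.isMonthNew))
  )

  // Grouping key: channel ID, time and region.
  def key(a: ChannelArea): String = s"${a.channelID}:${a.date}:${a.area}"

  // Merge two records of the same key by summing the counters.
  def merge(a: ChannelArea, b: ChannelArea): ChannelArea =
    ChannelArea(b.channelID, b.area, b.date,
      a.pv + b.pv, a.uv + b.uv, a.newCount + b.newCount, a.oldCount + b.oldCount)

  def aggregate(clicks: List[Click]): Map[String, ChannelArea] =
    clicks.flatMap(expand).groupBy(key).map { case (k, vs) => k -> vs.reduce(merge) }
}
```

In a Flink version these functions would map onto the `map`, `keyBy` and `reduce` overrides of `BaseTask[ChannelArea]`, and the sink step would follow the same read-and-accumulate logic as the other tasks.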

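Reference code

ChannelFreshnessTask.scala (the complete file, with imports, for the refactored task from section 1; ChannelAreaTask follows the same structure with the additional area, pv and uv fields)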

package com.itheima.realprocess.task

import com.itheima.realprocess.bean.ClickLogWide

import com.itheima.realprocess.util.HBaseUtil

import org.apache.commons.lang.StringUtils

import org.apache.flink.streaming.api.scala.{DataStream, KeyedStream, WindowedStream}

import org.apache.flink.api.scala._

import org.apache.flink.streaming.api.functions.sink.SinkFunction

import org.apache.flink.streaming.api.windowing.time.Time

import org.apache.flink.streaming.api.windowing.windows.TimeWindow

// Add a `ChannelFreshness` case class that wraps the four fields to be computed: channel ID (channelID), date (date), new users (newCount), returning users (oldCount)

case class ChannelFreshness(var channelID: String,

var date: String,

var newCount: Long,

var oldCount: Long)

object ChannelFreshnessTask extends BaseTask[ChannelFreshness] {

// 1. Use the flatMap operator to convert each `ClickLogWide` object into `ChannelFreshness` records

override def map(source: DataStream[ClickLogWide]): DataStream[ChannelFreshness] = {

source.flatMap {

clicklog =>

val isOld = (isNew: Int, isDateNew: Int) => if (isNew == 0 && isDateNew == 1) 1 else 0

List(

ChannelFreshness(clicklog.channelID, clicklog.yearMonthDayHour, clicklog.isNew, isOld(clicklog.isNew, clicklog.isHourNew)),

ChannelFreshness(clicklog.channelID, clicklog.yearMonthDay, clicklog.isNew, isOld(clicklog.isNew, clicklog.isDayNew)),

ChannelFreshness(clicklog.channelID, clicklog.yearMonth, clicklog.isNew, isOld(clicklog.isNew, clicklog.isMonthNew))

)

}

}

override def keyBy(mapDataStream: DataStream[ChannelFreshness]): KeyedStream[ChannelFreshness, String] = {

mapDataStream.keyBy {

freshness =>

freshness.channelID + freshness.date

}

}

override def timeWindow(keyedStream: KeyedStream[ChannelFreshness, String]): WindowedStream[ChannelFreshness, String, TimeWindow] = {

keyedStream.timeWindow(Time.seconds(3))

}

override def reduce(windowedStream: WindowedStream[ChannelFreshness, String, TimeWindow]): DataStream[ChannelFreshness] = {

windowedStream.reduce {

(freshness1, freshness2) =>

ChannelFreshness(freshness2.channelID, freshness2.date, freshness1.newCount + freshness2.newCount, freshness1.oldCount + freshness2.oldCount)

}

}

override def sink2HBase(reduceDataStream: DataStream[ChannelFreshness]): Unit = {

reduceDataStream.addSink {

value => {

val tableName = "channel_freshness"

val cfName = "info"

// Channel ID (channelID), date (date), new users (newCount), returning users (oldCount)

val channelIdColName = "channelID"

val dateColName = "date"

val newCountColName = "newCount"

val oldCountColName = "oldCount"

val rowkey = value.channelID + ":" + value.date

// Check whether HBase already holds a result record

val newCountInHBase = HBaseUtil.getData(tableName, rowkey, cfName, newCountColName)

val oldCountInHBase = HBaseUtil.getData(tableName, rowkey, cfName, oldCountColName)

var totalNewCount = 0L

var totalOldCount = 0L

// Check whether HBase holds historical metric data

if (StringUtils.isNotBlank(newCountInHBase)) {

totalNewCount = newCountInHBase.toLong + value.newCount

}

else {

totalNewCount = value.newCount

}

if (StringUtils.isNotBlank(oldCountInHBase)) {

totalOldCount = oldCountInHBase.toLong + value.oldCount

}

else {

totalOldCount = value.oldCount

}

// Write the merged, accumulated data to HBase

HBaseUtil.putMapData(tableName, rowkey, cfName, Map(

channelIdColName -> value.channelID,

dateColName -> value.date,

newCountColName -> totalNewCount,

oldCountColName -> totalOldCount

))

}

}

}

}

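3. Real-Time Carrier Analysis

3.1. Business Overview

Compute metrics per carrier, such as China Mobile, China Unicom and China Telecom. Knowing which carrier most of the traffic comes from makes it possible to target online promotion more accurately.

Metrics to compute

- PV

- UV

- New users

- Returning users

Dimensions to analyze

- Carrier

- Time (hour, day, month)

The results of the analysis look like this: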

| Channel ID | Carrier | Time | PV | UV | New users | Returning users |
|---|---|---|---|---|---|---|
| Channel 1 | China Unicom | 201809 | 1000 | 300 | 0 | 300 |
| Channel 1 | China Unicom | 20180910 | 123 | 1 | 0 | 1 |
| Channel 1 | China Telecom | 2018091010 | 55 | 2 | 2 | 0 |

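3.2. Development

Steps

- Convert the preprocessed data into the case class for the analysis (channel, carrier, time, PV, UV, new users, returning users)

- Key (partition) the stream by channel, time and carrier

- Define the time window (one window every 3 seconds)

- Merge and count

- Print for testing

- Sink the computed data to HBase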

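Implementation

- Create a ChannelNetworkTask singleton object

- Add a ChannelNetwork case class that wraps the seven fields to be computed: channel ID (channelID), carrier (network), date (date), pv, uv, new users (newCount), returning users (oldCount)

- In ChannelNetworkTask, write a process method that receives the preprocessed DataStream

- Use the flatMap operator to convert each ClickLogWide object into three ChannelNetwork records, one per time dimension

- Key the stream by channel ID, time and carrier

- Define the time window (one window every 3 seconds)

- Run reduce to merge the records

- Print for testing

- Sink the merged data to HBase

  - Prepare the HBase table name, column family name, rowkey and column names

  - Check whether HBase already holds a result record

  - If it does, read it and add the new values

  - If it does not, write the new values directly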

Reference code

package com.itheima.realprocess.task

import com.itheima.realprocess.bean.ClickLogWide

import com.itheima.realprocess.util.HBaseUtil

import org.apache.commons.lang.StringUtils

import org.apache.flink.streaming.api.scala.{DataStream, KeyedStream, WindowedStream}

import org.apache.flink.streaming.api.windowing.windows.TimeWindow

import org.apache.flink.api.scala._

import org.apache.flink.streaming.api.functions.sink.SinkFunction

import org.apache.flink.streaming.api.windowing.time.Time

// 2. Add a `ChannelNetwork` case class that wraps the seven fields to be computed: channel ID (channelID), carrier (network), date (date), pv, uv, new users (newCount), returning users (oldCount)

case class ChannelNetwork(var channelID: String,

var network: String,

var date: String,

var pv: Long,

var uv: Long,

var newCount: Long,

var oldCount: Long)

object ChannelNetworkTask extends BaseTask[ChannelNetwork] {

override def map(source: DataStream[ClickLogWide]): DataStream[ChannelNetwork] = {

source.flatMap {

clicklog =>

val isOld = (isNew: Int, isDateNew: Int) => if (isNew == 0 && isDateNew == 1) 1 else 0

List(

ChannelNetwork(clicklog.channelID,

clicklog.network,

clicklog.yearMonthDayHour,

clicklog.count,

clicklog.isHourNew,

clicklog.isNew,

isOld(clicklog.isNew, clicklog.isHourNew)), // hour dimension

ChannelNetwork(clicklog.channelID,

clicklog.network,

clicklog.yearMonthDay,

clicklog.count,

clicklog.isDayNew,

clicklog.isNew,

isOld(clicklog.isNew, clicklog.isDayNew)), // day dimension

ChannelNetwork(clicklog.channelID,

clicklog.network,

clicklog.yearMonth,

clicklog.count,

clicklog.isMonthNew,

clicklog.isNew,

isOld(clicklog.isNew, clicklog.isMonthNew)) // month dimension

)

}

}

override def keyBy(mapDataStream: DataStream[ChannelNetwork]): KeyedStream[ChannelNetwork, String] = {

mapDataStream.keyBy {

network =>

network.channelID + network.date + network.network

}

}

override def timeWindow(keyedStream: KeyedStream[ChannelNetwork, String]): WindowedStream[ChannelNetwork, String, TimeWindow] = {

keyedStream.timeWindow(Time.seconds(3))

}

override def reduce(windowedStream: WindowedStream[ChannelNetwork, String, TimeWindow]): DataStream[ChannelNetwork] = {

windowedStream.reduce {

(network1, network2) =>

ChannelNetwork(network2.channelID,

network2.network,

network2.date,

network1.pv + network2.pv,

network1.uv + network2.uv,

network1.newCount + network2.newCount,

network1.oldCount + network2.oldCount)

}

}

override def sink2HBase(reduceDataStream: DataStream[ChannelNetwork]): Unit = {

reduceDataStream.addSink(new SinkFunction[ChannelNetwork] {

override def invoke(value: ChannelNetwork): Unit = {

// Prepare the HBase table name, column family name, rowkey and column names

val tableName = "channel_network"

val cfName = "info"

// Channel ID (channelID), carrier (network), date (date), pv, uv, new users (newCount), returning users (oldCount)

val rowkey = s"${value.channelID}:${value.date}:${value.network}"

val channelIdColName = "channelID"

val networkColName = "network"

val dateColName = "date"

val pvColName = "pv"

val uvColName = "uv"

val newCountColName = "newCount"

val oldCountColName = "oldCount"

// Check whether HBase already holds a result record

val resultMap: Map[String, String] = HBaseUtil.getMapData(tableName, rowkey, cfName, List(

pvColName,

uvColName,

newCountColName,

oldCountColName

))

var totalPv = 0L

var totalUv = 0L

var totalNewCount = 0L

var totalOldCount = 0L

if(resultMap != null && resultMap.size > 0 && StringUtils.isNotBlank(resultMap(pvColName))) {

totalPv = resultMap(pvColName).toLong + value.pv

}

else {

totalPv = value.pv

}

if(resultMap != null && resultMap.size > 0 && StringUtils.isNotBlank(resultMap(uvColName))) {

totalUv = resultMap(uvColName).toLong + value.uv

}

else {

totalUv = value.uv

}

if(resultMap != null && resultMap.size > 0 && StringUtils.isNotBlank(resultMap(newCountColName))) {

totalNewCount = resultMap(newCountColName).toLong + value.newCount

}

else {

totalNewCount = value.newCount

}

if(resultMap != null && resultMap.size > 0 && StringUtils.isNotBlank(resultMap(oldCountColName))) {

totalOldCount = resultMap(oldCountColName).toLong + value.oldCount

}

else {

totalOldCount = value.oldCount

}

// Channel ID (channelID), carrier (network), date (date), pv, uv, new users (newCount), returning users (oldCount)

HBaseUtil.putMapData(tableName, rowkey, cfName, Map(

channelIdColName -> value.channelID,

networkColName -> value.network,

dateColName -> value.date,

pvColName -> totalPv.toString,

uvColName -> totalUv.toString,

newCountColName -> totalNewCount.toString,

oldCountColName -> totalOldCount.toString

))

}

})

}

}
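The four near-identical if/else blocks in the sink could be collapsed into one helper. The sketch below is one possible shape, not the course's code: `CounterMerge`, `total` and `mergeAll` are hypothetical names. Unlike the original, it treats a non-numeric stored value as 0 instead of throwing.

```scala
import scala.util.Try

object CounterMerge {
  // Returns stored + delta, treating a null map, a missing column,
  // or a blank / non-numeric cell as 0.
  def total(stored: Map[String, String], column: String, delta: Long): Long = {
    val previous = Option(stored)
      .flatMap(_.get(column))
      .flatMap(s => Try(s.trim.toLong).toOption)
      .getOrElse(0L)
    previous + delta
  }

  // Applies `total` to every counter column and renders the results as strings,
  // matching the String values written to HBase in the listing above.
  def mergeAll(stored: Map[String, String], deltas: Map[String, Long]): Map[String, String] =
    deltas.map { case (column, delta) => column -> total(stored, column, delta).toString }
}
```

With such a helper, the sink body would reduce to one `getMapData` call, one `mergeAll` call over the pv, uv, newCount and oldCount columns, and one `putMapData` call.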

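4. Real-Time Channel Browser Analysis

4.1. Business Overview

We need to compute the share of each browser (or client).

Metrics to compute

- PV

- UV

- New users

- Returning users

Dimensions to analyze

- Browser

- Time (hour, day, month)

The results of the analysis look like this: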

| Channel ID | Browser | Time | PV | UV | New users | Returning users |
|---|---|---|---|---|---|---|
| Channel 1 | 360 Browser | 201809 | 1000 | 300 | 0 | 300 |
| Channel 1 | IE | 20180910 | 123 | 1 | 0 | 1 |
| Channel 1 | Chrome | 2018091010 | 55 | 2 | 2 | 0 |
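Once per-browser counts exist for the same channel and time bucket, each browser's share is its count divided by the total. The sketch below assumes that reading of "share"; `BrowserShare` and `share` are hypothetical names, and the input map is illustrative rather than taken from the table.

```scala
object BrowserShare {
  // pvByBrowser: PV per browser for one channel and one time bucket.
  // Returns each browser's fraction of the total PV (0.0 for all if the total is 0).
  def share(pvByBrowser: Map[String, Long]): Map[String, Double] = {
    val total = pvByBrowser.values.sum
    if (total == 0L) pvByBrowser.map { case (browser, _) => browser -> 0.0 }
    else pvByBrowser.map { case (browser, pv) => browser -> pv.toDouble / total }
  }
}
```

For example, `BrowserShare.share(Map("Chrome" -> 75L, "IE" -> 25L))` yields 0.75 for Chrome and 0.25 for IE.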

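4.2. Development

Steps

- Create the channel-browser case class (channel, browser, time, PV, UV, new users, returning users)

- Use flatMap to convert the preprocessed data into the case class

- Key (partition) the stream by channel, time and browser

- Define the time window (one window every 3 seconds)

- Merge and count

- Print for testing

- Sink the computed data to HBase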

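Implementation

- Create a ChannelBrowserTask singleton object

- Add a ChannelBrowser case class that wraps the seven fields to be computed: channel ID (channelID), browser (browser), date (date), pv, uv, new users (newCount), returning users (oldCount)

- In ChannelBrowserTask, write a process method that receives the preprocessed DataStream

- Use the flatMap operator to convert each ClickLogWide object into three ChannelBrowser records, one per time dimension

- Key the stream by channel ID, time and browser

- Define the time window (one window every 3 seconds)

- Run reduce to merge the records

- Print for testing

- Sink the merged data to HBase

  - Prepare the HBase table name, column family name, rowkey and column names

  - Check whether HBase already holds a result record

  - If it does, read it and add the new values

  - If it does not, write the new values directly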

Reference code

package com.itheima.realprocess.task

import com.itheima.realprocess.bean.ClickLogWide

import com.itheima.realprocess.util.HBaseUtil

import org.apache.commons.lang.StringUtils

import org.apache.flink.streaming.api.scala.{DataStream, KeyedStream, WindowedStream}

import org.apache.flink.streaming.api.windowing.windows.TimeWindow

import org.apache.flink.api.scala._

import org.apache.flink.streaming.api.functions.sink.SinkFunction

import org.apache.flink.streaming.api.windowing.time.Time

// 2. Add a `ChannelBrowser` case class that wraps the seven fields to be computed: channel ID (channelID), browser (browser), date (date), pv, uv, new users (newCount), returning users (oldCount)

case class ChannelBrowser(var channelID: String,

var browser: String,

var date: String,

var pv: Long,

var uv: Long,

var newCount: Long,

var oldCount: Long)

object ChannelBrowserTask extends BaseTask[ChannelBrowser] {

override def map(source: DataStream[ClickLogWide]): DataStream[ChannelBrowser] = {

source.flatMap {

clicklog =>

val isOld = (isNew: Int, isDateNew: Int) => if (isNew == 0 && isDateNew == 1) 1 else 0

List(

ChannelBrowser(clicklog.channelID,

clicklog.browserType,

clicklog.yearMonthDayHour,

clicklog.count,

clicklog.isHourNew,

clicklog.isNew,

isOld(clicklog.isNew, clicklog.isHourNew)), // hour dimension

ChannelBrowser(clicklog.channelID,

clicklog.browserType,

clicklog.yearMonthDay,

clicklog.count,

clicklog.isDayNew,

clicklog.isNew,

isOld(clicklog.isNew, clicklog.isDayNew)), // day dimension

ChannelBrowser(clicklog.channelID,

clicklog.browserType,

clicklog.yearMonth,

clicklog.count,

clicklog.isMonthNew,

clicklog.isNew,

isOld(clicklog.isNew, clicklog.isMonthNew)) // month dimension

)

}

}

override def keyBy(mapDataStream: DataStream[ChannelBrowser]): KeyedStream[ChannelBrowser, String] = {

mapDataStream.keyBy {

browser =>

browser.channelID + browser.date + browser.browser

}

}

override def timeWindow(keyedStream: KeyedStream[ChannelBrowser, String]): WindowedStream[ChannelBrowser, String, TimeWindow] = {

keyedStream.timeWindow(Time.seconds(3))

}

override def reduce(windowedStream: WindowedStream[ChannelBrowser, String, TimeWindow]): DataStream[ChannelBrowser] = {

windowedStream.reduce {

(browser1, browser2) =>

ChannelBrowser(browser2.channelID,

browser2.browser,

browser2.date,

browser1.pv + browser2.pv,

browser1.uv + browser2.uv,

browser1.newCount + browser2.newCount,

browser1.oldCount + browser2.oldCount)

}

}

override def sink2HBase(reduceDataStream: DataStream[ChannelBrowser]): Unit = {

reduceDataStream.addSink(new SinkFunction[ChannelBrowser] {

override def invoke(value: ChannelBrowser): Unit = {

// Prepare the HBase table name, column family name, rowkey and column names

val tableName = "channel_broswer"

val cfName = "info"

// Channel ID (channelID), browser (browser), date (date), pv, uv, new users (newCount), returning users (oldCount)

val rowkey = s"${value.channelID}:${value.date}:${value.browser}"

val channelIDColName = "channelID"

val broswerColName = "browser"

val dateColName = "date"

val pvColName = "pv"

val uvColName = "uv"

val newCountColName = "newCount"

val oldCountColName = "oldCount"

var totalPv = 0L

var totalUv = 0L

var totalNewCount = 0L

var totalOldCount = 0L

val resultMap: Map[String, String] = HBaseUtil.getMapData(tableName, rowkey, cfName, List(

pvColName,

uvColName,

newCountColName,

oldCountColName

))

// Compute PV: if HBase already holds a pv value, add the new count to it

if (resultMap != null && resultMap.size > 0 && StringUtils.isNotBlank(resultMap(pvColName))) {

totalPv = resultMap(pvColName).toLong + value.pv

}

else {

totalPv = value.pv

}

if (resultMap != null && resultMap.size > 0 && StringUtils.isNotBlank(resultMap(uvColName))) {

totalUv = resultMap(uvColName).toLong + value.uv

}

else {

totalUv = value.uv

}

// - Check whether HBase already holds a result record

// - If it does, read it and add the new values

// - If it does not, write the new values directly

if (resultMap != null && resultMap.size > 0 && StringUtils.isNotBlank(resultMap(newCountColName))) {

totalNewCount = resultMap(newCountColName).toLong + value.newCount

}

else {

totalNewCount = value.newCount

}

if (resultMap != null && resultMap.size > 0 && StringUtils.isNotBlank(resultMap(oldCountColName))) {

totalOldCount = resultMap(oldCountColName).toLong + value.oldCount

}

else {

totalOldCount = value.oldCount

}

// Channel ID (channelID), browser (browser), date (date), pv, uv, new users (newCount), returning users (oldCount)

HBaseUtil.putMapData(tableName, rowkey, cfName, Map(

channelIDColName -> value.channelID,

broswerColName -> value.browser,

dateColName -> value.date,

pvColName -> totalPv.toString,

uvColName -> totalUv.toString,

newCountColName -> totalNewCount.toString,

oldCountColName -> totalOldCount.toString

))

}

})

}

}

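5. Introduction to the Real-Time Data Synchronization System

5.1. Goals of the Real-Time Data Synchronization System

- Understand the canal data synchronization solution

- Install canal

- Implement the Flink data synchronization system

5.2. Requirements Analysis for the Business Synchronization System

5.2.1. Business Scenario

A large e-commerce site needs to analyze each day's transaction volume. Running that analysis directly on MySQL is very slow and can even bring MySQL down. To analyze data at this scale, the data in MySQL has to be synchronized to a storage system built for massive data (HDFS, HBase). So how do we export it?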

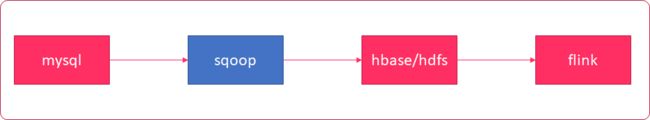

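5.2.2. Solution 1: Sqoop

- Use Sqoop to periodically export the MySQL data to HBase or HDFS

- To export MySQL data, Sqoop first has to query it with SQL statements and then run the export

Problems

MySQL is already under heavy load. Every Sqoop export runs additional SQL queries, which adds to that load and slows MySQL down even further.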

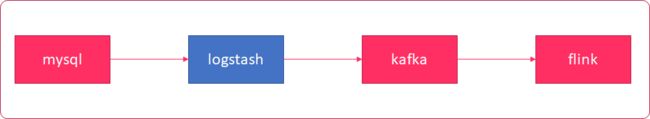

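5.2.3. Solution 2: Logstash

- Use Logstash to extract the MySQL data into Kafka

- Write SQL statements in Logstash to query the data from MySQL

Problems

Logstash also has to execute SQL statements against MySQL, so it too adds load and slows MySQL down.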

5.2.4. Solution 3: canal

- Use canal to parse MySQL's binlog to obtain the data

- No SQL queries are run against MySQL, so no extra load is added to it
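The idea behind log-based synchronization can be sketched as replaying a stream of row changes onto a copy of the table, with no query ever sent to the source database. The model below is purely conceptual and hypothetical: `RowChange`, `Insert`, `Update`, `Delete` and `replay` are illustrative names, not canal's API, and real binlog events carry far more metadata.

```scala
object BinlogReplaySketch {
  // Hypothetical, simplified row-change events keyed by primary key.
  sealed trait RowChange
  case class Insert(id: Long, row: Map[String, String]) extends RowChange
  case class Update(id: Long, changed: Map[String, String]) extends RowChange
  case class Delete(id: Long) extends RowChange

  type Table = Map[Long, Map[String, String]]

  // Applies the changes in log order to a replica of the table.
  def replay(events: Seq[RowChange], start: Table = Map.empty): Table =
    events.foldLeft(start) {
      case (table, Insert(id, row))     => table + (id -> row)
      case (table, Update(id, changed)) =>
        table + (id -> (table.getOrElse(id, Map.empty[String, String]) ++ changed))
      case (table, Delete(id))          => table - id
    }
}
```

Because the replica is rebuilt purely from the change log, the order of events matters: replaying the same events in a different order can produce a different table.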