scikit-learn工具包中常用的特征选择方法介绍

对于特征选择的作用在这里照搬《西瓜书》中的描述:

常用的特征选择方法有以下三种(备注:以下代码采用Jupyter notebook编写,格式与传统稍有不同):

1、过滤式特征选择

简单理解就是过滤式特征选择通过选择与响应变量(目标变量)相关性度量(可能是相关系数,互信息,卡方检验等)高于设定阈值的特征。

在scikit-learn工具包中,主要有以下几种过滤式特征选择方法:

1)、移除方差小于指定阈值的特征

特征的分布方差低,表示特征的分布集中度高,多样性较低,包含的信息量少,对模型的作用不大。如某个特征的取值全为0,则该特征在模型训练过程中起不到正向作用。

对于下述数据,通过设置threshold,可以过滤掉特征方差小于threshold的特征。

from sklearn.feature_selection import VarianceThreshold

X = [[0, 0, 1], [0, 1, 0], [1, 0, 0], [0, 1, 1], [0, 1, 0], [0, 1, 1]]

# 此处使用的threshold是二分类特征中,某个取值占样本总体的80%

var_selection = VarianceThreshold(threshold=0.8 * (1 - 0.8))

X_selection = var_selection.fit_transform(X)

X_selectionarray([[0, 1],

[1, 0],

[0, 0],

[1, 1],

[1, 0],

[1, 1]])

计算得到的各个属性列的方差如下(threshold=0.16):

# 计算得到的各个属性列的方差

var_selection.variances_array([0.13888889, 0.22222222, 0.25 ])2)、单变量特征选择

单变量特征选择是通过单变量统计检验来选择最好的特征。它可以看作是估计器的预处理步骤。

示例:

i. SelectKBest按照度量得分,选择得分前k个特征。

# 使用卡方检验完成单变量特征选择

from sklearn.feature_selection import SelectKBest

from sklearn.feature_selection import chi2

from sklearn.datasets import load_iris

"""

Parameters

| ----------

| score_func : callable

| Function taking two arrays X and y, and returning a pair of arrays

| (scores, pvalues) or a single array with scores.

| Default is f_classif (see below "See also"). The default function only

| works with classification tasks.

|

| k : int or "all", optional, default=10

| Number of top features to select.

| The "all" option bypasses selection, for use in a parameter search.

"""

X, y = load_iris(return_X_y=True)

# 使用卡方检验计算特征和目标值的关系,并保留得分最高的k=2个特征

kBest = SelectKBest(chi2, k=2)

kBest.fit_transform(X, y)

print(kBest.scores_, kBest.pvalues_)

print(X.shape, X_new.shape)

print(X[:5, 2:],"\n" ,X_new[:5, :])

# chi2(X, y)[ 10.81782088 3.7107283 116.31261309 67.0483602 ] [4.47651499e-03 1.56395980e-01 5.53397228e-26 2.75824965e-15]

(150, 4) (150, 2)

[[1.4 0.2]

[1.4 0.2]

[1.3 0.2]

[1.5 0.2]

[1.4 0.2]]

[[1.4 0.2]

[1.4 0.2]

[1.3 0.2]

[1.5 0.2]

[1.4 0.2]]ii. SelectPercentile按照度量得分,选择得分前百分之多少的特征

from sklearn.feature_selection import SelectPercentile

from sklearn.feature_selection import chi2

from sklearn.datasets import load_iris

"""

score_func : callable

| Function taking two arrays X and y, and returning a pair of arrays

| (scores, pvalues) or a single array with scores.

| Default is f_classif (see below "See also"). The default function only

| works with classification tasks.

|

| percentile : int, optional, default=10

| Percent of features to keep.

"""

X, y = load_iris(return_X_y=True)

# 使用卡方检验计算特征和目标值的关系,并保留特征

percentile = SelectPercentile(chi2, percentile=0.5)

percentile.fit_transform(X, y)

print(percentile.scores_, percentile.pvalues_)

print(X.shape, X_new.shape)

print(X[:5, 2:],"\n" ,X_new[:5, :])

# chi2(X, y)[ 10.81782088 3.7107283 116.31261309 67.0483602 ] [4.47651499e-03 1.56395980e-01 5.53397228e-26 2.75824965e-15]

(150, 4) (150, 2)

[[1.4 0.2]

[1.4 0.2]

[1.3 0.2]

[1.5 0.2]

[1.4 0.2]]

[[1.4 0.2]

[1.4 0.2]

[1.3 0.2]

[1.5 0.2]

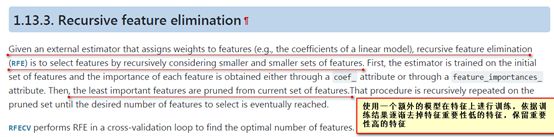

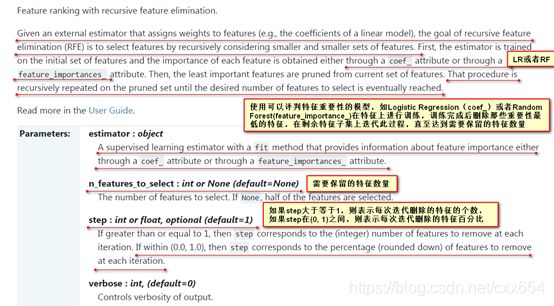

[1.4 0.2]]2、包裹式特征选择

简单理解就是包裹式特征选择方法通过不断训练模型,在每轮迭代过程中,去除那些贡献度最低的特征,直至达到最小特征数,或者模型性能出现大幅下降为止。

参数说明:

Parameters

Estimator:进行特征选择的模型,模型需要能够表示特征的重要程度

n_features_to_select:选择的特征数量

step:每轮迭代丢弃的特征数量或百分比

Attributes

n_features_:被选中的特征数量

support_:特征是否被选中的状态码,True or False,与ranking_值为1对应

ranking_:特征的排名次序,被选中值为1

estimator_:

示例:

# 使用递归的特征消除方法RFE进行特征选择

%matplotlib inline

from sklearn.datasets import load_digits

from sklearn.feature_selection import RFE

from sklearn.linear_model import LogisticRegression

from matplotlib import pyplot as plt

from sklearn.svm import SVC

digits = load_digits()

X = digits.images.reshape(len(digits.images), -1)

y = digits.target

print(X.shape)

# 用于训练的简单模型

svc = SVC(kernel="linear", C=1)

# n_features_to_select:选择的特征数量, step:每次迭代清除的特征数量

rfe = RFE(estimator=svc, n_features_to_select=16, step=1)

rfe.fit(X, y)

# 特征得分

ranking = rfe.ranking_.reshape(digits.images[0].shape)

print(ranking.shape)

# ranking_结果表示每个特征的最终排序名次,被选中的特征的ranking_值为1

print("每个特征的最终排序名次:", rfe.ranking_)

# support_表示每个特征是否被选中 True or False, ranking_为1的对应位置为True

print("每次特征是否被选中:", rfe.support_)

# n_features_表示最终选择的特征数量

print("选中的特征数量:", rfe.n_features_)

print("模型:\n", rfe.estimator_)

# 依据特征选择结果选择特征

X_selected = X[:, rfe.support_]

print("被选择的特征数据:", X_selected.shape)

# Plot pixel ranking, ranking -> (8, 8)表示每个像素点的特征重要性排序

plt.matshow(ranking, cmap=plt.cm.Blues)

plt.colorbar()

plt.title("Ranking of pixels with RFE")

plt.show()(1797, 64)

(8, 8)

每个特征的最终排序名次: [49 35 16 8 1 2 19 36 42 22 15 28 1 17 29 37 39 26 4 1 13 1 24 38

40 30 1 3 5 23 1 44 48 27 10 20 14 1 1 47 46 25 1 1 1 1 1 43

41 32 11 21 9 1 7 33 45 34 1 12 18 6 1 31]

每次特征是否被选中: [False False False False True False False False False False False False

True False False False False False False True False True False False

False False True False False False True False False False False False

False True True False False False True True True True True False

False False False False False True False False False False True False

False False True False]

选中的特征数量: 16

模型:

SVC(C=1, cache_size=200, class_weight=None, coef0=0.0,

decision_function_shape='ovr', degree=3, gamma='auto_deprecated',

kernel='linear', max_iter=-1, probability=False, random_state=None,

shrinking=True, tol=0.001, verbose=False)

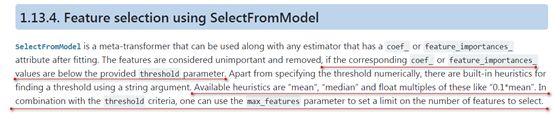

被选择的特征数据: (1797, 16)3、嵌入式特征选择

嵌入式特征选择原则:

a. 基于L1正则化的特征选择

1)、对于回归问题使用Lasso进行回归特征选择

2)、对于分类问题使用LR和LinearSVC进行特征选择

3)、基于L1正则化的特征选择方法基于coef_进行选择

b. 基于树模型的特征选择方法

1)、基于树模型的特征选择方法基于feature_importance_进行选择

示例:

1)、基于LassoCV模型完成嵌入式特征选择

加载糖尿病数据集:

import matplotlib.pyplot as plt

import numpy as np

from sklearn.datasets import load_diabetes

from sklearn.feature_selection import SelectFromModel

from sklearn.linear_model import LassoCV

diabetes = load_diabetes()

X = diabetes.data

y = diabetes.target

feature_names = diabetes.feature_names

print(feature_names)

X[:2]基于糖尿病数据集训练LassoCV估计器:

clf = LassoCV().fit(X, y)

# 由于LassoCV训练得到的模型参数可能为正或者负,为正表示对于正类有积极影响,为负表示对正类有消极影响

# 积极影响和消极影响都是影响,所以要对影响系数取绝对值

importance = np.abs(clf.coef_)

print(importance)[ 0. 226.2375274 526.85738059 314.44026013 196.92164002 1.48742026 151.78054083 106.52846989 530.58541123 64.50588257]基于LassoCV模型训练得到的参数绝对值选择绝对值较大的参数对应的特征:

idx_third = importance.argsort()[-3]

threshold = importance[idx_third] + 0.01

# 获取排名前2的特征索引编号

idx_features = (-importance).argsort()[:2]

# 获取排名前2的特征名

name_features = np.array(feature_names)[idx_features]

print('Selected features: {}'.format(name_features))

sfm = SelectFromModel(clf, threshold=threshold)

sfm.fit(X, y)

X_transform = sfm.transform(X)

# 特征选择后的特征数量

n_features = sfm.transform(X).shape[1]

X_transform.shape, n_featuresSelected features: ['s5' 'bmi']((442, 2), 2)查看特征选择结果:

print("特征选择标记:", sfm.get_support())

print("模型参数权重:", sfm.estimator_.coef_)

print("特征选择阈值:", sfm.threshold_)特征选择标记: [False False True False False False False False True False]

模型参数权重: [ -0. -226.2375274 526.85738059 314.44026013 -196.92164002 1.48742026 -151.78054083 106.52846989 530.58541123 64.50588257]

特征选择阈值: 314.4502601292062)、基于LR完成嵌入式特征选择

from sklearn.feature_selection import SelectFromModel

from sklearn.linear_model import LogisticRegression

X = [[ 0.87, -1.34, 0.31 ],

[-2.79, -0.02, -0.85 ],

[-1.34, -0.48, -2.55 ],

[ 1.92, 1.48, 0.65 ]]

y = [0, 1, 0, 1]

selector = SelectFromModel(estimator=LogisticRegression()).fit(X, y)

print("模型参数权重:", selector.estimator_.coef_)

# 特征选择阈值默认为权重参数绝对值的均值

print("特征选择阈值:", selector.threshold_, np.mean(np.abs(selector.estimator_.coef_)))

print("特征选择标记:", selector.get_support())

X_transformed = selector.transform(X)

X_transformed.shape模型参数权重: [[-0.32857694 0.83411609 0.46668853]]

特征选择阈值: 0.5431271870420732 0.5431271870420732

特征选择标记: [False True False]

3)、基于L1正则化的特征选择方法(回归:Lasso,分类:LR/LinearSVC)

# iris 数据集特征选择

from sklearn.svm import LinearSVC

from sklearn.datasets import load_iris

from sklearn.feature_selection import SelectFromModel

X, y = load_iris(return_X_y=True)

print(X.shape)

# 带有L1正则化项的LinearSVC分类模型

lsvc = LinearSVC(C=0.01, penalty="l1", dual=False).fit(X, y)

model = SelectFromModel(lsvc, prefit=True)

X_new = model.transform(X)

print(X_new.shape)

print(model.get_support())(150, 4)

(150, 3)

[ True True True False]4)、基于树模型的特征选择方法

from sklearn.ensemble import RandomForestClassifier

from sklearn.datasets import load_iris

from sklearn.feature_selection import SelectFromModel

X, y = load_iris(return_X_y=True)

print(X.shape)

clf = ExtraTreesClassifier(n_estimators=50)

clf = clf.fit(X, y)

print(clf.feature_importances_ )

# 默认使用的threshold是clf模型feature_importance_的均值

model = SelectFromModel(clf, prefit=True, threshold=np.mean(clf.feature_importances_))

X_new = model.transform(X)

print(X_new.shape)

print(model.get_support())(150, 4)

[0.10608772 0.0658854 0.43061022 0.39741666]

(150, 2)

[False False True True]特征选择模型及参数:

modelSelectFromModel(estimator=ExtraTreesClassifier(bootstrap=False,

class_weight=None,

criterion='gini', max_depth=None,

max_features='auto',

max_leaf_nodes=None,

min_impurity_decrease=0.0,

min_impurity_split=None,

min_samples_leaf=1,

min_samples_split=2,

min_weight_fraction_leaf=0.0,

n_estimators=50, n_jobs=None,

oob_score=False,

random_state=None, verbose=0,

warm_start=False),

max_features=None, norm_order=1, prefit=True,

threshold=0.24999999999999994)模型属性:

model.estimator, model.threshold, model.max_features, model.prefit, clf.feature_importances_(ExtraTreesClassifier(bootstrap=False, class_weight=None, criterion='gini',

max_depth=None, max_features='auto', max_leaf_nodes=None,

min_impurity_decrease=0.0, min_impurity_split=None,

min_samples_leaf=1, min_samples_split=2,

min_weight_fraction_leaf=0.0, n_estimators=50, n_jobs=None,

oob_score=False, random_state=None, verbose=0,

warm_start=False),

0.24999999999999994,

None,

True,

array([0.10608772, 0.0658854 , 0.43061022, 0.39741666]))参考:scikit-learn官方文档