Crunch compression of ETC textures

Introduction to Crunch: Compressing DXT textures.

Crunch is an open source texture compression library © Richard Geldreich, Jr. and Binomial LLC, available on GitHub. The library was originally designed for compression of DXT textures. The following section describes the main ideas used in the original algorithm.

DXT Encoding

DXT is a block-based texture compression format. The image is split up into 4×4 blocks, and each block is encoded using a fixed number of bits. In the DXT1 format (used for compression of RGB images), each block is encoded using 64 bits. Information about each block is stored using two 16-bit color endpoint values (color0 and color1) and 16 2-bit selector values (one selector value per pixel), which determine how the color of each pixel is computed (it can be either one of the two endpoint colors or a blend between them). According to the DXT1 compression format, there are two different ways to blend the endpoint colors, depending on which endpoint color has the higher value. However, the Crunch algorithm uses a subset of DXT1 encoding (endpoint colors are always ordered in such a way that color0 >= color1). Therefore, when using Crunch compression, endpoint colors are always blended in the following way:

| selector value | pixel color |
|---|---|
| 0 | color0 |
| 1 | color1 |
| 2 | (2 * color0 + color1) / 3 |
| 3 | (color0 + 2 * color1) / 3 |

DXT encoding can therefore be visually represented in the following way:

[Figure panels: color0 (RGB565), 16 bits/block; color1 (RGB565), 16 bits/block; selectors, 2 bits/pixel; decoded DXT, 4 bits/pixel]

Each pixel can be decoded by merging together color0 and color1 values according to the selector value.
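As a rough illustration of the decoding rule above, the following Python sketch expands the two RGB565 endpoints and builds the four-entry palette addressed by the selectors. The function names are made up for this example, and the integer rounding used here is an assumption; real decoders may round the blended colors slightly differently.

```python
def rgb565_to_rgb888(c):
    """Expand a 16-bit RGB565 value to an (r, g, b) tuple of 8-bit components."""
    r5, g6, b5 = (c >> 11) & 0x1F, (c >> 5) & 0x3F, c & 0x1F
    # Replicate the high bits into the low bits so that the maximum maps to 255.
    return ((r5 << 3) | (r5 >> 2), (g6 << 2) | (g6 >> 4), (b5 << 3) | (b5 >> 2))


def dxt1_palette(color0, color1):
    """Colors addressed by selector values 0..3, assuming color0 >= color1."""
    c0, c1 = rgb565_to_rgb888(color0), rgb565_to_rgb888(color1)

    def blend(a, b):
        # (2 * a + b) / 3 per component, using integer division (assumed rounding).
        return tuple((2 * x + y) // 3 for x, y in zip(a, b))

    return [c0, c1, blend(c0, c1), blend(c1, c0)]


def decode_block(color0, color1, selectors):
    """Decode 16 selector values into 16 RGB pixels."""
    palette = dxt1_palette(color0, color1)
    return [palette[s] for s in selectors]


if __name__ == "__main__":
    # White and black endpoints give two grays in between.
    print(dxt1_palette(0xFFFF, 0x0000))
```

The palette is computed once per block, so decoding a pixel is a single table lookup by selector value.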

For simplicity, information about color0 and color1 can be displayed on the same image (with the upper part of every 4×4 block filled with color0 and the lower part filled with color1). Then all the information necessary for decoding the final texture can be represented in a form of the following 2 images (4×4 blocks are displayed slightly separated from each other):

[Figure panels: color endpoints, 32 bits/block; color selectors, 32 bits/block]

Tiling

For an average texture it is quite common that neighbor blocks have similar endpoints. This property can be used to improve the compression ratio. In order to achieve this, Crunch introduces the concept of “chunks”. All the texture blocks are split into “chunks” of 2×2 blocks (the size of each chunk is 8×8 pixels), and each chunk is associated with one of the following 8 chunk types:

Blocks with identical endpoints form a “tile” within a chunk, and are displayed united together on the picture above. Once the information about the chunk types has been encoded, it is sufficient to encode only one endpoint per tile. For example, in case of the leftmost chunk type, all the blocks within a chunk have the same endpoints, so for such a chunk it is sufficient to encode only one endpoint pair. In case of the rightmost chunk encoding, all the endpoint pairs are different, so it is necessary to encode all 4 of them. The following example shows texture endpoints, grouped into chunks, where each chunk is split into tiles:

Of course, the described chunk types don’t cover all the possible combinations of matching endpoints, but at the same time, this way the information about the matching endpoints can be encoded very efficiently. Specifically, encoding of the chunk type requires 3 bits per 4 blocks (0.75 bits per block, uncompressed).

The Crunch algorithm can force neighboring blocks within a chunk to have identical endpoints when the extra accuracy of the encoded colors isn’t worth the extra bits needed to encode additional endpoints. This is achieved in the following way. First, each chunk is encoded in 8 different ways, corresponding to the 8 chunk types described above (instead of running DXT1 optimization for each block, the algorithm runs DXT1 optimization for each tile). The quality of each encoding is then evaluated as the PSNR multiplied by a coefficient associated with the chunk type used, and the optimal encoding is selected. The trick here is that chunk types with a higher number of matching endpoints also have higher quality coefficients. In other words, if using the same endpoint for two neighboring blocks within a chunk doesn’t reduce the PSNR much, then the algorithm will most likely select the chunk type where those blocks belong to the same tile. This process can be referred to as “tiling”.

Quantization

The basic idea of Crunch compression is to perform quantization of the determined endpoints and selectors blocks, in order to encode them more efficiently. This is achieved using vector quantization. The idea is similar to color quantization, when a color image is represented using a color palette and palette indices defined for each pixel.

In order to perform vector quantization, each endpoint pair should be represented with a vector. For example, it is possible to represent a tile endpoint pair with a vector (color0.r, color0.g, color0.b, color1.r, color1.g, color1.b), where color0 and color1 are obtained from DXT1 optimization. However, such a representation doesn’t reflect the continuity properties of the source texture very well (for example, in the case of a solid block, a small change of the block color might result in a significant change of the optimal color0 and color1 used to encode this color). Instead, the Crunch algorithm uses a different representation. The source pixels of each tile, which are represented by their (r, g, b) vectors, are split into 2 clusters using vector quantization, providing two centroids for each tile: low_color and high_color. Then the endpoints of each tile are represented with a (low_color.r, low_color.g, low_color.b, high_color.r, high_color.g, high_color.b) vector. Such a representation of the tile endpoints doesn’t depend on the DXT1 optimization result, but at the same time performs quite well.
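The two-cluster representation can be sketched with a small k-means loop. This is only an illustration under stated assumptions: the initialisation (darkest and brightest pixel), the fixed iteration count and the function names are choices made for this example, not details taken from the Crunch source.

```python
def mean(points):
    """Component-wise average of a non-empty list of (r, g, b) tuples."""
    n = len(points)
    return tuple(sum(p[i] for p in points) / n for i in range(3))


def dist2(a, b):
    """Squared Euclidean distance between two colors."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def tile_endpoint_vector(pixels, iterations=8):
    """Split tile pixels into 2 clusters and return
    (low.r, low.g, low.b, high.r, high.g, high.b)."""
    # Assumed initialisation: start from the darkest and brightest pixels.
    low = min(pixels, key=sum)
    high = max(pixels, key=sum)
    for _ in range(iterations):
        near_low = [p for p in pixels if dist2(p, low) <= dist2(p, high)]
        near_high = [p for p in pixels if dist2(p, low) > dist2(p, high)]
        if not near_high:
            # Solid (or nearly solid) tile: both centroids coincide.
            low = high = mean(near_low)
            break
        low, high = mean(near_low), mean(near_high)
    return tuple(low) + tuple(high)


if __name__ == "__main__":
    # A small change of a solid tile color gives a small change of the vector.
    print(tile_endpoint_vector([(10, 20, 30)] * 16))
    print(tile_endpoint_vector([(11, 20, 30)] * 16))
```

With this representation a solid tile maps to a vector with identical low and high halves, so nearby solid colors stay close to each other in the quantization space.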

Note that after quantization all the blocks within a tile will be associated with the same endpoint codebook element, so they will get assigned the same endpoint index. This means that initially determined chunk types will be still valid after endpoint quantization.

Selectors of each 4×4 block can be represented with a vector of 16 components, corresponding to the selector values of each block pixel. To improve the result of the quantization, selector values are reordered as follows, so that they better reflect the continuity of the selected color values:

| linear selector value | pixel color |
|---|---|
| 0 | color0 |
| 1 | (2 * color0 + color1) / 3 |
| 2 | (color0 + 2 * color1) / 3 |
| 3 | color1 |
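The reordering amounts to a fixed permutation of the four selector values. A minimal sketch, with the lookup tables read off the two selector tables in this article (the names are chosen for this example):

```python
# DXT1 selector value -> linear selector value.
DXT1_TO_LINEAR = (0, 3, 1, 2)
# Linear selector value -> DXT1 selector value (the inverse permutation).
LINEAR_TO_DXT1 = (0, 2, 3, 1)


def to_linear(selectors):
    """Convert DXT1 selector values to linear selector values."""
    return [DXT1_TO_LINEAR[s] for s in selectors]


def to_dxt1(linear_selectors):
    """Convert linear selector values back to DXT1 selector values."""
    return [LINEAR_TO_DXT1[s] for s in linear_selectors]


if __name__ == "__main__":
    # A smooth ramp from color0 to color1 is [0, 2, 3, 1] in DXT1 order,
    # but becomes the monotonic sequence [0, 1, 2, 3] in linear order.
    print(to_linear([0, 2, 3, 1]))
```

In linear order, numerically close selector values correspond to visually close colors, which is what makes averaging selector vectors during quantization meaningful.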

The vector quantization algorithm splits all the input vectors into separate groups (clusters) in such a way that the vectors in each group are more or less similar. Each group is represented by its centroid, which is computed as an average of all the vectors in the group according to the selected metric. The computed centroid vectors are then used to generate the codebook (centroid vector components are clipped and rounded to integers in order to represent valid endpoints or selectors). The original texture elements are then replaced with the elements of the computed codebooks (endpoints for each source 4×4 block are replaced with the closest endpoint pair from the generated endpoint codebook, and selectors for each source 4×4 block are replaced with the selector values of the closest selector codebook element).

The result of vector quantization performed for both endpoints and selectors can be represented in the following way:

[Figures: endpoint codebook; selector codebook]

After quantization, it is sufficient to store the following information in order to decode the image:

- chunk types

- endpoint codebook

- selector codebook

- endpoint indices (one index per tile)

- selector indices (one index per block)

The quality parameter provided to the Crunch compressor directly controls the size of the generated endpoint and selector codebooks. The higher the quality value, the larger the endpoint and selector codebooks, the wider the range of possible indices, and consequently the larger the compressed texture.

Encoding of the DXT Alpha channel

DXT encoding for the alpha channel is very similar to the DXT encoding of the color information. Information about the alpha channel of each block is stored using 64 bits: two 8-bit alpha endpoint values (alpha0 and alpha1), and 16 3-bit selector values (one selector value per pixel) which determine how the alpha of each pixel is computed (it can be either one of the two alpha values or a blend between them). As has been mentioned before, Crunch algorithm uses a subset of DXT encoding, so the possible alpha values are always blended in the following way:

| selector value | pixel alpha |
|---|---|
| 0 | alpha0 |
| 1 | alpha1 |
| 2 | (6 * alpha0 + 1 * alpha1) / 7 |
| 3 | (5 * alpha0 + 2 * alpha1) / 7 |
| 4 | (4 * alpha0 + 3 * alpha1) / 7 |
| 5 | (3 * alpha0 + 4 * alpha1) / 7 |
| 6 | (2 * alpha0 + 5 * alpha1) / 7 |
| 7 | (1 * alpha0 + 6 * alpha1) / 7 |
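The alpha table translates into a short palette function. This is a sketch only: the function name is made up for this example, and integer division is an assumed rounding rule that real decoders may not share.

```python
def alpha_palette(alpha0, alpha1):
    """Alpha values addressed by selector values 0..7 (8-value blending mode)."""
    palette = [alpha0, alpha1]
    for w in range(1, 7):
        # Selector 2 uses weights (6, 1), selector 7 uses weights (1, 6).
        palette.append(((7 - w) * alpha0 + w * alpha1) // 7)
    return palette


if __name__ == "__main__":
    print(alpha_palette(255, 0))
    # Size check: two 8-bit endpoints plus 16 selectors of 3 bits each.
    print(2 * 8 + 16 * 3)
```

The second printed value confirms the 64 bits per block quoted for the alpha channel.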

Vector quantization for the alpha channel is performed in exactly the same way as for the color components, except that the vectors representing the alpha endpoints of each tile consist of 2 components (low_alpha, high_alpha) and are obtained by clustering the alpha values of all the tile pixels.

Note that the chunk type, determined during the tiling step, is common for both color and alpha endpoints. So in case of textures using alpha channel, chunk type is determined based on the combined PSNR computed for color and alpha components.

Compression step

The main idea used in Crunch algorithm for improving the compression ratio is based on the fact that changing the order of the elements in the codebook doesn’t affect the decompression result (considering that the indices are reassigned accordingly). In other words, the elements of the generated codebooks can be reordered in such a way, so that the dictionary elements and indices acquire some specific properties, which allow them to be compressed more efficiently. Specifically, if the neighbor encoded elements appear to be similar, then each element can be used for prediction of the following element, which significantly improves the compression ratio.

According to this scheme, Crunch algorithm is using zero order prediction when encoding codebook elements and indices. Instead of encoding endpoint and selector indices, the algorithm encodes the deltas between the indices of the neighbor encoded blocks. The codebook elements are encoded using per-component prediction. Specifically, each endpoint codebook element (which is represented by two RGB565 colors) is encoded as 6 per-component deltas from the previous dictionary element. Each selector codebook element (which is represented by 16 2-bit selector values) is encoded as 16 per-component deltas from the previous dictionary element.
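The delta scheme can be sketched as follows. The helper names are invented for this example, each codebook element is assumed to be a tuple of integer components (6 for an endpoint pair, 16 for a selector block), and the first element is assumed to be predicted from zero; how the resulting deltas are mapped to Huffman symbols is not covered here.

```python
def index_deltas(indices):
    """Difference between each index and the previously encoded one."""
    deltas, prev = [], 0
    for index in indices:
        deltas.append(index - prev)
        prev = index
    return deltas


def codebook_deltas(codebook):
    """Per-component deltas of each codebook element from the previous element."""
    prev = (0,) * len(codebook[0])
    deltas = []
    for element in codebook:
        deltas.append(tuple(c - p for c, p in zip(element, prev)))
        prev = element
    return deltas


def undo_codebook_deltas(deltas):
    """Rebuild the codebook by accumulating the per-component deltas."""
    codebook, prev = [], (0,) * len(deltas[0])
    for delta in deltas:
        prev = tuple(p + d for p, d in zip(prev, delta))
        codebook.append(prev)
    return codebook


if __name__ == "__main__":
    # Two similar endpoint pairs (r0, g0, b0, r1, g1, b1) in RGB565 component ranges.
    endpoints = [(10, 20, 10, 8, 18, 9), (11, 21, 10, 8, 19, 9)]
    print(codebook_deltas(endpoints))
    print(index_deltas([5, 5, 6, 6, 4]))
```

When neighboring elements are similar, most deltas are small and cluster around zero, which is what makes the subsequent entropy coding effective.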

On the one hand, endpoint indices of neighboring blocks should be similar, as the encoder compresses the deltas between the indices of neighboring blocks. On the other hand, neighboring codebook elements should also be similar, as the encoder compresses the deltas between the components of those neighboring codebook elements. The combined optimization is based on Zeng’s technique, using a weighted function which takes into account both the similarity of the indices of neighboring blocks and the similarity of neighboring elements in the codebook. This reordering optimization is performed for both the endpoint and selector codebooks.

Finally, the reordered codebooks and indices, along with the chunk type information, are encoded with Huffman coding (using zero order prediction for indices and codebook components). Each type of encoded data uses its own Huffman table, or multiple tables. For performance reasons adaptive Huffman coding isn’t used.

Improving Crunch compression library

We performed a comprehensive analysis of the algorithms and techniques used in the original version of Crunch and introduced several modifications which allowed us to significantly improve the compression performance. The updated Crunch library, introduced in Unity 2017.3, can compress DXT textures up to 2.5 times faster, while providing about 10% better compression ratio. At the same time, decompressed textures, generated by both libraries, are identical bit by bit. The latest version of the library, which will reach Beta builds soon, will be able to perform Crunch compression of DXT textures about 5 times faster than the original version. The latest version of the Crunch library can be found in the following GitHub repository.

The main modifications of the original Crunch library are described below. The improvement in compressed size and compression time, introduced by each modification, is described as a saved portion of the compressed size and compression time spent by the original library. It has been evaluated on the Kodak image test set. When compressing real world textures, the improvement in compression size should be normally higher.

- Replace chunk encoding scheme with block encoding scheme (improvement in compressed size: 2.1%, improvement in compression time: 7%)

As described above, in the original version of the Crunch algorithm all the blocks are grouped into chunks of 2×2 blocks. Each chunk is associated with one of 8 different chunk types. The type of the chunk determines which blocks inside the chunk have the same endpoint indices. This scheme performs quite well, because it is often more efficient to compress information about the endpoint equality than to compress duplicate endpoint indices. However, this scheme can be improved. The modified Crunch algorithm no longer uses the concept of chunks. Instead, for each block it can encode a reference to a previously processed neighboring block from which the endpoint can be copied. Considering that the texture is decompressed from left to right, top to bottom, the endpoints of each decoded block can be either decoded from the input stream, copied from the nearest block to the left (reference to the left) or copied from the nearest block above (reference to the top):

The following example shows quantized texture endpoints with the references:

Note that the modified Crunch encoding is a superset of the original encoding, so all the images previously encoded with the original Crunch algorithm can be losslessly transcoded into the new format, but not vice versa. Even though the new endpoint equality encoding is more expensive (about 1.58 bits per block, uncompressed), it provides more flexibility for endpoint matching inside the previously used “chunks” and, more importantly, it allows endpoints to be copied from one “chunk” to another (which isn’t possible when using the original chunk encoding). The blocks are no longer grouped together and are encoded in the same order as they appear in the image, which significantly simplifies the algorithm and eliminates extra levels of indirection.
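A decoder for the block reference scheme can be sketched in a few lines. The symbol values NEW, LEFT and TOP and the function names are hypothetical, and the reference stream is assumed to be valid (no LEFT reference in the first column, no TOP reference in the first row).

```python
import math

NEW, LEFT, TOP = 0, 1, 2  # hypothetical symbol values for the three reference types


def decode_endpoint_indices(references, new_indices, width, height):
    """Rebuild one endpoint index per block, scanning left to right, top to bottom."""
    stream = iter(new_indices)
    grid = [[None] * width for _ in range(height)]
    for y in range(height):
        for x in range(width):
            ref = references[y * width + x]
            if ref == LEFT:
                grid[y][x] = grid[y][x - 1]   # copy from the block to the left
            elif ref == TOP:
                grid[y][x] = grid[y - 1][x]   # copy from the block above
            else:
                grid[y][x] = next(stream)     # read a new index from the stream
    return grid


def bits_per_symbol(symbol_count):
    """Uncompressed cost of one symbol out of symbol_count equally likely values."""
    return math.log2(symbol_count)


if __name__ == "__main__":
    print(decode_endpoint_indices([NEW, LEFT, TOP, NEW], [7, 3], 2, 2))
    print(bits_per_symbol(3))      # three reference types per block
    print(bits_per_symbol(8) / 4)  # eight chunk types shared by four blocks
```

The last two lines reproduce the uncompressed costs quoted in the text: about 1.58 bits per block for the reference scheme versus 0.75 bits per block for the chunk types.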

- Encode selector indices without prediction (improvement in compressed size: 1.8%, improvement in compression time: 10%)

The original version of Crunch encodes the deltas between neighboring indices in order to take advantage of the similarity of neighboring indices. The efficiency of this approach depends heavily on the continuity of the encoded data. While neighboring color and alpha endpoints are usually similar, this is often not the case for selectors. Of course, in some situations, encoding the deltas for selector indices makes sense, for example, when an image contains a lot of regular patterns aligned to the 4×4 block boundaries. In practice, however, such situations are relatively rare, so it is usually more efficient to encode raw selector indices without prediction. Note that when selector indices are encoded without prediction, the reordering of the selector indices no longer affects the size of the encoded selector indices stream (at least when using Huffman coding). This makes the Zeng optimization of selector indices unnecessary, and it’s sufficient to simply optimize the size of the packed selector codebook.

- Remove duplicate endpoints and selectors from the codebooks (improvement in compressed size: 1.7%)

By default, the size of the endpoint and selector codebooks is calculated based on the total number of blocks in the image and the quality parameter, while the actual complexity of the image isn’t evaluated and isn’t taken into account. The target codebook size is selected in such a way that even complex images can be approximated well enough. At the same time, the lower the complexity of the image, the higher the density of the quantized vectors normally is. Considering that vector quantization is performed using floating point computations, and the quantized endpoints have integer components, a high density of quantized vectors will result in a large number of duplicate endpoints. As a result, some identical endpoints are represented with multiple different indices, which affects the compression ratio. Note that this isn’t the case for selectors, as their corresponding vector components are rounded after quantization, but instead it leads to some duplicate selectors in the codebook being unused. In the modified version of the algorithm, all the duplicate codebook entries are merged together, unused entries are removed from the codebooks, and endpoint and selector indices are updated accordingly.
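Merging duplicates and dropping unused entries can be sketched as a single pass over the indices. The function name is chosen for this example, codebook entries are assumed to be hashable tuples, and the resulting order (first use) is arbitrary, since the codebook is reordered later anyway.

```python
def compact_codebook(codebook, indices):
    """Merge duplicate entries, drop unused ones, and remap the indices."""
    new_codebook, position_by_entry, new_indices = [], {}, []
    for index in indices:
        entry = codebook[index]
        if entry not in position_by_entry:
            # First time this value is referenced: give it a new slot.
            position_by_entry[entry] = len(new_codebook)
            new_codebook.append(entry)
        new_indices.append(position_by_entry[entry])
    return new_codebook, new_indices


if __name__ == "__main__":
    codebook = [(1, 2), (1, 2), (9, 9), (3, 4)]  # entries 0 and 1 are duplicates, 2 is unused
    indices = [0, 1, 3, 1]
    print(compact_codebook(codebook, indices))
```

The decoded content is unchanged: looking up the new indices in the new codebook yields exactly the same entries as before.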

- Use XOR-deltas for encoding of the selector codebook (improvement in compressed size: 0.9%)

In the original version of Crunch, the selector codebook is encoded with Huffman coding applied to the raw deltas between corresponding pixel selectors of neighboring codebook elements. However, using Huffman coding for raw deltas has a downside. Specifically, for each individual pixel selector, only about half of all the possible raw deltas are valid. Indeed, once the value of the current selector is determined, the selector delta depends only on the next selector value, so only n out of 2 * n - 1 total raw delta values are possible at any specific point (where n is the number of possible selector values). This means that on each step the impossible raw delta values are being encoded with a non-zero probability, as the probability table is calculated only once for the whole codebook. The situation can be improved by using modulo-deltas instead of raw deltas (modulo 4 for color selectors and modulo 8 for alpha selectors). This eliminates the mentioned implicit restriction on the values of the decoded selector deltas, and therefore improves the compression ratio. Interestingly, the compression ratio can be improved even further if XOR-deltas are used instead of modulo-deltas (an XOR-delta is computed by simply XOR-ing two selector values). At first it might seem counterintuitive that XOR-deltas can perform better than modulo-deltas, as they don’t reflect the continuity properties of the data that well. The trick here is that the encoded selectors are first sorted according to the delta operation used and the corresponding metric.
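The counting argument can be checked directly. This sketch enumerates, for color selectors (n = 4), the whole delta alphabet and the deltas that are actually reachable from a given previous selector value; the function names are made up for this example.

```python
N = 4  # number of possible color selector values


def raw_delta(prev, cur):
    return cur - prev


def modulo_delta(prev, cur, n=N):
    return (cur - prev) % n


def xor_delta(prev, cur):
    return prev ^ cur


def alphabet(delta, n=N):
    """All delta values that can ever occur."""
    return sorted({delta(p, c) for p in range(n) for c in range(n)})


def reachable(delta, prev, n=N):
    """Delta values that can occur once the previous selector is known."""
    return sorted({delta(prev, c) for c in range(n)})


if __name__ == "__main__":
    print(alphabet(raw_delta))         # 2 * n - 1 = 7 symbols in the alphabet
    print(reachable(raw_delta, 1))     # but only n = 4 of them are possible after selector 1
    print(reachable(modulo_delta, 1))  # every symbol of the 4-symbol alphabet is possible
    print(reachable(xor_delta, 1))     # same for XOR-deltas
```

With modulo-deltas or XOR-deltas, the alphabet has exactly n symbols and all of them are possible at every step, so no probability mass is spent on deltas that cannot occur.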

- Improve Zeng reordering algorithm (improvement in compressed size: 0.7%, improvement in compression time: 5%)

After the endpoint codebook has been computed, the endpoints are reordered to improve the compression ratio. As has been described above, optimization is based on Zeng’s technique, using a weighted function which takes into account both similarity of the indices in neighbor blocks and similarity of the neighbor elements in the codebook.

The ordered list of endpoints is built starting from a single endpoint and then adding one of the remaining endpoints to the beginning or to the end of the list on each iteration. It uses a greedy strategy which is controlled by the optimization function. The similarity of the endpoint indices is evaluated as a combined neighborhood frequency of the candidate endpoint and all the endpoints in the ordered list. The similarity of the neighboring endpoints in the codebook is evaluated as the Euclidean distance from the candidate endpoint to the extremity of the ordered list. The original optimization function for an endpoint candidate p can be represented as:

F(p) = (endpoint_similarity(p) + 1) * (neighborhood_frequency(p) + 1)

The problem with this approach is the following. While endpoint_similarity(p) has a limited range of values, neighborhood_frequency(p) grows rapidly as the ordered list of endpoints gets longer. With each iteration this introduces additional imbalance into the weighted optimization function. In order to minimize this effect, it is proposed to normalize neighborhood_frequency(p) on each iteration. For computational simplicity, the normalizer is computed as the optimal neighborhood_frequency value from the previous iteration, multiplied by a constant. The modified optimization function can be represented as:

F(p) = (endpoint_similarity(p) + 1) * (neighborhood_frequency(p) + neighborhood_frequency_normalizer)
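The greedy loop with the modified function can be sketched as follows. Several details here are assumptions made only for illustration: the mapping from Euclidean distance to a bounded similarity score, the choice of the starting endpoint, the constant `normalizer_scale`, and the lower bound of 1 on the normalizer. The input `hist[i][j]` is assumed to count how often endpoints i and j are used by neighboring blocks.

```python
import math


def order_endpoints(endpoints, hist, normalizer_scale=0.5):
    """Greedy ordering sketch; returns a list of endpoint ids."""

    def similarity(p, q):
        # Assumed mapping from Euclidean distance to a bounded similarity score.
        return 1.0 / (1.0 + math.dist(endpoints[p], endpoints[q]))

    remaining = set(range(len(endpoints)))
    first = max(sorted(remaining), key=lambda i: sum(hist[i]))
    order = [first]
    remaining.remove(first)
    normalizer = 1.0  # a constant 1 corresponds to the original function
    while remaining:
        best = None
        for p in sorted(remaining):
            # Combined neighborhood frequency of p and the endpoints already ordered.
            freq = sum(hist[p][q] for q in order)
            for at_front in (True, False):
                edge = order[0] if at_front else order[-1]
                score = (similarity(p, edge) + 1) * (freq + normalizer)
                if best is None or score > best[0]:
                    best = (score, p, at_front, freq)
        _, p, at_front, freq = best
        if at_front:
            order.insert(0, p)
        else:
            order.append(p)
        remaining.remove(p)
        # Normalizer for the next iteration: best frequency times a constant.
        normalizer = max(1.0, freq * normalizer_scale)
    return order


if __name__ == "__main__":
    endpoints = [(0,), (10,), (1,)]
    hist = [[0, 1, 5], [1, 0, 0], [5, 0, 0]]
    print(order_endpoints(endpoints, hist))
```

Because the normalizer tracks the magnitude of the frequency term, the similarity factor keeps a comparable influence on the score as the ordered list grows.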

Other improvements

Additional improvement in compression speed has been achieved by optimizing the original algorithms, reducing the total amount of computations by caching the intermediate computation results, and spreading the computations between threads more efficiently.

Crunch encoding vs. general purpose compression

The described modifications of the Crunch algorithm don’t change the result of the quantization step, which means that decompressed textures, generated by both libraries, will be identical bit by bit. In other words, the improvement in compression ratio has been achieved by using a different lossless encoding of the quantized images. It might therefore be interesting to compare Crunch encoding with alternative ways of compressing the quantized textures. For example, quantized textures can be stored in a raw DXT format, compressed with LZMA. The following table displays the difference in compression ratio when using different approaches:

| Test set | DXT | Quantized DXT + LZMA | Quantized DXT + original Crunch encoding | Quantized DXT + improved Crunch encoding |
|---|---|---|---|---|
| Kodak image set | 6147.4 KB | 2227.0 KB | 2016.8 KB | 1869.9 KB |
| Adam Character Pack: Adam, Guard, Lu (93 textures) | 652.7 MB | 155.8 MB | 142.8 MB | 128.7 MB |
| Adam Exterior Environment (227 textures) | 717.8 MB | 162.6 MB | 156.3 MB | 138.1 MB |

According to the test results, it seems to be more efficient to use Crunch encoding of the computed codebooks and indices, rather than compress the quantized texture with LZMA. Not to mention that Crunch decompression is also significantly faster than LZMA decompression.

Modifying Crunch algorithm to support ETC texture format

Even though the Crunch algorithm was originally designed for compression of DXT textures, it is in fact much more powerful. With some minor adjustments it can be used to compress other texture formats. This section will describe in detail how the original Crunch algorithm was modified in order to be able to compress ETC and ETC2 textures.

ETC encoding

ETC is a block-based texture compression format. The image is split up into 4×4 blocks, and each block is encoded using a fixed number of bits. In case of ETC1 format (used for compression of RGB images), each block is encoded using 64 bits.

The first 32 bits contain information about the colors used within the 4×4 block. Each 4×4 block is split either vertically or horizontally into two 2×4 or 4×2 subblocks (the orientation of each block is controlled by the “flip” bit). Each subblock is assigned its own base color and its own modifier table index.

The two base colors of a 4×4 block can be encoded either individually as RGB444, or differentially (the first base color is encoded as RGB555, and the second base color is encoded as RGB333 signed offset from the first base color). The type of the base color encoding for each block is controlled by the “diff” bit.

The modifier table index of each subblock references one of the 8 possible rows in the following modifier table:

| modifier table index | modifier0 | modifier1 | modifier2 | modifier3 |
|---|---|---|---|---|
| 0 | -8 | -2 | 2 | 8 |
| 1 | -17 | -5 | 5 | 17 |
| 2 | -29 | -9 | 9 | 29 |
| 3 | -42 | -13 | 13 | 42 |
| 4 | -60 | -18 | 18 | 60 |
| 5 | -80 | -24 | 24 | 80 |
| 6 | -106 | -33 | 33 | 106 |
| 7 | -183 | -47 | 47 | 183 |

The intensity modifier set (modifier0, modifier1, modifier2, modifier3) defined by the modifier table index, along with the base color, determine 4 possible color values for each subblock:

base_color + RGB(modifier0, modifier0, modifier0)

base_color + RGB(modifier1, modifier1, modifier1)

base_color + RGB(modifier2, modifier2, modifier2)

base_color + RGB(modifier3, modifier3, modifier3)

Note that the higher the value of the modifier table index, the more widely the subblock colors are spread along the intensity axis.

The other 32 bits of the encoded ETC1 block describe 16 2-bit selector values (each pixel in the block can take one of the 4 possible color values described above).

ETC1 encoding can therefore be visually represented in the following way:

[Figure panels: base colors + block orientation, 26 bits/block; modifier table index, 3 bits/subblock; selectors, 2 bits/pixel; decoded ETC1, 4 bits/pixel]

Each pixel color of an ETC1 block can be decoded by adding together the base color and the modifier color, defined by the modifier table index and selector value (the result color should be clamped).
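The subblock color computation can be sketched as follows. The function names are chosen for this example, and the four colors are returned in modifier table order (modifier0 to modifier3); the mapping from the 2-bit selector patterns stored in the ETC1 bitstream to these columns is not covered here.

```python
MODIFIER_TABLE = (
    (-8, -2, 2, 8),
    (-17, -5, 5, 17),
    (-29, -9, 9, 29),
    (-42, -13, 13, 42),
    (-60, -18, 18, 60),
    (-80, -24, 24, 80),
    (-106, -33, 33, 106),
    (-183, -47, 47, 183),
)


def expand_rgb444(color):
    """Expand 4-bit components to 8 bits by replicating the bits."""
    return tuple((c << 4) | c for c in color)


def expand_rgb555(color):
    """Expand 5-bit components to 8 bits by replicating the high bits."""
    return tuple((c << 3) | (c >> 2) for c in color)


def clamp(value):
    return max(0, min(255, value))


def subblock_colors(base_rgb888, table_index):
    """The 4 possible colors of a subblock: base color plus each modifier, clamped."""
    return [tuple(clamp(c + m) for c in base_rgb888)
            for m in MODIFIER_TABLE[table_index]]


if __name__ == "__main__":
    print(subblock_colors((250, 128, 4), 1))
```

Since the same modifier is added to all three components, the four colors lie on a line parallel to the gray axis, except where clamping bends it.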

For simplicity, information about the base colors, block orientations and modifier table indices can be displayed on the same image. The upper or the left part of each 2×4 or 4×2 subblock (depending on the block orientation) is filled with the base color, and the rest is filled with the modifier table index color. Then all the information necessary for decoding of the final texture can be represented in a form of the following 2 images (subblocks on the left image and blocks on the right image are displayed slightly separated from each other):

[Figure panels: base colors + block orientation + modifier table index, 32 bits/block; color selectors, 32 bits/block]

The detailed description of ETC1 format can be found at this Khronos Group page.

Using Crunch algorithm for compression of ETC1 textures

Even though DXT1 and ETC1 encodings seem to be quite different, they also have a lot in common. Each pixel of an ETC1 texture can take one of four possible color values, which means that ETC1 selector encoding is equivalent to DXT1 selector encoding, and therefore ETC1 selectors can be quantized in exactly the same way as DXT1 selectors. The main difference between the encodings is that in the case of ETC1, each half of a 4×4 block has its own set of possible color values. But even though ETC1 subblock colors are encoded using a base color and a modifier table index, the four computed subblock colors normally lie on the same line and are more or less evenly distributed along that line, which closely resembles DXT1 block colors. These similarities make it possible to use Crunch compression for ETC1 textures, with some modifications.

As has been described above, Crunch compression involves the following main steps:

- tiling

- endpoint quantization

- selector quantization

- compression of the determined codebooks and indices

When applying Crunch algorithms to a new texture format, it is necessary to first define the codebook element. In the context of Crunch, this means that the whole image consists of smaller non-overlapping blocks, while the contents of each individual block are determined by an endpoint and a selector from the corresponding codebooks. For example, in case of DXT format, each endpoint and selector codebook element corresponds to a 4×4 pixel block. In general, the size of the blocks, which form the encoded image, depends on the texture format and quality considerations.

It’s proposed to define codebook elements according to the following limitations:

- Codebook elements should be compatible with the existing Crunch algorithm, while the image blocks defined by those codebook elements should be compatible with the texture encoding format.

- It should be possible to cover a wide range of image quality and bitrates by changing the size of the endpoint and selector codebooks. If there is no limitation on the codebook size, it should be possible to achieve lossless or near-lossless compression quality (not considering the quality loss implied by the texture format itself).

Endpoint codebook

In case of ETC1, the texture format itself determines the minimal size of the image block, defined by an endpoint: it can be either 2×4 or 4×2 rectangle, aligned to the borders of the 4×4 grid. It isn’t possible to use higher granularity, because each of those rectangles can have only one base color, according to the ETC1 format. For the same reason, any image block, defined by an endpoint codebook element, should represent a combination of ETC1 subblocks.

At the same time, each ETC1 subblock has its own base color and modifier table index, which approximately determine the high and the low colors of the subblock (even though there are some limitations on the position of those high and low colors, implied by the ETC1 encoding). If an endpoint codebook element is defined in such a way that it contains information about more than one ETC1 base color, then such a dictionary will become incompatible with the existing tile quantization algorithm for the following reason. The Crunch tiling algorithm first performs quantization of all the tile pixel colors, down to just 2 colors. Then it performs quantization of all the color pairs generated by the different tiles. This approach works quite well for 4×4 DXT blocks, as those 2 colors approximately represent the principal component of the tile pixel colors. In the case of ETC1, however, mixing together pixels which correspond to different base colors doesn’t make much sense, because each group of those pixels has its own low and high color values, independent of the other groups. If those pixels are mixed together, the information about the original principal components of each subblock will be lost.

The described limitations suggest that ETC1 endpoint codebook element should represent the area of a single ETC1 subblock (either 2×4 or 4×2). This means that ETC1 endpoint codebook element should contain information about the subblock base color (RGB444 or RGB555) and the modifier table index (3 bits). And it is therefore proposed to encode an ETC1 “endpoint” as 3555 (3 bits for the modifier table index and 5 bits for each component of the base color).
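A 3555 endpoint fits into 18 bits. The sketch below packs and unpacks such a value; the bit layout (modifier table index in the top 3 bits, followed by 5-bit red, green and blue) and the function names are hypothetical, as the text does not specify how the fields are ordered.

```python
def pack_3555(table_index, r5, g5, b5):
    """Pack a modifier table index (3 bits) and an RGB555 base color into 18 bits.
    The field order is an assumption made for this example."""
    assert 0 <= table_index < 8 and all(0 <= c < 32 for c in (r5, g5, b5))
    return (table_index << 15) | (r5 << 10) | (g5 << 5) | b5


def unpack_3555(value):
    """Inverse of pack_3555: returns (table_index, r5, g5, b5)."""
    return (value >> 15) & 0x7, (value >> 10) & 0x1F, (value >> 5) & 0x1F, value & 0x1F


if __name__ == "__main__":
    packed = pack_3555(5, 31, 16, 2)
    print(packed.bit_length(), unpack_3555(packed))
```

Treating the modifier table index as one more endpoint component lets the existing endpoint codebook machinery handle an ETC1 subblock as a single element.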

Selector codebook

In the case of the DXT format, both endpoint codebook elements and selector codebook elements correspond to the same size of the decoded block (4×4 pixels). So it would be reasonable to try the same scheme for ETC1 encoding (i.e. to use 2×4 or 4×2 blocks for selector codebooks, matching the blocks which are defined by endpoint codebook elements). Nevertheless, after additional research we made a very interesting observation. Specifically, endpoint blocks and selector blocks don’t have to be of the same size in order to be compatible with the existing Crunch algorithm. Indeed, the selector codebook and selector indices are defined after the endpoint optimization is complete. At this point each image pixel is already associated with a specific endpoint. At the same time, the selector computation step uses those per-pixel endpoint associations as its only input, so the size and the shape of the blocks defined by selector codebook elements don’t depend in any way on the size or shape of the blocks defined by endpoint codebook elements.

In other words, the endpoint space of the texture can be split into one set of blocks, defined by the endpoint codebook and endpoint indices, while the selector space of the texture can be split into a completely different set of blocks, defined by the selector codebook and selector indices. Endpoint blocks can differ in size from the selector blocks, and can overlap the selector blocks in an arbitrary way, and such a setup will still be fully compatible with the existing Crunch algorithm. This property of the Crunch algorithm opens another dimension for optimization of the compression ratio. Specifically, the quality of the compressed selectors can now be adjusted in two ways: by changing the size of the selector codebook and by changing the size of the selector block. Note that both DXT and ETC formats have selectors encoded as plain bits in the output format, so there is no limitation on the size or shape of the selector block (though, for performance reasons, non-power-of-two selector blocks might require some specific optimizations in the decoder).

Several performance tests have been conducted using different selector block sizes, and the results suggest that 4×4 selector blocks perform quite well.

Tiling

As described above, each element of an ETC1 endpoint codebook should correspond to an ETC1 subblock (i.e. to a 2×4 or a 4×2 pixel block, depending on the block orientation). In case of DXT encoding, the size of the encoded block is 4×4 pixels, and tiling is performed in an 8×8 pixel area (covering 4 blocks). In case of ETC1, however, tiling can be performed either in a 4×4 pixel area (covering 2 subblocks), or in an 8×8 pixel area (covering 8 subblocks), while other possibilities are either not symmetrical or too complex. For performance reasons and simplicity, it is proposed to use a 4×4 pixel area for tiling. There are therefore 3 possible block types: the block isn’t split (the whole block is encoded using a single endpoint), the block is split horizontally, the block is split vertically:

The following example shows computed tiles for the texture endpoints:

Endpoint references

At first, it might look like ETC1 block flipping can bring some complications for Crunch, as the subblock structure doesn’t look like a grid. This, however, can be easily resolved by flipping all the “horizontal” ETC1 blocks across the main diagonal of the block after the tiling step, so that all the ETC1 subblocks will become 2×4 and form a regular grid:

flipped color endpoints

flipped color selectors

Note that decoded selectors should be flipped back according to the block orientation during decompression (this can be efficiently implemented by precomputing a codebook of flipped selectors).
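Flipping a block across its main diagonal amounts to transposing its 4×4 selector grid. The sketch below illustrates this and the precomputed codebook of flipped selectors mentioned in the note; it assumes selectors are stored row-major as a flat list of 16 values, and the function names are hypothetical rather than taken from the Crunch source.

```python
def flip_selectors(block):
    """Transpose a 4x4 selector block stored row-major as 16 values.

    Element (x, y) of the result is element (y, x) of the input, which is
    a flip across the main diagonal of the block.
    """
    if len(block) != 16:
        raise ValueError("expected 16 selector values")
    return [block[x * 4 + y] for y in range(4) for x in range(4)]


def build_flipped_codebook(selector_codebook):
    """Precompute the flipped version of every selector codebook entry."""
    return [flip_selectors(entry) for entry in selector_codebook]
```

Because transposition is its own inverse, the same function serves both for flipping after tiling and for flipping back in the decoder. With the precomputed table, the decoder would only have to pick between the original and the flipped entry according to the block orientation.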

Endpoint references for the ETC1 format are encoded in a similar way to the DXT1 format. There are, however, two modifications specific to the ETC1 encoding:

- In addition to the standard endpoint references (to the top and to the left blocks), it is also possible to use an endpoint reference to the top-left diagonal neighbour block.

- Endpoint references for the primary and secondary subblocks have different meaning.

The primary ETC1 subblock has the reference value of 0 if the endpoint is decoded from the input stream, the value of 1 if the endpoint is copied from the secondary subblock of the left neighbour ETC1 block, the value of 2 if the endpoint is copied from the primary subblock of the top neighbour ETC1 block, and the value of 3 if the endpoint is copied from the secondary subblock of the top-left neighbour ETC1 block:

The reference value of secondary ETC1 subblock contains information about the block tiling and flipping. It has the reference value of 0 if the endpoint is copied from the primary subblock (note that in this case flipping doesn’t need to be encoded, as endpoints are equal), the value of 1 if the endpoint is decoded from the input stream and the corresponding ETC1 block is split horizontally, and the value of 2 if the endpoint is decoded from the input stream and the corresponding ETC1 block is split vertically:
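The two reference schemes above can be sketched as a small resolver for one ETC1 block. The enum names, the `(primary, secondary)` tuple representation of neighbour blocks, the `next_endpoint` callback and the order in which endpoints are read from the stream are all assumptions made for illustration; only the numeric reference values come from the text.

```python
from enum import IntEnum


class PrimaryRef(IntEnum):
    FROM_STREAM = 0
    LEFT_SECONDARY = 1
    TOP_PRIMARY = 2
    TOP_LEFT_SECONDARY = 3


class SecondaryRef(IntEnum):
    COPY_PRIMARY = 0
    STREAM_SPLIT_HORIZONTAL = 1
    STREAM_SPLIT_VERTICAL = 2


def resolve_block(primary_ref, secondary_ref, left, top, top_left, next_endpoint):
    """Return (primary, secondary, split) for one ETC1 block.

    left, top and top_left are (primary, secondary) endpoint pairs of already
    decoded neighbour blocks; next_endpoint() reads one endpoint from the
    input stream (hypothetical interface).
    """
    primary_ref = PrimaryRef(primary_ref)
    secondary_ref = SecondaryRef(secondary_ref)

    if primary_ref == PrimaryRef.FROM_STREAM:
        primary = next_endpoint()
    elif primary_ref == PrimaryRef.LEFT_SECONDARY:
        primary = left[1]
    elif primary_ref == PrimaryRef.TOP_PRIMARY:
        primary = top[0]
    else:
        primary = top_left[1]

    if secondary_ref == SecondaryRef.COPY_PRIMARY:
        # Both subblocks share one endpoint, so no split needs to be encoded.
        return primary, primary, None

    horizontal = secondary_ref == SecondaryRef.STREAM_SPLIT_HORIZONTAL
    split = "horizontal" if horizontal else "vertical"
    return primary, next_endpoint(), split
```

Note that the secondary reference carries the tiling information for free: a value of 0 means the block is not split, while values 1 and 2 both signal a new endpoint and select the split direction.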

The following example shows ETC1 texture endpoints with tiles and references (considering that flipping has been already performed by the decoder):

Quantization

Considering that each endpoint codebook element corresponds to a single ETC1 base color, the original endpoint quantization algorithm works almost the same way for the ETC1 encoding as for the DXT1 encoding. An endpoint of an ETC1 tile can be represented with a (low_color.r, low_color.g, low_color.b, high_color.r, high_color.g, high_color.b) vector, where low_color and high_color are generated by the tile palettizer, exactly the same way as for the DXT1 encoding.

Note that the low_color and high_color computed for a tile implicitly contain information about the base color and the modifier table index of this tile. Indeed, the base color normally lies somewhere in the middle between low_color and high_color, while the modifier table index corresponds to the distance between low_color and high_color. Vectors which represent tiles with close values of low_color and high_color will most likely end up in the same cluster after vector quantization. But this also means that for tiles from the same cluster, the average values of low_color and high_color, and the distances between low_color and high_color, should also be close. In other words, the original endpoint quantization algorithm will generate tile clusters with close values of the base color and the modifier table index.
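To make the relation between (low_color, high_color) and (base color, modifier table index) concrete, here is a rough estimate, not the Crunch endpoint optimizer. It assumes 8-bit color components, takes the midpoint as the base color, and picks the ETC1 modifier table whose largest modifier is closest to half of the average per-channel distance. The table values are the largest entries of the standard ETC1 intensity modifier tables; the function name and the selection heuristic are assumptions.

```python
# Largest modifier of each of the 8 standard ETC1 intensity modifier tables.
ETC1_LARGE_MODIFIERS = (8, 17, 29, 42, 60, 80, 106, 183)


def estimate_etc1_endpoint(low_color, high_color):
    """Estimate (base_color, table_index) from 8-bit low/high RGB colors.

    Heuristic sketch: base color is the midpoint, and the table index is the
    one whose largest modifier best matches half the mean channel distance.
    """
    base_color = tuple((lo + hi) // 2 for lo, hi in zip(low_color, high_color))
    half_range = sum(abs(hi - lo) for lo, hi in zip(low_color, high_color)) / 6.0
    table_index = min(
        range(len(ETC1_LARGE_MODIFIERS)),
        key=lambda i: abs(ETC1_LARGE_MODIFIERS[i] - half_range),
    )
    return base_color, table_index
```

Two tiles with nearby low and high colors produce nearby midpoints and nearby ranges under this estimate, which is the property the quantization step relies on.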

Selectors of each 4×4 block can be represented with a vector of 16 components, corresponding to the selector values of each block pixel. This means that ETC1 selector quantization step is identical to the DXT1 selector quantization step.

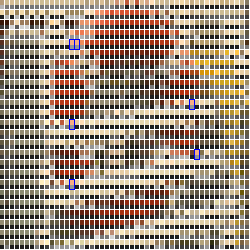

The result of the vector quantization performed for both ETC1 endpoints and selectors can be represented in the following way:

endpoint codebook:

selector codebook:

Note that according to the ETC1 format, the base colors within an ETC1 block can be encoded either individually as RGB444 and RGB444, or differentially as an RGB555 color and an RGB333 delta. For simplicity, this aspect is currently not taken into account (all the quantized endpoints are encoded as 3555 in the codebook). If it turns out that the base colors in the resulting ETC1 block cannot be encoded differentially, the decoder converts both base colors from RGB555 to RGB444 during decompression.
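The decoder-side decision can be sketched as follows. The check relies on the ETC1 differential mode storing each delta component as a 3-bit signed value in the range [-4, 3]. The RGB555 to RGB444 conversion shown here (expand to 8 bits by bit replication, then round to the nearest 4-bit level) is an assumption; the text does not say which rounding the Crunch decoder uses, and the function names are hypothetical.

```python
def can_encode_differentially(base0, base1):
    """True if base1 - base0 fits a 3-bit signed delta per RGB555 component."""
    return all(-4 <= b - a <= 3 for a, b in zip(base0, base1))


def rgb555_to_rgb444(color):
    """Convert an RGB555 color to RGB444 (assumed rounding, see lead-in)."""
    result = []
    for c5 in color:
        c8 = (c5 << 3) | (c5 >> 2)       # expand 5 bits to 8 bits
        result.append((c8 + 8) // 17)    # nearest 4-bit level (level * 17)
    return tuple(result)


def choose_block_mode(base0, base1):
    """Return the mode name and the base colors to store in the ETC1 block."""
    if can_encode_differentially(base0, base1):
        delta = tuple(b - a for a, b in zip(base0, base1))
        return "differential", base0, delta
    return "individual", rgb555_to_rgb444(base0), rgb555_to_rgb444(base1)
```

For example, base colors `(10, 10, 10)` and `(13, 6, 10)` fit the differential mode, while `(10, 10, 10)` and `(14, 10, 10)` do not, because a delta of 4 is outside the 3-bit signed range.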

Compression of ETC2 textures

The Crunch algorithm doesn’t yet support ETC2-specific modes (T, H or P), but it is capable of efficiently encoding the ETC2 Alpha channel. This means that the current ETC2 + Alpha compression format is equivalent to ETC1 + Alpha. Note that ETC2 encoding is a superset of ETC1, so any texture that consists of ETC1 color blocks and ETC2 Alpha blocks can be correctly decoded by an ETC2_RGBA8 decoder.

ETC2 encoding for the alpha channel is very similar to the ETC1 encoding of the color information. Information about the alpha channel of each block is stored using 64 bits: 8-bit base alpha, 4-bit modifier table index, 4-bit multiplier and 16 3-bit selector values (one selector value per pixel).

The modifier table index and selector value determine a modifier value for a pixel, which is selected from the ETC2 alpha modifier table. For performance reasons, ETC2 Crunch compressor is currently using only the following subset of the modifier table:

| modifier table index | modifier0 | modifier1 | modifier2 | modifier3 | modifier4 | modifier5 | modifier6 | modifier7 |

| 11 | -2 | -5 | -7 | -10 | 1 | 4 | 6 | 9 |

| 13 | -1 | -2 | -3 | -10 | 0 | 1 | 2 | 9 |

The final alpha value for each pixel is calculated as base_alpha + modifier * multiplier, which is then clamped.
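The alpha formula can be illustrated with a short sketch restricted to the two modifier tables listed above. The function name is hypothetical, and the clamp range of [0, 255] is assumed from the 8-bit base alpha.

```python
# Subset of the ETC2 alpha modifier table used by the Crunch compressor.
ALPHA_MODIFIERS = {
    11: (-2, -5, -7, -10, 1, 4, 6, 9),
    13: (-1, -2, -3, -10, 0, 1, 2, 9),
}


def decode_alpha(base_alpha, table_index, multiplier, selector):
    """Decode one pixel: base_alpha + modifier * multiplier, clamped to 0..255."""
    modifier = ALPHA_MODIFIERS[table_index][selector]
    return max(0, min(255, base_alpha + modifier * multiplier))


def decode_alpha_block(base_alpha, table_index, multiplier, selectors):
    """Decode all 16 pixels of a block from their 3-bit selector values."""
    return [decode_alpha(base_alpha, table_index, multiplier, s) for s in selectors]
```

With a base alpha of 128, table index 11 and multiplier 2, selector 7 selects the modifier 9 and decodes to 128 + 9 * 2 = 146.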

Note that unlike ETC1 color, ETC2 Alpha is encoded using a single base alpha value per 4×4 pixel block. This means that each element of the alpha endpoint dictionary should correspond to a 4×4 pixel block, covering both primary and secondary ETC1 subblocks. For this reason, alpha channel can be ignored when performing color endpoint tiling.

The compression scheme for ETC2 Alpha blocks is equivalent to the compression scheme for DXT5 Alpha blocks. As has been shown before, vector representation of alpha endpoints doesn’t depend on the used encoding. This means that all the initial processing steps, including alpha endpoint quantization, will be almost identical for DXT5 and ETC2 Alpha channels. The only part which is actually different for the ETC2 Alpha encoding is the final Alpha endpoint optimization step.

In order to perform ETC2 Alpha endpoint optimization, the already existing DXT5 Alpha endpoint optimization algorithm is run to obtain an initial approximate solution. Then the approximate solution is refined based on the ETC2 Alpha modifier table values. Note that the ETC2 format supports 16 different Alpha modifier indices, but for performance reasons only 2 of them are currently used: modifier index 13, which allows precise approximation on short Alpha intervals, and modifier index 11, which has more or less regularly distributed values and is used for large Alpha intervals.

At first it might seem that the different sizes of the color and alpha blocks would complicate things for Crunch, as according to the original algorithm both color and alpha endpoints should share the same endpoint references. This, however, is easily resolved in the following way: each alpha block uses the endpoint reference of the corresponding primary color subblock (so that the alpha endpoint can be copied from the left, top or top-left neighbour, or decoded from the input stream), while the endpoint reference of the secondary color subblock is simply ignored when decoding the alpha channel.

Closing summary

The performed research demonstrates that the Crunch compression algorithm is not limited to the DXT format and, with some modifications, can be used for other GPU texture formats. We see some research potential to expand this work to cover further texture formats in the future.

From:https://blogs.unity3d.com/cn/2017/12/15/crunch-compression-of-etc-textures/