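Stitching two images with OpenCV 3.1.0 + Ubuntu 14.04 + Qt Creator

Basic requirement for image stitching: the two images must share a large overlapping region. This post stitches the two images below in an Ubuntu 14.04 + OpenCV 3.1.0 + Qt Creator environment.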

References:

http://www.cnblogs.com/asmer-stone/p/5089764.html

http://www.cnblogs.com/skyfsm/p/7401523.html

https://www.cnblogs.com/skyfsm/p/7411961.html

http://www.cnblogs.com/feifanrensheng/p/9042813.html

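Stitching images with OpenCV 3.1.0 involves the following steps:

1. Check that OpenCV was built with the extension modules, because SIFT, SURF and similar algorithms live in the opencv_contrib extension modules;

2. Extract feature points;

3. Match feature points;

4. Register the images;

5. Compose the stitched image;

6. Blend the overlapping boundary.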

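1. Check that OpenCV was built with the extension modules

Algorithm modules that are not yet stable are kept in opencv_contrib. Because they are unstable they are not shipped in the release version, and they are moved into the release only after they have stabilized. Many commonly used algorithms live there, however, such as SIFT and SURF (in the xfeatures2d module). The official site explains how to build the opencv_contrib modules into an already-installed OpenCV 3, or how to compile them in directly when OpenCV is first installed.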
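One quick way to check whether the extension headers are visible to the compiler is a preprocessor probe. The sketch below is only an illustration under a few assumptions: the compiler supports `__has_include` (GCC 5 or later, Clang, or any C++17 compiler; the stock GCC 4.8 on Ubuntu 14.04 does not), and OpenCV was installed under a default include path such as /usr/local/include. The macro names `CAN_PROBE_HEADERS` and `HAVE_XFEATURES2D` and the functions `describeProbe` and `xfeatures2dStatus` are made up for this example. It detects the header only, not whether the library links.

```cpp
#include <cassert>
#include <string>

// Probe for the xfeatures2d umbrella header at compile time.
#if defined(__has_include)
#  define CAN_PROBE_HEADERS 1
#  if __has_include(<opencv2/xfeatures2d.hpp>)
#    define HAVE_XFEATURES2D 1
#  else
#    define HAVE_XFEATURES2D 0
#  endif
#else
#  define CAN_PROBE_HEADERS 0
#  define HAVE_XFEATURES2D 0
#endif

// Turn the two probe results into a human-readable message.
std::string describeProbe(bool canProbe, bool headerFound)
{
    // A header cannot be reported as found when probing is unsupported.
    assert(canProbe || !headerFound);
    if (!canProbe)
        return "unknown: this compiler has no __has_include";
    return headerFound ? "found: opencv2/xfeatures2d.hpp"
                       : "missing: opencv2/xfeatures2d.hpp";
}

// Status for the compiler and include paths this file was built with.
std::string xfeatures2dStatus()
{
    return describeProbe(CAN_PROBE_HEADERS != 0, HAVE_XFEATURES2D != 0);
}
```

Printing `xfeatures2dStatus()` from a small test program built with the same kit as the Qt Creator project shows whether that project will see the header.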

The extension modules are installed as follows:

(1) Install the dependencies

    sudo apt-get install build-essential

    sudo apt-get install cmake git libgtk2.0-dev pkg-config libavcodec-dev libavformat-dev libswscale-dev

    sudo apt-get install python-dev python-numpy libtbb2 libtbb-dev libjpeg-dev libpng-dev libtiff-dev libjasper-dev libdc1394-22-dev

(2) Download the source code from the official site

http://opencv.org/downloads.html

The opencv and opencv_contrib versions must match. After unpacking, place opencv_contrib inside the opencv source folder.

(3) Run cmake

Enter the opencv source folder, create a build directory, and run cmake from inside it.

    cd ~/opencv
    mkdir build   # build directory that holds everything cmake generates
    cd build      # enter the build directory
    cmake -D CMAKE_BUILD_TYPE=Release -D CMAKE_INSTALL_PREFIX=/usr/local -D OPENCV_EXTRA_MODULES_PATH=../opencv_contrib/modules/ -D WITH_OPENMP=ON ..

(4) Compile the source

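The official guide recommends a parallel build, with seven jobs:

    make -j7   # run seven jobs in parallel; this step is also run inside the build directory

In practice the parallel build may fail. If it does, fall back to a single-job build by running plain make.

Other errors during make:

ippicv_linux_20151201 cannot be downloaded. Fix: download ippicv_linux_20151201.tgz from the official site and place it under opencv-3.1.0/3rdparty/ippicv/downloads/linux-808b791a6eac9ed78d32a7666804320e/, creating any folders that do not exist.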

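(5) Install

    sudo make install

The code below uses the following headers, extension module and classes (OpenCV 3 differs from OpenCV 2 here):

    #include <iostream>
    #include <opencv2/core.hpp>
    #include <opencv2/imgproc.hpp>
    #include <opencv2/highgui.hpp>
    #include <opencv2/features2d.hpp>
    #include <opencv2/calib3d.hpp>
    #include <opencv2/xfeatures2d.hpp>

    using namespace cv;
    using namespace std;
    using namespace cv::xfeatures2d;

2. Feature extraction: the SURF algorithm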

SURF is used slightly differently in OpenCV 2 and OpenCV 3. The OpenCV 3 version is given below.

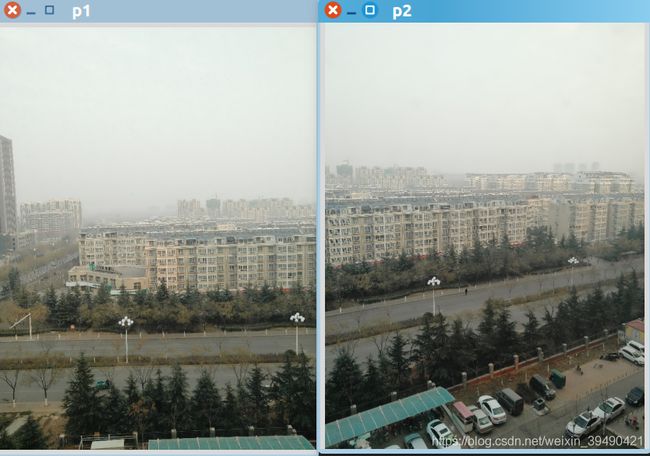

    Mat image01 = imread("2.png");   // use the absolute path of the image; imread returns an empty Mat if the path is wrong
    Mat image02 = imread("1.png");
    namedWindow("p2", WINDOW_NORMAL);
    namedWindow("p1", WINDOW_NORMAL);
    imshow("p2", image01);
    imshow("p1", image02);
    waitKey();

    // Convert to grayscale (imread loads colour images in BGR order)
    Mat image1, image2;
    cvtColor(image01, image1, COLOR_BGR2GRAY);
    cvtColor(image02, image2, COLOR_BGR2GRAY);

    // 1. Detect feature points and compute their descriptors with SURF
    Ptr<SURF> surfDetector = SURF::create(2000);   // Hessian threshold of 2000
    vector<KeyPoint> keyPoint1, keyPoint2;
    surfDetector->detect(image1, keyPoint1);
    surfDetector->detect(image2, keyPoint2);

    Ptr<SURF> surfDescriptor = SURF::create();
    Mat imageDesc1, imageDesc2;
    surfDescriptor->compute(image1, keyPoint1, imageDesc1);
    surfDescriptor->compute(image2, keyPoint2, imageDesc2);

3. Feature matching

Lowe's ratio test is used to keep only the good matches.

    // 2. Match feature points
    FlannBasedMatcher matcher;
    vector<vector<DMatch> > matchePoints;
    vector<DMatch> GoodMatchePoints;

    vector<Mat> train_desc(1, imageDesc1);
    matcher.add(train_desc);
    matcher.train();

    // For each descriptor of image 2, find its two nearest neighbours in image 1
    matcher.knnMatch(imageDesc2, matchePoints, 2);
    // cout << "total match points: " << matchePoints.size() << endl;

    // Lowe's ratio test: keep a match only if it is clearly better than the runner-up
    for (size_t i = 0; i < matchePoints.size(); i++)
    {
        if (matchePoints[i].size() >= 2 &&
            matchePoints[i][0].distance < 0.6 * matchePoints[i][1].distance)
        {
            GoodMatchePoints.push_back(matchePoints[i][0]);
        }
    }

    Mat first_match;
    drawMatches(image02, keyPoint2, image01, keyPoint1, GoodMatchePoints, first_match);
    imshow("Feature matches", first_match);
    waitKey();

[Figure: result of feature extraction and matching]
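To make the ratio test easier to see in isolation, here is a minimal sketch that does not depend on OpenCV. The `Match` struct is a hypothetical stand-in for `cv::DMatch`, and `ratioTest` is a name invented for this example; the 0.6 threshold used in the post is passed in as `ratio`.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical stand-in for cv::DMatch: only the fields the test needs.
struct Match
{
    int queryIdx;
    int trainIdx;
    float distance;
};

// Keep the best candidate of each query only when it is clearly closer
// than the second-best one (distance < ratio * second distance).
std::vector<Match> ratioTest(const std::vector<std::vector<Match> >& knn, float ratio)
{
    assert(ratio > 0.0f && ratio < 1.0f);
    std::vector<Match> good;
    for (std::size_t i = 0; i < knn.size(); ++i)
    {
        if (knn[i].size() < 2)
            continue;   // no runner-up to compare against, so skip
        if (knn[i][0].distance < ratio * knn[i][1].distance)
            good.push_back(knn[i][0]);
    }
    return good;
}
```

A lower `ratio` keeps fewer but more reliable matches. Queries that return fewer than two neighbours are skipped here rather than accepted, which is a design choice of this sketch.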

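4. Image registration

    // 3. Image registration
    vector<Point2f> imagePoints1, imagePoints2;
    for (size_t i = 0; i < GoodMatchePoints.size(); i++)
    {
        // queryIdx indexes image 2 (the query set), trainIdx indexes image 1 (the train set)
        imagePoints2.push_back(keyPoint2[GoodMatchePoints[i].queryIdx].pt);
        imagePoints1.push_back(keyPoint1[GoodMatchePoints[i].trainIdx].pt);
    }

    // 3x3 homography that maps image 1 into the coordinate frame of image 2
    Mat homo = findHomography(imagePoints1, imagePoints2, RANSAC);

    // Project the four corners of image 1 to find how wide the warped image must be
    CalcCorners(homo, image01);
    int warpedWidth = cvRound(MAX(corners.right_top.x, corners.right_bottom.x));

    Mat imageTransform1;
    warpPerspective(image01, imageTransform1, homo, Size(warpedWidth, image02.rows));

The projected corners are kept in a global structure that CalcCorners fills in:

    typedef struct
    {
        Point2f left_top;
        Point2f left_bottom;
        Point2f right_top;
        Point2f right_bottom;
    } four_corners_t;

    four_corners_t corners;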

    void CalcCorners(const Mat& H, const Mat& src)
    {
        double v2[] = { 0, 0, 1 };          // top-left corner
        double v1[3];                       // coordinates after the transform
        Mat V2 = Mat(3, 1, CV_64FC1, v2);   // column vector wrapping v2
        Mat V1 = Mat(3, 1, CV_64FC1, v1);   // column vector wrapping v1, so the product is written into v1
        V1 = H * V2;

        // top-left corner (0, 0, 1)
        cout << "V2: " << V2 << endl;
        cout << "V1: " << V1 << endl;
        corners.left_top.x = v1[0] / v1[2];
        corners.left_top.y = v1[1] / v1[2];

        // bottom-left corner (0, src.rows, 1)
        v2[0] = 0;
        v2[1] = src.rows;
        v2[2] = 1;
        V2 = Mat(3, 1, CV_64FC1, v2);   // column vector
        V1 = Mat(3, 1, CV_64FC1, v1);   // column vector
        V1 = H * V2;
        corners.left_bottom.x = v1[0] / v1[2];
        corners.left_bottom.y = v1[1] / v1[2];

        // top-right corner (src.cols, 0, 1)
        v2[0] = src.cols;
        v2[1] = 0;
        v2[2] = 1;
        V2 = Mat(3, 1, CV_64FC1, v2);   // column vector
        V1 = Mat(3, 1, CV_64FC1, v1);   // column vector
        V1 = H * V2;
        corners.right_top.x = v1[0] / v1[2];
        corners.right_top.y = v1[1] / v1[2];

        // bottom-right corner (src.cols, src.rows, 1)
        v2[0] = src.cols;
        v2[1] = src.rows;
        v2[2] = 1;
        V2 = Mat(3, 1, CV_64FC1, v2);   // column vector
        V1 = Mat(3, 1, CV_64FC1, v1);   // column vector
        V1 = H * V2;
        corners.right_bottom.x = v1[0] / v1[2];
        corners.right_bottom.y = v1[1] / v1[2];
    }

Without this correction, part of the transformed image is cut off, because the pixel coordinates change under the transformation.

[Figure: image registration result]
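The arithmetic inside CalcCorners is a homogeneous matrix-vector product followed by a division by the third component. The sketch below shows that step with plain arrays and no OpenCV. The names `Homography`, `Point2`, `projectPoint` and `warpedWidth` are invented for this illustration, and the width is rounded up with `ceil`, which is an assumption of the sketch rather than what the code above does.

```cpp
#include <algorithm>
#include <array>
#include <cassert>
#include <cmath>

typedef std::array<std::array<double, 3>, 3> Homography;   // row-major 3x3 matrix

struct Point2
{
    double x;
    double y;
};

// Map (x, y) through H: multiply by (x, y, 1), then divide by the third component.
Point2 projectPoint(const Homography& H, double x, double y)
{
    const double u = H[0][0] * x + H[0][1] * y + H[0][2];
    const double v = H[1][0] * x + H[1][1] * y + H[1][2];
    const double w = H[2][0] * x + H[2][1] * y + H[2][2];
    assert(std::fabs(w) > 1e-12);   // the point must not map to infinity
    Point2 p = { u / w, v / w };
    return p;
}

// Width needed so that both right-hand corners of a cols x rows image
// still fit after warping.
int warpedWidth(const Homography& H, int cols, int rows)
{
    const Point2 rightTop = projectPoint(H, cols, 0);
    const Point2 rightBottom = projectPoint(H, cols, rows);
    return static_cast<int>(std::ceil(std::max(rightTop.x, rightBottom.x)));
}
```

For a pure translation of 100 pixels to the right, a 50-pixel-wide image needs a 150-pixel-wide canvas, which is why the output size has to come from the projected corners and not from the source image.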

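5. Compose the stitched image

    // 4. Create the stitched image
    int dst_width = imageTransform1.cols;   // the rightmost point of the warped image sets the width
    int dst_height = image02.rows;

    Mat dst(dst_height, dst_width, CV_8UC3);
    dst.setTo(0);

    // Paste the warped image first, then the reference image on top of it
    imageTransform1.copyTo(dst(Rect(0, 0, imageTransform1.cols, imageTransform1.rows)));
    image02.copyTo(dst(Rect(0, 0, image02.cols, image02.rows)));

    imshow("Stitched image", dst);
    waitKey();

[Figure: stitched image]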
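The order of the two copies matters: whatever is pasted last wins in the overlap. The toy sketch below uses a hypothetical single-channel `Image` type and invented helper names (`makeImage`, `pasteAtOrigin`, `compose`) to show that behaviour without OpenCV. It assumes, as in the code above, that both images are anchored at the top-left corner of the canvas.

```cpp
#include <cassert>
#include <vector>

// Toy image: one int per pixel, stored row by row. 0 means "empty".
struct Image
{
    int rows;
    int cols;
    std::vector<int> px;
};

Image makeImage(int rows, int cols, int value)
{
    Image img = { rows, cols, std::vector<int>(rows * cols, value) };
    return img;
}

// Copy src into the top-left corner of canvas, overwriting what is there.
void pasteAtOrigin(const Image& src, Image& canvas)
{
    assert(src.rows <= canvas.rows && src.cols <= canvas.cols);
    for (int r = 0; r < src.rows; ++r)
        for (int c = 0; c < src.cols; ++c)
            canvas.px[r * canvas.cols + c] = src.px[r * src.cols + c];
}

// Canvas is as wide as the warped image and as tall as the reference image.
Image compose(const Image& reference, const Image& warped)
{
    Image canvas = makeImage(reference.rows, warped.cols, 0);
    pasteAtOrigin(warped, canvas);      // pasted first
    pasteAtOrigin(reference, canvas);   // pasted last, so it wins in the overlap
    return canvas;
}
```

Because the reference image overwrites the overlap outright, a visible seam is left at its right edge, which is what the next step smooths out.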

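6. Blend the overlapping boundary

    // 5. Blend the seam between the two images so that the transition looks natural
    OptimizeSeam(image02, imageTransform1, dst);
    imshow("Seam blended", dst);
    waitKey();

The OptimizeSeam function:

    void OptimizeSeam(Mat& img1, Mat& trans, Mat& dst)
    {
        // Start position, i.e. the left boundary of the overlap region (clamped so it is never negative)
        int start = MAX(0, (int)MIN(corners.left_top.x, corners.left_bottom.x));
        double processWidth = img1.cols - start;   // width of the overlap region
        int rows = dst.rows;
        int cols = img1.cols;   // number of pixel columns; the channel offset is applied as j * 3 below
        double alpha = 1;       // weight of the img1 pixel

        for (int i = 0; i < rows; i++)
        {
            uchar* p = img1.ptr<uchar>(i);   // address of the first element of row i
            uchar* t = trans.ptr<uchar>(i);
            uchar* d = dst.ptr<uchar>(i);
            for (int j = start; j < cols; j++)
            {
                // Where trans has no pixel (pure black), copy the img1 data unchanged
                if (t[j * 3] == 0 && t[j * 3 + 1] == 0 && t[j * 3 + 2] == 0)
                {
                    alpha = 1;
                }
                else
                {
                    // The weight of img1 falls off linearly with the distance from the
                    // left boundary of the overlap region; in practice this works well
                    alpha = (processWidth - (j - start)) / processWidth;
                }

                d[j * 3] = p[j * 3] * alpha + t[j * 3] * (1 - alpha);
                d[j * 3 + 1] = p[j * 3 + 1] * alpha + t[j * 3 + 1] * (1 - alpha);
                d[j * 3 + 2] = p[j * 3 + 2] * alpha + t[j * 3 + 2] * (1 - alpha);
            }
        }
    }

[Figure: final stitched result]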
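The weighting in OptimizeSeam is a linear cross-fade across the overlap. As a rough sketch, the two pieces of arithmetic can be pulled out into small functions. `leftImageWeight` and `blendChannel` are names invented here, and the result is rounded to the nearest integer, whereas the code above truncates when it stores into a `uchar`.

```cpp
#include <cassert>
#include <cmath>

// Weight of the left (reference) image at column j of the overlap
// [start, overlapEnd]: 1 at the left boundary, 0 at the right boundary.
double leftImageWeight(int j, int start, int overlapEnd)
{
    assert(start < overlapEnd && j >= start && j <= overlapEnd);
    const double width = overlapEnd - start;
    return (width - (j - start)) / width;
}

// Blend one colour channel of the left and warped pixels with weight alpha.
unsigned char blendChannel(unsigned char left, unsigned char warped, double alpha)
{
    assert(alpha >= 0.0 && alpha <= 1.0);
    const double mixed = left * alpha + warped * (1.0 - alpha);
    return static_cast<unsigned char>(std::lround(mixed));
}
```

In the middle of the overlap the weight is 0.5, so both images contribute equally and the hard edge left by the plain copy in step 5 disappears.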