python爬取CSDN博客内容为html到本地

系统环境: ubuntu16.04

python环境:3.6

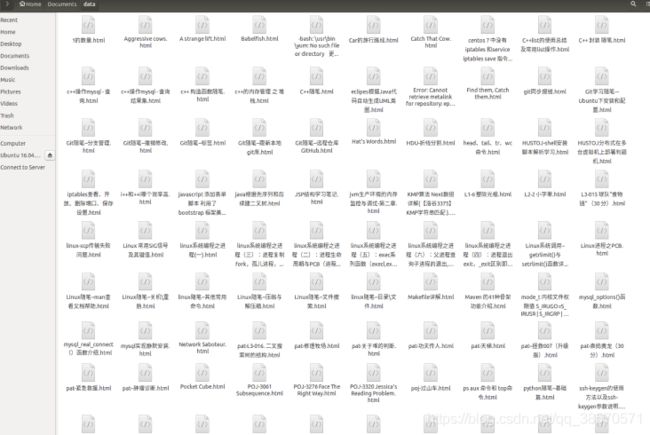

默认保存在用户目录下的 Document下

import re

import urllib.request

from bs4 import BeautifulSoup

def trim(s):

if s.startswith(' ') or s.endswith(' '):

return re.sub(r"^(\s+)|(\s+)$", "", s)

return s

def save(tdir,article):

fh = open(tdir, 'w', encoding='utf-8')

fh.write(article)

fh.close()

def dealstr(str):

return str.replace('/','\\')

def get_article(url):

tdir="/home/kjctar/Documents/data/" #保存的指定目录,其中data目录必须存在

response = urllib.request.urlopen(url)

print (response.getcode())#获取状态码,200表示成功

html_doc = response.read()

soup = BeautifulSoup(html_doc,"html.parser",from_encoding="utf-8")

article=""

#添加csdn的富文本样式

article+=''

#添加markdown的css样式

article+=''

#添加代码高亮cdn

article+=''

article+=''

article+=''

#添加bootstrap的css样式

article+=''

article+=''

article+=''

article+=''

#获取文章标题

title = soup.find('h1',class_='title-article').get_text()

tdir+=dealstr(title)+".html"

article+=""

+title+""

#获取文章发布时间

uptime = soup.find('div',class_='up-time').get_text()

article+=""

+uptime+""

#获取文章标签

tags = soup.find_all('a',class_='tag-link')

for tag in tags:

article+=trim(tag.get_text())+','

#获取文章内容

content = soup.find('div',id='content_views')

article+=content.prettify()

save(tdir,article)

article+=''

def get_article_list(url):

status=True

page=1

while status:

turl=url+"/article/list/"+str(page)

print("-----------------------------爬取第",page,"页--------------------------")

response = urllib.request.urlopen(turl)

print (response.getcode())#获取状态码,200表示成功

html_doc = response.read()

soup = BeautifulSoup(html_doc,"html.parser",from_encoding="utf-8")

soup = soup.find('div',class_='article-list')

if soup:

lists=soup.find_all('h4')

for tag in lists:

tag.a.span.clear()

print(trim(tag.a.get_text())," : ",tag.a['href'])

get_article(tag.a['href'])

print("下载完成!")

page+=1

else:

status=False

get_article_list("https://blog.csdn.net/qq_38570571")#要爬取的博客主页