Python - Deep Learning Series 5 - Wrapping Neural Network Models as a Docker Service

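1 Overview

Training a deep learning model takes a lot of time, but for inference alone a CPU server should be enough.

Goal: given trained models and their config files, package them as a service.

1. Object detection with a YOLO network.

2. Similarity matching with a Siamese network.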

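2 Breaking down the service (yolov3)

The yolov3 source code is available on GitHub. It contains a lot of code, so the key step is to extract only the parts needed for serving. Since the YOLO part is the bulk of the work, the project is still called yolov3.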

└── yolov3
    ├── app.py
    ├── config
    │   ├── siamese
    │   │   └── model
    │   │       └── siamese_v4.pth
    │   └── yolo
    │       ├── cfg
    │       │   └── yolov3.cfg
    │       └── model
    │           └── best.pt
    ├── output
    ├── predict.py
    ├── siamese.py
    └── yolo.py

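- app.py: the Flask service lives here. It receives a base64-encoded image and returns the prediction. (The models are loaded inside the service.)

- predict.py: ties the pipeline together; the main function that goes from receiving an image to producing a result.

- yolo.py: the model definition and config-loading functions for the YOLO part.

- siamese.py: the model definition and loading functions for the Siamese network.

- config: holds the configuration and weights of the two models.

- output: results can optionally be saved here as images and text files.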
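The article does not show the request format that app.py expects, so the following is only a minimal sketch of the base64 round trip described above. The JSON layout and the "image" key are assumptions made for illustration, as are the function names.

```python
import base64
import json


def encode_image_bytes(raw: bytes) -> str:
    """Client side: turn raw image bytes into a base64 string that is safe to put in JSON."""
    return base64.b64encode(raw).decode("ascii")


def build_request_body(raw: bytes) -> str:
    """Client side: wrap the encoded image in a JSON body (the "image" key is hypothetical)."""
    return json.dumps({"image": encode_image_bytes(raw)})


def decode_request_body(body: str) -> bytes:
    """Service side: recover the original image bytes from the JSON body."""
    payload = json.loads(body)
    return base64.b64decode(payload["image"])


if __name__ == "__main__":
    # Stand-in for the contents of a real image file
    fake_image = b"\x89PNG\r\n\x1a\n" + bytes(range(16))
    body = build_request_body(fake_image)
    assert decode_request_body(body) == fake_image
    print(len(body), "characters in the request body")
```

On the service side, the decoded bytes could then be wrapped in BytesIO and opened with PIL, which matches the imports used later in yolo.py.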

Copying the config and model files is straightforward, so it is skipped here. We start with siamese.py.

2.1 siamese.py

import torch
import torch.nn as nn
from PIL import Image
import torchvision.transforms as transforms


# Siamese network architecture
class SiameseNetwork(nn.Module):
    def __init__(self):
        super().__init__()
        self.cnn1 = nn.Sequential(
            nn.ReflectionPad2d(1),
            nn.Conv2d(1, 4, kernel_size=3),
            nn.ReLU(inplace=True),
            nn.BatchNorm2d(4),
            nn.ReflectionPad2d(1),
            nn.Conv2d(4, 8, kernel_size=3),
            nn.ReLU(inplace=True),
            nn.BatchNorm2d(8),
            nn.ReflectionPad2d(1),
            nn.Conv2d(8, 8, kernel_size=3),
            nn.ReLU(inplace=True),
            nn.BatchNorm2d(8),
        )
        self.fc1 = nn.Sequential(
            nn.Linear(8 * 100 * 100, 500),
            nn.ReLU(inplace=True),
            nn.Linear(500, 500),
            nn.ReLU(inplace=True),
            nn.Linear(500, 5))

    def forward_once(self, x):
        output = self.cnn1(x)
        output = output.view(output.size()[0], -1)
        output = self.fc1(output)
        return output

    def forward(self, input1, input2):
        output1 = self.forward_once(input1)
        output2 = self.forward_once(input2)
        return output1, output2


# Load the model once; the predict method can then be called at any time
class Siamese_predict():
    def __init__(self, model_path):
        self.net = SiameseNetwork()
        self.net.load_state_dict(torch.load(model_path, map_location=torch.device('cpu')))
        self.net.eval()
        self.transform = transforms.Compose(
            [transforms.Resize((100, 100)),  # pitfall: passing an int behaves differently from a tuple
             transforms.ToTensor()])

    def siamese_predict(self, image1_path, image2_path):
        img1 = Image.open(image1_path)
        img1 = img1.convert("L")
        img1 = self.transform(img1).unsqueeze(0)
        img2 = Image.open(image2_path)
        img2 = img2.convert("L")
        img2 = self.transform(img2).unsqueeze(0)
        output1, output2 = self.net(img1, img2)
        euclidean_distance = torch.nn.functional.pairwise_distance(
            output1, output2)
        return euclidean_distance.item()


if __name__ == '__main__':
    model_file = './config/siamese/model/siamese_v4.pth'
    siamese = Siamese_predict(model_file)
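The "pitfall" comment on Resize deserves a closer look. Below is a small pure-Python sketch of the output size that torchvision's Resize produces for an int versus a tuple; it uses no torch at all, and the function names are made up for illustration. It shows why the first Linear layer, which expects 8 * 100 * 100 inputs, only fits when the tuple form is used on non-square images.

```python
def resize_output_size(height, width, size):
    """Return the (height, width) that Resize(size) would produce.

    Tuple (h, w): the output is exactly (h, w).
    Int: the shorter edge becomes `size` and the aspect ratio is kept.
    """
    if isinstance(size, (tuple, list)):
        return tuple(size)
    if height <= width:
        return size, int(size * width / height)
    return int(size * height / width), size


def flattened_features(height, width, channels=8):
    """Number of inputs the first Linear layer receives after the conv stack.

    The conv stack above keeps the spatial size (pad 1, kernel 3) and ends with 8 channels.
    """
    return channels * height * width


if __name__ == "__main__":
    for size in (100, (100, 100)):
        h, w = resize_output_size(200, 400, size)
        print(size, "->", (h, w), "features:", flattened_features(h, w))
```

With a 200 x 400 input, the int form gives a 100 x 200 image and 160000 features, which does not match the 80000 expected by nn.Linear(8 * 100 * 100, 500); the tuple form gives exactly 100 x 100.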

2.2 yolo.py

There is a lot more here, so take it step by step. First, import the required packages.

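from PIL import Image
from io import BytesIO
import base64
import cv2
import numpy as np
import matplotlib.pyplot as plt
import os
import time  # used by non_max_suppression
import math  # used by create_modules
import torchvision  # torchvision.ops.boxes.nms is used by non_max_suppression
import torchvision.transforms as transforms
import torch.nn as nn
import torch.nn.functional as F  # used by YOLOLayer
import torch

ONNX_EXPORT = False
device = torch.device("cuda" if torch.cuda.is_available() else "cpu")
img_size = 192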

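Next, trace the dependencies backwards from the end. Assuming the model can already be loaded, the loading code below depends on the Darknet class; set config_path and model_path to wherever the files actually live.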

# Load the model
with torch.no_grad():
    model = Darknet(config_path, img_size=img_size).to(device)
    model.load_state_dict(torch.load(model_path, map_location=device)['model'])
    model.to(device).eval()

Copy in the Darknet class and try running it. Each run reports a missing function, so copy them over one at a time.

class Darknet(nn.Module):
    # YOLOv3 object detection model

    def __init__(self, cfg, img_size=(416, 416), verbose=False):
        super(Darknet, self).__init__()

        self.module_defs = parse_model_cfg(cfg)
        self.module_list, self.routs = create_modules(
            self.module_defs, img_size, cfg)
        self.yolo_layers = get_yolo_layers(self)
        # torch_utils.initialize_weights(self)

        # Darknet Header https://github.com/AlexeyAB/darknet/issues/2914#issuecomment-496675346
        # (int32) version info: major, minor, revision
        self.version = np.array([0, 2, 5], dtype=np.int32)
        # (int64) number of images seen during training
        self.seen = np.array([0], dtype=np.int64)
        # print model description
        self.info(verbose) if not ONNX_EXPORT else None

    def forward(self, x, augment=False, verbose=False):
        if not augment:
            return self.forward_once(x)
        else:  # Augment images (inference and test only) https://github.com/ultralytics/yolov3/issues/931
            img_size = x.shape[-2:]  # height, width
            s = [0.83, 0.67]  # scales
            y = []
            for i, xi in enumerate((x,
                                    # flip-lr and scale
                                    scale_img(x.flip(3), s[0], same_shape=False),
                                    scale_img(x, s[1], same_shape=False),  # scale
                                    )):
                # cv2.imwrite('img%g.jpg' % i, 255 * xi[0].numpy().transpose((1, 2, 0))[:, :, ::-1])
                y.append(self.forward_once(xi)[0])

            y[1][..., :4] /= s[0]  # scale
            y[1][..., 0] = img_size[1] - y[1][..., 0]  # flip lr
            y[2][..., :4] /= s[1]  # scale

            # for i, yi in enumerate(y):  # coco small, medium, large = < 32**2 < 96**2 <
            #     area = yi[..., 2:4].prod(2)[:, :, None]
            #     if i == 1:
            #         yi *= (area < 96. ** 2).float()
            #     elif i == 2:
            #         yi *= (area > 32. ** 2).float()
            #     y[i] = yi

            y = torch.cat(y, 1)
            return y, None

    def forward_once(self, x, augment=False, verbose=False):
        img_size = x.shape[-2:]  # height, width
        yolo_out, out = [], []
        if verbose:
            print('0', x.shape)
            str = ''

        # Augment images (inference and test only)
        if augment:  # https://github.com/ultralytics/yolov3/issues/931
            nb = x.shape[0]  # batch size
            s = [0.83, 0.67]  # scales
            x = torch.cat((x,
                           # flip-lr and scale
                           scale_img(x.flip(3), s[0]),
                           scale_img(x, s[1]),  # scale
                           ), 0)

        for i, module in enumerate(self.module_list):
            name = module.__class__.__name__
            if name in ['WeightedFeatureFusion', 'FeatureConcat']:  # sum, concat
                if verbose:
                    l = [i - 1] + module.layers  # layers
                    sh = [list(x.shape)] + [list(out[i].shape)
                                            for i in module.layers]  # shapes
                    str = ' >> ' + \
                        ' + '.join(['layer %g %s' % x for x in zip(l, sh)])
                x = module(x, out)  # WeightedFeatureFusion(), FeatureConcat()
            elif name == 'YOLOLayer':
                yolo_out.append(module(x, out))
            else:  # run module directly, i.e. mtype = 'convolutional', 'upsample', 'maxpool', 'batchnorm2d' etc.
                x = module(x)

            out.append(x if self.routs[i] else [])
            if verbose:
                print('%g/%g %s -' %
                      (i, len(self.module_list), name), list(x.shape), str)
                str = ''

        if self.training:  # train
            return yolo_out
        elif ONNX_EXPORT:  # export
            x = [torch.cat(x, 0) for x in zip(*yolo_out)]
            return x[0], torch.cat(x[1:3], 1)  # scores, boxes: 3780x80, 3780x4
        else:  # inference or test
            x, p = zip(*yolo_out)  # inference output, training output
            x = torch.cat(x, 1)  # cat yolo outputs
            if augment:  # de-augment results
                x = torch.split(x, nb, dim=0)
                x[1][..., :4] /= s[0]  # scale
                x[1][..., 0] = img_size[1] - x[1][..., 0]  # flip lr
                x[2][..., :4] /= s[1]  # scale
                x = torch.cat(x, 1)
            return x, p

    def fuse(self):
        # Fuse Conv2d + BatchNorm2d layers throughout model
        print('Fusing layers...')
        fused_list = nn.ModuleList()
        for a in list(self.children())[0]:
            if isinstance(a, nn.Sequential):
                for i, b in enumerate(a):
                    if isinstance(b, nn.modules.batchnorm.BatchNorm2d):
                        # fuse this bn layer with the previous conv2d layer
                        conv = a[i - 1]
                        fused = fuse_conv_and_bn(conv, b)
                        a = nn.Sequential(fused, *list(a.children())[i + 1:])
                        break
            fused_list.append(a)
        self.module_list = fused_list
        # yolov3-spp reduced from 225 to 152 layers
        self.info() if not ONNX_EXPORT else None

    def info(self, verbose=False):
        model_info(self, verbose)

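The first two functions are pulled from the utils package of yolov3. Without going into detail: non_max_suppression filters the predicted boxes by confidence and IoU thresholds, and scale_coords maps box coordinates from the resized (padded) image back to the original image. They in turn call xywh2xyxy, box_iou and clip_coords, which have to be copied over in the same way.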

# First batch of dependencies: non_max_suppression, scale_coords
def non_max_suppression(prediction, conf_thres=0.1, iou_thres=0.6, multi_label=True, classes=None, agnostic=False):
    """
    Performs Non-Maximum Suppression on inference results
    Returns detections with shape:
        nx6 (x1, y1, x2, y2, conf, cls)
    """
    # Settings
    merge = True  # merge for best mAP
    # (pixels) minimum and maximum box width and height
    min_wh, max_wh = 2, 4096
    time_limit = 10.0  # seconds to quit after

    t = time.time()
    nc = prediction[0].shape[1] - 5  # number of classes
    multi_label &= nc > 1  # multiple labels per box
    output = [None] * prediction.shape[0]
    for xi, x in enumerate(prediction):  # image index, image inference
        # Apply constraints
        x = x[x[:, 4] > conf_thres]  # confidence
        x = x[((x[:, 2:4] > min_wh) & (x[:, 2:4] < max_wh)).all(1)]  # width-height

        # If none remain process next image
        if not x.shape[0]:
            continue

        # Compute conf
        x[..., 5:] *= x[..., 4:5]  # conf = obj_conf * cls_conf

        # Box (center x, center y, width, height) to (x1, y1, x2, y2)
        box = xywh2xyxy(x[:, :4])

        # Detections matrix nx6 (xyxy, conf, cls)
        if multi_label:
            i, j = (x[:, 5:] > conf_thres).nonzero().t()
            x = torch.cat((box[i], x[i, j + 5].unsqueeze(1),
                           j.float().unsqueeze(1)), 1)
        else:  # best class only
            conf, j = x[:, 5:].max(1)
            x = torch.cat((box, conf.unsqueeze(1), j.float().unsqueeze(1)), 1)[
                conf > conf_thres]

        # Filter by class
        if classes:
            x = x[(j.view(-1, 1) == torch.tensor(classes, device=j.device)).any(1)]

        # Apply finite constraint
        # if not torch.isfinite(x).all():
        #     x = x[torch.isfinite(x).all(1)]

        # If none remain process next image
        n = x.shape[0]  # number of boxes
        if not n:
            continue

        # Sort by confidence
        # x = x[x[:, 4].argsort(descending=True)]

        # Batched NMS
        c = x[:, 5] * 0 if agnostic else x[:, 5]  # classes
        boxes, scores = x[:, :4].clone() + c.view(-1, 1) * \
            max_wh, x[:, 4]  # boxes (offset by class), scores
        i = torchvision.ops.boxes.nms(boxes, scores, iou_thres)
        if merge and (1 < n < 3E3):  # Merge NMS (boxes merged using weighted mean)
            try:  # update boxes as boxes(i,4) = weights(i,n) * boxes(n,4)
                iou = box_iou(boxes[i], boxes) > iou_thres  # iou matrix
                weights = iou * scores[None]  # box weights
                x[i, :4] = torch.mm(weights, x[:, :4]).float(
                ) / weights.sum(1, keepdim=True)  # merged boxes
                # i = i[iou.sum(1) > 1]  # require redundancy
            except:  # possible CUDA error https://github.com/ultralytics/yolov3/issues/1139
                print(x, i, x.shape, i.shape)
                pass

        output[xi] = x[i]
        if (time.time() - t) > time_limit:
            break  # time limit exceeded

    return output


def scale_coords(img1_shape, coords, img0_shape, ratio_pad=None):
    # Rescale coords (xyxy) from img1_shape to img0_shape
    if ratio_pad is None:  # calculate from img0_shape
        gain = max(img1_shape) / max(img0_shape)  # gain = old / new
        pad = (img1_shape[1] - img0_shape[1] * gain) / \
            2, (img1_shape[0] - img0_shape[0] * gain) / 2  # wh padding
    else:
        gain = ratio_pad[0][0]
        pad = ratio_pad[1]

    coords[:, [0, 2]] -= pad[0]  # x padding
    coords[:, [1, 3]] -= pad[1]  # y padding
    coords[:, :4] /= gain
    clip_coords(coords, img0_shape)
    return coords
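To make the idea behind non_max_suppression easier to follow, here is a minimal pure-Python sketch of the two core steps: converting a box from center format to corner format, and greedy suppression by IoU. It is a simplification for illustration, not the code above: it ignores classes, multi-label output and box merging, and the function names are invented.

```python
def xywh_to_xyxy(box):
    """Convert (center x, center y, width, height) to (x1, y1, x2, y2)."""
    cx, cy, w, h = box
    return (cx - w / 2, cy - h / 2, cx + w / 2, cy + h / 2)


def iou(a, b):
    """Intersection over union of two (x1, y1, x2, y2) boxes."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def greedy_nms(boxes, scores, iou_thres=0.6):
    """Return indices of kept boxes, highest score first.

    A box is dropped when its IoU with an already kept box exceeds iou_thres.
    """
    order = sorted(range(len(boxes)), key=lambda i: scores[i], reverse=True)
    keep = []
    for i in order:
        if all(iou(boxes[i], boxes[k]) <= iou_thres for k in keep):
            keep.append(i)
    return keep


if __name__ == "__main__":
    boxes = [(0, 0, 10, 10), (1, 1, 11, 11), (50, 50, 60, 60)]
    scores = [0.9, 0.8, 0.7]
    print(greedy_nms(boxes, scores))  # the second box overlaps the first heavily
```

The real function does the suppression with torchvision.ops.boxes.nms and, by offsetting boxes by class index times max_wh, handles all classes in one batched call.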

Run it again. The second error is for parse_model_cfg; as the name suggests, it reads the model configuration.

def parse_model_cfg(path):
    # Parse the yolo *.cfg file and return module definitions path may be 'cfg/yolov3.cfg', 'yolov3.cfg', or 'yolov3'
    if not path.endswith('.cfg'):  # add .cfg suffix if omitted
        path += '.cfg'
    # add cfg/ prefix if omitted
    if not os.path.exists(path) and os.path.exists('cfg' + os.sep + path):
        path = 'cfg' + os.sep + path

    with open(path, 'r') as f:
        lines = f.read().split('\n')
    lines = [x for x in lines if x and not x.startswith('#')]
    lines = [x.rstrip().lstrip()
             for x in lines]  # get rid of fringe whitespaces
    mdefs = []  # module definitions
    for line in lines:
        if line.startswith('['):  # This marks the start of a new block
            mdefs.append({})
            mdefs[-1]['type'] = line[1:-1].rstrip()
            if mdefs[-1]['type'] == 'convolutional':
                # pre-populate with zeros (may be overwritten later)
                mdefs[-1]['batch_normalize'] = 0
        else:
            key, val = line.split("=")
            key = key.rstrip()

            if key == 'anchors':  # return nparray
                # np anchors
                mdefs[-1][key] = np.array([float(x)
                                           for x in val.split(',')]).reshape((-1, 2))
            elif (key in ['from', 'layers', 'mask']) or (key == 'size' and ',' in val):  # return array
                mdefs[-1][key] = [int(x) for x in val.split(',')]
            else:
                val = val.strip()
                # TODO: .isnumeric() actually fails to get the float case
                if val.isnumeric():  # return int or float
                    mdefs[-1][key] = int(val) if (int(val) -
                                                  float(val)) == 0 else float(val)
                else:
                    mdefs[-1][key] = val  # return string

    # Check all fields are supported
    supported = ['type', 'batch_normalize', 'filters', 'size', 'stride', 'pad', 'activation', 'layers', 'groups',
                 'from', 'mask', 'anchors', 'classes', 'num', 'jitter', 'ignore_thresh', 'truth_thresh', 'random',
                 'stride_x', 'stride_y', 'weights_type', 'weights_normalization', 'scale_x_y', 'beta_nms', 'nms_kind',
                 'iou_loss', 'iou_normalizer', 'cls_normalizer', 'iou_thresh', 'probability']

    f = []  # fields
    for x in mdefs[1:]:
        [f.append(k) for k in x if k not in f]
    u = [x for x in f if x not in supported]  # unsupported fields
    assert not any(
        u), "Unsupported fields %s in %s. See https://github.com/ultralytics/yolov3/issues/631" % (u, path)

    return mdefs
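To see what the parser returns, here is a stripped-down sketch that works on an inline string instead of a file. It is a simplification of parse_model_cfg (no anchors handling, no supported-fields check), and the sample cfg text and the function name are made up for illustration.

```python
def parse_cfg_text(text):
    """Parse darknet-style cfg text into a list of dicts, one per [section]."""
    blocks = []
    for raw in text.split("\n"):
        line = raw.strip()
        if not line or line.startswith("#"):
            continue  # skip blank lines and comments
        if line.startswith("["):
            # A new [section] starts a new block
            blocks.append({"type": line[1:-1].strip()})
        else:
            key, val = line.split("=", 1)
            key, val = key.strip(), val.strip()
            if key in ("from", "layers", "mask"):
                blocks[-1][key] = [int(v) for v in val.split(",")]  # list of ints
            elif val.isnumeric():
                blocks[-1][key] = int(val)
            else:
                blocks[-1][key] = val  # keep as string
    return blocks


EXAMPLE_CFG = """
[net]
width=416
height=416

[convolutional]
batch_normalize=1
filters=32
size=3
stride=1
pad=1
activation=leaky

[shortcut]
from=-3
activation=linear
"""

if __name__ == "__main__":
    for block in parse_cfg_text(EXAMPLE_CFG):
        print(block)
```

The first block is the [net] section holding the training hyperparameters, which is why create_modules starts by popping element 0.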

The third error is for create_modules, which builds the network modules from the parsed config.

def create_modules(module_defs, img_size, cfg):
    # Constructs module list of layer blocks from module configuration in module_defs

    # expand if necessary
    img_size = [img_size] * 2 if isinstance(img_size, int) else img_size
    _ = module_defs.pop(0)  # cfg training hyperparams (unused)
    output_filters = [3]  # input channels
    module_list = nn.ModuleList()
    routs = []  # list of layers which rout to deeper layers
    yolo_index = -1

    for i, mdef in enumerate(module_defs):
        modules = nn.Sequential()

        if mdef['type'] == 'convolutional':
            bn = mdef['batch_normalize']
            filters = mdef['filters']
            k = mdef['size']  # kernel size
            stride = mdef['stride'] if 'stride' in mdef else (
                mdef['stride_y'], mdef['stride_x'])
            if isinstance(k, int):  # single-size conv
                modules.add_module('Conv2d', nn.Conv2d(in_channels=output_filters[-1],
                                                       out_channels=filters,
                                                       kernel_size=k,
                                                       stride=stride,
                                                       padding=k // 2 if mdef['pad'] else 0,
                                                       groups=mdef['groups'] if 'groups' in mdef else 1,
                                                       bias=not bn))
            else:  # multiple-size conv
                modules.add_module('MixConv2d', MixConv2d(in_ch=output_filters[-1],
                                                          out_ch=filters,
                                                          k=k,
                                                          stride=stride,
                                                          bias=not bn))

            if bn:
                modules.add_module('BatchNorm2d', nn.BatchNorm2d(
                    filters, momentum=0.03, eps=1E-4))
            else:
                routs.append(i)  # detection output (goes into yolo layer)

            # activation study https://github.com/ultralytics/yolov3/issues/441
            if mdef['activation'] == 'leaky':
                modules.add_module(
                    'activation', nn.LeakyReLU(0.1, inplace=True))
            elif mdef['activation'] == 'swish':
                modules.add_module('activation', Swish())
            elif mdef['activation'] == 'mish':
                modules.add_module('activation', Mish())

        elif mdef['type'] == 'BatchNorm2d':
            filters = output_filters[-1]
            modules = nn.BatchNorm2d(filters, momentum=0.03, eps=1E-4)
            if i == 0 and filters == 3:  # normalize RGB image
                # imagenet mean and var https://pytorch.org/docs/stable/torchvision/models.html#classification
                modules.running_mean = torch.tensor([0.485, 0.456, 0.406])
                modules.running_var = torch.tensor([0.0524, 0.0502, 0.0506])

        elif mdef['type'] == 'maxpool':
            k = mdef['size']  # kernel size
            stride = mdef['stride']
            maxpool = nn.MaxPool2d(
                kernel_size=k, stride=stride, padding=(k - 1) // 2)
            if k == 2 and stride == 1:  # yolov3-tiny
                modules.add_module('ZeroPad2d', nn.ZeroPad2d((0, 1, 0, 1)))
                modules.add_module('MaxPool2d', maxpool)
            else:
                modules = maxpool

        elif mdef['type'] == 'upsample':
            if ONNX_EXPORT:  # explicitly state size, avoid scale_factor
                g = (yolo_index + 1) * 2 / 32  # gain
                modules = nn.Upsample(size=tuple(int(x * g)
                                                 for x in img_size))  # img_size = (320, 192)
            else:
                modules = nn.Upsample(scale_factor=mdef['stride'])

        elif mdef['type'] == 'route':  # nn.Sequential() placeholder for 'route' layer
            layers = mdef['layers']
            filters = sum([output_filters[l + 1 if l > 0 else l]
                           for l in layers])
            routs.extend([i + l if l < 0 else l for l in layers])
            modules = FeatureConcat(layers=layers)

        elif mdef['type'] == 'shortcut':  # nn.Sequential() placeholder for 'shortcut' layer
            layers = mdef['from']
            filters = output_filters[-1]
            routs.extend([i + l if l < 0 else l for l in layers])
            modules = WeightedFeatureFusion(
                layers=layers, weight='weights_type' in mdef)

        elif mdef['type'] == 'reorg3d':  # yolov3-spp-pan-scale
            pass

        elif mdef['type'] == 'yolo':
            yolo_index += 1
            stride = [32, 16, 8]  # P5, P4, P3 strides
            # stride order reversed
            if any(x in cfg for x in ['panet', 'yolov4', 'cd53']):
                stride = list(reversed(stride))
            layers = mdef['from'] if 'from' in mdef else []
            modules = YOLOLayer(anchors=mdef['anchors'][mdef['mask']],  # anchor list
                                nc=mdef['classes'],  # number of classes
                                img_size=img_size,  # (416, 416)
                                yolo_index=yolo_index,  # 0, 1, 2...
                                layers=layers,  # output layers
                                stride=stride[yolo_index])

            # Initialize preceding Conv2d() bias (https://arxiv.org/pdf/1708.02002.pdf section 3.3)
            try:
                j = layers[yolo_index] if 'from' in mdef else -1
                # If previous layer is a dropout layer, get the one before
                if module_list[j].__class__.__name__ == 'Dropout':
                    j -= 1
                bias_ = module_list[j][0].bias  # shape(255,)
                bias = bias_[:modules.no *
                             modules.na].view(modules.na, -1)  # shape(3,85)
                bias[:, 4] += -4.5  # obj
                # cls (sigmoid(p) = 1/nc)
                bias[:, 5:] += math.log(0.6 / (modules.nc - 0.99))
                module_list[j][0].bias = torch.nn.Parameter(
                    bias_, requires_grad=bias_.requires_grad)
            except:
                print('WARNING: smart bias initialization failure.')

        elif mdef['type'] == 'dropout':
            perc = float(mdef['probability'])
            modules = nn.Dropout(p=perc)

        else:
            print('Warning: Unrecognized Layer Type: ' + mdef['type'])

        # Register module list and number of output filters
        module_list.append(modules)
        output_filters.append(filters)

    routs_binary = [False] * (i + 1)
    for i in routs:
        routs_binary[i] = True
    return module_list, routs_binary

The fourth error is for WeightedFeatureFusion, which computes the weighted sum of two (or n) layers. The definitions are getting more and more basic by now.

# weighted sum of 2 or more layers https://arxiv.org/abs/1911.09070
class WeightedFeatureFusion(nn.Module):
    def __init__(self, layers, weight=False):
        super(WeightedFeatureFusion, self).__init__()
        self.layers = layers  # layer indices
        self.weight = weight  # apply weights boolean
        self.n = len(layers) + 1  # number of layers
        if weight:
            self.w = nn.Parameter(torch.zeros(
                self.n), requires_grad=True)  # layer weights

    def forward(self, x, outputs):
        # Weights
        if self.weight:
            w = torch.sigmoid(self.w) * (2 / self.n)  # sigmoid weights (0-1)
            x = x * w[0]

        # Fusion
        nx = x.shape[1]  # input channels
        for i in range(self.n - 1):
            # feature to add
            a = outputs[self.layers[i]] * \
                w[i + 1] if self.weight else outputs[self.layers[i]]
            na = a.shape[1]  # feature channels

            # Adjust channels
            if nx == na:  # same shape
                x = x + a
            elif nx > na:  # slice input
                # or a = nn.ZeroPad2d((0, 0, 0, 0, 0, dc))(a); x = x + a
                x[:, :na] = x[:, :na] + a
            else:  # slice feature
                x = x + a[:, :nx]

        return x
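The weighting in forward is easy to check by hand. The sketch below redoes the arithmetic in pure Python on plain lists; it is only an illustration, the function names are invented, and it skips the channel-slicing branches. Since self.w starts as zeros, sigmoid(0) * 2 / n gives each of the n inputs a weight of 1 / n at initialization.

```python
import math


def fusion_weights(raw_weights):
    """Mirror `torch.sigmoid(self.w) * (2 / self.n)` for a list of raw weights."""
    n = len(raw_weights)
    return [(1.0 / (1.0 + math.exp(-w))) * (2 / n) for w in raw_weights]


def fuse(features, raw_weights):
    """Weighted sum of equally sized feature lists (current input first, then routed layers)."""
    weights = fusion_weights(raw_weights)
    length = len(features[0])
    return [sum(w * f[i] for w, f in zip(weights, features)) for i in range(length)]


if __name__ == "__main__":
    print(fusion_weights([0.0, 0.0]))            # zero-initialized weights for n = 2
    print(fuse([[1, 2], [3, 4]], [0.0, 0.0]))    # with equal weights this is the mean
```

When weight=False, which is the case for any shortcut block without a weights_type field, the class reduces to a plain element-wise sum.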

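The fifth error is for YOLOLayer. Its definition runs to well over a hundred lines and can be skimmed; it is simply the core detection-layer definition.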

class YOLOLayer(nn.Module):
    def __init__(self, anchors, nc, img_size, yolo_index, layers, stride):
        super(YOLOLayer, self).__init__()
        self.anchors = torch.Tensor(anchors)
        self.index = yolo_index  # index of this layer in layers
        self.layers = layers  # model output layer indices
        self.stride = stride  # layer stride
        self.nl = len(layers)  # number of output layers (3)
        self.na = len(anchors)  # number of anchors (3)
        self.nc = nc  # number of classes (80)
        self.no = nc + 5  # number of outputs (85)
        self.nx, self.ny, self.ng = 0, 0, 0  # initialize number of x, y gridpoints
        self.anchor_vec = self.anchors / self.stride
        self.anchor_wh = self.anchor_vec.view(1, self.na, 1, 1, 2)

        if ONNX_EXPORT:
            self.training = False
            # number x, y grid points
            self.create_grids((img_size[1] // stride, img_size[0] // stride))

    def create_grids(self, ng=(13, 13), device='cpu'):
        self.nx, self.ny = ng  # x and y grid size
        self.ng = torch.tensor(ng, dtype=torch.float)

        # build xy offsets
        if not self.training:
            yv, xv = torch.meshgrid(
                [torch.arange(self.ny, device=device), torch.arange(self.nx, device=device)])
            self.grid = torch.stack((xv, yv), 2).view(
                (1, 1, self.ny, self.nx, 2)).float()

        if self.anchor_vec.device != device:
            self.anchor_vec = self.anchor_vec.to(device)
            self.anchor_wh = self.anchor_wh.to(device)

    def forward(self, p, out):
        ASFF = False  # https://arxiv.org/abs/1911.09516
        if ASFF:
            i, n = self.index, self.nl  # index in layers, number of layers
            p = out[self.layers[i]]
            bs, _, ny, nx = p.shape  # bs, 255, 13, 13
            if (self.nx, self.ny) != (nx, ny):
                self.create_grids((nx, ny), p.device)

            # outputs and weights
            # w = F.softmax(p[:, -n:], 1)  # normalized weights
            w = torch.sigmoid(p[:, -n:]) * (2 / n)  # sigmoid weights (faster)
            # w = w / w.sum(1).unsqueeze(1)  # normalize across layer dimension

            # weighted ASFF sum
            p = out[self.layers[i]][:, :-n] * w[:, i:i + 1]
            for j in range(n):
                if j != i:
                    p += w[:, j:j + 1] * \
                        F.interpolate(
                            out[self.layers[j]][:, :-n], size=[ny, nx], mode='bilinear', align_corners=False)

        elif ONNX_EXPORT:
            bs = 1  # batch size
        else:
            bs, _, ny, nx = p.shape  # bs, 255, 13, 13
            if (self.nx, self.ny) != (nx, ny):
                self.create_grids((nx, ny), p.device)

        # p.view(bs, 255, 13, 13) -- > (bs, 3, 13, 13, 85)  # (bs, anchors, grid, grid, classes + xywh)
        p = p.view(bs, self.na, self.no, self.ny, self.nx).permute(
            0, 1, 3, 4, 2).contiguous()  # prediction

        if self.training:
            return p

        elif ONNX_EXPORT:
            # Avoid broadcasting for ANE operations
            m = self.na * self.nx * self.ny
            ng = 1. / self.ng.repeat(m, 1)
            grid = self.grid.repeat(1, self.na, 1, 1, 1).view(m, 2)
            anchor_wh = self.anchor_wh.repeat(
                1, 1, self.nx, self.ny, 1).view(m, 2) * ng

            p = p.view(m, self.no)
            xy = torch.sigmoid(p[:, 0:2]) + grid  # x, y
            wh = torch.exp(p[:, 2:4]) * anchor_wh  # width, height
            p_cls = torch.sigmoid(p[:, 4:5]) if self.nc == 1 else \
                torch.sigmoid(p[:, 5:self.no]) * \
                torch.sigmoid(p[:, 4:5])  # conf
            return p_cls, xy * ng, wh

        else:  # inference
            io = p.clone()  # inference output
            io[..., :2] = torch.sigmoid(io[..., :2]) + self.grid  # xy
            io[..., 2:4] = torch.exp(io[..., 2:4]) * \
                self.anchor_wh  # wh yolo method
            io[..., :4] *= self.stride
            torch.sigmoid_(io[..., 4:])
            # view [1, 3, 13, 13, 85] as [1, 507, 85]
            return io.view(bs, -1, self.no), p
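For readers who do skim the class, the inference branch boils down to a few lines of arithmetic per grid cell. Here is a pure-Python sketch of that decoding for a single cell and a single anchor; the function names and the sample numbers are made up for illustration, and the anchor is given in pixels as in the cfg file.

```python
import math


def sigmoid(v):
    return 1.0 / (1.0 + math.exp(-v))


def decode_cell(raw, grid_xy, anchor_px, stride):
    """Turn raw outputs (tx, ty, tw, th) of one cell into a box in input-image pixels.

    Mirrors the inference branch: xy = (sigmoid(t) + grid) * stride,
    wh = exp(t) * (anchor / stride) * stride.
    """
    tx, ty, tw, th = raw
    gx, gy = grid_xy
    aw, ah = anchor_px
    cx = (sigmoid(tx) + gx) * stride
    cy = (sigmoid(ty) + gy) * stride
    w = math.exp(tw) * (aw / stride) * stride
    h = math.exp(th) * (ah / stride) * stride
    return cx, cy, w, h


def yolo_output_channels(num_anchors, num_classes):
    """Channels of the conv layer feeding a YOLO layer: na * (nc + 5)."""
    return num_anchors * (num_classes + 5)


if __name__ == "__main__":
    # All-zero raw outputs land in the middle of the cell with exactly the anchor size
    print(decode_cell((0, 0, 0, 0), grid_xy=(3, 4), anchor_px=(116, 90), stride=32))
    print(yolo_output_channels(3, 80))  # the "255" in the comments above
```

This also explains the shape comments in the code: with 3 anchors and 80 classes the preceding convolution has 255 output channels, which are viewed as (3, 85) per cell.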

The sixth error is for FeatureConcat, a small glue class that concatenates feature maps.

class FeatureConcat(nn.Module):
    def __init__(self, layers):
        super(FeatureConcat, self).__init__()
        self.layers = layers  # layer indices
        self.multiple = len(layers) > 1  # multiple layers flag

    def forward(self, x, outputs):
        return torch.cat([outputs[i] for i in self.layers], 1) if self.multiple else outputs[self.layers[0]]

The seventh error is for get_yolo_layers, another piece of glue.

def get_yolo_layers(model):
    bool_vec = [x['type'] == 'yolo' for x in model.module_defs]
    return [i for i, x in enumerate(bool_vec) if x]  # [82, 94, 106] for yolov3

The eighth error is for model_info, which prints the model summary. After this one, everything loads.

def model_info(model, verbose=False):
    # Plots a line-by-line description of a PyTorch model
    n_p = sum(x.numel() for x in model.parameters())  # number parameters
    n_g = sum(x.numel() for x in model.parameters()
              if x.requires_grad)  # number gradients
    if verbose:
        print('%5s %40s %9s %12s %20s %10s %10s' %
              ('layer', 'name', 'gradient', 'parameters', 'shape', 'mu', 'sigma'))
        for i, (name, p) in enumerate(model.named_parameters()):
            name = name.replace('module_list.', '')
            print('%5g %40s %9s %12g %20s %10.3g %10.3g' %
                  (i, name, p.requires_grad, p.numel(), list(p.shape), p.mean(), p.std()))

    try:  # FLOPS
        from thop import profile
        macs, _ = profile(model, inputs=(
            torch.zeros(1, 3, 480, 640),), verbose=False)
        fs = ', %.1f GFLOPS' % (macs / 1E9 * 2)
    except:
        fs = ''

    print('Model Summary: %g layers, %g parameters, %g gradients%s' %
          (len(list(model.parameters())), n_p, n_g, fs))

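Test that the model loads: OK. (The "smart bias initialization failure" warnings in the output come from the bare except in create_modules. The biases are overwritten when the checkpoint is loaded, so the warnings do not affect inference.)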

if __name__ == '__main__':
    config_path = './config/yolo/cfg/yolov3.cfg'
    model_path = './config/yolo/model/best.pt'

    # Load the model
    with torch.no_grad():
        model = Darknet(config_path, img_size=img_size).to(device)
        model.load_state_dict(torch.load(model_path, map_location=device)['model'])
        model.to(device).eval()

----

In [15]: run yolo.py
WARNING: smart bias initialization failure.
WARNING: smart bias initialization failure.
WARNING: smart bias initialization failure.
Model Summary: 222 layers, 6.15237e+07 parameters, 6.15237e+07 gradients

2.3 predict.py

This file takes care of the housekeeping: converting the image format, resizing and similar steps, so that app.py can call it cleanly.
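The body of predict.py is not shown in the article, so the following is only a sketch of the resizing arithmetic such a file typically needs, under the assumption that the image is scaled to fit a square of img_size = 192 and padded on the shorter side (a "letterbox"). The function names are hypothetical. The second function applies the same mapping as scale_coords to a single box, without the final clipping.

```python
def letterbox_geometry(height, width, new_size=192):
    """Scale so the longer edge equals new_size, then pad the shorter edge to a square.

    Returns (gain, (new_height, new_width), (pad_w, pad_h)), with padding per side.
    """
    gain = new_size / max(height, width)
    new_h, new_w = round(height * gain), round(width * gain)
    pad_w = (new_size - new_w) / 2
    pad_h = (new_size - new_h) / 2
    return gain, (new_h, new_w), (pad_w, pad_h)


def to_original_coords(box, gain, pad):
    """Map an (x1, y1, x2, y2) box from the padded image back to the original image."""
    x1, y1, x2, y2 = box
    pad_w, pad_h = pad
    return ((x1 - pad_w) / gain, (y1 - pad_h) / gain,
            (x2 - pad_w) / gain, (y2 - pad_h) / gain)


if __name__ == "__main__":
    gain, size, pad = letterbox_geometry(480, 640)
    print(gain, size, pad)
    # A box found in the 192 x 192 network input, mapped back to the 480 x 640 original
    print(to_original_coords((96, 96, 192, 168), gain, pad))
```

For a 480 x 640 image the scaled content is 144 x 192 with 24 pixels of padding above and below, and the gain of 0.3 matches what scale_coords computes as max(img1_shape) / max(img0_shape).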

3 Contents

3.1 Building the image with Docker

Dockerfile

FROM python:3.6
COPY ./v1 /v1
WORKDIR /v1
RUN pip3 install -r requires1.txt -i https://mirrors.aliyun.com/pypi/simple/
EXPOSE 5000
CMD ["/bin/bash"]

requires1.txt

Flask==1.0.2
gevent==1.4.0
gunicorn==20.0.4
Cython
matplotlib>=3.2.2
numpy>=1.18.5
opencv-python==4.2.0.34
pillow
# pycocotools>=2.0
PyYAML>=5.3
scipy>=1.4.1
tensorboard>=2.2
torch>=1.6.0
torchvision>=0.7.0
tqdm>=4.41.0

3.2 Preparing the project files

4 Installation and deployment

4.1 Installing on UCloud

Install Docker, following the referenced installation guide.

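- Create an image repository

- Log in to the image registry: docker login

- Rename the local image: docker tag image_id

docker tag {local image name} uhub.service.ucloud.cn/{created image repository}/{image}:tag

- Push the image

docker push uhub.service.ucloud.cn/{created image repository}/{image}:tag

- Pull the image

docker pull uhub.service.ucloud.cn/{created image repository}/{image}:tag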

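Deploying the application

1 Log in to the registry

# 1 docker login (first time only)
docker login uhub.service.ucloud.cn -u [email protected]
Enter the password: xinshuxinshuxinshu

2 Pull the image

# 2 pull the docker image (first time only)
docker pull uhub.service.ucloud.cn/python_yolo/mlncpu:v1

3 Upload the project folder

# 1 create the folder
mkdir -p /tmp/uploads
# 2 upload the folder with scp
scp -r YourAbsoluteFolderPath [email protected]:/tmp/uploads/yolov3

4 Start

docker run -p [host port, e.g. 5500]:5000 -d -v [host folder, e.g. /tmp/uploads/yolov3]:/yolov3 image:tag sh /yolov3/start.sh

# Note on start.sh: the number of worker processes should not exceed the number of CPU cores
cd /yolov3
gunicorn --preload -w [number of workers] -k gevent app:app -b 0.0.0.0:5000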
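As an alternative to typing the worker count by hand, the same options can live in a gunicorn config file, which is plain Python. This is a sketch rather than what the article uses: the file name gunicorn.conf.py and the MAX_WORKERS cap are assumptions, while bind, worker_class, preload_app and workers are standard gunicorn settings.

```python
# gunicorn.conf.py (hypothetical file name, placed next to app.py)
import multiprocessing

# Hypothetical upper bound; each worker uses memory for its copy of the models
MAX_WORKERS = 4

bind = "0.0.0.0:5000"      # same as `-b 0.0.0.0:5000`
worker_class = "gevent"    # same as `-k gevent`
preload_app = True         # same as `--preload`: load the app before forking workers

# Never start more workers than there are CPU cores, and always at least one
workers = max(1, min(multiprocessing.cpu_count(), MAX_WORKERS))
```

With such a file in /yolov3, the last line of start.sh would become `gunicorn -c gunicorn.conf.py app:app`.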

4.2 On Alibaba Cloud

4.2.1 Register and log in

4.2.2 Create a repository

- How to log in

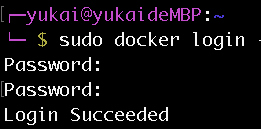

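Alibaba's tutorial is really misleading here. The command uses sudo, so the first password it asks for is the local administrator password, not the registry password. Who would guess that?

sudo docker login --username=[Alibaba Cloud account] registry.cn-hangzhou.aliyuncs.com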