----- Outline

Introduction

Terminology

Environment

Implementation

Command-line management tools

-------------

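I. Introduction

RHCS stands for Red Hat Cluster Suite. It is an integrated set of software components that can be deployed in different configurations to meet your needs for high availability, load balancing, scalability, file sharing, and cost savings. For applications that need maximum uptime, a Red Hat Enterprise Linux cluster with Red Hat Cluster Suite is a strong choice. Red Hat Cluster Suite is designed specifically for Red Hat Enterprise Linux and provides two different types of cluster:

1. Application/service failover: a cluster of n nodes provides failover for critical applications and services.

2. IP load balancing: incoming IP network requests are balanced across a group of servers.

With Red Hat Cluster Suite, applications can be deployed in a high-availability configuration so that they are always running, which gives an organization the ability to scale out its Linux deployments. For widely deployed open-source applications such as the Network File System (NFS), Samba, and Apache, Red Hat Cluster Suite provides a complete, ready-to-use failover solution.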

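II. Terminology

- Distributed cluster manager (CMAN)

- Lock management (DLM)

- Configuration file management (CCS)

- Fence devices (FENCE)

- High-availability service manager (rgmanager)

- Cluster configuration and management tools

- Red Hat GFS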

III. Environment

| OS | Role | IP address | Package |
| --- | --- | --- | --- |
| CentOS 6.5 x86_64 | Cluster management node | 192.168.1.110 | luci |
| CentOS 6.5 x86_64 | Web node (node1) | 192.168.1.103 | ricci |
| CentOS 6.5 x86_64 | Web node (node2) | 192.168.1.109 | ricci |
| CentOS 6.5 x86_64 | Web node (node3) | 192.168.1.108 | ricci |

IV. Implementation

Prerequisites

SSH mutual trust between the hosts
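SSH mutual trust is usually set up with key-based logins. The following is a minimal sketch, assuming root logins, an RSA key at `~/.ssh/id_rsa`, and the node addresses from the environment table. `DRY_RUN`, `run`, and `setup_trust` are names invented for this example; with `DRY_RUN=1` (the default here) the commands are only printed.

```shell
#!/bin/bash
# Sketch: print (or run) the commands that set up key-based SSH trust.
# Assumptions: root logins, RSA key in ~/.ssh/id_rsa, node IPs from the table.
DRY_RUN=${DRY_RUN:-1}
NODES="192.168.1.103 192.168.1.109 192.168.1.108"

run() {
    # Print the command in dry-run mode, execute it otherwise.
    if [ "$DRY_RUN" = "1" ]; then
        echo "$*"
    else
        "$@"
    fi
}

setup_trust() {
    # Create a key pair if none exists yet (ssh-keygen asks for a passphrase).
    if [ ! -f "$HOME/.ssh/id_rsa" ]; then
        run ssh-keygen -t rsa -f "$HOME/.ssh/id_rsa"
    fi
    # Copy the public key to every node.
    for node in $NODES; do
        run ssh-copy-id "root@$node"
    done
}

setup_trust
```

Running the same script on each host gives trust in every direction; set `DRY_RUN=0` once the printed commands look right.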

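Note: if the yum repositories include EPEL, disable it. Red Hat supports this suite only with the package versions it ships itself; with other versions the services may fail to start.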
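One way to keep EPEL out of the way without deleting its repo file is to disable it per command. The sketch below assumes the repository id is `epel` (check with `yum repolist all`). `yum_no_epel` and `DRY_RUN` are hypothetical helper names; with `DRY_RUN=1` the command is only printed.

```shell
#!/bin/bash
# Sketch: install a package with the EPEL repository disabled for that command.
# Assumption: the repo id is "epel"; adjust it to what `yum repolist all` shows.
DRY_RUN=${DRY_RUN:-1}

yum_no_epel() {
    # --disablerepo only affects this invocation; the repo file is untouched.
    if [ "$DRY_RUN" = "1" ]; then
        echo "yum --disablerepo=epel install -y $*"
    else
        yum --disablerepo=epel install -y "$@"
    fi
}

yum_no_epel luci     # on the management node
yum_no_epel ricci    # on each cluster node
```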

- Cluster management node

[root@essun ~]# yum install -y luci

[root@essun ~]# service luci start

Adding following auto-detected host IDs (IP addresses/domain names), corresponding to `essun.node4.com' address, to the configuration of self-managed certificate `/var/lib/luci/etc/cacert.config' (you can change them by editing `/var/lib/luci/etc/cacert.config', removing the generated certificate `/var/lib/luci/certs/host.pem' and restarting luci):

(none suitable found, you can still do it manually as mentioned above)

Generating a 2048 bit RSA private key

writing new private key to '/var/lib/luci/certs/host.pem'

Starting saslauthd: [ OK ]

Start luci... [ OK ]

Point your web browser to https://essun.node4.com:8084 (or equivalent) to access luci

[root@essun ~]# ss -tnpl |grep 8084

LISTEN 0 5 *:8084 *:* users:(("python",2920,5))

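Each cluster node needs ricci installed, and the ricci user on every node needs a password so that the cluster management service can manage the nodes. Each node also serves a test page. (One node is shown here as an example; the other two are set up the same way.)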

[root@essun .ssh]# yum install ricci -y

[root@essun ~]# service ricci start

Starting oddjobd: [ OK ]

generating SSL certificates... done

Generating NSS database... done

Starting ricci: [ OK ]

[root@essun ~]# ss -tnlp |grep ricci

LISTEN 0 5 :::11111 :::* users:(("ricci",2241,3))

# ricci listens on port 11111 by default

[root@essun .ssh]# echo "ricci" |passwd --stdin ricci

Changing password for user ricci.

passwd: all authentication tokens updated successfully.

# the password is set to "ricci" here

[root@essun html]# echo "`hostname`" >index.html

[root@essun html]# service httpd start

Starting httpd: [ OK ]
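The port checks in the sessions above (8084 for luci, 11111 for ricci) can be wrapped in a small helper. This is a sketch only: `is_listening` is a name made up for this example, and it reads `ss -tnl` style output on stdin so that it can be tried on saved output as well as on a live host.

```shell
#!/bin/bash
# Sketch: check whether a TCP port shows up as LISTEN in `ss -tnl` style output.
# The function reads the ss output on stdin.
is_listening() {
    local port=$1
    # Column 4 is the local address, e.g. "*:8084" or ":::11111";
    # the port is whatever follows the last colon.
    awk -v p="$port" '
        $1 == "LISTEN" { n = split($4, a, ":"); if (a[n] == p) found = 1 }
        END { exit found ? 0 : 1 }'
}

# Sample lines copied from the sessions above.
sample='LISTEN 0 5 *:8084 *:* users:(("python",2920,5))
LISTEN 0 5 :::11111 :::* users:(("ricci",2241,3))'

for port in 8084 11111; do
    if echo "$sample" | is_listening "$port"; then
        echo "port $port: listening"
    else
        echo "port $port: not listening"
    fi
done
```

On a node, `ss -tnl | is_listening 11111` performs the same check against the live socket table.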

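Open the web interface to start configuring the cluster.

Log in with a valid user name and password. Logging in as root displays a warning.

After that, the cluster can be managed under Manage Clusters.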

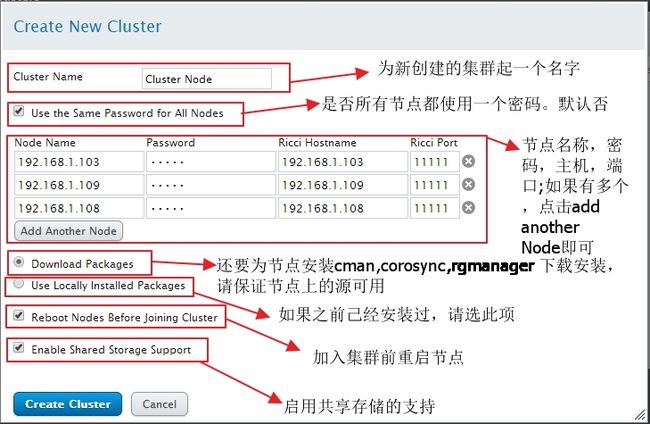

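Create a cluster

After the cluster has been created

Tab descriptions

Define a failover domain

Set the priorities within the failover domain, and whether resources move back when a node comes back online

State after the domain has been added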

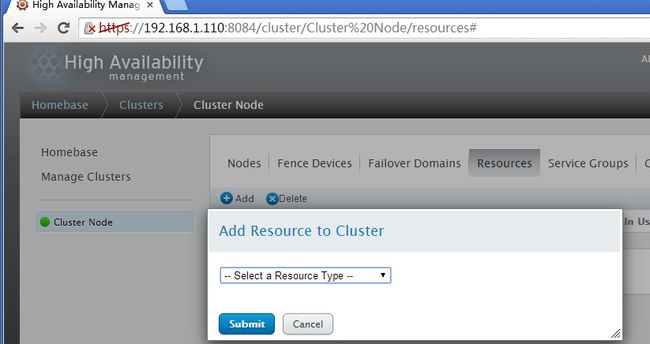

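Available resource types

Add an IP address

The record created after submitting

Add this resource to a group (resources can also be defined directly in the service group)

More resources can be added

Add an already defined resource to the group (the IP address defined earlier)

Add a web service

Once everything is defined, submit it. To withdraw a resource, click Remove in the upper-right corner.

The service group after submitting

Restart the service group (Restart)

The status now shows the group running on node1

A test request confirms that it is running on node1

Simulate a failover

To check whether the resources have moved, look at the service group or test through the web page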

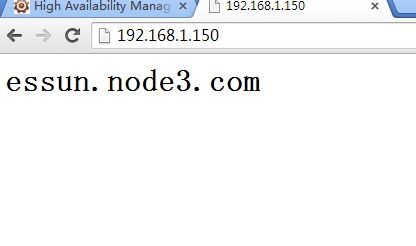

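The service has moved to node3; load the web page to see the effect.

The resources have indeed moved. After node1 comes back online, the resources do not move back to node1, because no failback was set when the failover domain was defined.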
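The effect of the no failback setting can be illustrated with a small model. This is a simplified sketch and not rgmanager's actual logic: `next_owner` is a hypothetical function, and the priority order node1, node3, node2 is an assumption chosen so that the result matches the walkthrough (the real priorities were set in the web interface).

```shell
#!/bin/bash
# Simplified model of owner selection in an ordered failover domain.
# Not rgmanager code; it only illustrates the effect of the "no failback" flag.
# Usage: next_owner CURRENT NOFAILBACK NODE...
#   CURRENT     node currently running the service ("none" if stopped)
#   NOFAILBACK  1 = stay put while the current owner is online, 0 = follow priority
#   NODE...     online nodes, highest priority first
next_owner() {
    local current=$1 nofailback=$2
    shift 2
    local node
    if [ "$nofailback" = "1" ]; then
        # Keep the service where it is as long as that node is still online.
        for node in "$@"; do
            if [ "$node" = "$current" ]; then
                echo "$current"
                return 0
            fi
        done
    fi
    # Otherwise the highest-priority online node wins.
    if [ $# -gt 0 ]; then
        echo "$1"
        return 0
    fi
    return 1   # no node online
}

# Assumed priority order: node1, node3, node2.
next_owner node1 1 node3 node2          # node1 is down: service moves
next_owner node3 1 node1 node3 node2    # node1 is back, no failback: service stays
next_owner node3 0 node1 node3 node2    # node1 is back, failback allowed
```

In this model the second call keeps the service on node3, which is the behavior observed above, while the third call shows what would happen with failback allowed.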

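V. Command-line management tools

1. clustat

clustat displays the cluster status. It shows membership information, the quorum view, and the state of all high-availability services, and it indicates which node the clustat command is running on (the local node).

Command options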

[root@essun html]# clustat --help

clustat: invalid option -- '-'

usage: clustat <options>

-i <interval> Refresh every <interval> seconds. May not be used

with -x.

-I Display local node ID and exit

-m <member> Display status of <member> and exit

-s <service> Display status of <service> and exit

-v Display version and exit

-x Dump information as XML

-Q Return 0 if quorate, 1 if not (no output)

-f Enable fast clustat reports

-l Use long format for services

# View node status

[root@essun html]# clustat -l

Cluster Status for Cluster Node @ Wed May 7 11:32:55 2014

Member Status: Quorate

Member Name ID Status

------ ---- ---- ------

node1 1 Online, Local, rgmanager

node2 2 Online

node3 3 Online

Service Information

------- -----------

Service Name : service:webservice

Current State : started (112)

Flags : none (0)

Owner : node1

Last Owner : none

Last Transition : Wed May 7 10:06:48 2014
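For scripting, the long-format report can be reduced to the one field of interest. The sketch below assumes the field labels shown in the output above; `service_owner` is a name invented for this example, and it reads the report on stdin.

```shell
#!/bin/bash
# Sketch: extract the current owner of a service from `clustat -l` output.
# Reads the long-format report on stdin; labels are those shown above.
service_owner() {
    local service=$1
    awk -v want="service:$service" '
        {
            # Split each line at its first colon into a key and a value.
            i = index($0, ":")
            key = substr($0, 1, i - 1)
            val = substr($0, i + 1)
            gsub(/^[ \t]+|[ \t]+$/, "", key)
            gsub(/^[ \t]+|[ \t]+$/, "", val)
        }
        key == "Service Name" { in_svc = (val == want) }   # start of a service block
        in_svc && key == "Owner" { print val; exit }       # "Owner", not "Last Owner"
    '
}

# Sample copied from the report above.
sample='Service Name : service:webservice
Current State : started (112)
Flags : none (0)
Owner : node1
Last Owner : none
Last Transition : Wed May 7 10:06:48 2014'

echo "$sample" | service_owner webservice
```

On a cluster node the call would be `clustat -l | service_owner webservice`. For a plain quorum check, the `-Q` option listed in the usage text returns 0 if the cluster is quorate and 1 if not.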

2. clusvcadm

The clusvcadm command manages HA services. It can be used for operations such as:

- Restarting a service

Command options

[root@essun html]# clusvcadm

usage: clusvcadm [command]

Resource Group Control Commands:

-v Display version and exit

-d <group> Disable <group>. This stops a group

until an administrator enables it again,

the cluster loses and regains quorum, or

an administrator-defined event script

explicitly enables it again.

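-e <group> Enable <group>

-e <group> -F Enable <group> according to failover

domain rules (deprecated; always the

case when using central processing)

-e <group> -m <member> Enable <group> on <member>

-r <group> -m <member> Relocate <group> [to <member>]

Stops a group and starts it on another

cluster member.

-M <group> -m <member> Migrate <group> to <member>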

(e.g. for live migration of VMs)

-q Quiet operation

-R Restart a group in place.

-s <group> Stop <group>. This temporarily stops

a group. After the next group or

or cluster member transition, the group

will be restarted (if possible).

-Z Freeze resource group. This prevents

transitions and status checks, and is

useful if an administrator needs to

administer part of a service without

stopping the whole service.

-U Unfreeze (thaw) resource group. Restores

a group to normal operation.

-c Convalesce (repair, fix) resource group.

Attempts to start failed, non-critical

resources within a resource group.

Resource Group Locking (for cluster Shutdown / Debugging):

-l Lock local resource group managers.

This prevents resource groups from

starting.

-S Show lock state

-u Unlock resource group managers.

This allows resource groups to start.

# Relocate the service

[root@essun html]# clusvcadm -r webservice -m node1

Trying to relocate service:webservice to node1...Success

service:webservice is now running on node1

[root@essun html]# curl http://192.168.1.150

essun.node1.com
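A relocation like the one above is only needed when the service is not already on the target node. The following sketch builds the command line from the `-r` and `-m` options in the usage text; `relocate_cmd` is a hypothetical helper that prints the command instead of running it, and the current owner is passed in by the caller.

```shell
#!/bin/bash
# Sketch: build the clusvcadm call needed to get a service onto a target node.
# Prints nothing when the service is already there.
# Usage: relocate_cmd SERVICE CURRENT_OWNER TARGET
relocate_cmd() {
    local service=$1 owner=$2 target=$3
    if [ "$owner" = "$target" ]; then
        return 0                      # already on the target, nothing to do
    fi
    echo "clusvcadm -r $service -m $target"
}

relocate_cmd webservice node3 node1   # prints the relocate command
relocate_cmd webservice node1 node1   # prints nothing
```

Printing the command first leaves room to review it before it is run on a cluster node; the curl request shown above then confirms which node answers.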

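3. cman_tool

cman_tool is a set of utilities for managing the CMAN cluster management subsystem. It can be used to join a node to the cluster, kill another cluster node, or change the cluster's expected votes value.

Note: commands issued with cman_tool can affect every node in the cluster.

Command options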

[root@essun html]# cman_tool -h

Usage:

cman_tool <join|leave|kill|expected|votes|version|wait|status|nodes|services|debug> [options]

Options:

-h Print this help, then exit

-V Print program version information, then exit

-d Enable debug output

join

Cluster & node information is taken from configuration modules.

These switches are provided to allow those values to be overridden.

Use them with extreme care.

-m Multicast address to use

-v Number of votes this node has

-e Number of expected votes for the cluster

-p UDP port number for cman communications

-n The name of this node (defaults to hostname)

-c The name of the cluster to join

-N <id> Node id

-C Config file reader (default: xmlconfig)

-w Wait until node has joined a cluster

-q Wait until the cluster is quorate

-t Maximum time (in seconds) to wait

-k <file> Private key file for Corosync communications

-P Don't set corosync to realtime priority

-X Use internal cman defaults for configuration

-A Don't load openais services

-D What to do about the config. Default (without -D) is to

validate the config. with -D no validation will be done.

-Dwarn will print errors but allow the operation to continue.

-z Disable stderr debugging output.

wait Wait until the node is a member of a cluster

-q Wait until the cluster is quorate

-t Maximum time (in seconds) to wait

leave

-w If cluster is in transition, wait and keep trying

-t Maximum time (in seconds) to wait

remove Tell other nodes to ajust quorum downwards if necessary

force Leave even if cluster subsystems are active

kill

-n The name of the node to kill (can specify multiple times)

expected

-e New number of expected votes for the cluster

votes

-v New number of votes for this node

status Show local record of cluster status

nodes Show local record of cluster nodes

-a Also show node address(es)

-n Only show information for specific node

-F Specify output format (see man page)

services Show local record of cluster services

version

-r Reload cluster.conf and update config version.

-D What to do about the config. Default (without -D) is to

validate the config. with -D no validation will be done. -Dwarn will print errors

but allow the operation to continue

-S Don't run ccs_sync to distribute cluster.conf (if appropriate)

# View the cluster status as seen from this node

[root@essun html]# cman_tool status

Version: 6.2.0

Config Version: 6

Cluster Name: Cluster Node

Cluster Id: 26887

Cluster Member: Yes

Cluster Generation: 36

Membership state: Cluster-Member

Nodes: 3

Expected votes: 3

Total votes: 3

Node votes: 1

Quorum: 2

Active subsystems: 9

Flags:

Ports Bound: 0 11 177

Node name: node1

Node ID: 1

Multicast addresses: 239.192.105.112

Node addresses: 192.168.1.103
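The `Quorum: 2` line in this report follows from the expected votes: a majority of 3 votes is 2. The sketch below recomputes that threshold, assuming the usual majority rule (expected votes divided by two, rounded down, plus one) and no special two-node configuration. `quorum_for` and `check_quorum` are names invented for this example.

```shell
#!/bin/bash
# Sketch: recompute the quorum threshold from `cman_tool status` output.
# Rule assumed: quorum = expected_votes / 2 + 1 (integer division).
quorum_for() {
    echo $(( $1 / 2 + 1 ))
}

# Read a status report on stdin, pull out "Expected votes" and "Quorum",
# and report whether they agree with the rule.
check_quorum() {
    awk -F': *' '
        $1 == "Expected votes" { expected = $2 }
        $1 == "Quorum"         { quorum = $2 }
        END {
            want = int(expected / 2) + 1
            printf "expected=%d quorum=%d computed=%d\n", expected, quorum, want
            exit (quorum == want) ? 0 : 1
        }'
}

# Sample lines copied from the report above.
sample='Nodes: 3
Expected votes: 3
Total votes: 3
Node votes: 1
Quorum: 2'

echo "$sample" | check_quorum
```

On a cluster node, `cman_tool status | check_quorum` runs the same comparison against live data. With three one-vote nodes the cluster stays quorate after losing one node, which is why the failover test above could proceed with node1 offline.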