QEMU memory migration format

Contents

- Preface
- Memory image format
  - libvirt metadata
  - QEMU memory data
    - Migration model
    - Data structures
    - Overall layout
    - Marking the start of migration
    - Section analysis
      - configuration section
      - start section
      - part section
      - end section
      - full section
      - vmdescription section
- Q&A

Preface

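- QEMU's memory migration is built on the savevm and loadvm interfaces: savevm saves all memory and device state of a running virtual machine to an image file, and loadvm reads that state back from the image file to restore the virtual machine.

- Migrating a QEMU guest's memory out is done through savevm, and migrating it in through loadvm. libvirt uses these interfaces to implement not only memory migration but also memory snapshots. Memory migration live-migrates a running virtual machine from one node to another; a memory snapshot saves the memory of a running virtual machine to an image file, and restoring from the snapshot returns the virtual machine to the state it had when the snapshot was taken. Because both are built on savevm/loadvm, analyzing a snapshot image is equivalent to analyzing the static data of a memory migration. Following that idea, this article analyzes the image file saved by the libvirt save command to indirectly examine the content and format of a QEMU memory migration.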

Memory image format

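- The memory image analyzed here was produced with the command below. c75_test is the test virtual machine, and the argument after it is the output path of the memory image file:

virsh save c75_test /home/data/c75_test_save_cmd.mem

- The following command starts the virtual machine and restores it to the state it had at the time of the snapshot:

virsh restore /home/data/c75_test_save_cmd.mem

- The memory image generated by the save command consists of two parts: the first is written by libvirt and the second by QEMU. The metadata written by libvirt is used mainly to restore the memory snapshot and consists of a header, the XML and a cookie. The part written by QEMU is the memory data, which includes both the metadata describing the memory and the memory contents themselves. As shown in the figure below: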

libvirt metadata

- virQEMUSaveData is the data structure for the libvirt metadata:

#define QEMU_SAVE_MAGIC "LibvirtQemudSave"

#define QEMU_SAVE_PARTIAL "LibvirtQemudPart"

struct _virQEMUSaveHeader {

char magic[sizeof(QEMU_SAVE_MAGIC)-1];

uint32_t version;

uint32_t data_len;

uint32_t was_running;

uint32_t compressed;

uint32_t cookieOffset;

uint32_t unused[14];

};

typedef struct _virQEMUSaveData virQEMUSaveData;

typedef virQEMUSaveData *virQEMUSaveDataPtr;

struct _virQEMUSaveData {

virQEMUSaveHeader header;

char *xml;

char *cookie;

};

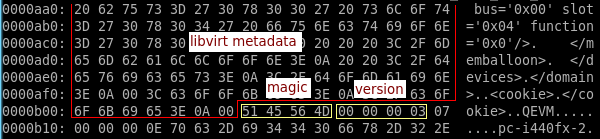

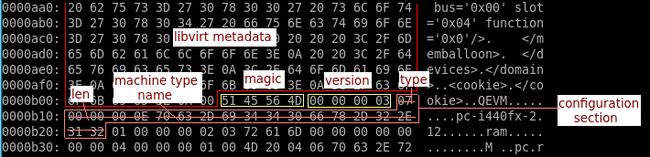

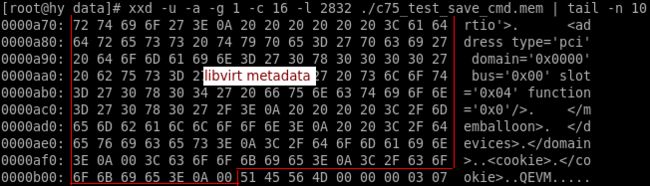

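- Dumping the first 128 bytes of the memory image shows that the libvirt metadata header occupies the first 92 (0x5C) bytes, followed by the XML content, as shown below:

- The data_len field of the header is 0xAAB = 2731, meaning the XML and the cookie together are 2731 bytes long. Adding the 92-byte header gives the offset of the QEMU memory data within the memory image: 2731 + 92 = 2823 (0xB07), as shown below: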
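The offset arithmetic above can be sketched in C. The struct below mirrors the header quoted earlier; the names save_header and qemu_stream_offset are hypothetical and exist only for this illustration. It also assumes a common ABI on which the header needs no padding, which is what makes its size 92 bytes.

```c
#include <stddef.h>
#include <stdint.h>

#define QEMU_SAVE_MAGIC "LibvirtQemudSave"

/* Mirror of the header layout quoted above. Every field is naturally
 * aligned, so no padding is expected on common ABIs (an assumption). */
struct save_header {
    char magic[sizeof(QEMU_SAVE_MAGIC) - 1];   /* 16 bytes, no NUL */
    uint32_t version;
    uint32_t data_len;                         /* length of XML + cookie */
    uint32_t was_running;
    uint32_t compressed;
    uint32_t cookieOffset;
    uint32_t unused[14];
};

/* Hypothetical helper: offset of the QEMU stream inside the image,
 * i.e. header size plus the XML/cookie length. */
static size_t qemu_stream_offset(const struct save_header *h)
{
    return sizeof(*h) + h->data_len;
}
```

With data_len set to 0xAAB, the helper yields the 2823-byte offset computed in the text.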

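QEMU memory data

Migration model

- QEMU memory migration has three phases:

- Mark all memory pages dirty

- Iteratively migrate the dirty pages until the number remaining drops to a certain threshold

- Pause the virtual machine, migrate the remaining dirty pages in one pass, then migrate the device state and start the virtual machine on the destination

- The first phase marks every page dirty, so the first pass necessarily transfers all memory pages. If, before the second pass, the computed number of remaining dirty pages is already below the threshold, the virtual machine can be paused and the remaining dirty pages migrated in one go, so the ideal case is two iterations. When guest memory changes rapidly, dirty pages keep being produced and the count stays above the threshold; the faster memory changes, the harder it is for the migration to converge. In the worst case the dirty pages never drop below the threshold and the migration never completes.

- To address this, QEMU introduced the postcopy migration mode and refers to the traditional mode as precopy. The two models differ in when dirty pages are copied from the second pass onward: in precopy, dirty-page copying must finish before the destination virtual machine starts; in postcopy, it continues after the destination has started.

- Postcopy memory migration also has three phases:

- Migrate the device state

- Mark all memory pages dirty, copy all memory pages from the source to the destination, and start the virtual machine

- When the destination guest accesses a dirty page, a page fault is triggered and QEMU copies the corresponding memory from the source

- From this model it follows that as the destination guest's memory accesses cover more and more of the address space, fewer and fewer dirty pages remain to be copied, until none are left. The first access to any such address causes a page fault, which triggers a copy from the source. This article covers the memory migration format in precopy mode.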
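The convergence behaviour of precopy described above can be illustrated with a toy model. This is only a sketch under simplifying assumptions: a constant dirty rate, a constant transfer rate, and a page-count threshold. The function name precopy_passes and all of its parameters are hypothetical and do not correspond to QEMU code.

```c
#include <stdint.h>

/*
 * Toy model of precopy convergence. All parameters are hypothetical:
 *   total_pages    pages sent in the first pass (everything is dirty)
 *   dirty_per_sec  pages the guest dirties per second
 *   send_per_sec   pages the link transfers per second (must be > 0)
 *   threshold      remaining-page count at which the VM is paused
 * Returns the number of iterative passes before the final stop-and-copy,
 * or -1 if the remainder is still above the threshold after max_passes.
 */
static int precopy_passes(uint64_t total_pages, uint64_t dirty_per_sec,
                          uint64_t send_per_sec, uint64_t threshold,
                          int max_passes)
{
    uint64_t remaining = total_pages;
    int passes = 0;

    while (remaining > threshold) {
        if (passes == max_passes) {
            return -1;   /* dirty pages never drop below the threshold */
        }
        /* Sending `remaining` pages takes remaining / send_per_sec seconds;
         * the pages dirtied in that time are the next pass's workload. */
        remaining = remaining * dirty_per_sec / send_per_sec;
        passes++;
    }
    return passes;
}
```

In this model the remainder shrinks by the factor dirty_per_sec / send_per_sec on every pass, so a guest that dirties memory as fast as the link can send it never converges.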

数据结构

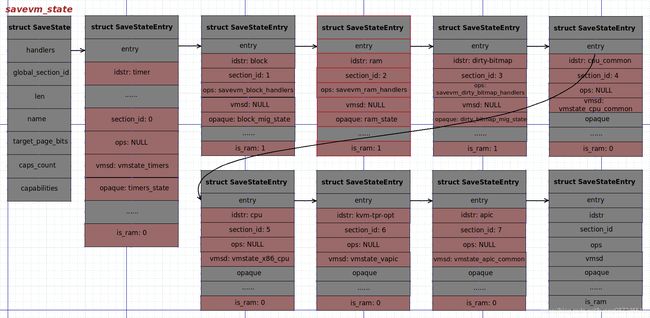

- SaveStateEntry是内存迁移的静态单位,它是一个可迁移信息的抽象,迁移的实现原理就是将一个个SaveStateEntry传送到目的端。SaveStateEntry包含的信息可以是内存页(pages),可以是设备状态(VMState),通过其is_ram成员可以区分。对于运行的虚拟机,这些信息随时可能改变,是动态变化的,因此SaveStateEntry还必须包含收集这些信息的操作函数。SaveStateEntry数据结构如下:

typedef struct SaveStateEntry {

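QTAILQ_ENTRY(SaveStateEntry) entry; // all SaveStateEntry objects are kept in a queue maintained by the global savevm_state.handlers; entry links this one into that queue

char idstr[256]; /* QEMU groups migratable information of the same kind into a SaveStateEntry, e.g. timer, ram, dirty-bitmap, apic; idstr is the class name of that kind */

int instance_id; /* distinguishes different entries that share the same idstr */

/* SaveStateEntry.idstr only names the type shared by entries of the same kind;

* different instances of that kind sit on the savevm_state.handlers list

* and are told apart by instance_id or alias_id

*/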

int alias_id;

int version_id;

/* version id read from the stream

* (the VMState version ID received from the source)

*/

int load_version_id;

int section_id; /* migratable information is transferred in units of sections; QEMU assigns an id, counting up from 0, to each entry added to the savevm_state.handlers list */

/* section id read from the stream */

int load_section_id;

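const SaveVMHandlers *ops; // operations for collecting memory information; for ram, ops includes the setup performed before transfer, the transfer itself, and so on

const VMStateDescription *vmsd; // device state information, including the operations that collect it, e.g. saving and loading device state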

void *opaque;

CompatEntry *compat;

int is_ram; // tells whether this SaveStateEntry holds memory information or device state information

} SaveStateEntry;

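- SaveState manages all SaveStateEntry objects. It adds each of them to its handlers member and uses global_section_id to assign every entry a section_id: global_section_id starts at 0 and is incremented by 1 each time an entry is added. The SaveState data structure is as follows: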

typedef struct SaveState {

QTAILQ_HEAD(, SaveStateEntry) handlers;

int global_section_id;

uint32_t len;

const char *name;

uint32_t target_page_bits;

uint32_t caps_count;

MigrationCapability *capabilities;

} SaveState;

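- The core of memory migration is a traversal of the handlers member of the global variable savevm_state. handlers is a queue whose elements are SaveStateEntry objects: migrating memory means transferring the entries that hold memory information (is_ram), and migrating device state means transferring the entries that hold device state. The global variable savevm_state is defined as follows: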

static SaveState savevm_state = {

.handlers = QTAILQ_HEAD_INITIALIZER(savevm_state.handlers),

.global_section_id = 0,

};

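- The most important SaveStateEntry to migrate is the ram entry, whose idstr is "ram". It covers all the virtual memory QEMU has requested from the host for the guest to use, which is the bulk of what memory migration has to move. The ram SaveStateEntry is registered as follows: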

void ram_mig_init(void)

{

qemu_mutex_init(&XBZRLE.lock);

register_savevm_live(NULL, "ram", 0, 4, &savevm_ram_handlers, &ram_state);

}

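- To summarize, QEMU's migration design classifies everything that has to be migrated and abstracts each class into a SaveStateEntry structure. Memory is one major class and ordinary devices are the other, and the SaveStateEntry objects of both classes are kept on one global list. Migration walks that list and moves the data through the methods each structure provides.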
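The registration and traversal scheme summarized above can be sketched with heavily simplified stand-ins for SaveStateEntry and SaveState. The types entry and state and the helpers register_entry and count_entries are hypothetical; real QEMU uses QTAILQ macros and many more fields.

```c
#include <stddef.h>
#include <string.h>

/* Simplified stand-in for SaveStateEntry (illustration only). */
struct entry {
    char idstr[32];
    int section_id;
    int is_ram;
    struct entry *next;
};

/* Simplified stand-in for SaveState (illustration only). */
struct state {
    struct entry *head, *tail;
    int global_section_id;
};

/* Append an entry and hand it the next section id (0, 1, 2, ...). */
static void register_entry(struct state *s, struct entry *e,
                           const char *idstr, int is_ram)
{
    strncpy(e->idstr, idstr, sizeof(e->idstr) - 1);
    e->idstr[sizeof(e->idstr) - 1] = '\0';
    e->is_ram = is_ram;
    e->section_id = s->global_section_id++;
    e->next = NULL;
    if (s->tail) {
        s->tail->next = e;
    } else {
        s->head = e;
    }
    s->tail = e;
}

/* Walk the list and count entries of one kind, the way a migration pass
 * selects either the memory entries or the device-state entries. */
static int count_entries(const struct state *s, int is_ram)
{
    int n = 0;

    for (const struct entry *e = s->head; e; e = e->next) {
        if (e->is_ram == is_ram) {
            n++;
        }
    }
    return n;
}
```

Registering "timer", "ram" and "apic" in that order gives them section ids 0, 1 and 2, and a pass that filters on is_ram selects only the "ram" entry.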

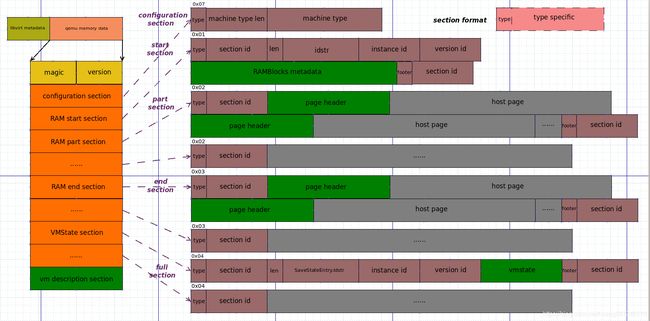

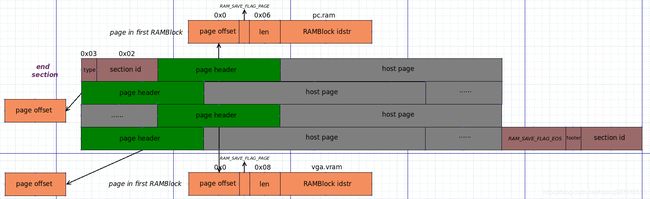

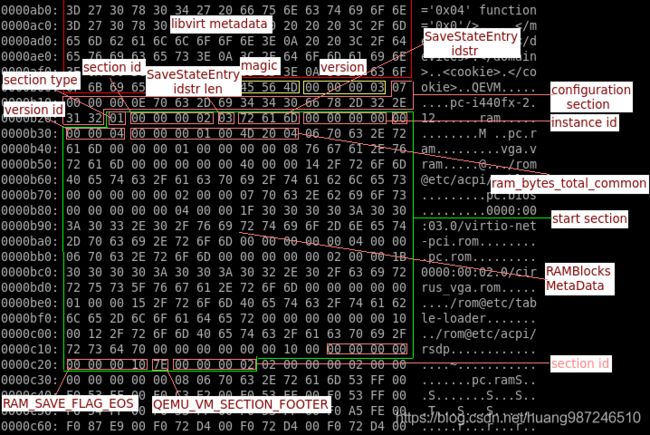

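Overall layout

- QEMU memory migration works in units of sections: everything migrated is wrapped in a section and written to the output stream. In precopy mode memory is transferred iteratively first; once the number of remaining dirty pages drops below the threshold, all remaining memory is transferred in one pass, followed by the device state. In short, the precopy order is memory first, then device state, and finally the virtual machine is started.

- The overall layout is as follows. First comes the magic that marks the start of a QEMU migration stream, then the version. Everything after that is organized in sections. The section format is shown in the upper right corner of the figure below: the first byte is the type field, and the rest varies with the section type. The section types are: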

#define QEMU_VM_SECTION_START 0x01

#define QEMU_VM_SECTION_PART 0x02

#define QEMU_VM_SECTION_END 0x03

#define QEMU_VM_SECTION_FULL 0x04

#define QEMU_VM_SUBSECTION 0x05

#define QEMU_VM_VMDESCRIPTION 0x06

#define QEMU_VM_CONFIGURATION 0x07

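- The first section is special: it is the configuration section. As the name suggests, it configures the migration. It is a device-state section that carries the machine type of the source virtual machine. When the destination parses it, it compares the machine type field with its own and refuses the migration if they differ, which prevents migration between a source and a destination with different machine types.

- The second section is also special: it holds the metadata for all migrated memory, including the total size of the memory covered by the memory SaveStateEntry objects and the length of each RAMBlock they refer to.

- From the third section onward come the sections for the memory SaveStateEntry objects, followed by the sections for the device SaveStateEntry objects.

- The last section describes the fields of all migrated device state.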
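To make the section framing more concrete, here is a sketch of a section header encoder. The text above only states that the first byte is the type. The remaining fields (a big-endian section id for every section, plus idstr, instance_id and version_id for start and full sections) are an assumption based on save_section_header in QEMU's migration/savevm.c and should be checked against the source tree being analyzed. The helper names put_be32 and encode_section_header are hypothetical.

```c
#include <stddef.h>
#include <stdint.h>
#include <string.h>

#define QEMU_VM_SECTION_START 0x01
#define QEMU_VM_SECTION_PART  0x02
#define QEMU_VM_SECTION_END   0x03
#define QEMU_VM_SECTION_FULL  0x04

/* Store a 32-bit value in big-endian order; returns bytes written. */
static size_t put_be32(uint8_t *p, uint32_t v)
{
    p[0] = (uint8_t)(v >> 24);
    p[1] = (uint8_t)(v >> 16);
    p[2] = (uint8_t)(v >> 8);
    p[3] = (uint8_t)v;
    return 4;
}

/*
 * Encode a section header into buf and return its length. The caller
 * must provide room for up to 1 + 4 + 1 + 255 + 4 + 4 bytes.
 * Assumed layout: type, section_id, and for START/FULL sections also
 * idstr length, idstr, instance_id, version_id.
 */
static size_t encode_section_header(uint8_t *buf, uint8_t type,
                                    uint32_t section_id, const char *idstr,
                                    uint32_t instance_id, uint32_t version_id)
{
    size_t n = 0;

    buf[n++] = type;
    n += put_be32(buf + n, section_id);
    if (type == QEMU_VM_SECTION_START || type == QEMU_VM_SECTION_FULL) {
        size_t len = strlen(idstr);   /* assumed to be < 256 */

        buf[n++] = (uint8_t)len;
        memcpy(buf + n, idstr, len);
        n += len;
        n += put_be32(buf + n, instance_id);
        n += put_be32(buf + n, version_id);
    }
    return n;
}
```

Under these assumptions a start section for "ram" has a 17-byte header, while a part or end section header is only 5 bytes, because the destination can already map the section id back to its entry.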

Marking the start of migration

- On the source, migration starts in migration_thread. qemu_savevm_state_header sends the magic and the version, and may also send the configuration section:

#define QEMU_VM_FILE_MAGIC 0x5145564d

#define QEMU_VM_FILE_VERSION 0x00000003

void qemu_savevm_state_header(QEMUFile *f)

{

trace_savevm_state_header();

qemu_put_be32(f, QEMU_VM_FILE_MAGIC); // send the "QEVM" magic

qemu_put_be32(f, QEMU_VM_FILE_VERSION); // send the version

if (migrate_get_current()->send_configuration) { // send the configuration section if it is enabled

qemu_put_byte(f, QEMU_VM_CONFIGURATION);

vmstate_save_state(f, &vmstate_configuration, &savevm_state, 0);

}

}

- On the destination, receiving starts in qemu_loadvm_state, which aborts the migration if the magic or the version does not match:

int qemu_loadvm_state(QEMUFile *f)

{

......

v = qemu_get_be32(f);

if (v != QEMU_VM_FILE_MAGIC) { // check the magic

error_report("Not a migration stream");

return -EINVAL;

}

......

if (v != QEMU_VM_FILE_VERSION) { // check the version

error_report("Unsupported migration stream version");

return -ENOTSUP;

}

......

}
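The same check can be applied offline to the bytes found at the QEMU data offset of a saved image. The sketch below is a standalone illustration, not QEMU code; get_be32 and check_stream_header are hypothetical helpers, and the return codes are chosen for this example only.

```c
#include <stddef.h>
#include <stdint.h>

#define QEMU_VM_FILE_MAGIC   0x5145564d   /* the bytes 'Q' 'E' 'V' 'M' */
#define QEMU_VM_FILE_VERSION 0x00000003

/* Read a big-endian 32-bit value, as qemu_get_be32 does on the stream. */
static uint32_t get_be32(const uint8_t *p)
{
    return ((uint32_t)p[0] << 24) | ((uint32_t)p[1] << 16) |
           ((uint32_t)p[2] << 8) | (uint32_t)p[3];
}

/*
 * Check the first 8 bytes of the QEMU stream.
 * Returns 0 on success, -1 for a short buffer or a bad magic,
 * -2 for an unsupported version.
 */
static int check_stream_header(const uint8_t *buf, size_t len)
{
    if (len < 8 || get_be32(buf) != QEMU_VM_FILE_MAGIC) {
        return -1;
    }
    if (get_be32(buf + 4) != QEMU_VM_FILE_VERSION) {
        return -2;
    }
    return 0;
}
```

Because both values are written big-endian, the magic appears in a hex dump as the readable ASCII string QEVM followed by 00 00 00 03.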

Section analysis

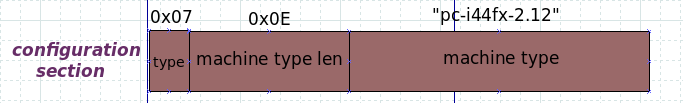

configuration section

- The configuration section is a VMState section. The VMStateDescription it transfers is as follows:

static const VMStateDescription vmstate_configuration = {

.name = "configuration",

.version_id = 1,

.pre_load = configuration_pre_load,

.post_load = configuration_post_load,

.pre_save = configuration_pre_save, // collects the VMState information before it is saved

.fields = (VMStateField[]) {

VMSTATE_UINT32(len, SaveState),

VMSTATE_VBUFFER_ALLOC_UINT32(name, SaveState, 0, NULL, len),

VMSTATE_END_OF_LIST()

},

.subsections = (const VMStateDescription*[]) {

&vmstate_target_page_bits,

&vmstate_capabilities,

NULL

}

};

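- vmstate_configuration is a VMState. The len and name members of SaveState are always sent, because they appear in the vmstate_configuration.fields array, and fields lists what a VMState must transfer. The pre_save member is the function that collects the VMState information: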

static int configuration_pre_save(void *opaque)

{

SaveState *state = opaque;

const char *current_name = MACHINE_GET_CLASS(current_machine)->name; // look up the machine type

......

state->len = strlen(current_name); // length of the machine type name

state->name = current_name; // the machine type name itself

......

}

- On the receiving side, qemu_loadvm_state checks whether the configuration section was sent. If one is expected but missing, loading is aborted:

qemu_loadvm_state

if (migrate_get_current()->send_configuration) {

if (qemu_get_byte(f) != QEMU_VM_CONFIGURATION) { // abort if the configuration section is missing

error_report("Configuration section missing");

qemu_loadvm_state_cleanup();

return -EINVAL;

}

ret = vmstate_load_state(f, &vmstate_configuration, &savevm_state, 0);

......

}

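- If the configuration section was sent, the destination first checks whether the VMState version from the source is higher than its own and aborts if it is. It then checks whether the machine type matches the local one and aborts if it does not: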

vmstate_load_state(f, &vmstate_configuration, &savevm_state, 0)

static int configuration_post_load(void *opaque, int version_id)

{

SaveState *state = opaque;

const char *current_name = MACHINE_GET_CLASS(current_machine)->name;

/* compare the received machine type with the local one; abort if they differ */

if (strncmp(state->name, current_name, state->len) != 0) {

error_report("Machine type received is '%.*s' and local is '%s'",

(int) state->len, state->name, current_name);

return -EINVAL;

}

......

}
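One detail of the comparison quoted above is worth spelling out: strncmp is limited to the received length, so only the first state->len bytes of the local name take part in the check. The sketch below reproduces that comparison in isolation and contrasts it with a stricter variant. Both function names are hypothetical, and the strict variant is an illustration rather than QEMU code.

```c
#include <stddef.h>
#include <string.h>

/* Same comparison as in the configuration_post_load excerpt above:
 * only the first `len` received bytes are compared with the local name. */
static int machine_type_matches(const char *received, size_t len,
                                const char *local)
{
    return strncmp(received, local, len) == 0;
}

/* Stricter variant (illustration only): the lengths must agree as well. */
static int machine_type_matches_strict(const char *received, size_t len,
                                       const char *local)
{
    return strlen(local) == len && memcmp(received, local, len) == 0;
}
```

With the first form, a received name that is a prefix of the local machine type name is accepted, whereas the strict form rejects it.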

start section

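- qemu_savevm_state_setup sends the start section. It walks the global savevm_state.handlers list and uses ops, ops->save_setup and ops->is_active to filter out the SaveStateEntry objects that do not qualify. This eventually reaches the SaveStateEntry for ram and calls its save_setup callback, ram_save_setup: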

void qemu_savevm_state_setup(QEMUFile *f)

{

SaveStateEntry *se;

Error *local_err = NULL;

int ret;

trace_savevm_state_setup();

QTAILQ_FOREACH(se, &savevm_state.handlers, entry) {

if (!se->ops || !se->ops->save_setup) {

continue;

}

if (se->ops && se->ops->is_active) {

if (!se->ops->is_active(se->opaque)) {

continue;

}

}

save_section_header(f, se, QEMU_VM_SECTION_START);

ret = se->ops->save_setup(f, se->opaque);

save_section_footer(f, se);

......

}

}

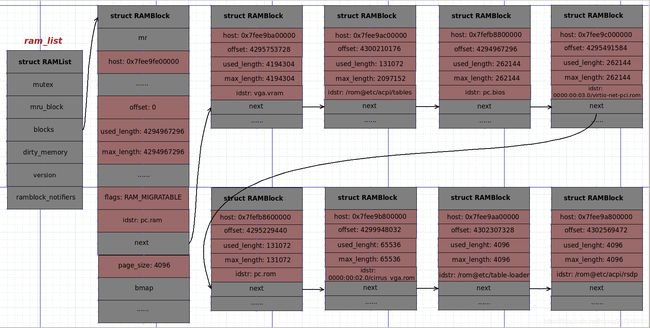

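- savevm_state is organized as shown in the figure. The entry with section_id 2 is the ram entry; it is selected during the setup traversal and its save_setup callback, ram_save_setup, is called.

- ram_save_setup walks every RAMBlock on ram_list.blocks and sends its length and idstr: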

static int ram_save_setup(QEMUFile *f, void *opaque)

{

RAMState **rsp = opaque;

RAMBlock *block;

......

/* send the total RAM length */

qemu_put_be64(f, ram_bytes_total_common(true) | RAM_SAVE_FLAG_MEM_SIZE);

/* for every RAMBlock on ram_list.blocks, send its idstr and used length */

RAMBLOCK_FOREACH_MIGRATABLE(block) {

qemu_put_byte(f, strlen(block->idstr));

qemu_put_buffer(f, (uint8_t *)block->idstr, strlen(block->idstr));

qemu_put_be64(f, block->used_length);

if (migrate_postcopy_ram() && block->page_size != qemu_host_page_size) {

qemu_put_be64(f, block->page_size);

}

if (migrate_ignore_shared()) {

qemu_put_be64(f, block->mr->addr);

qemu_put_byte(f, ramblock_is_ignored(block) ? 1 : 0);

}

}

......

/* mark the end of the data */

qemu_put_be64(f, RAM_SAVE_FLAG_EOS);

qemu_fflush(f);

......

}
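The byte layout produced by ram_save_setup can be sketched as a small encoder and a matching reader. Several things here are assumptions rather than facts from the text: the flag values 0x04 and 0x10 are taken from QEMU's migration/ram.c as recalled, the optional postcopy and ignore-shared fields are assumed to be disabled, and the target page size is assumed to be 4 KiB so that the flag bits can be masked out of the first word. The types and function names are hypothetical.

```c
#include <stddef.h>
#include <stdint.h>
#include <string.h>

#define RAM_SAVE_FLAG_MEM_SIZE 0x04                /* assumed value */
#define RAM_SAVE_FLAG_EOS      0x10                /* assumed value */
#define PAGE_MASK_4K           (~(uint64_t)0xfff)  /* assumes 4 KiB pages */

struct block_meta {
    const char *idstr;
    uint64_t used_length;   /* expected to be page aligned */
};

static size_t put_be64(uint8_t *p, uint64_t v)
{
    for (int i = 0; i < 8; i++) {
        p[i] = (uint8_t)(v >> (56 - 8 * i));
    }
    return 8;
}

static uint64_t get_be64(const uint8_t *p)
{
    uint64_t v = 0;

    for (int i = 0; i < 8; i++) {
        v = (v << 8) | p[i];
    }
    return v;
}

/* Write the block list the way ram_save_setup does (optional fields off). */
static size_t encode_ram_setup(uint8_t *buf, const struct block_meta *b,
                               size_t nb)
{
    uint64_t total = 0;
    size_t n = 0;

    for (size_t i = 0; i < nb; i++) {
        total += b[i].used_length;
    }
    n += put_be64(buf + n, total | RAM_SAVE_FLAG_MEM_SIZE);
    for (size_t i = 0; i < nb; i++) {
        size_t len = strlen(b[i].idstr);

        buf[n++] = (uint8_t)len;
        memcpy(buf + n, b[i].idstr, len);
        n += len;
        n += put_be64(buf + n, b[i].used_length);
    }
    n += put_be64(buf + n, RAM_SAVE_FLAG_EOS);
    return n;
}

/* Read the list back: returns the block count, or -1 on malformed input. */
static int count_ram_blocks(const uint8_t *buf, size_t len)
{
    size_t pos = 8;
    int blocks = 0;
    uint64_t total;

    if (len < 8 || !(get_be64(buf) & RAM_SAVE_FLAG_MEM_SIZE)) {
        return -1;
    }
    total = get_be64(buf) & PAGE_MASK_4K;
    while (total > 0) {
        size_t idlen;
        uint64_t used;

        if (pos + 1 > len) {
            return -1;
        }
        idlen = buf[pos++];
        if (pos + idlen + 8 > len) {
            return -1;
        }
        used = get_be64(buf + pos + idlen);
        pos += idlen + 8;
        if (used == 0 || used > total) {
            return -1;   /* simplification: reject empty or oversized blocks */
        }
        total -= used;
        blocks++;
    }
    if (pos + 8 > len || get_be64(buf + pos) != RAM_SAVE_FLAG_EOS) {
        return -1;
    }
    return blocks;
}
```

The reader stops when the announced total has been consumed, which is why the total length is sent first: the list itself carries no explicit block count.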

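- ram_list is a global variable that tracks all the virtual memory QEMU has requested from the host, organized as a linked list. Each element is a RAMBlock, which represents one region of memory the host has allocated to QEMU; QEMU uses these regions to emulate guest memory. The RAMBlock data structure is as follows: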

struct RAMBlock {

struct rcu_head rcu;

struct MemoryRegion *mr;

uint8_t *host; /* HVA: host virtual address of the memory QEMU requested from the host */

uint8_t *colo_cache; /* For colo, VM's ram cache */

ram_addr_t offset; /* offset of this block in the ram_addr_t address space */

ram_addr_t used_length; /* length of memory in use */

ram_addr_t max_length; /* length of memory allocated */

void (*resized)(const char*, uint64_t length, void *host);

uint32_t flags;

/* Protected by iothread lock. */

char idstr[256];

/* RCU-enabled, writes protected by the ramlist lock */

QLIST_ENTRY(RAMBlock) next; /* link to the next element of the list */

QLIST_HEAD(, RAMBlockNotifier) ramblock_notifiers;

int fd;

size_t page_size;

/* dirty bitmap used during migration */

unsigned long *bmap;

/* bitmap of pages that haven't been sent even once

* only maintained and used in postcopy at the moment

* where it's used to send the dirtymap at the start

* of the postcopy phase

*/

unsigned long *unsentmap;

/* bitmap of already received pages in postcopy */

unsigned long *receivedmap;

};

- ram_list is organized as shown below:

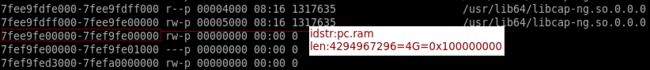

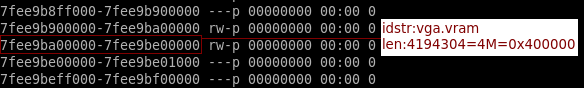

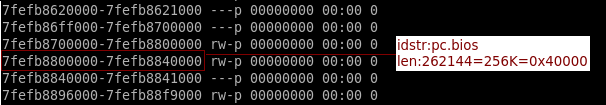

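- Pick a few of these RAMBlocks and find the vm_area_struct region each belongs to in the QEMU process's memory map. First list the entire virtual address space of the QEMU process with the command below, where qemu_pid stands for the PID of the QEMU process:

cat /proc/qemu_pid/maps | less

The following memory regions can be found in its output:

- The start section mainly sends RAMBlock metadata, chiefly each RAMBlock's idstr and used_length (the green part in the figure below). It also shows what QEMU's RAMBlocks consist of: familiar regions include pc.ram, vga.vram and pc.bios.

- Below is the analysis of the start section in the memory image: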

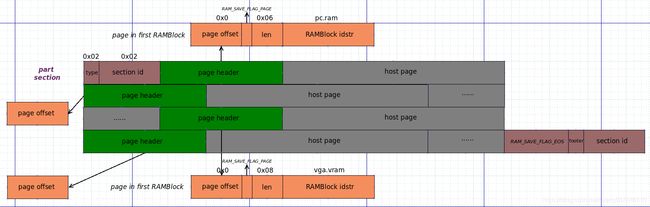

part section

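- The part section transfers memory pages and is the main content of a memory migration. It is issued from qemu_savevm_state_iterate, which, like qemu_savevm_state_setup, walks the global savevm_state.handlers list and calls the save_live_iterate function of every SaveStateEntry that qualifies: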

int qemu_savevm_state_iterate(QEMUFile *f, bool postcopy)

{

SaveStateEntry *se;

int ret = 1;

QTAILQ_FOREACH(se, &savevm_state.handlers, entry) {

if (!se->ops || !se->ops->save_live_iterate) {

continue;

}

if (se->ops && se->ops->is_active) {

if (!se->ops->is_active(se->opaque)) {

continue;

}

}

if (se->ops && se->ops->is_active_iterate) {

if (!se->ops->is_active_iterate(se->opaque)) {

continue;

}

}

......

/* a suitable SaveStateEntry was found; write the part section header first */

save_section_header(f, se, QEMU_VM_SECTION_PART);

/* call save_live_iterate; for the ram section this is ram_save_iterate */

ret = se->ops->save_live_iterate(f, se->opaque);

/* sending is done; mark the end of the section */

save_section_footer(f, se);

......

}

- ram_save_iterate walks all RAMBlocks on ram_list.blocks, finds the dirty pages from the bitmap, and migrates them. The call flow is:

ram_save_iterate

ram_find_and_save_block

pss.block = rs->last_seen_block

/* start from the first RAMBlock kept in ram_list */

if (!pss.block) {

pss.block = QLIST_FIRST_RCU(&ram_list.blocks);

}

/* find a dirty RAMBlock from the bitmap */

find_dirty_block(rs, &pss, &again)

/* look up the next dirty page in the bitmap and record its index if one is found */

pss->page = migration_bitmap_find_dirty(rs, pss->block, pss->page)

ram_save_host_page(rs, &pss, last_stage)

/* send the dirty page */

ram_save_target_page(rs, pss, last_stage)

ram_save_page

save_normal_page

save_page_header

qemu_put_buffer_async

- save_normal_page migrates a single memory page. It first sends the header describing the page, which mainly carries the page's offset within its RAMBlock:

save_page_header(rs, rs->f, block, offset | RAM_SAVE_FLAG_PAGE)

/**

* save_page_header: write page header to wire

*

* If this is the 1st block, it also writes the block identification

* (if the page belongs to a RAMBlock other than the last one sent, the RAMBlock's idstr is sent as well)

* Returns the number of bytes written

*

* @f: QEMUFile where to send the data

* @block: block that contains the page we want to send

* @offset: offset inside the block for the page

* in the lower bits, it contains flags

*/

static size_t save_page_header(RAMState *rs, QEMUFile *f, RAMBlock *block,

ram_addr_t offset)

{

size_t size, len;

if (block == rs->last_sent_block) {

offset |= RAM_SAVE_FLAG_CONTINUE;

}

qemu_put_be64(f, offset); /* send the page's offset within the RAMBlock region */

size = 8;

if (!(offset & RAM_SAVE_FLAG_CONTINUE)) {

len = strlen(block->idstr);

qemu_put_byte(f, len);

qemu_put_buffer(f, (uint8_t *)block->idstr, len);

size += 1 + len;

rs->last_sent_block = block;

}

return size;

}

- After the header, the page content itself is sent, which is the whole point of memory migration:

/*

* directly send the page to the stream

*

* Returns the number of pages written.

*

* @rs: current RAM state

* @block: block that contains the page we want to send

* @offset: offset inside the block for the page

* @buf: the page to be sent

* @async: send to page asyncly

*/

static int save_normal_page(RAMState *rs, RAMBlock *block, ram_addr_t offset,

uint8_t *buf, bool async)

{

ram_counters.transferred += save_page_header(rs, rs->f, block,

offset | RAM_SAVE_FLAG_PAGE);

if (async) {

qemu_put_buffer_async(rs->f, buf, TARGET_PAGE_SIZE,

migrate_release_ram() &

migration_in_postcopy());

} else {

qemu_put_buffer(rs->f, buf, TARGET_PAGE_SIZE);

}

ram_counters.transferred += TARGET_PAGE_SIZE;

ram_counters.normal++;

return 1;

}

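- The content of a part section looks as follows, taking the pc.ram RAMBlock as an example. When the first page of pc.ram is sent, QEMU sees that it belongs to a new RAMBlock, so the page header carries the RAMBlock's idstr in addition to the mandatory offset. Later pages of pc.ram carry only the offset within the RAMBlock. Other RAMBlocks such as vga.vram and pc.bios are handled the same way.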
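The page header rule described above can be reproduced in a standalone sketch that writes into a byte buffer instead of a QEMUFile. The flag values 0x08 and 0x20 are assumed from QEMU's migration/ram.c, and the sender struct and encode_page_header function are hypothetical stand-ins for RAMState and save_page_header.

```c
#include <stddef.h>
#include <stdint.h>
#include <string.h>

#define RAM_SAVE_FLAG_PAGE     0x08   /* assumed value */
#define RAM_SAVE_FLAG_CONTINUE 0x20   /* assumed value */

/* Minimal stand-in for the part of RAMState the header logic needs. */
struct sender {
    const char *last_sent_block;   /* idstr of the last block sent, or NULL */
};

static size_t put_be64(uint8_t *p, uint64_t v)
{
    for (int i = 0; i < 8; i++) {
        p[i] = (uint8_t)(v >> (56 - 8 * i));
    }
    return 8;
}

/*
 * Encode the header of one normal page and return the bytes written.
 * The offset is page aligned, so its low bits are free to carry flags.
 */
static size_t encode_page_header(struct sender *s, uint8_t *buf,
                                 const char *block_idstr, uint64_t offset)
{
    size_t n;

    offset |= RAM_SAVE_FLAG_PAGE;
    if (s->last_sent_block && strcmp(s->last_sent_block, block_idstr) == 0) {
        offset |= RAM_SAVE_FLAG_CONTINUE;   /* same block as the last page */
    }
    n = put_be64(buf, offset);
    if (!(offset & RAM_SAVE_FLAG_CONTINUE)) {
        size_t len = strlen(block_idstr);

        buf[n++] = (uint8_t)len;            /* new block: append its idstr */
        memcpy(buf + n, block_idstr, len);
        n += len;
        s->last_sent_block = block_idstr;
    }
    return n;
}
```

The first page of pc.ram therefore costs 15 header bytes (8 for the offset word, 1 for the name length, 6 for the name), and every following page of the same block costs 8.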

end section

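- After one pass over memory, the amount of remaining dirty memory is computed before the next pass and compared with the threshold. If it is at or above the threshold, migration continues; if it is below, the migration_completion path is taken. migration_iteration_run is the iteration function: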

/*

* Return true if continue to the next iteration directly, false

* otherwise.

*/

static MigIterateState migration_iteration_run(MigrationState *s)

{

uint64_t pending_size, pend_pre, pend_compat, pend_post;

bool in_postcopy = s->state == MIGRATION_STATUS_POSTCOPY_ACTIVE;

/* pending_size: total amount of dirty memory still to be sent */

qemu_savevm_state_pending(s->to_dst_file, s->threshold_size, &pend_pre,

&pend_compat, &pend_post);

pending_size = pend_pre + pend_compat + pend_post;

trace_migrate_pending(pending_size, s->threshold_size,

pend_pre, pend_compat, pend_post);

/* keep migrating while the remaining amount is at or above the threshold */

if (pending_size && pending_size >= s->threshold_size) {

/* Still a significant amount to transfer */

if (migrate_postcopy() && !in_postcopy &&

pend_pre <= s->threshold_size &&

atomic_read(&s->start_postcopy)) {

if (postcopy_start(s)) {

error_report("%s: postcopy failed to start", __func__);

}

return MIG_ITERATE_SKIP;

}

/* Just another iteration step */

qemu_savevm_state_iterate(s->to_dst_file,

s->state == MIGRATION_STATUS_POSTCOPY_ACTIVE);

} else { /* below the threshold: enter the completion phase */

trace_migration_thread_low_pending(pending_size);

migration_completion(s);

return MIG_ITERATE_BREAK;

}

return MIG_ITERATE_RESUME;

}

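- Once migration_completion has been called to enter the completion phase, it calls qemu_savevm_state_complete_precopy. That function works much like qemu_savevm_state_iterate: it walks all SaveStateEntry objects and, on reaching the ram SaveStateEntry, sends the remaining memory content of all its RAMBlocks in one pass: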

int qemu_savevm_state_complete_precopy(QEMUFile *f, bool iterable_only,

bool inactivate_disks)

{

QJSON *vmdesc;

int vmdesc_len;

SaveStateEntry *se;

int ret;

......

QTAILQ_FOREACH(se, &savevm_state.handlers, entry) {

if (!se->ops ||

(in_postcopy && se->ops->has_postcopy &&

se->ops->has_postcopy(se->opaque)) ||

(in_postcopy && !iterable_only) ||

!se->ops->save_live_complete_precopy) {

continue;

}

if (se->ops && se->ops->is_active) {

if (!se->ops->is_active(se->opaque)) {

continue;

}

}

trace_savevm_section_start(se->idstr, se->section_id);

save_section_header(f, se, QEMU_VM_SECTION_END);

ret = se->ops->save_live_complete_precopy(f, se->opaque);

trace_savevm_section_end(se->idstr, se->section_id, ret);

save_section_footer(f, se);

......

}

......

}

full section

- QEMU migrates each device state (VMState) in a full section. In qemu_savevm_state_complete_precopy, the VMState migration follows immediately after the remaining memory has been sent:

int qemu_savevm_state_complete_precopy(QEMUFile *f, bool iterable_only,

bool inactivate_disks)

{

......

/* the JSON object records every migrated VMState and, if required, is sent to the destination when migration finishes */

vmdesc = qjson_new();

json_prop_int(vmdesc, "page_size", qemu_target_page_size());

json_start_array(vmdesc, "devices");

QTAILQ_FOREACH(se, &savevm_state.handlers, entry) {

/* an entry with no vmsd (and no save_state op) is a memory section; skip it */

if ((!se->ops || !se->ops->save_state) && !se->vmsd) {

continue;

}

if (se->vmsd && !vmstate_save_needed(se->vmsd, se->opaque)) {

trace_savevm_section_skip(se->idstr, se->section_id);

continue;

}

......

json_start_object(vmdesc, NULL);

json_prop_str(vmdesc, "name", se->idstr);

json_prop_int(vmdesc, "instance_id", se->instance_id);

/* write a section header of type full */

save_section_header(f, se, QEMU_VM_SECTION_FULL);

/* migrate the VMState */

ret = vmstate_save(f, se, vmdesc);

......

/* write the section footer */

save_section_footer(f, se);

json_end_object(vmdesc);

}

......

}

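- A VMStateDescription describes a VMState. Put simply, a VMState is the state of a device in QEMU's device model: every device state has its own structure, and VMState is the QEMU term for those structures. The main job of a VMStateDescription is to tell QEMU, during migration, which members of the VMState structure must be migrated and which need not be. Because QEMU's device state structures have to stay compatible across versions, this information is both necessary and useful.

- Suppose a newer QEMU, say qemu-3.0, adds a member A to some VMState structure, and A must be sent during migration. Between identical versions the source sends A and the destination receives it; the VMState structure is the same on both sides, so this works. But if the source is an older qemu-2.0 that lacks member A, its virtual machines cannot be migrated to the newer version: the destination checks whether the source sent A, and when it did not, the expectation is violated and the migration fails.

- To solve this, QEMU added a version_id field to the VMState description, introduced the subsections field, and gave every field its own version_id, all so that device state can still be migrated successfully. Explaining this mechanism properly takes more space, so a separate article on how VMState migration works will follow when time permits.

- TODO: how VMState migration works

vmdescription section

- The vmdescription section is optional and can only be sent in precopy. While migrating VMState, QEMU records the idstr and instance_id of each VMState, assembles them into a JSON string, and sends it to the destination once the VMState migration is complete.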

Q&A

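Q: How are SaveStateEntry and section related in the migration implementation, and why are both described as the basic unit of migration?

A: SaveStateEntry and section are not directly related. SaveStateEntry is the data structure QEMU uses to organize migration; what it gives QEMU is the set of operations for migrating one class of device. A section is the basic unit of the dynamic migration stream and mainly serves to keep the two ends in sync. Strictly speaking, the section is the basic unit of the migration data stream, while SaveStateEntry only wraps the migration information as a data structure; the two do not map one-to-one.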