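Converting a PyTorch .pth model to a TensorFlow .pb model

How do you convert a trained PyTorch model into a TensorFlow .pb model?

My first attempt went through ONNX: pytorch ---> onnx ----> tensorflow

After converting ONNX to .pb, loading the .pb model failed with: in __call__ raise ValueError("callback %s is not found" % token) ValueError: callback pyfunc_0 is not found. I went through a lot of material without solving it (see https://github.com/onnx/onnx-tensorflow/issues/167). Opinions on this issue differ; some people report that converting resnet18 does not trigger it. The behavior seemed unpredictable, so I gave up on converting ONNX directly to .pb.

Here is the approach I ended up using.

The overall pipeline is pytorch--->onnx--->keras---->pb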

1. Download the project from https://github.com/nerox8664/pytorch2keras and install pytorch2keras

pip install pytorch2keras

git clone https://github.com/nerox8664/pytorch2keras.git

2. Install the dependencies: onnx, keras, tensorflow, numpy, torch, torchvision, onnx2keras

The versions I used:

- tensorflow-gpu==1.12.0

- onnx2keras==1.1.17

- pytorch2keras==0.2.3

- torch==1.1.0

- keras==2.2.4

- torchvision==0.4.2

3. Create torch2tf.py in the pytorch2keras directory

# -*- coding: utf-8 -*-
import os
import os.path as osp
import sys

sys.path.append('/workspace/pytorch2keras')  ## path to the cloned project

os.environ['KERAS_BACKEND'] = 'tensorflow'
os.environ["CUDA_VISIBLE_DEVICES"] = "1"

import cv2
import numpy as np
import tensorflow as tf
import torch
from keras import backend as K
from pytorch2keras.converter import pytorch_to_keras
from tensorflow.python.framework import graph_util, graph_io
from tensorflow.python.keras.backend import get_session
from tensorflow.python.keras.models import load_model
from torch.autograd import Variable
from torchsummary import summary

import test  ## your own module; test.main() returns the loaded PyTorch model

K.clear_session()
K.set_image_dim_ordering('tf')
# K.set_image_data_format('channels_first')


def softmax(x):
    exp_x = np.exp(x)
    softmax_x = exp_x / np.sum(exp_x)
    return softmax_x


def check_error(output, k_model, input_np, epsilon=1e-3):
    """Compare the PyTorch output with the Keras prediction for the same input."""
    pytorch_output = output[0].data.cpu().numpy()
    keras_output = k_model.predict(input_np)
    keras_output = keras_output[0]
    ## largest signed difference, so the printed value can be negative
    error = np.max(pytorch_output - keras_output)
    print('Error:', error)
    assert error < epsilon
    return error


def normalization0_1(data):
    """Min-max scale an HWC image to [0, 1], then standardize each channel."""
    _range = np.max(data) - np.min(data)
    data = (data - np.min(data)) / _range
    mean = [0.485, 0.456, 0.406]
    std_ad = [0.229, 0.224, 0.225]
    return np.divide(np.subtract(data, mean), std_ad)


def h5_to_pb(h5_model, output_dir, model_name, out_prefix="output_"):
    if not osp.exists(output_dir):
        os.mkdir(output_dir)
    out_nodes = ["output_0_1"]  ## get from init_graph
    # out_nodes.append(out_prefix + str(0))
    tf.identity(h5_model.output[0], out_prefix + str(0))
    sess = get_session()
    init_graph = sess.graph.as_graph_def()  ## get out_nodes
    main_graph = graph_util.convert_variables_to_constants(sess, init_graph, out_nodes)
    graph_io.write_graph(main_graph, output_dir, name=model_name, as_text=False)


if __name__ == '__main__':
    ##step1: load pytorch model
    model = test.main()
    model = model.cuda()  ##cuda
    summary(model, (3, 448, 448))  ##summary(model, (channels, pic_h, pic_w))
    model.eval()

    ##step2: pytorch .pth to keras .h5 and test .h5
    input_np = np.random.uniform(0, 1, (1, 3, 448, 448))
    input_var = Variable(torch.FloatTensor(input_np)).cuda()  ##cuda
    # input_var = Variable(torch.FloatTensor(input_np))
    k_model = pytorch_to_keras(model, input_var, (3, 448, 448,), verbose=True, name_policy='short')
    k_model.summary()
    k_model.save('my_model.h5')
    output = model(input_var)
    check_error(output, k_model, input_np)  ## check the error between .pth and .h5

    ##step3: load .h5 and .h5 to .pb
    tf.keras.backend.clear_session()
    tf.keras.backend.set_learning_phase(0)  ## required, do not omit
    my_model = load_model('my_model.h5')
    h5_to_pb(my_model, output_dir='./model/', model_name='model.pb')

    ##step4: load .pb and test .pb
    pb_path = './model/model.pb'
    with tf.Session() as sess:
        tf.global_variables_initializer().run()
        graph_def = tf.GraphDef()
        with tf.gfile.GFile(pb_path, 'rb') as f:
            graph_def.ParseFromString(f.read())
            _ = tf.import_graph_def(graph_def, name="")

        input_tensor = sess.graph.get_tensor_by_name("input_0:0")
        output_tensor = sess.graph.get_tensor_by_name("output_0_1:0")

        pic_file = './datasets/data'
        pic_list = os.listdir(pic_file)
        for name in pic_list:
            img_path = '{}/{}'.format(pic_file, name)
            im = cv2.imread(img_path)
            im = cv2.cvtColor(im, cv2.COLOR_BGR2RGB)
            img = cv2.resize(im, (448, 448))
            img = np.asarray(img, dtype=np.float32)
            img = normalization0_1(img)
            img_data = np.transpose(img, (2, 0, 1))  ## HWC -> CHW
            img_input = np.asarray(img_data, dtype=np.float32)[np.newaxis, :, :, :]
            pre_label = sess.run([output_tensor], feed_dict={input_tensor: img_input})
            pre_label = pre_label[0][0]
            # print(pre_label)
            pre_label = np.argmax(softmax(pre_label))
            print('------------------------')
            print('{} prelabel is {}'.format(name, pre_label))
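
The softmax helper in the script exponentiates the raw logits, which can overflow for large values. A common variant subtracts the maximum first. The sketch below assumes a 1-D logits vector; stable_softmax is a hypothetical name, not something from the script. It also shows that argmax over the logits gives the same label as argmax over the softmax output, so the softmax call is only needed when probabilities are wanted.

```python
import numpy as np


def stable_softmax(logits):
    """Softmax that subtracts the max logit first to avoid overflow in exp."""
    logits = np.asarray(logits, dtype=np.float64)
    shifted = logits - np.max(logits)
    exp_x = np.exp(shifted)
    return exp_x / np.sum(exp_x)


# Made-up logits; np.exp(1000.0) on its own would overflow to inf.
logits = np.array([1000.0, 1001.0, 999.0])
probs = stable_softmax(logits)
print(probs, int(np.argmax(probs)), int(np.argmax(logits)))
```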

Explanation of the code steps:

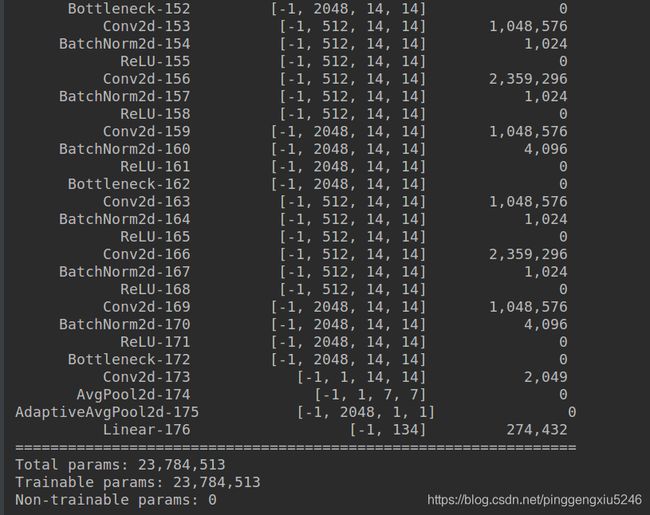

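1. Load your own PyTorch model (network structure and parameters). summary() prints the model structure. Note that each BN layer is reported with 2*channels parameters: PyTorch only counts learnable tensors as params, so a BN layer contributes 2*channels of them (weight and bias), while the running mean and variance are kept as buffers. The default arguments are eps=1e-05 and momentum=0.1: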

torch.nn.BatchNorm1d(num_features,eps=1e-05,momentum=0.1,affine=True,track_running_stats=True)

##step1: load pytorch model
model = test.main() ##load network and params
model = model.cuda() ##cuda
summary(model, (3, 448, 448)) ##summary(model, (channels, pic_h, pic_w))
model.eval()

##step2: pytorch .pth to keras .h5 and test .h5
input_np = np.random.uniform(0, 1, (1, 3, 448, 448))
input_var = Variable(torch.FloatTensor(input_np)).cuda() ##cuda
# input_var = Variable(torch.FloatTensor(input_np))
k_model = pytorch_to_keras(model, input_var, (3, 448, 448,), verbose=True, name_policy='short')
k_model.summary()
k_model.save('my_model.h5')

2. The trainable parameter counts of the two models are the same. The Non-trainable params reported for the .h5 model need some explanation: a Keras BN layer has 4*channels parameters, of which 2*channels (the moving mean and moving variance) are non-trainable. Its default arguments are momentum=0.99 and epsilon=0.001, so the default BN arguments differ between Keras and PyTorch.

keras.layers.normalization.BatchNormalization(axis=-1, momentum=0.99, epsilon=0.001, center=True, scale=True, beta_initializer='zeros', gamma_initializer='ones', moving_mean_initializer='zeros', moving_variance_initializer='ones', beta_regularizer=None, gamma_regularizer=None, beta_constraint=None, gamma_constraint=None)
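
To make the BN comparison concrete, here is a small NumPy sketch; it is not code from either framework. It assumes the standard inference formula y = gamma * (x - mean) / sqrt(var + eps) + beta and the documented running-statistics updates: PyTorch uses running = (1 - momentum) * running + momentum * batch, while Keras uses moving = momentum * moving + (1 - momentum) * batch, so PyTorch momentum=0.1 corresponds to Keras momentum=0.9. All function names and input values are hypothetical.

```python
import numpy as np


def bn_inference(x, gamma, beta, mean, var, eps):
    """Inference-mode batch norm for one channel."""
    return gamma * (x - mean) / np.sqrt(var + eps) + beta


def torch_style_update(running, batch_stat, momentum=0.1):
    """PyTorch convention: momentum weights the new batch statistic."""
    return (1.0 - momentum) * running + momentum * batch_stat


def keras_style_update(moving, batch_stat, momentum=0.99):
    """Keras convention: momentum weights the old moving statistic."""
    return momentum * moving + (1.0 - momentum) * batch_stat


def bn_param_counts(channels):
    """(trainable, non_trainable) as Keras counts them for one BN layer."""
    trainable = 2 * channels      # gamma and beta
    non_trainable = 2 * channels  # moving mean and moving variance
    return trainable, non_trainable


x = np.array([0.5, -1.0, 2.0])
y_eps_torch = bn_inference(x, 1.0, 0.0, mean=0.2, var=0.04, eps=1e-5)
y_eps_keras = bn_inference(x, 1.0, 0.0, mean=0.2, var=0.04, eps=1e-3)
eps_gap = float(np.max(np.abs(y_eps_torch - y_eps_keras)))
print("max |diff| caused by eps alone:", eps_gap)
print("equivalent updates:", torch_style_update(1.0, 3.0, 0.1), keras_style_update(1.0, 3.0, 0.9))
print("BN params for 64 channels:", bn_param_counts(64))
```

Momentum only matters during training, so for a converted inference model the relevant default is eps. If the converter carries eps over from the PyTorch layer, this gap does not arise; printing the epsilon of a converted BN layer is a quick way to check.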

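3. Check whether the outputs of the .pth and .h5 models differ. The test printed Error: -6.1035156e-05. After printing the parameters of each layer and comparing them, my guess is that the gap comes from the different BN default arguments of the two frameworks.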
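
check_error takes the maximum of the signed difference, which is why the value printed above can be negative, and a large negative deviation would still pass its assertion. A stricter variant compares absolute differences. The following is a sketch on plain NumPy arrays; max_abs_error is a hypothetical helper, and the arrays are made-up values rather than real model outputs.

```python
import numpy as np


def max_abs_error(reference, candidate):
    """Largest absolute element-wise difference between two arrays."""
    reference = np.asarray(reference, dtype=np.float64)
    candidate = np.asarray(candidate, dtype=np.float64)
    return float(np.max(np.abs(reference - candidate)))


# Made-up outputs: the second array is too high by 0.5 in one position.
pytorch_out = np.array([0.10, 0.70, 0.20])
keras_out = np.array([0.10, 1.20, 0.20])

signed = float(np.max(pytorch_out - keras_out))   # signed max hides the deviation
absolute = max_abs_error(pytorch_out, keras_out)  # absolute max exposes it
print(signed, absolute, absolute < 1e-3)
```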

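##step3: check the error between .pth and .h5
output = model(input_var)
check_error(output, k_model, input_np)

4. Convert the .h5 model to a .pb model. You can set a breakpoint at init_graph = sess.graph.as_graph_def() to inspect the output node names, then change out_nodes to match. Important: do not use the backend of standalone keras here; do these operations through the keras bundled in tf (tf.keras), otherwise you will run into many pitfalls later.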

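##step4: load .h5 and .h5 to .pb
tf.keras.backend.clear_session()
tf.keras.backend.set_learning_phase(0) ## required, do not omit
my_model = load_model('my_model.h5')
my_model.summary()
h5_to_pb(my_model, output_dir='./model/', model_name='model.pb')

5. Load and run the .pb model. pic_file is the directory holding the test images. I won't show the results here; they differ slightly from those of the original .pth model, which I attribute to the different BN initialization in .h5 and .pth, and this basically does not affect the classification results.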

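Note: check whether your data went through a preprocessing step. My PyTorch data was normalized with Normalize = transforms.Normalize(mean=[0.485, 0.456, 0.406], std=[0.229, 0.224, 0.225]) before being fed to the model for training, so the frozen model does not include preprocessing. When using the .pb model later, you must preprocess the data first and make sure it matches the training-time preprocessing. The PyTorch preprocessing here is effectively two steps. step1: scaling, which brings the values into the 0-1 range (in torchvision this is normally done by transforms.ToTensor rather than by Normalize itself). step2: standardization, where transforms.Normalize subtracts mean and divides by std for each channel.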
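
For reference, the ToTensor plus Normalize behaviour can be reproduced in NumPy for an 8-bit RGB image roughly as follows. This is a sketch under the assumption that training used those two transforms with the ImageNet mean and std that normalization0_1 also uses; preprocess_like_torchvision is a hypothetical name and the image is random data. Note that it divides by 255, whereas normalization0_1 rescales by the image's own min and max, so the two agree only when the image contains both 0 and 255.

```python
import numpy as np

MEAN = np.array([0.485, 0.456, 0.406], dtype=np.float32)
STD = np.array([0.229, 0.224, 0.225], dtype=np.float32)


def preprocess_like_torchvision(img_hwc_uint8):
    """Scale an HWC uint8 RGB image to [0, 1], standardize per channel, return NCHW."""
    img = img_hwc_uint8.astype(np.float32) / 255.0  # scaling done by ToTensor on uint8 data
    img = (img - MEAN) / STD                        # per-channel step done by Normalize
    img = np.transpose(img, (2, 0, 1))              # HWC -> CHW
    return img[np.newaxis, ...]                     # add the batch dimension


rng = np.random.RandomState(0)
fake_image = rng.randint(0, 256, size=(448, 448, 3)).astype(np.uint8)
batch = preprocess_like_torchvision(fake_image)
print(batch.shape, batch.dtype)
```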

##step5: load .pb and test .pb
pb_path = './model/model.pb'
with tf.Session() as sess:
    tf.global_variables_initializer().run()
    graph_def = tf.GraphDef()
    with tf.gfile.GFile(pb_path, 'rb') as f:
        graph_def.ParseFromString(f.read())
        _ = tf.import_graph_def(graph_def, name="")

    input_tensor = sess.graph.get_tensor_by_name("input_0:0")
    output_tensor = sess.graph.get_tensor_by_name("output_0_1:0")

    pic_file = './datasets/data'
    pic_list = os.listdir(pic_file)
    for name in pic_list:
        img_path = '{}/{}'.format(pic_file, name)
        im = cv2.imread(img_path)
        im = cv2.cvtColor(im, cv2.COLOR_BGR2RGB)
        img = cv2.resize(im, (448, 448))
        img = np.asarray(img, dtype=np.float32)
        img = normalization0_1(img)
        img_data = np.transpose(img, (2, 0, 1))  ## HWC -> CHW
        img_input = np.asarray(img_data, dtype=np.float32)[np.newaxis, :, :, :]
        pre_label = sess.run([output_tensor], feed_dict={input_tensor: img_input})
        pre_label = pre_label[0][0]
        # print(pre_label)
        pre_label = np.argmax(softmax(pre_label))
        print('------------------------')
        print('{} prelabel is {}'.format(name, pre_label))

## Supported layers

* Activations:
  + ReLU
  + LeakyReLU
  + SELU
  + Sigmoid
  + Softmax
  + Tanh
* Constants
* Convolutions:
  + Conv2d
  + ConvTranspose2d
* Element-wise:
  + Add
  + Mul
  + Sub
  + Div
* Linear
* Normalizations:
  + BatchNorm2d
  + InstanceNorm2d
* Poolings:
  + MaxPool2d
  + AvgPool2d
  + Global MaxPool2d (adaptive pooling to shape [1, 1])
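
Before converting, it can be useful to see which layer types of a model fall outside this list. The sketch below is one possible way to do that. SUPPORTED_MODULES restates only the entries above that correspond to module class names (element-wise ops, constants and the global pooling entry are left out), and unsupported_layer_types is a hypothetical helper, not part of pytorch2keras. Stand-in classes are used so the example runs without PyTorch.

```python
SUPPORTED_MODULES = {
    "ReLU", "LeakyReLU", "SELU", "Sigmoid", "Softmax", "Tanh",
    "Conv2d", "ConvTranspose2d", "Linear",
    "BatchNorm2d", "InstanceNorm2d", "MaxPool2d", "AvgPool2d",
}


def unsupported_layer_types(modules, supported=SUPPORTED_MODULES):
    """Return the sorted class names in `modules` that are not in `supported`."""
    return sorted({type(m).__name__ for m in modules} - set(supported))


# Stand-in classes; with a real model you would call
# unsupported_layer_types(model.modules()).
class Conv2d:
    pass


class ReLU:
    pass


class PixelShuffle:
    pass


print(unsupported_layer_types([Conv2d(), ReLU(), PixelShuffle()]))
```

With a real model, model.modules() also yields the model itself and containers such as Sequential, so those names will show up and can be ignored.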

## Models converted with pytorch2keras

* ResNet*

* VGG*

* PreResNet*

* DenseNet*

* AlexNet

* Mobilenet v2