Understanding Spark in Depth: Spark Streaming Overview, Part 1 (Translation of the Official Documentation)

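I have been studying English lately, and to put it into practice I tried reading the official Spark documentation and translating parts of it. My English is limited, so corrections are welcome wherever I have missed or misread something.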

* Spark Streaming Overview

* Spark Streaming is an extension of the core Spark API that enables scalable, high-throughput,

* fault-tolerant stream processing of live data streams.

* Data can be ingested from many sources like Kafka, Flume, Twitter, ZeroMQ, Kinesis, or TCP sockets,

* and can be processed using complex algorithms expressed with high-level functions

* like map, reduce, join and window. Finally, processed data can be pushed out to filesystems,

* databases, and live dashboards. In fact, you can apply Spark’s machine learning and graph processing algorithms on data

* streams.

*

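* Spark Streaming Overview (translated)

* Spark Streaming is a scalable, high-throughput, fault-tolerant framework for processing live data streams.

* It can ingest data from many kinds of sources, such as Kafka, Flume, Twitter, ZeroMQ, Kinesis, or TCP sockets,

* and process it with complex algorithms expressed through high-level functions such as map, reduce, join, and window.

* The results can then be pushed out to filesystems, databases, or live dashboards (think of the big screen on which Tmall shows its transaction volume in real time every Double 11).

* In fact, you can also apply Spark's machine learning and graph processing algorithms to the data streams.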

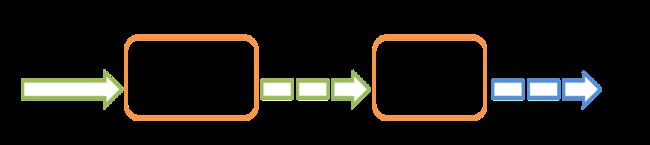

* Internally, it works as follows. Spark Streaming receives live input data streams and divides the data into batches,

* which are then processed by the Spark engine to generate the final stream of results in batches

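* Internally, it works as follows:

* Spark Streaming receives live input data streams and divides the data into batches; the Spark engine then processes those batches to produce the final stream of results, also in batches.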
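To make the batching idea concrete, here is a minimal sketch in plain Scala with no Spark dependency. It is not how Spark implements batching; it only illustrates cutting a stream into fixed-interval batches. The names `MicroBatchSketch`, `Record`, and `toBatches` are hypothetical, as are the arrival timestamps.

```scala
// Plain-Scala illustration of micro-batching; this is an analogy, not Spark's implementation.
object MicroBatchSketch {
  // A record tagged with a (hypothetical) arrival time in milliseconds.
  final case class Record(arrivalMs: Long, value: String)

  // Group records into batches of `intervalMs`, keyed by batch start time and
  // returned in time order. Intervals that received no records are simply absent.
  def toBatches(records: Seq[Record], intervalMs: Long): Seq[(Long, Seq[String])] =
    records
      .groupBy(r => r.arrivalMs / intervalMs * intervalMs) // start time of the record's batch
      .toSeq
      .sortBy(_._1)
      .map { case (start, rs) => (start, rs.sortBy(_.arrivalMs).map(_.value)) }

  def main(args: Array[String]): Unit = {
    val input = Seq(Record(100, "a"), Record(900, "b"), Record(1200, "c"), Record(2500, "d"))
    // With a 1-second interval: "a" and "b" share a batch, "c" and "d" get one each.
    toBatches(input, 1000).foreach { case (start, values) =>
      println(s"batch starting at $start ms: ${values.mkString(", ")}")
    }
  }
}
```

Each batch produced this way plays the role that one RDD plays in a real Spark Streaming job.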

* Spark Streaming provides a high-level abstraction called discretized stream or DStream,

* which represents a continuous stream of data.

* DStreams can be created either from input data streams from sources such as Kafka, Flume, and Kinesis,

* or by applying high-level operations on other DStreams. Internally, a DStream is represented as a sequence of RDDs.

* This guide shows you how to start writing Spark Streaming programs with DStreams.

* You can write Spark Streaming programs in Scala, Java or Python (introduced in Spark 1.2),

* all of which are presented in this guide.

* You will find tabs throughout this guide that let you choose between code snippets of different languages.

* Note: There are a few APIs that are either different or not available in Python.

* Throughout this guide, you will find the tag Python API highlighting these differences.

*

*

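* Spark Streaming provides a high-level abstraction called a discretized stream, or DStream for short,

* which represents a continuous stream of data.

* A DStream can be created from input data streams coming from sources such as Kafka, Flume, and Kinesis,

* or by applying high-level operations to other DStreams. Internally, a DStream is represented as a sequence of RDDs.

* This guide shows how to start writing Spark Streaming programs with DStreams; you can write them in Scala, Java, or Python.

* Throughout the guide there are tabs for switching between code snippets in the different languages.

* Note: a few APIs are different or unavailable in Python; the guide flags these differences with a "Python API" tag.

* A Quick Example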

* Before we go into the details of how to write your own Spark Streaming program,

* let’s take a quick look at what a simple Spark Streaming program looks like.

* Let’s say we want to count the number of words in text data received from a data server listening on a TCP socket.

* All you need to do is as follows

*

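* A quick-start example (translated)

* Before going into the details of how to write a Spark Streaming program, let's take a quick look at an example.

* We count the words in text data received from a data server listening on a TCP socket. All you need to do is the following:

import org.apache.spark._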

import org.apache.spark.streaming._

import org.apache.spark.streaming.StreamingContext._ // not necessary since Spark 1.3

// Create a local StreamingContext with two worker threads and a batch interval of 1 second.

// The master requires 2 cores to prevent a starvation scenario.

val conf = new SparkConf().setMaster("local[2]").setAppName("NetworkWordCount")

val ssc = new StreamingContext(conf, Seconds(1))

* Using this context, we can create a DStream that represents streaming data from a TCP source,

* specified as hostname (e.g. localhost) and port (e.g. 9999).

*

* Using this context object, we can create a DStream that represents the data stream coming from a TCP source,

* where the TCP source is specified by a hostname and a port.

*

*

* This lines DStream represents the stream of data that will be received from the data server.

* Each record in this DStream is a line of text. Next, we want to split the lines by space characters into words.

*

* The lines DStream represents the stream of data received from the data server.

* Each record in this DStream is a line of text, which we next split into words on space characters.

val lines = ssc.socketTextStream("localhost", 9999)

val words = lines.flatMap(_.split(" "))

* flatMap is a one-to-many DStream operation that creates a new DStream by generating multiple new records from

* each record in the source DStream. In this case, each line will be split into multiple words and the

* stream of words is represented as the words DStream. Next, we want to count these words

*

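* flatMap is a one-to-many DStream operation: it creates a new DStream by generating multiple records from each record in the source DStream.

* Here each line is split into multiple words, and the resulting stream of words is the words DStream. Next, we count these words.

val pairs = words.map(word => (word, 1))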

val wordCounts = pairs.reduceByKey(_ + _)

// Print the first ten elements of each RDD generated in this DStream to the console

wordCounts.print()

* The words DStream is further mapped (one-to-one transformation) to a DStream of (word, 1) pairs,

* which is then reduced to get the frequency of words in each batch of data. Finally,

* wordCounts.print() will print a few of the counts generated every second.

*

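* The words DStream is further mapped (a one-to-one transformation) to a DStream of (word, 1) pairs,

* which is then reduced to get the frequency of each word in every batch of data; wordCounts.print() prints a few of the counts every second.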
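The flatMap, map, and reduceByKey steps can be tried on a single batch without Spark. The sketch below is only an analogy using plain Scala collections: `BatchWordCountSketch` and `countWords` are hypothetical names, and `groupBy` followed by `reduce` stands in for `reduceByKey(_ + _)`, which plain collections do not have.

```scala
// Plain-Scala stand-in for what happens to ONE batch of lines; no Spark dependency.
object BatchWordCountSketch {
  def countWords(lines: Seq[String]): Map[String, Int] = {
    // One-to-many: each line yields several words (mirrors lines.flatMap(_.split(" "))).
    val words = lines.flatMap(_.split(" "))
    // One-to-one: each word yields exactly one (word, 1) pair.
    val pairs = words.map(word => (word, 1))
    // Stand-in for reduceByKey(_ + _): group pairs by word, then sum the 1s per word.
    pairs.groupBy(_._1).map { case (word, ps) => (word, ps.map(_._2).reduce(_ + _)) }
  }

  def main(args: Array[String]): Unit = {
    // Prints one (word, count) pair per line; the order of a Map is not guaranteed.
    countWords(Seq("hello world", "hello spark")).foreach(println)
  }
}
```

In the real program the same three steps run once per batch interval, on whatever lines arrived during that interval.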

*

*

* Note that when these lines are executed, Spark Streaming only sets up the computation it will perform

* when it is started, and no real processing has started yet.

* To start the processing after all the transformations have been set up, we finally call

*

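* When these lines are executed, Spark Streaming only sets up the computation; the transformations are not run yet.

* Execution is deferred (lazy evaluation) until we finally call

ssc.start() // Start the computation

ssc.awaitTermination() // Wait for the computation to terminate

* The complete code can be found in the Spark Streaming example NetworkWordCount.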

*

* The complete code is available in the Spark Streaming example named NetworkWordCount.
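The deferred execution described above, where transformations only declare work and nothing runs until start() is called, can be mimicked with a toy class. This is a sketch under loud assumptions: `DeferredPipelineSketch`, `Pipeline`, `register`, and `log` are all hypothetical and are not part of the Spark API.

```scala
// Toy model of "declare first, run on start()"; NOT the Spark Streaming API.
object DeferredPipelineSketch {
  final class Pipeline {
    // Steps are stored as functions so that registering them executes nothing.
    private var steps = Vector.empty[() => Unit]
    // Records which steps have actually run, in order.
    val log = scala.collection.mutable.ArrayBuffer.empty[String]

    // Declare a step; it is only remembered here, not executed.
    def register(name: String): Pipeline = {
      steps = steps :+ (() => { log += s"ran $name"; () })
      this
    }

    // Run every declared step in declaration order.
    def start(): Unit = steps.foreach(step => step())
  }

  def main(args: Array[String]): Unit = {
    val pipeline = new Pipeline().register("flatMap").register("map").register("reduceByKey")
    println(s"steps run before start(): ${pipeline.log.size}")
    pipeline.start()
    println(s"steps run after start(): ${pipeline.log.size}")
  }
}
```

The analogy is loose: a real StreamingContext keeps running the declared computation on every new batch until it is stopped, whereas this toy runs each step once.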

*

*

* If you have already downloaded and built Spark, you can run this example as follows.

* You will first need to run Netcat (a small utility found in most Unix-like systems) as a data server by using

*

* If you have already downloaded and built Spark, you can run the example as follows.

* First, run Netcat (a small utility found in most Unix-like systems) as the data server:

*

* $ nc -lk 9999

* Then, in a different terminal, you can start the example by using

*

* Then, in a different terminal, start the example program:

* $ ./bin/run-example streaming.NetworkWordCount localhost 9999

*

* Then, any lines typed in the terminal running the netcat server will be counted and printed on screen every second

* Then any lines typed in the terminal running the netcat server are counted and printed on screen every second.