Installing Slurm on CentOS

Control node: node16

Compute nodes: node16, node18

Remove a previously failed Slurm installation

yum remove slurm -y

cat /etc/passwd | grep slurm

userdel -r slurm

Create the slurm user

export SLURMUSER=412

groupadd -g $SLURMUSER slurm

useradd -m -c "SLURM workload manager" -d /var/lib/slurm -u $SLURMUSER -g slurm -s /bin/bash slurm

Check the slurm user's UID and GID. They must be identical on the control node and on every compute node:

id slurm

Install Slurm
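Before installing anything, it is worth running the id slurm check on every node, not only the one you are logged into. The sketch below assumes passwordless root SSH from the control node to each node; uid_gid_ok and check_nodes are hypothetical helper names, and 412 is the SLURMUSER value used above.

```bash
#!/usr/bin/env bash
# Sketch: confirm the slurm account has UID = GID = EXPECTED (412 in this guide)
# on several nodes. Assumes passwordless root SSH to every node.

# uid_gid_ok EXPECTED "OUTPUT_OF_id_slurm"
# Succeeds only if both uid= and gid= in the `id` output equal EXPECTED.
uid_gid_ok() {
  local expected=$1 line=$2
  local re='uid=([0-9]+)[^ ]* gid=([0-9]+)'
  [[ $line =~ $re ]] || return 1
  [[ ${BASH_REMATCH[1]} == "$expected" && ${BASH_REMATCH[2]} == "$expected" ]]
}

# check_nodes EXPECTED NODE...
# Runs `id slurm` on each node over SSH, prints OK or MISMATCH per node,
# and returns non-zero if any node differs (or has no slurm user).
check_nodes() {
  local expected=$1 node out rc=0
  shift
  for node in "$@"; do
    out=$(ssh "$node" id slurm 2>&1)
    if uid_gid_ok "$expected" "$out"; then
      echo "$node: OK ($out)"
    else
      echo "$node: MISMATCH ($out)"
      rc=1
    fi
  done
  return "$rc"
}
```

For example, check_nodes 412 node16 node18 prints one line per node; a MISMATCH means the user has to be recreated on that node before the RPMs are installed.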

First install the EPEL repository:

yum install epel-release装slurm的依赖包:

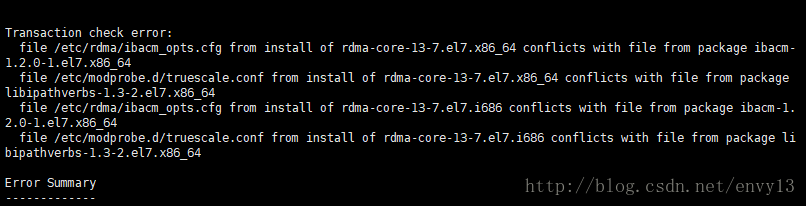

yum install openssl openssl-devel pam-devel numactl numactl-devel hwloc hwloc-devel lua lua-devel readline-devel rrdtool-devel ncurses-devel man2html libibmad libibumad -y如果出现以下报错:

直接卸载冲突部分再重新运行上述命令即可:

yum -y remove ibacm-1.2.0-1.el7.x86_64

yum -y remove libipathverbs-1.3-2.el7.x86_64

Install rpm-build:

yum install rpm-build

Download Slurm and build the RPMs:

wget https://www.schedmd.com/archives.php/downloads/archive/slurm-17.02.4.tar.bz2

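rpmbuild -ta slurm-17.02.4.tar.bz2

On both the control node and the compute nodes, collect the built RPMs into one directory. The file names below come from a 15.08.7 build; substitute the version you actually built (17.02.4 above):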

cd /root/rpmbuild/RPMS/x86_64

mkdir slurm-rpms

cp slurm-15.08.7-1.el7.centos.x86_64.rpm slurm-devel-15.08.7-1.el7.centos.x86_64.rpm slurm-munge-15.08.7-1.el7.centos.x86_64.rpm slurm-pam_slurm-15.08.7-1.el7.centos.x86_64.rpm slurm-perlapi-15.08.7-1.el7.centos.x86_64.rpm slurm-plugins-15.08.7-1.el7.centos.x86_64.rpm slurm-sjobexit-15.08.7-1.el7.centos.x86_64.rpm slurm-sjstat-15.08.7-1.el7.centos.x86_64.rpm slurm-slurmdbd-15.08.7-1.el7.centos.x86_64.rpm slurm-slurmdb-direct-15.08.7-1.el7.centos.x86_64.rpm slurm-sql-15.08.7-1.el7.centos.x86_64.rpm slurm-torque-15.08.7-1.el7.centos.x86_64.rpm slurm-rpms/

On the compute nodes, install the following packages from the directory that holds the RPMs:

yum --nogpgcheck localinstall slurm-15.08.7-1.el7.centos.x86_64.rpm slurm-devel-15.08.7-1.el7.centos.x86_64.rpm slurm-munge-15.08.7-1.el7.centos.x86_64.rpm slurm-perlapi-15.08.7-1.el7.centos.x86_64.rpm slurm-plugins-15.08.7-1.el7.centos.x86_64.rpm slurm-sjobexit-15.08.7-1.el7.centos.x86_64.rpm slurm-sjstat-15.08.7-1.el7.centos.x86_64.rpm slurm-torque-15.08.7-1.el7.centos.x86_64.rpm

Configure slurm.conf:

cd /etc/slurm

cp slurm.conf.example slurm.conf

vim slurm.conf

The finished slurm.conf:

ControlMachine=node16

ControlAddr=10.192.168.116

#MailProg=/bin/mail

MpiDefault=none

#MpiParams=ports=#-#

ProctrackType=proctrack/pgid

ReturnToService=1

SlurmctldPidFile=/var/run/slurmctld.pid

#SlurmctldPort=6817

SlurmdPidFile=/var/run/slurmd.pid

#SlurmdPort=6818

SlurmdSpoolDir=/var/spool/slurmd

SlurmUser=slurm

#SlurmdUser=root

StateSaveLocation=/var/spool/slurmctld

SwitchType=switch/none

TaskPlugin=task/none

#

#

# TIMERS

#KillWait=30

#MinJobAge=300

#SlurmctldTimeout=120

#SlurmdTimeout=300

#

#

# SCHEDULING

FastSchedule=1

SchedulerType=sched/backfill

#SchedulerPort=7321

SelectType=select/linear

#

#

# LOGGING AND ACCOUNTING

AccountingStorageType=accounting_storage/none

ClusterName=node

#JobAcctGatherFrequency=30

JobAcctGatherType=jobacct_gather/none

#SlurmctldDebug=3

SlurmctldLogFile=/var/log/slurmctld.log

#SlurmdDebug=3

SlurmdLogFile=/var/log/slurmd.log

#

#

# COMPUTE NODES

#NodeName=node16 CPUs=20 RealMemory=63989 Sockets=2 CoresPerSocket=10 ThreadsPerCore=1 State=IDLE

#NodeName=node11 CPUs=20 RealMemory=62138 Sockets=4 CoresPerSocket=5 ThreadsPerCore=1 State=IDLE

#NodeName=node18 CPUs=20 RealMemory=64306 Sockets=2 CoresPerSocket=10 ThreadsPerCore=1 State=IDLE

#PartitionName=control Nodes=node16 Default=NO MaxTime=INFINITE State=UP

#PartitionName=compute Nodes=node16,node11,node18 Default=YES MaxTime=INFINITE State=UP

NodeName=node16 NodeAddr=10.192.168.116 CPUs=20 State=UNKNOWN

#NodeName=node11 NodeAddr=10.192.168.111 CPUs=20 State=UNKNOWN

NodeName=node18 NodeAddr=10.192.168.118 CPUs=20 State=UNKNOWN

#PartitionName=debug Nodes=node16,node11,node18 Default=YES MaxTime=INFINITE State=UP

PartitionName=control Nodes=node16 Default=NO MaxTime=INFINITE State=UP

PartitionName=compute Nodes=node16,node18 Default=YES MaxTime=INFINITE State=UP

Check the configuration:

scontrol show config

Copy the finished slurm.conf to the compute node:

scp slurm.conf root@node18:/etc/slurm/slurm.conf

On the control node:

mkdir /var/spool/slurmctld

chown slurm: /var/spool/slurmctld

chmod 755 /var/spool/slurmctld

touch /var/log/slurmctld.log

chown slurm: /var/log/slurmctld.log

touch /var/log/slurm_jobacct.log /var/log/slurm_jobcomp.log

chown slurm: /var/log/slurm_jobacct.log /var/log/slurm_jobcomp.log

On the compute nodes:

mkdir /var/spool/slurmd

chown slurm: /var/spool/slurmd

chmod 755 /var/spool/slurmd

touch /var/log/slurmd.log

chown slurm: /var/log/slurmd.log

touch /var/log/slurm_jobacct.log /var/log/slurm_jobcomp.log

chown slurm: /var/log/slurm_jobacct.log /var/log/slurm_jobcomp.log

The compute nodes also need the firewall turned off:

systemctl stop firewalld

systemctl disable firewalld

Test whether Slurm was installed successfully:

If you get an error saying the node cannot connect to the control node, restart Slurm.
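If the cannot-connect error persists after a restart, it helps to tell a network problem apart from a daemon problem. The sketch below only tests whether a TCP port answers; it assumes the default ports, which the slurm.conf above leaves commented out (SlurmctldPort=6817, SlurmdPort=6818), and port_open and report_ports are hypothetical helper names.

```bash
#!/usr/bin/env bash
# port_open HOST PORT
# Succeeds if a TCP connection to HOST:PORT opens within 3 seconds.
# Uses bash's /dev/tcp redirection and coreutils `timeout`; nothing is sent.
port_open() {
  # HOST and PORT are passed as $0/$1 of the inner shell, not spliced into the string.
  timeout 3 bash -c 'exec 3<>"/dev/tcp/$0/$1"' "$1" "$2" 2>/dev/null
}

# report_ports HOST PORT...
# Prints "HOST:PORT reachable" or "HOST:PORT unreachable" for each port.
report_ports() {
  local host=$1 port
  shift
  for port in "$@"; do
    if port_open "$host" "$port"; then
      echo "$host:$port reachable"
    else
      echo "$host:$port unreachable"
    fi
  done
}
```

For example, on node18 run report_ports node16 6817. An unreachable port while slurmctld is running on node16 points at the network or a firewall; a reachable one points back at the Slurm configuration or the daemons themselves.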

slurmd -C

/etc/init.d/slurm start

The output of slurmd -C looks like this:

ClusterName=(null) NodeName=node16 CPUs=20 Boards=1 SocketsPerBoard=2 CoresPerSocket=10 ThreadsPerCore=1 RealMemory=63989 TmpDisk=51175

UpTime=18-18:49:53
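The NodeName lines commented out in slurm.conf above carry the same values that slurmd -C reports here (CPUs=20, RealMemory=63989, 2 sockets of 10 cores for node16). A minimal sketch that turns that output into a NodeName line; node_line is a hypothetical helper name, it assumes Sockets = Boards x SocketsPerBoard, and it emits State=UNKNOWN to match the active NodeName lines above.

```bash
#!/usr/bin/env bash
# node_line "OUTPUT_OF_slurmd_-C" [NODEADDR]
# Builds a slurm.conf NodeName= line from the first line of `slurmd -C`.
# Returns non-zero if no NodeName= field is found.
node_line() {
  local addr=${2:-} kv key val name cpus mem boards=1 spb cores threads
  local -a words
  read -r -a words <<< "$1"        # first line only; the UpTime= line is ignored
  for kv in "${words[@]}"; do
    key=${kv%%=*}
    val=${kv#*=}
    case $key in
      NodeName)        name=$val ;;
      CPUs)            cpus=$val ;;
      RealMemory)      mem=$val ;;
      Boards)          boards=$val ;;
      SocketsPerBoard) spb=$val ;;
      CoresPerSocket)  cores=$val ;;
      ThreadsPerCore)  threads=$val ;;
    esac
  done
  [[ -n $name ]] || return 1
  printf 'NodeName=%s' "$name"
  if [[ -n $addr ]]; then printf ' NodeAddr=%s' "$addr"; fi
  # Assumption: total sockets = Boards * SocketsPerBoard.
  printf ' CPUs=%s RealMemory=%s Sockets=%s CoresPerSocket=%s ThreadsPerCore=%s State=UNKNOWN\n' \
    "$cpus" "$mem" "$(( boards * spb ))" "$cores" "$threads"
}
```

With the output shown above, node_line "$(slurmd -C)" 10.192.168.116 on node16 prints NodeName=node16 NodeAddr=10.192.168.116 CPUs=20 RealMemory=63989 Sockets=2 CoresPerSocket=10 ThreadsPerCore=1 State=UNKNOWN, which can be pasted over the shorter NodeName line in slurm.conf if you want Slurm to know the memory and socket layout.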