Building a Slurm Cluster on Virtual Machines

(1) Prerequisites: one clean CentOS 7 master node, worker; two identically configured nodes, worker1 and worker2; and the installation packages munge_0.5.10.orig.tar.bz2 and slurm-16.05.11.tar.bz2.

(2) Preparation: to make the later file transfers and the communication between nodes easier, make the following changes.

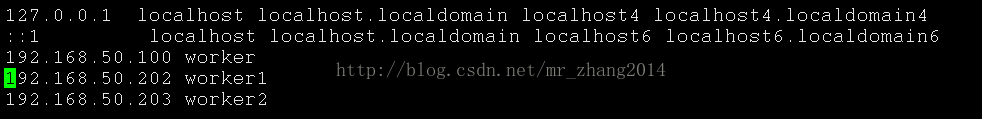

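2.1 Edit the /etc/hosts file on every node so that the hostnames worker, worker1 and worker2 resolve to the nodes' IP addresses.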
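The hosts entries themselves are only visible in the screenshot. As a minimal sketch, the helper below prints lines in /etc/hosts format from name=address pairs. Only worker's address, 192.168.50.100, appears in this article (as ControlAddr in slurm.conf); the worker1 and worker2 addresses in the usage comment are hypothetical placeholders, and the function name is made up for illustration.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print /etc/hosts lines ("IP<TAB>hostname")
# from arguments of the form name=ip.
print_hosts_entries() {
  local pair name ip
  for pair in "$@"; do
    name=${pair%%=*}   # text before the first '='
    ip=${pair#*=}      # text after the first '='
    printf '%s\t%s\n' "$ip" "$name"
  done
}

# Usage on each node (worker1/worker2 addresses are placeholders, not from the article):
#   print_hosts_entries worker=192.168.50.100 \
#     worker1=192.168.50.101 worker2=192.168.50.102 >> /etc/hosts
```

Generating the same lines on all three nodes keeps name resolution identical everywhere, which the later scp and ssh steps rely on.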

2.2 Configure passwordless SSH login from the master node worker to the compute nodes

Run the following commands on every node:

mr_zhang2014's blog


ssh-keygen -t dsa -P '' -f ~/.ssh/id_dsa

cat ~/.ssh/id_dsa.pub >> ~/.ssh/authorized_keys

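Afterwards, each node can SSH into itself without a password (run ssh worker on worker, ssh worker1 on worker1, and ssh worker2 on worker2). The purpose of this step is to create authorized_keys, so that other keys can be appended to it later.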

ssh worker

ssh worker1

ssh worker2

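Next, copy worker's public key (.ssh/id_dsa.pub) to each compute node and append it to the .ssh/authorized_keys file generated there in the previous step; worker can then log in to those nodes without a password.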

cd .ssh

scp id_dsa.pub root@worker1:/root/

scp id_dsa.pub root@worker2:/root/

On worker1, run:

cat id_dsa.pub >> /root/.ssh/authorized_keys

On worker2, run:

cat id_dsa.pub >> /root/.ssh/authorized_keys
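The manual scp-and-append sequence above can also be done with ssh-copy-id (part of the openssh-clients package on CentOS 7), which appends a public key to the remote authorized_keys file. The sketch below assumes an RSA key (~/.ssh/id_rsa) instead of the DSA key used above, because upstream OpenSSH 7.0 and later disable DSA keys by default. The function names and the DRY_RUN switch are illustrative, not part of the original setup.

```shell
#!/usr/bin/env bash
# Illustrative sketch: key-based root login from worker to the other nodes.
# Set DRY_RUN=1 to print the commands instead of executing them.
run() {
  if [ -n "${DRY_RUN:-}" ]; then
    echo "+ $*"
  else
    "$@"
  fi
}

distribute_ssh_key() {
  local key="$HOME/.ssh/id_rsa" host
  # Generate a passphrase-less RSA key pair only if none exists yet.
  if [ ! -f "$key" ]; then
    run ssh-keygen -t rsa -N '' -f "$key"
  fi
  for host in "$@"; do
    # Appends $key.pub to root@$host:~/.ssh/authorized_keys (asks for the password once).
    run ssh-copy-id -i "$key.pub" "root@$host"
  done
}

verify_ssh_login() {
  local host rc=0
  for host in "$@"; do
    # BatchMode=yes makes ssh fail instead of prompting for a password.
    if ! run ssh -o BatchMode=yes "root@$host" true; then
      echo "passwordless login to $host failed" >&2
      rc=1
    fi
  done
  return "$rc"
}

# Usage on worker:
#   distribute_ssh_key worker worker1 worker2
#   verify_ssh_login worker worker1 worker2
```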

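Set each node's hostname by running the matching command on that node. On worker:

hostnamectl --static set-hostname worker

On worker1:

hostnamectl --static set-hostname worker1

On worker2:

hostnamectl --static set-hostname worker2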

(3) Install MUNGE

3.1 Download munge_0.5.10.orig.tar.bz2 from https://launchpad.net/ubuntu/+source/munge/0.5.10-1/

Rename the file; otherwise the rpmbuild command below fails:

mv munge_0.5.10.orig.tar.bz2 munge-0.5.10.tar.bz2

Install rpmbuild on CentOS:

yum install -y rpm-build

Build the MUNGE RPMs:

rpmbuild -tb --clean munge-0.5.10.tar.bz2

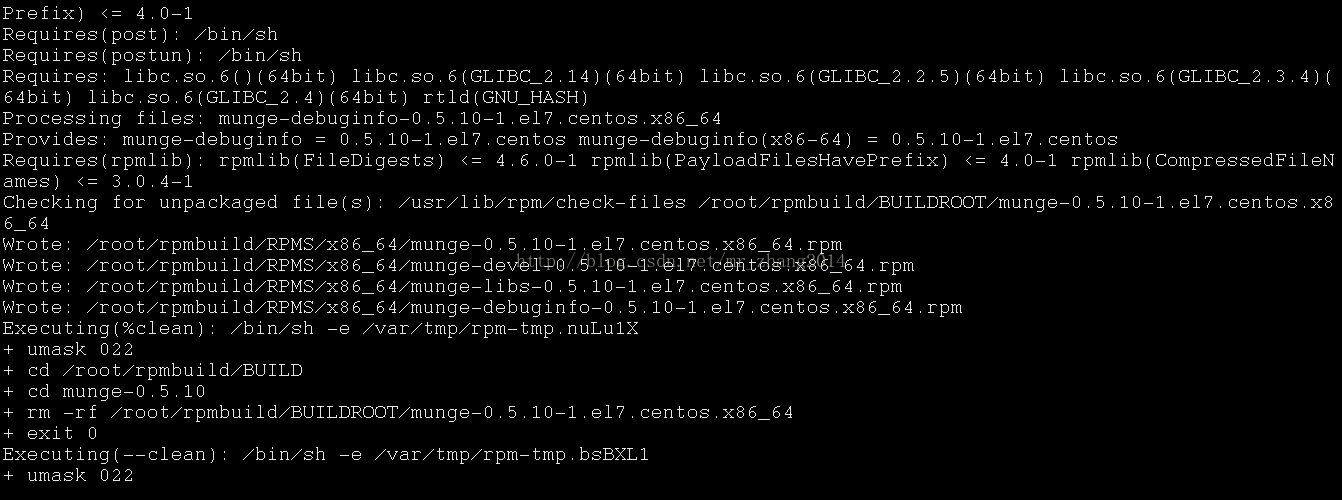

Output like the following indicates that the build succeeded:

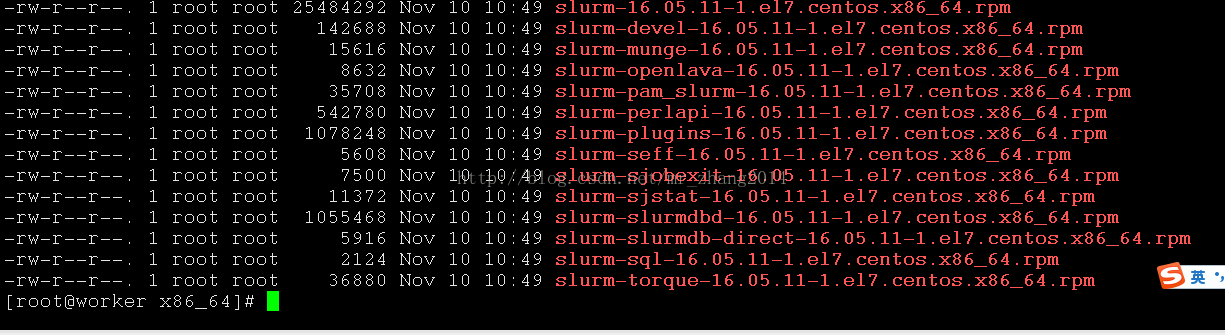

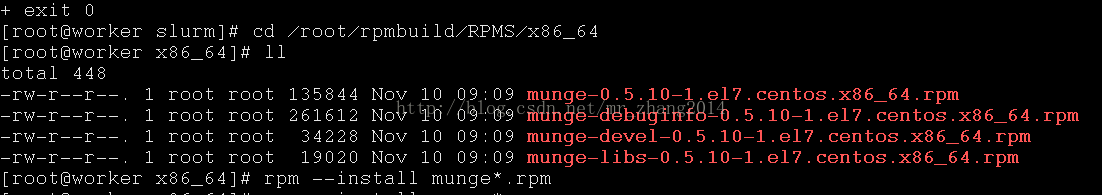

The built RPM packages are now in rpmbuild's output directory:

cd /root/rpmbuild/RPMS/x86_64

Install the packages:

rpm --install munge*.rpm
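The article only shows the build on worker, but worker1 and worker2 presumably need MUNGE as well, since munge.key is later copied into their /etc/munge directories. To make repeating the build easier, the steps from 3.1 up to this point can be collected into one function. This is a sketch under stated assumptions: it runs as root in the directory holding the downloaded tarball, and rpmbuild writes to $HOME/rpmbuild (which is /root/rpmbuild for root, matching the path above). The function name and DRY_RUN switch are illustrative.

```shell
#!/usr/bin/env bash
# Illustrative sketch of the MUNGE build/install steps; run as root.
# Set DRY_RUN=1 to print the commands instead of executing them.
run() {
  if [ -n "${DRY_RUN:-}" ]; then
    echo "+ $*"
  else
    "$@"
  fi
}

build_and_install_munge() {
  local version=0.5.10
  local tarball="munge-$version.tar.bz2"
  # rpmbuild fails on the Launchpad file name, so rename it first.
  if [ ! -f "$tarball" ]; then
    run mv "munge_$version.orig.tar.bz2" "$tarball" || return 1
  fi
  run yum install -y rpm-build || return 1
  # -tb: build binary RPMs from the spec file inside the tarball.
  run rpmbuild -tb --clean "$tarball" || return 1
  run rpm --install "$HOME"/rpmbuild/RPMS/x86_64/munge*.rpm
}
```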

Set the directory permissions:

chmod -Rf 700 /etc/munge

chmod -Rf 711 /var/lib/munge

chmod -Rf 700 /var/log/munge

chmod -Rf 0755 /var/run/munge
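To confirm that the permissions took effect, a small check can compare each directory's octal mode with the values set above. This sketch relies on GNU stat's -c '%a' format (available on CentOS 7) and only checks the directories themselves, not the files inside them; the function names are illustrative.

```shell
#!/usr/bin/env bash
# Illustrative check that the MUNGE directories have the modes set above.
check_mode() {
  local path=$1 expected=$2 actual
  # %a prints the access rights in octal, e.g. 700 or 755.
  actual=$(stat -c '%a' "$path") || return 1
  if [ "$actual" != "$expected" ]; then
    echo "$path: mode $actual, expected $expected" >&2
    return 1
  fi
}

check_munge_dirs() {
  local rc=0
  # Check every directory so that all mismatches are reported, not just the first.
  check_mode /etc/munge 700 || rc=1
  check_mode /var/lib/munge 711 || rc=1
  check_mode /var/log/munge 700 || rc=1
  check_mode /var/run/munge 755 || rc=1
  return "$rc"
}
```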

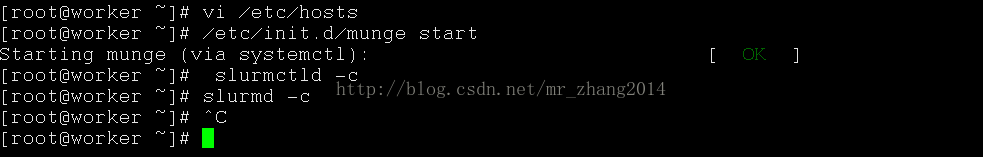

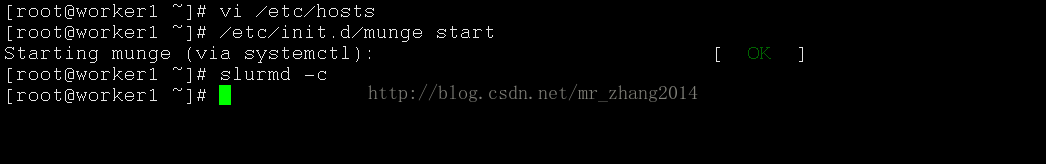

Start MUNGE:

/etc/init.d/munge start

If the output matches the screenshot, MUNGE has started normally.

(4) Install Slurm

Download slurm-16.05.11.tar.bz2 from http://slurm.schedmd.com/

Build the Slurm RPMs:

rpmbuild -ta --clean slurm-16.05.11.tar.bz2

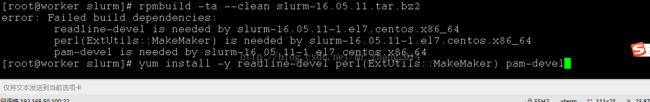

The build reports missing dependency packages, as shown in the screenshot.

Install the dependencies:

yum install -y readline-devel perl-ExtUtils-MakeMaker pam-devel

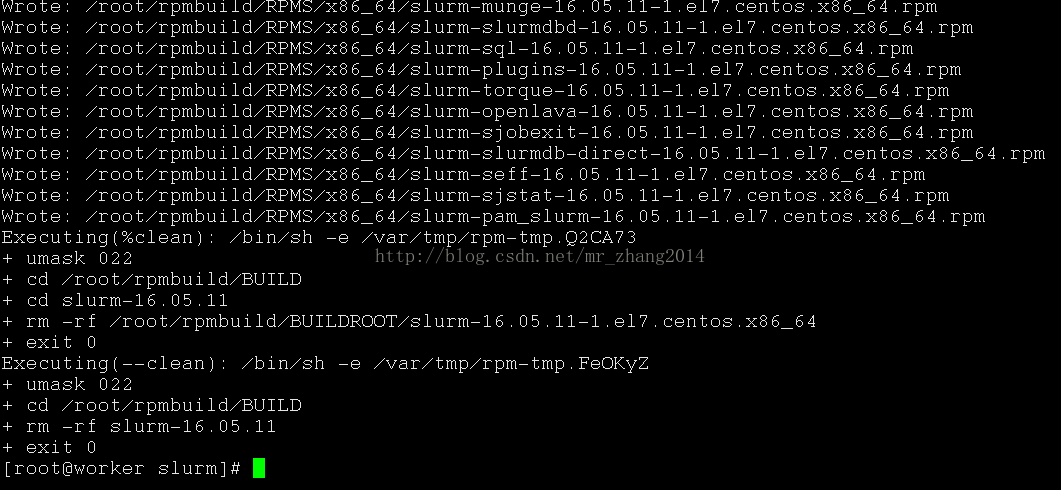

Run the build again:

rpmbuild -ta --clean slurm-16.05.11.tar.bz2

cd /root/rpmbuild/RPMS/x86_64

Install Slurm:

rpm --install slurm*.rpm

Again, some dependency packages turn out to be missing.
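As with MUNGE, the Slurm steps can be scripted for reuse on worker1 and worker2. One way to deal with the missing-dependency errors from rpm --install is to let yum install the local RPM files, because yum resolves their dependencies from the configured repositories; this is a suggested alternative, not part of the original steps. The sketch assumes MUNGE (including its development package built above) is already installed, since Slurm's auth/munge plugin is only built when the MUNGE headers are present. The function name and DRY_RUN switch are illustrative.

```shell
#!/usr/bin/env bash
# Illustrative sketch of the Slurm build/install steps; run as root after MUNGE.
# Set DRY_RUN=1 to print the commands instead of executing them.
run() {
  if [ -n "${DRY_RUN:-}" ]; then
    echo "+ $*"
  else
    "$@"
  fi
}

build_and_install_slurm() {
  local version=16.05.11
  # Build dependencies reported missing by the first rpmbuild attempt.
  run yum install -y readline-devel perl-ExtUtils-MakeMaker pam-devel || return 1
  # -ta: build source and binary RPMs from the spec file inside the tarball.
  run rpmbuild -ta --clean "slurm-$version.tar.bz2" || return 1
  # Alternative to "rpm --install": yum also installs missing runtime dependencies.
  run yum localinstall -y "$HOME"/rpmbuild/RPMS/x86_64/slurm*.rpm
}
```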

Edit the configuration file /etc/slurm/slurm.conf:

ClusterName=ssc

# Cluster name

ControlMachine=worker

# Hostname of the controller (master) node

ControlAddr=192.168.50.100

# IP address of the controller node

#BackupController=

#BackupAddr=

#

SlurmUser=slurm

#SlurmdUser=root

SlurmctldPort=6817

SlurmdPort=6818

AuthType=auth/munge

# Authenticate communication between Slurm components with MUNGE

#JobCredentialPrivateKey=

#JobCredentialPublicCertificate=

StateSaveLocation=/tmp

SlurmdSpoolDir=/tmp/slurmd

SwitchType=switch/none

MpiDefault=none

SlurmctldPidFile=/var/run/slurmctld.pid

SlurmdPidFile=/var/run/slurmd.pid

ProctrackType=proctrack/pgid

# TIMERS

SlurmctldTimeout=300

SlurmdTimeout=300

InactiveLimit=0

MinJobAge=300

KillWait=30

Waittime=0

SchedulerType=sched/backfill

SelectType=select/linear

FastSchedule=1

# LOGGING

SlurmctldDebug=3

SlurmctldLogFile=/var/log/slurmctld.log

SlurmdDebug=3

SlurmdLogFile=/var/log/slurmd.log

JobCompType=jobcomp/none

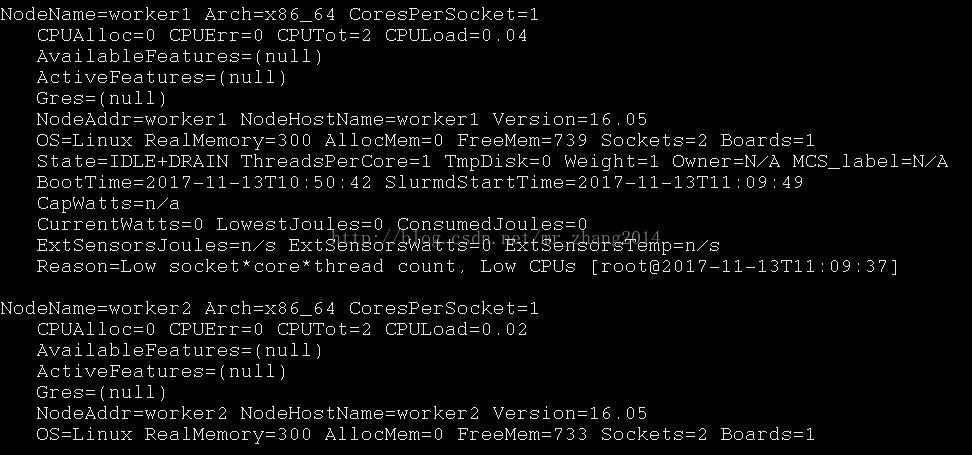

# COMPUTE NODES

NodeName=worker CPUs=2 RealMemory=150 Sockets=2 CoresPerSocket=1 ThreadsPerCore=1 State=IDLE

NodeName=worker1 CPUs=2 RealMemory=150 Sockets=2 CoresPerSocket=1 ThreadsPerCore=1 State=IDLE

NodeName=worker2 CPUs=2 RealMemory=150 Sockets=2 CoresPerSocket=1 ThreadsPerCore=1 State=IDLE

PartitionName=control Nodes=worker Default=NO MaxTime=INFINITE State=UP

PartitionName=compute Nodes=worker1,worker2 Default=YES MaxTime=INFINITE State=UP

Copy munge.key and slurm.conf from worker to worker1 and worker2:

scp /etc/munge/munge.key root@worker1:/etc/munge/

scp /etc/munge/munge.key root@worker2:/etc/munge/

scp /etc/slurm/slurm.conf root@worker1:/etc/slurm/

scp /etc/slurm/slurm.conf root@worker2:/etc/slurm/
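After copying, all nodes must hold identical copies of munge.key and slurm.conf. The sketch below compares checksums across nodes and then runs the credential round trip suggested in the MUNGE documentation (munge -n | unmunge locally, munge -n | ssh host unmunge across nodes). It assumes the passwordless root SSH from step 2.2 and that MUNGE is installed on the compute nodes; the chown/chmod step follows the MUNGE installation guide's advice that the key be readable only by the munge user. Function names and the DRY_RUN switch are illustrative.

```shell
#!/usr/bin/env bash
# Illustrative post-copy checks, run on worker; set DRY_RUN=1 to only print commands.
run() {
  if [ -n "${DRY_RUN:-}" ]; then
    echo "+ $*"
  else
    "$@"
  fi
}

check_cluster_files() {
  local host
  local remote_cmd='chown munge:munge /etc/munge/munge.key && chmod 400 /etc/munge/munge.key && /etc/init.d/munge restart && md5sum /etc/munge/munge.key /etc/slurm/slurm.conf'
  # Local checksums; every node should print the same two values.
  run md5sum /etc/munge/munge.key /etc/slurm/slurm.conf
  for host in "$@"; do
    # Fix key ownership/mode, restart munged with the new key, print remote checksums.
    run ssh "root@$host" "$remote_cmd"
  done
}

check_munge_credentials() {
  local host
  # Encode and decode a credential on this node.
  munge -n | unmunge >/dev/null || { echo "local decode failed" >&2; return 1; }
  for host in "$@"; do
    # Decoding on another node only works if both nodes share the same munge.key.
    munge -n | ssh "root@$host" unmunge >/dev/null || { echo "decode on $host failed" >&2; return 1; }
  done
}

# Usage on worker:
#   check_cluster_files worker1 worker2
#   check_munge_credentials worker1 worker2
```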

(5) Start the cluster

Start the daemons in the following order.

On the master node (worker):

slurmctld -c

slurmd -c

Then, on each of the two compute nodes, worker1 and worker2, run:

slurmd -c

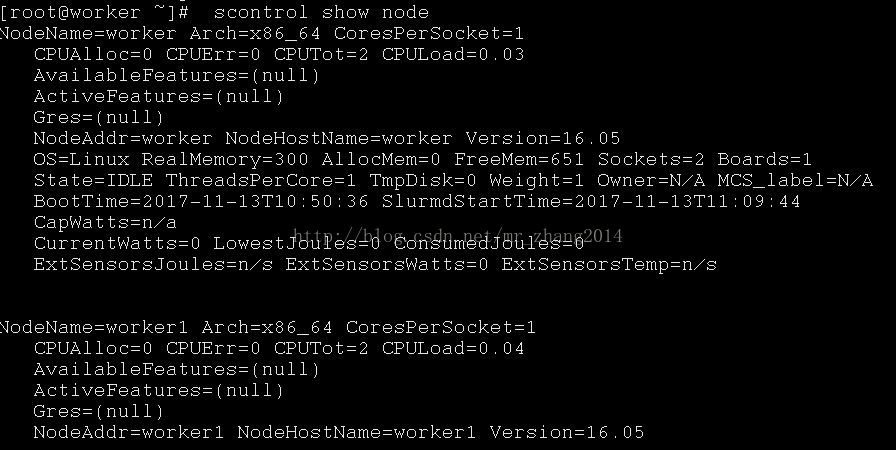

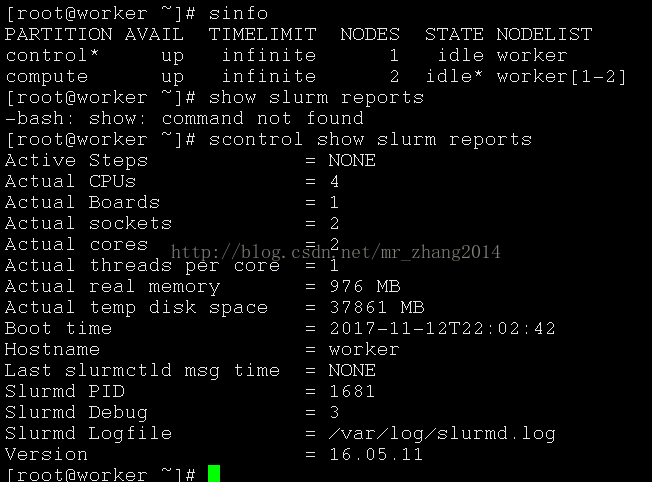
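The start order above (slurmctld on the controller first, then slurmd on every node) can also be driven from worker over the passwordless SSH set up in step 2.2. This is a sketch: the function name and DRY_RUN switch are illustrative, and -c is passed exactly as in the commands above (for slurmctld it discards previously saved state).

```shell
#!/usr/bin/env bash
# Illustrative sketch: start the daemons in the order described above.
# Set DRY_RUN=1 to print the commands instead of executing them.
run() {
  if [ -n "${DRY_RUN:-}" ]; then
    echo "+ $*"
  else
    "$@"
  fi
}

start_cluster() {
  local host
  # Controller first, following the order above.
  run slurmctld -c || return 1
  # worker also has a NodeName line, so it runs slurmd as well.
  run slurmd -c || return 1
  for host in "$@"; do
    run ssh "root@$host" slurmd -c || return 1
  done
}

# Usage on worker:
#   start_cluster worker1 worker2
```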

Check the cluster status and submit jobs.

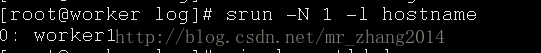
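The cluster state can be inspected with sinfo (partition and node states) and scontrol show node (per-node details, including the Reason field when a node is DOWN or DRAIN). If a node is drained because the resources it registers fall short of its NodeName line, running slurmd -C on that node prints the hardware slurmd actually detects, in slurm.conf syntax, for comparison. The wrapper functions and DRY_RUN switch below are illustrative.

```shell
#!/usr/bin/env bash
# Illustrative status checks, run on worker; set DRY_RUN=1 to only print commands.
run() {
  if [ -n "${DRY_RUN:-}" ]; then
    echo "+ $*"
  else
    "$@"
  fi
}

show_cluster_status() {
  local host
  # Node-oriented (-N), long (-l) listing of every node and its partition.
  run sinfo -N -l
  for host in "$@"; do
    # Full node record, including State= and Reason= fields.
    run scontrol show node "$host"
  done
}

compare_node_hardware() {
  local host
  for host in "$@"; do
    # Prints the hardware slurmd detects on $host as a NodeName=... line.
    run ssh "root@$host" slurmd -C
  done
}
```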

Submit a job and dispatch it to one compute node.

Launch two tasks on a single node.
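The actual job commands are only visible in the screenshots. A sketch of the two cases above uses standard srun options: -p selects the partition, -N the number of nodes, and -n the number of tasks. The partition name compute comes from slurm.conf; using hostname as the job, the function name and the DRY_RUN switch are illustrative choices.

```shell
#!/usr/bin/env bash
# Illustrative test jobs; set DRY_RUN=1 to print the commands instead of running them.
run() {
  if [ -n "${DRY_RUN:-}" ]; then
    echo "+ $*"
  else
    "$@"
  fi
}

submit_test_jobs() {
  # One task on one node of the compute partition.
  run srun -p compute -N 1 hostname || return 1
  # Two tasks on a single node (each node has CPUs=2); both print the same hostname.
  run srun -p compute -N 1 -n 2 hostname
}
```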

With that, the whole cluster is set up.