机器学习平台系列(八) - 模型在线预测服务之模型转换PMML

文章目录

- 1.Spark MLlib

-

- 1.1 原生方法

- 1.2 命令方式(尚未测试)

- 1.3 jpmml-sparkml(测试通过)

- 1.4 问题思考

- 1.5 PMML文件结构(针对LR)

- 2.Sklearn

-

- 2.1 sklearn2pmml

- 2.2 jpmml-sklearn

- 3.LightGBM(Python版本)

-

- 3.1 jpmml-lightgbm

- 4.XGBoost(Python版本)

-

- 4.1 sklearn2pmml

- 4.2 jpmml-xgboost

本文介绍如何将各类模型转换成 PMML 文件,模型在线预测服务需要支持如下几类模型:

- Spark MLlib 模型

- Sklearn 模型

- Python 版本 LightGBM

- Python 版本 XGBoost

1.Spark MLlib

1.1 原生方法

以 LogisticRegressionModel 为例,分析是否有原生方法生成 PMML。当前版本(spark-mllib_2.11:2.3.2)下存在两个版本:

- spark.mllib(支持)

extends org.apache.spark.mllib.regression.GeneralizedLinearModel with org.apache.spark.mllib.classification.ClassificationModel with scala.Serializable with org.apache.spark.mllib.util.Saveable with org.apache.spark.mllib.pmml.PMMLExportable

PMMLExportable 中有相应的 toPMML 方法,如下图所示:

- spark.ml(不支持)

extends org.apache.spark.ml.classification.ProbabilisticClassificationModel[org.apache.spark.ml.linalg.Vector, org.apache.spark.ml.classification.LogisticRegressionModel] with org.apache.spark.ml.classification.LogisticRegressionParams with org.apache.spark.ml.util.MLWritable

后续分析如何将 spark.ml 下的模型生成 PMML文件。

1.2 命令方式(尚未测试)

- 使用可执行 jar 包

1.3 jpmml-sparkml(测试通过)

- jpmml-sparkml 中有相关工程可以将 Spark MLlib 模型转换为 PMML

- pom依赖版本对应要求:spark 2.3.x -> jpmml-sparkml 1.4.x

- 核心流程为先构建 pipeline,然后结合 PMMLBuilder 生成 PMML 文件

val pipeline = new Pipeline().setStages(Array(vectorAssembler, lr))

val pipelineModel = pipeline.fit(featuresAndLabelDF)

val pmml = new PMMLBuilder(featuresAndLabelDF.schema, pipelineModel).build()

val hadoopConf = new Configuration()

val fs = FileSystem.get(hadoopConf)

val path = new Path(outPutPMMLPath)

if (fs.exists(path)) {

fs.delete(path, true)

}

val out = fs.create(path)

MetroJAXBUtil.marshal(pmml, out)

1.4 问题思考

- 如果上游输入为 Vector 类型,报错如下所示

User class threw exception: java.lang.IllegalArgumentException: Expected string, integral, double or boolean data type, got vector data type

- 解决方案如下

# 1.定义function,将Vector类型转换为Array类型:

import org.apache.spark.sql.functions._

import org.apache.spark.ml._

val vecToArray = udf( (x : linalg.Vector) => x.toArray)

val dfArr = df.withColumn("featuresArr", vecToArray(col(dp.trainFeatures)))

# 2.根据vector大小生成虚拟字段,并生成DataFrame

val elements = Array("col0","col1", "col2", "col3", "col4", "col5", "col6", "col7", "col8", "col9",

"col10", "col11", "col12", "col13", "col14", "col15", "col16")

val sqlExpr = elements.zipWithIndex.map{

case (alias, idx) => col("featuresArr").getItem(idx).as(alias) }

val sqlExprWithLabel = sqlExpr.+:(col(labelCol))

val featuresAndLabelDF = dfArr.select(sqlExprWithLabel: _*)

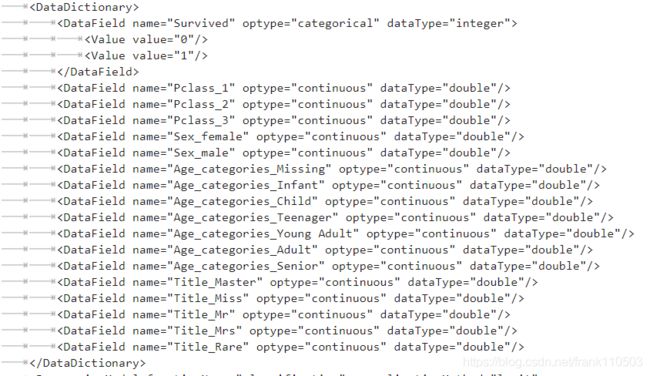

1.5 PMML文件结构(针对LR)

- Header

- DataDictionary

- DataField

- RegressionModel

- MiningSchema

- Output

- RegressionTable

2.Sklearn

2.1 sklearn2pmml

- sklearn2pmml 可以用 API 方式将 Sklearn 模型转换为 PMML

- 安装命令:pip install sklearn2pmml

- 版本对应要求:sklearn2pmml 0.51.0 -> jdk 1.8

- 核心流程:先构建 pipeline;调用 sklearn2pmml API 生成PMML文件

import os

os.environ["PATH"] = '/opt/soft/jdk/jdk1.8.0_66/bin' + os.pathsep + os.environ["PATH"]

print(os.environ["PATH"])

from sklearn2pmml.pipeline import PMMLPipeline

from sklearn2pmml import sklearn2pmml

lr = LogisticRegression(penalty='l2', tol=0.000001, C=0.01, max_iter=10, random_state=9999)

pipeline = PMMLPipeline([("classifier", lr)]);

pipeline.fit(train_X, train_Y)

sklearn2pmml(pipeline, "demo.pmml", with_repr = True)

重点关注1:PATH环境变量中如果有1.7版本的JDK,请将JDK1.8的设置放在前边

重点关注2:需要将模型(lr)封装到 PMMLPipeline 中

2.2 jpmml-sklearn

- jpmml-sklearn 中有相关工程可以将 Sklearn 模型转换为 PMML

- 核心流程1:使用 Python 训练模型,保存为 pickle

Demo1:将模型封装到 PMMLPipeline 中然后保存

lr2 = LogisticRegression(penalty='l2', tol=0.000001, C=0.01, max_iter=10, random_state=9999)

pipeline = PMMLPipeline([("classifier", lr2)])

pipeline.fit(train_X, train_Y)

from sklearn.externals import joblib

joblib.dump(pipeline, "pipeline.pkl.z", compress = 9)

Demo2:将原生模型使用 joblib 进行保存

lr3 = LogisticRegression(penalty='l2', tol=0.000001, C=0.01, max_iter=10, random_state=9999)

lr3.fit(train_X, train_Y)

local_model_path = "lr3.pkl"

joblib.dump(lr3, local_model_path)

- 核心流程2:调用 Java 命令生成两个 PMML 文件(详见github),并对两种方式生成的 PMML 进行对比

3.LightGBM(Python版本)

3.1 jpmml-lightgbm

- jpmml-lightgbm 可以用 Java 命令方式将 Python 版本的 LightGBM 模型转换为 PMML

- 核心流程1:调用 LightGBM 原生方法将模型保存为 txt 文件

from sklearn.datasets import load_boston

from lightgbm import LGBMRegressor

boston = load_boston()

lgbm = LGBMRegressor(objective = "regression")

lgbm.fit(boston.data, boston.target, feature_name = boston.feature_names.tolist())

lgbm.booster_.save_model("lightgbm.txt")

- 核心流程2:调用 Java 命令生成 PMML(详见 github)

4.XGBoost(Python版本)

4.1 sklearn2pmml

- 核心流程:先构建 pipeline;调用 sklearn2pmml API 生成PMML文件

import numpy as np

import pandas as pd

from sklearn.datasets import load_boston

boston = load_boston()

data = pd.DataFrame(boston.data)

data.columns = boston.feature_names

data['PRICE'] = boston.target

X, y = data.iloc[:,:-1],data.iloc[:,-1]

from sklearn.model_selection import train_test_split

X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.2, random_state=123)

import os

import xgboost as xgb

os.environ["PATH"] = '/opt/soft/jdk/jdk1.8.0_66/bin' + os.pathsep + os.environ["PATH"]

print(os.environ["PATH"])

from sklearn2pmml.pipeline import PMMLPipeline

from sklearn2pmml import sklearn2pmml

# 使用xgboost模型

xg_reg = xgb.XGBRegressor(objective ='reg:linear', colsample_bytree = 0.3, learning_rate = 0.1,

max_depth = 5, alpha = 10, n_estimators = 10)

pipeline = PMMLPipeline([("classifier", xg_reg)]);

pipeline.fit(X_train,y_train)

sklearn2pmml(pipeline, "xgboost_sklearn2pmml.pmml", with_repr = True)

4.2 jpmml-xgboost

- jpmml-lightgbm 可以用 Java 命令方式将 Python 版本的 XGBoost 模型转换为 PMML

- 核心流程1:自定义函数,生成所需的 fmap 文件

def ceate_feature_map(file_name,features):

outfile = open(file_name, 'w')

for i, feat in enumerate(features):

outfile.write('{0}\t{1}\tq\n'.format(i, feat))

#feature type, use i for indicator and q for quantity

outfile.close()

ceate_feature_map("xgboost.fmap", boston.feature_names)

- 核心流程2:将模型保存为模型文件

Demo1:

import numpy as np

import pandas as pd

import xgboost as xgb

from sklearn.datasets import load_boston

from sklearn.model_selection import train_test_split

boston = load_boston()

data = pd.DataFrame(boston.data)

data.columns = boston.feature_names

data['PRICE'] = boston.target

X, y = data.iloc[:,:-1],data.iloc[:,-1]

X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.2, random_state=123)

xg_reg = xgb.XGBRegressor(objective ='reg:linear', colsample_bytree = 0.3, learning_rate = 0.1,

max_depth = 5, reg_alpha = 10, n_estimators = 10)

xg_reg.fit(X_train, y_train)

xg_reg.save_model("xgboost_1.model")

Demo2:

import numpy as np

import pandas as pd

import xgboost as xgb

from sklearn.datasets import load_boston

from sklearn.model_selection import train_test_split

boston = load_boston()

data = pd.DataFrame(boston.data)

data.columns = boston.feature_names

data['PRICE'] = boston.target

X, y = data.iloc[:,:-1],data.iloc[:,-1]

X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.2, random_state=123)

data_dmatrix = xgb.DMatrix(data=X_train, label=y_train)

params = {

"objective":"reg:linear",'colsample_bytree': 0.3,'learning_rate': 0.1,

'max_depth': 5, 'alpha': 10}

xg_reg = xgb.train(params=params, dtrain=data_dmatrix, num_boost_round=10)

xg_reg.save_model("xgboost_2.model")

注意:demo1 中使用的参数为 reg_alpha,demo2 中使用的是 alpha

- 核心流程3:调用 Java 命令生成 PMML(详见 github)