qiushibaike.py:

# 导入相应的库文件

import requests

import re

# 加入请求头

headers = {

'User-Agent': 'Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/537.36 '

'(KHTML, like Gecko) Chrome/53.0.2785.143 Safari/537.36'

}

# 初始化列表,用于装入爬虫信息

info_lists = []

# 定义获取用户性别的函数

def judgment_sex(class_name):

if class_name == 'womenIcon':

return '女'

else:

return '男'

def get_info(url):

res = requests.get(url)

ids = re.findall('(.*?)

', res.text, re.S)

levels = re.findall('(.*?)', res.text, re.S)

sexes = re.findall('', res.text, re.S)

contents = re.findall('.*?(.*?)', res.text, re.S)

laughs = re.findall('(\d+)', res.text, re.S)

comments = re.findall('(\d+) 评论', res.text, re.S)

for id, level, sex, content, laugh, comment in zip(ids, levels, sexes, contents, laughs, comments):

info = {

'id': id,

'level': level,

'sex': judgment_sex(sex),

'content': content,

'laugh': laugh,

'comment': comment

}

info_lists.append(info)

# 程序主入口

if __name__ == '__main__':

urls = ['http://www.qiushibaike.com/text/page/{}/'.format(str(i)) for i in range(1, 36)]

for url in urls:

# 循环调用获取爬虫信息的函数

get_info(url)

for info_list in info_lists:

# 遍历列表,创建TXT文件

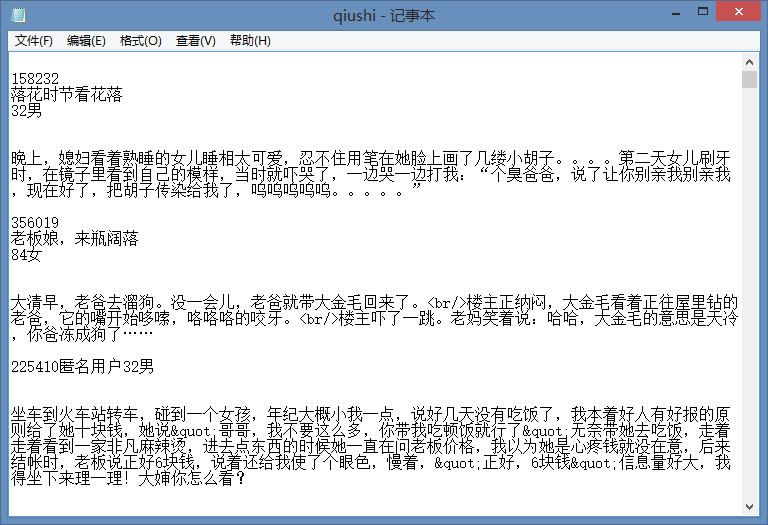

f = open('C:/Users/Administrator//Desktop/qiushi.text', 'a+')

try:

# 写入数据到TXT

f.write(info_list['id'])

f.write(info_list['level'])

f.write(info_list['sex'])

f.write(info_list['content'])

f.write(info_list['laugh'])

f.write(info_list['comment'])

f.close()

except UnicodeEncodeError:

# pass掉错误编码

pass

转载于:https://www.cnblogs.com/silverbulletcy/p/8005142.html